Nonlinear curve fitting Lev-Mar vs constrained Lev Mar

Solved! 11-26-2022 10:53 AM

The nonlinear Lev-Mar curve fit VI works, but when I change it to the constrained VI, it does not fit. The Fit simple gamma variate demo shows this behavior.

I think the constrained version will be faster and would like to be able to use it.

Help appreciated

JD

11-26-2022 11:50 AM - edited 11-26-2022 11:51 AM

Can you do a "save for previous" (2020 or lower)? Most here don't have LabVIEW 2022.

11-26-2022 12:09 PM

Here is the LabVIEW 2019 version

11-26-2022 12:13 PM - edited 11-26-2022 12:18 PM

Thanks. I was actually able to look at it on a VM with LabVIEW 2022.

The problem is that your x and y arrays have different sizes. The unconstrained fit can gloss over that, but the constrained fit can't.

Your X array has 360 elements, while your Y array has 361 elements. Once you delete one element from Y, both fits succeed! Try it!
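For readers who work in text-based code, the same check can be sketched in Python. This is only an illustration of the size mismatch described above, not the LabVIEW implementation: the helper names `trim_to_common_length` and `check_same_length` are hypothetical, the data are placeholders, and dropping the trailing element is just one possible choice.

```python
def trim_to_common_length(x, y):
    """Cut x and y to the shorter of the two lengths (drops trailing items)."""
    n = min(len(x), len(y))
    return list(x[:n]), list(y[:n])


def check_same_length(x, y):
    """Raise a clear error instead of letting a fit routine fail quietly."""
    if len(x) != len(y):
        raise ValueError(f"x has {len(x)} elements but y has {len(y)}")


# Sizes reported in this thread: 360 x values and 361 y values.
# The values themselves are placeholders, not the poster's data.
x = [float(i) for i in range(360)]
y = [0.0] * 361

x_fit, y_fit = trim_to_common_length(x, y)
check_same_length(x_fit, y_fit)  # passes: both now have 360 elements
```

Calling `check_same_length(x, y)` on the untrimmed arrays raises a `ValueError`, which is the kind of early warning that would have exposed the problem before the fit was attempted.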

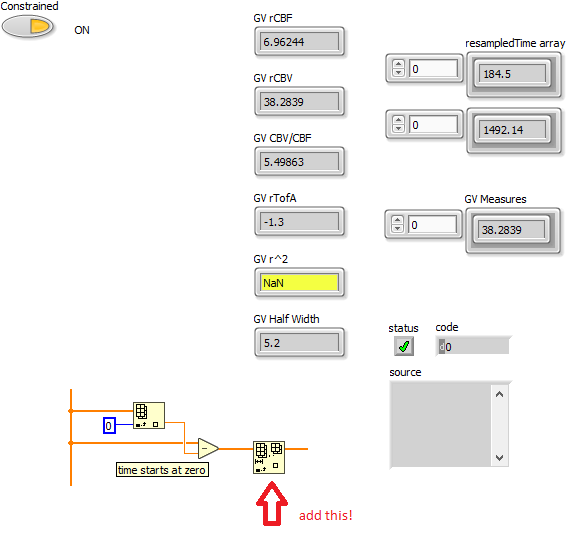

Here's what happens if I delete the last element (of course, you need to decide which element needs to go):

11-26-2022 12:16 PM

Oh, such a simple error!

Thank you very much.

JD

11-26-2022 02:21 PM - edited 11-26-2022 02:22 PM

On a side note, here's a "near literal" translation of your model into graphical code. It is about 40% faster on my rig.

(Yours could probably be streamlined a bit more too if you took some of the scalar calculations out of the loop. I have not tried, because I don't do text-based code. 😄 )
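As a rough illustration of taking scalar calculations out of the loop, here is a Python sketch. The poster's actual model code is not shown in this thread, so the gamma variate form used here, `a * (t - t0)**alpha * exp(-(t - t0) / beta)`, and all parameter names are assumptions made purely for the example.

```python
import math


def gamma_variate_naive(ts, a, t0, alpha, beta):
    """Assumed gamma variate model; 1/beta is recomputed on every iteration."""
    out = []
    for t in ts:
        inv_beta = 1.0 / beta  # loop-invariant, yet evaluated each pass
        dt = t - t0
        out.append(a * dt**alpha * math.exp(-dt * inv_beta) if dt > 0 else 0.0)
    return out


def gamma_variate_hoisted(ts, a, t0, alpha, beta):
    """Same model with the loop-invariant scalar computed once, before the loop."""
    inv_beta = 1.0 / beta
    out = []
    for t in ts:
        dt = t - t0
        out.append(a * dt**alpha * math.exp(-dt * inv_beta) if dt > 0 else 0.0)
    return out


# Hypothetical time axis and parameters, chosen only to exercise the functions.
ts = [0.5 * i for i in range(20)]
same = gamma_variate_naive(ts, 2.0, 1.0, 1.5, 3.0) == gamma_variate_hoisted(ts, 2.0, 1.0, 1.5, 3.0)
```

Both versions return identical values. Whether the hoisted one is measurably faster depends on the language and the real model, so it is worth timing on the actual code.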

11-26-2022 02:48 PM

Thank you,

That is a great help.

I had tried that too, but yours is a better implementation.

I have about 100K of these fits to do in a single brain scan so anything to speed things up helps a lot.

11-26-2022 03:01 PM

I think you really need to fit the raw sparse data instead of the derivative of splined data at a much finer (fake!) resolution. There is no need to resample to a much larger number of points that all depend directly on the original sparse data anyway. (I haven't studied what the function would look like.) I am sure it would be significantly faster and, more importantly, more honest. You cannot base your goodness of fit on 360 points if you actually only have 25!
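The goodness-of-fit point can be made concrete with a small Python sketch of the degrees of freedom (data points minus fitted parameters). The 25 and 360 come from this thread; the parameter count of 3 is an assumption for illustration only, since the thread does not state how many parameters the model has.

```python
def degrees_of_freedom(n_points, n_params):
    """Degrees of freedom of a least-squares fit: data points minus fitted parameters."""
    dof = n_points - n_params
    if dof <= 0:
        raise ValueError("need more data points than fitted parameters")
    return dof


N_PARAMS = 3       # assumption for illustration; not stated in the thread
N_MEASURED = 25    # actual measurements mentioned in the thread
N_RESAMPLED = 360  # interpolated points mentioned in the thread

dof_measured = degrees_of_freedom(N_MEASURED, N_PARAMS)
dof_resampled = degrees_of_freedom(N_RESAMPLED, N_PARAMS)
print(dof_measured, dof_resampled)  # 22 357
```

Any statistic normalized by the larger number treats interpolated points as if they were independent measurements, which they are not.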

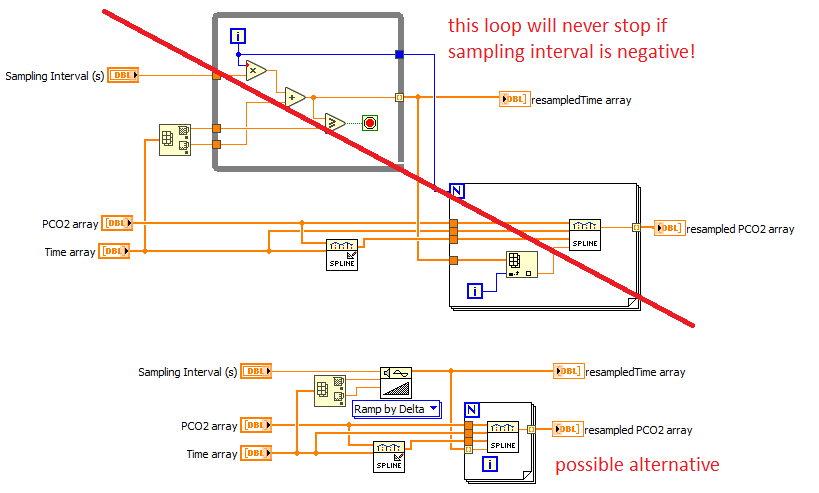

You should really eliminate all these small loops because there is always the danger that they will never stop. For example, in your "resample" code, the while loop would never stop if the user accidentally entered a negative interval. Not bulletproof!
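One way to avoid an open-ended loop, sketched in Python since the original "resample" code is not reproduced in this thread: validate the interval, compute the number of points up front, and iterate a fixed number of times. The function name `resample_times` and its parameters are hypothetical.

```python
import math


def resample_times(start, stop, interval):
    """Evenly spaced times from start to stop, using a bounded loop."""
    if interval <= 0:
        # A zero or negative step would keep a "while t <= stop" loop running forever.
        raise ValueError("interval must be positive")
    if stop < start:
        raise ValueError("stop must not be before start")
    # The point count is fixed before the loop starts, so the loop always terminates.
    n = int(math.floor((stop - start) / interval)) + 1
    return [start + i * interval for i in range(n)]


times = resample_times(0.0, 1.0, 0.25)
print(times)  # [0.0, 0.25, 0.5, 0.75, 1.0]
```

With this shape, a bad interval produces an immediate error instead of a hang.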

Also, wiring [i] to Index Array is the same as autoindexing, so there is no need to wire N.

11-26-2022 04:03 PM

Thanks,

I will try it out.

I wanted to increase my timing resolution, hence the resampling.

JD

11-27-2022 02:55 PM

@Prof_Dee wrote:

> I wanted to increase my timing resolution, hence the resampling.
>
> JD

Think about it -- by resampling, you are adding "guesses/estimates/values based on pre-set assumptions" on a fixed data set, then hoping to increase "timing resolution" based on the "real" and the "made-up/interpolated" data. If you attempted to publish this data and a reviewer who knew a bit of math and statistics saw it, you'd be in Deep Weed ...

Bob Schor