Network stream performance on crio

Solved! 10-27-2018 10:07 AM

Hello,

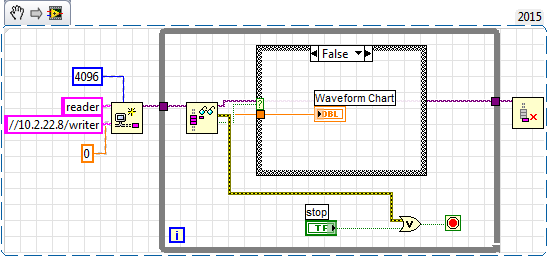

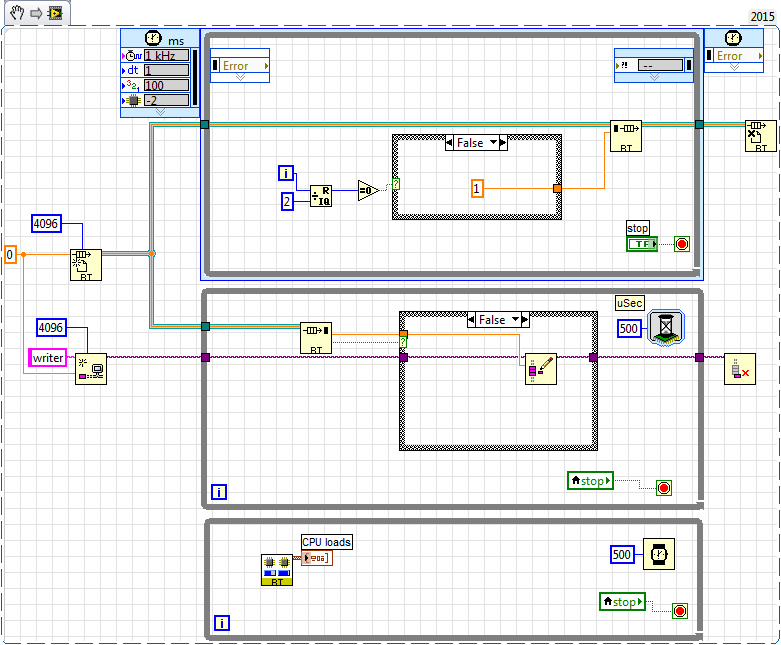

I'm evaluating network streams on a real-time target (cRIO-9035). I have a timed loop that acts as the producer and a while loop that acts as the consumer. In the producer loop I simulate an analog input sampled at 1 kHz. The consumer sends the samples to the PC over a network stream. My concern is the CPU usage of 20% for this rather small program. Is there any way to lower the CPU usage or improve my code? I'm planning to have many more channels with the Scan Engine.

10-28-2018 01:25 PM

Why are you using non-deterministic communication within a deterministic loop?

10-28-2018 04:13 PM

I'm not sure what you mean. In the timed loop I use an RT FIFO, which is deterministic. The second loop, with the network stream, is non-deterministic. I took the following link as an example.

Part 5: Sending RT Data to the Host Computer (Real-Time Module)

10-30-2018 12:25 PM

Why does the CPU usage go up to 100% if I remove the wait from the consumer loop? I thought that the consumer follows the speed of the producer?!

10-30-2018 03:58 PM

@Juko wrote:

Why does the CPU usage go up to 100% if I remove the wait from the consumer loop? I thought that the consumer follows the speed of the producer?!

RT FIFOs default to a timeout of 0, so a read returns immediately even when the FIFO is empty and the loop spins. Give the read a timeout if you want the consumer to follow the producer's speed.
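
LabVIEW code is graphical, so as a rough text analogy only, here is a minimal Python sketch of the difference between a zero-timeout read and a read that waits. It uses Python's standard `queue.Queue` as a stand-in for the RT FIFO; the function names, the 50-sample run, and the 0.1 s timeout are assumptions made for illustration and are not part of any NI API.

```python
import queue
import threading
import time


def producer(q, n_samples, period_s):
    """Push one sample per period, similar in spirit to the 1 kHz timed loop."""
    for i in range(n_samples):
        q.put(float(i))
        time.sleep(period_s)
    q.put(None)  # sentinel: tells the consumer to stop


def consume_polling(q):
    """Zero timeout: the read returns at once when empty, so the loop spins."""
    reads, samples = 0, 0
    while True:
        reads += 1
        try:
            item = q.get_nowait()
        except queue.Empty:
            continue  # nothing there yet; retry at full speed
        if item is None:
            return reads, samples
        samples += 1


def consume_blocking(q, timeout_s):
    """Read with a timeout: the thread sleeps until data arrives or time runs out."""
    reads, samples = 0, 0
    while True:
        reads += 1
        try:
            item = q.get(timeout=timeout_s)
        except queue.Empty:
            continue  # timed out; try again
        if item is None:
            return reads, samples
        samples += 1


def run(consumer, *args):
    """Start a fresh producer thread and run the given consumer against it."""
    q = queue.Queue()
    t = threading.Thread(target=producer, args=(q, 50, 0.001))
    t.start()
    result = consumer(q, *args)
    t.join()
    return result


if __name__ == "__main__":
    print("polling  (reads, samples):", run(consume_polling))
    print("blocking (reads, samples):", run(consume_blocking, 0.1))
```

Both consumers receive the same samples, but the polling version performs far more read attempts, which is the kind of wasted work that shows up as high CPU load.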

Your CPU load won't increase as much as you think when you scale up. A 1 kHz loop and a 2 kHz loop on a cRIO using several APIs to move a single double-precision floating-point value around is quite a bit of overhead. Change that to a constant 1000-element array and I bet you'll find the CPU load goes up only a small amount. It also depends on how much junk you have installed on the cRIO: for example, is the display enabled? Web services? Scan engine? It may be that your application is only a small fraction of that CPU load.

Also, are you just trying to stream all the samples, or do you have latency needs? Network streams are designed for streaming performance, not latency performance.

10-31-2018 12:08 PM

After playing around with different settings, I found that a timeout alone does not change anything. You also have to change the FIFO access mode to "blocking" instead of "polling" (see https://forums.ni.com/t5/LabVIEW/RT-FIFO-results-in-100-cpu-load/td-p/2808832).

So I set the FIFO mode to blocking, set the read timeout to 1 ms, and removed the wait in the consumer loop. The CPU usage went down by 10 percentage points.

Now I have a CPU usage of 10%, while the usage without any user program is 5%. That leaves 5% for the acquisition and the streaming, which is fine, I think.

I want to analyze step responses of a control system, so latency is not that important, but resolution is. Do you mean I could send the data in chunks, aggregating the samples in an array? Then I would have to change to a graph for the indicator.
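
To make the chunking idea concrete, here is a small Python sketch, offered as an illustration rather than as LabVIEW code. It groups single samples into fixed-size arrays before each write, so every sample is still sent (resolution is unchanged) while the number of write calls drops. The helper name `chunked` and the chunk size of 100 are assumptions chosen for the example.

```python
from typing import Iterable, Iterator, List


def chunked(samples: Iterable[float], chunk_size: int) -> Iterator[List[float]]:
    """Group single samples into lists of chunk_size; the last one may be shorter."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    chunk: List[float] = []
    for s in samples:
        chunk.append(s)
        if len(chunk) == chunk_size:
            yield chunk
            chunk = []
    if chunk:
        yield chunk  # flush the remainder so no sample is lost


if __name__ == "__main__":
    one_second = [float(i) for i in range(1000)]  # 1 kHz for 1 s
    writes = list(chunked(one_second, 100))
    print(len(writes), "writes instead of", len(one_second))
```

With these assumed numbers, one second of 1 kHz data becomes 10 array writes instead of 1000 single-value writes; the trade-off is that each sample can wait up to one chunk period before it is sent.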

10-31-2018 01:06 PM

@Juko wrote:

I want to analyze step responses of a control system, so latency is not that important, but resolution is. Do you mean I could send the data in chunks, aggregating the samples in an array? Then I would have to change to a graph for the indicator.

Network streams buffer data automatically (see https://www.ni.com/en/shop/labview/lossless-communication-with-network-streams--components--archite.... ), so if latency is important, you might want to use UDP (if some loss is OK) or TCP with Nagle's algorithm turned off (if loss is unacceptable). The stream API you use abstracts that underlying buffering scheme.
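
For readers unfamiliar with the option, turning off Nagle's algorithm means setting `TCP_NODELAY` on the socket. The sketch below shows this with Python's standard `socket` module, purely as an illustration of the socket option itself; it is not how you would configure it in LabVIEW, and the helper name `make_low_latency_socket` is made up for this example.

```python
import socket


def make_low_latency_socket() -> socket.socket:
    """Create an unconnected TCP socket with Nagle's algorithm disabled."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # TCP_NODELAY = 1 asks the stack to send small writes immediately
    # instead of holding them back to coalesce into larger segments.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock


if __name__ == "__main__":
    s = make_low_latency_socket()
    enabled = s.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) != 0
    print("TCP_NODELAY set:", enabled)
    s.close()
```

Disabling Nagle trades some throughput efficiency for lower per-message delay, which is the opposite of the trade-off a buffered stream makes.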