
Memory Allocation Questions

08-21-2019 10:46 AM


I'm running out of memory in one of my projects, so now I'm trying to understand how memory allocation in LabVIEW works and what I can do about it.

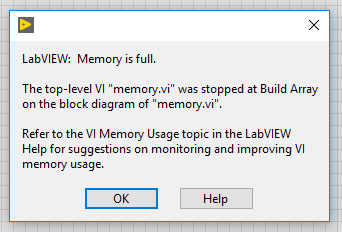

Basically in the main project I have an 2D array to which I add rows of measurement data and processed data and I'm running into the memory full notification at some point:

I'm using 32-bit LabVIEW 2017 on a 64-bit Windows machine, and this usually happens at around 2 GB of memory usage by LabVIEW, as seen in Task Manager. Therefore I was not really worried and thought I had just written bad code. However, on one of the LabVIEW help pages I found that I should be able to use 4 GB of memory in this configuration (32-bit LabVIEW 2017 on 64-bit Windows):

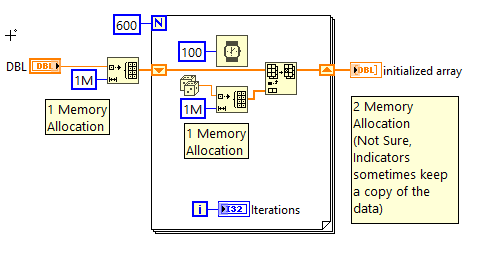

So I wrote the bit of code attached here, which basically allocates memory until it runs out.

The first thing I noticed was that I got the same memory-full message at around 1 GB of memory usage when using a 16-bit unsigned integer as the data type.

If I change the data type of the array, I get the following results:

| Data type | Bytes per element | For-loop runs | Memory usage |
|---|---|---|---|
| quad | 8 | 134 | ~1 GB |
| long | 4 | 268 | ~1 GB |
| word | 2 | 536 | ~1 GB |
| byte | 1 | 536 | ~0.5 GB |

And this is where I don't understand what is happening. Since byte and word reach the same number of runs, I assumed LabVIEW allocates at least 2 bytes per element by default, but then the memory usage doesn't fit: byte uses half the memory of word even though the number of runs is the same.
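A quick arithmetic check on the reported figures, assuming "~1 GB" means roughly 2^30 bytes and that every loop run appends the same number of elements (both are assumptions, not stated in the post): dividing memory by runs and by element size gives about one million elements per run for all four types. So quad, long and word all stop near the same byte count, while byte stops at the same run count (and therefore the same element count) as word.

```python
# Reported results from the post. "~1 GB" is taken as 2**30 bytes (an assumption),
# and each loop run is assumed to append the same number of elements.
GIB = 2**30

# (data type, bytes per element, loop runs before "memory full", approx. bytes in use)
RESULTS = [
    ("quad", 8, 134, 1.0 * GIB),
    ("long", 4, 268, 1.0 * GIB),
    ("word", 2, 536, 1.0 * GIB),
    ("byte", 1, 536, 0.5 * GIB),
]


def elements_per_run(bytes_per_element: int, runs: int, bytes_used: float) -> float:
    """Average number of array elements added per loop run."""
    return bytes_used / (runs * bytes_per_element)


if __name__ == "__main__":
    for name, size, runs, used in RESULTS:
        print(f"{name}: ~{elements_per_run(size, runs, used):,.0f} elements per run")
```

This only shows the numbers are self-consistent; it does not explain why the byte case stops where it does.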

And I'm nowhere close to the 4 GB promised in the first place, so who decides how big my arrays can actually be? And can I allocate more memory in one VI if I just keep the length of each individual array limited?

I read through the memory usage help page and some forum posts here, but I don't feel like these questions were answered anywhere. If they were, feel free to shame me, as is customary on the internet, but please provide a link as well.

Thanks a lot in advance, kiwi6


08-21-2019 11:05 AM


Check out these "LabVIEW_Memory" tagged threads.

The posts by Greg McKaskle are always "must read" posts. He is the Chief Architect of LabVIEW.

LV has its own memory management and will use memory as efficiently as it can, but it does require contiguous memory for arrays, since an array has a pointer and uses strides and such to figure out how to index arrays of multiple dimensions.

You can get to the 4 GB limit if you do not force everything into a single array.

Queue elements do not have to be contiguous.

While an array of queue references does have to be contiguous (for the references themselves), the individual queues can be scattered.
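To make the pointer-and-stride point concrete, here is a minimal Python sketch (class names are hypothetical, and this is a general illustration, not LabVIEW's actual internals): a 2D array stored as one contiguous row-major block, where element (r, c) sits at offset r × columns + c, versus rows kept as separate blocks in a queue-like container, which never needs one huge contiguous allocation.

```python
from array import array
from collections import deque


class Contiguous2D:
    """Row-major 2D array in ONE contiguous buffer, indexed from a base plus a row stride."""

    def __init__(self, rows, cols):
        self.rows, self.cols = rows, cols
        self.buf = array("d", [0.0]) * (rows * cols)  # a single block of rows*cols doubles

    def offset(self, r, c):
        # Element (r, c) lives at base + r * stride + c, where stride = number of columns.
        return r * self.cols + c

    def get(self, r, c):
        return self.buf[self.offset(r, c)]

    def set(self, r, c, value):
        self.buf[self.offset(r, c)] = value


class ChunkedRows:
    """Rows stored as separate blocks, like queue elements: no single huge allocation."""

    def __init__(self):
        self.rows = deque()

    def append_row(self, values):
        self.rows.append(array("d", values))  # each row is its own small allocation

    def get(self, r, c):
        return self.rows[r][c]
```

The contiguous layout gives cheap indexing from a single base address, but the whole block must fit into one free region of the address space; the chunked layout only needs room for one row at a time.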

Also be aware that if you are re-running your tests, you could be running into memory fragmentation, and subsequent runs may fail because no single block of memory large enough for what you ask is available.
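A toy model of that fragmentation effect, with hypothetical numbers (a 4096 MB address space with small 16 MB blocks left behind every 512 MB); the real Windows address space and LabVIEW's allocator are far more complex. Almost all of the space is free, yet no single hole can hold a 1000 MB array.

```python
def free_space_summary(address_space, allocations):
    """Return (total free, largest contiguous free block) for a toy address space.

    address_space: size of the space in arbitrary units (here MB)
    allocations:   list of non-overlapping (start, size) blocks currently in use
    """
    total_free = largest = cursor = 0
    for start, size in sorted(allocations):
        gap = start - cursor          # free hole before this block
        total_free += gap
        largest = max(largest, gap)
        cursor = start + size
    tail = address_space - cursor     # free space after the last block
    return total_free + tail, max(largest, tail)


def can_allocate_array(request, address_space, allocations):
    """An array needs ONE hole at least as large as the request."""
    _, largest = free_space_summary(address_space, allocations)
    return request <= largest


if __name__ == "__main__":
    leftovers = [(i * 512, 16) for i in range(8)]  # hypothetical leftover blocks
    total, largest = free_space_summary(4096, leftovers)
    print(f"free: {total} MB, largest hole: {largest} MB")  # free: 3968 MB, largest hole: 496 MB
    print("1000 MB array fits:", can_allocate_array(1000, 4096, leftovers))  # False
```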

Ben

08-21-2019 11:20 AM


In your example, you are constantly resizing and adding to the array; each time you do this, memory use increases, as LabVIEW needs to make a new memory buffer.

Look at the following example:

An array is preallocated and then elements are replaced. Memory should stay constant.
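The attached VI is not reproduced here. As a rough text analogue, here is a Python sketch that assumes the worst case, where every append allocates a new buffer and copies the old contents (LabVIEW may avoid some of these copies). It compares growing by concatenation with allocating once and replacing elements in place.

```python
from array import array


def build_by_appending(n_rows, row_len):
    """Grow the data one row at a time, copying everything into a new buffer each time."""
    data = array("d")
    copied = 0
    for r in range(n_rows):
        new = array("d", data)             # fresh buffer holding the old contents...
        new.extend([float(r)] * row_len)   # ...plus the new row
        copied += len(data)                # elements moved during this reallocation
        data = new                         # the old buffer becomes garbage
    return data, copied


def build_preallocated(n_rows, row_len):
    """Allocate the final size once, then replace elements in place."""
    data = array("d", [0.0]) * (n_rows * row_len)
    for r in range(n_rows):
        for c in range(row_len):
            data[r * row_len + c] = float(r)   # no reallocation, no copy
    return data
```

For n rows of length m, the appending version copies m·n(n−1)/2 elements in total, which grows quadratically with n, while the preallocated version never copies and its memory stays constant.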

mcduff

08-21-2019 11:28 AM - edited 08-21-2019 11:50 AM


Read everything Ben said!

I am actually very surprised that you can even get close to 2 GB, because if you continually append to an existing array, it will occasionally run out of the existing memory allocation and need to find a comfortably larger chunk of contiguous memory to copy everything into, which becomes less and less likely to exist. As Ben said, arrays need to be contiguous in memory. What else do you do with the data?
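A sketch of why late regrowth is so hard, assuming a generic doubling strategy (LabVIEW's actual growth policy is not described in this thread). During each regrowth the old block and the new, larger block must exist at the same time, and the new one must be contiguous.

```python
def growth_profile(final_len, initial_capacity=1, factor=2):
    """Simulate a buffer that grows by `factor` whenever it is full.

    Returns (final capacity, total elements copied, peak elements held at once),
    where the peak counts the old and new blocks that coexist during a copy.
    """
    capacity, copied, peak = initial_capacity, 0, initial_capacity
    while capacity < final_len:
        new_capacity = capacity * factor
        copied += capacity                          # the full old block is copied over
        peak = max(peak, capacity + new_capacity)   # old + new block alive together
        capacity = new_capacity
    return capacity, copied, peak


if __name__ == "__main__":
    print(growth_profile(1000))  # (1024, 1023, 1536)
```

Under this model, holding 1000 elements momentarily needs room for 1536, with the new 1024-element block required to be one contiguous piece. Scale that to hundreds of MB in a 32-bit process and the last regrowth is the one most likely to fail.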

In the case of this particular attached example (you even attached it twice, using twice the server resources!), with constants wired to all size inputs, the compiler might actually be smart enough to calculate and allocate the final size once at the start of the run, but I would not count on it. (Why don't you use a concatenating tunnel?)

Now your "Request Deallocation" actually makes things worse, because with every run the allocation game needs to start from scratch. Without that function, the contiguous final allocation from the previous run might still be around.

Still, in addition to the raw size of the array, you'll have at least two extra copies of the data in memory: the data in the indicator and the data in the indicator's transfer buffer. Then the entire LabVIEW development system (panel, compiler, diagram, palettes, etc.) also occupies memory, of course. You don't have a contiguous 4 GB for one data structure.
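A back-of-the-envelope version of that footprint, counting only the copies named above (the raw array, the indicator's data and its transfer buffer) and ignoring the development environment itself. The 600 MB array size is a hypothetical example, and because there are "at least" two extra copies, the result is a lower bound.

```python
def data_footprint_mb(array_mb, extra_copies=2):
    """Memory for one array plus the extra copies held for its front-panel indicator."""
    return array_mb * (1 + extra_copies)


if __name__ == "__main__":
    print(data_footprint_mb(600))  # 1800
```

So a 600 MB array alone already accounts for about 1.8 GB before LabVIEW itself is counted.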

Solutions:

- Be more reasonable with memory use. Stay well within comfortable boundaries. Why do you need these data sizes? Is there a better way?

- Switch to 64-bit LabVIEW (even there you'll run into limitations if you try to shuffle gigantic, growing data structures, at least in terms of performance). You still always need to be reasonable!

08-22-2019 03:48 AM


Not the core issue with the code, but: After seeing AQ's E-CLA presentation, I learned that the use-case for "request deallocation" is so extreme that it should never be used. How it ended up in the palette is a mystery. The context help misguides the user into thinking that it is a good thing to sprinkle the code with this node.

08-22-2019 07:52 AM


@thols wrote:

> Not the core issue with the code, but: After seeing AQ's E-CLA presentation, I learned that the use-case for "request deallocation" is so extreme that it should never be used. How it ended up in the palette is a mystery. The context help misguides the user into thinking that it is a good thing to sprinkle the code with this node.

To see the effects of "Request Deallocation" in reverse, all we have to do is:

- Open Task Manager.
- View >> Show Kernel Times.
- Select the Performance tab.
- Then open an application that takes a bit of time to open, like Excel or Word.

The performance graph will show just how much of the delay in opening is due to kernel time.

What "kernel time" tells us is how much of the CPU is being used to tinker with the hardware to do the OS-related work needed to perform the "malloc" operations (allocating memory). Tinkering with the virtual address translation is a tricky and delicate process that cannot tolerate being interrupted or distracted. To prevent distraction AND to allow access to the virtual address translation hardware, the OS transitions to "kernel mode", where page faults etc. are not allowed.

It is only after all the preparations have been completed that the OS will exit kernel mode and "let the cats loose to play".

Releasing memory is the same process but in reverse.

I started serious development back with BridgeVIEW 2.0 (an industrial version of LabVIEW that was contemporaneous with LV 4.0), and at that time "Request Deall..." actually did something (I believe so, since I was not savvy enough to do any testing with it myself). But its devastating effects were shut down soon thereafter.

The effect of "Request Deallo..." can only be seen if we spin up top-level VIs that are not part of the address space of the current VI context. It is only when the process context goes idle that "Request Deallo..." actually does anything.

LV has a wonderful memory manager running behind the scenes that makes memory available where needed, reassigns chunks if appropriate, and is downright genius code! Invoking "Request Deallo..." while an application is running is not far off from trying to rotate your tires while driving. It can be done, but it sure slows things down.

Enough of me rambling for now,

Ben

08-22-2019 09:43 AM


First of all, thank you for your replies and for helping me understand what I was doing wrong.

The main thing I did not understand was that arrays need to be contiguous in memory, but it makes sense.

As to why I am using this setup, the answer is legacy and code reuse. I was given this project, which at its core was probably made using LV6, and by now approx. 10 people of varying degrees of expertise have had their way with the code (of whom I'm not the best but probably also not the worst, and none was a real developer). It is a behemoth that is hard to understand, and I have neither the time nor the resources to do it again. So we will tinker until it breaks and can't be repaired, as is customary in the trade.

Also, when I asked about switching to 64-bit, the answer was that it would not work anymore; they have tried.

Unfortunately, the new path I'm working on produces a lot more data than the previous paths of data evaluation, which is why I ran into the problems in the first place.

The constant resizing of the array is due to my using a bit of code from a colleague that appends the data to the array but also keeps track of the position where it adds it, so I can retrieve the data I need by using an identifier (basically a string). I don't need to keep track of where in the array the data is; I just tell the SubVI I want "distance", for example.

So for this project I will rethink the way the data is stored and will also downsample the data considerably. Most of the evaluation and data manipulation can be done on a coarse version of the measurement data; once I know the location of the hundred or so interesting data points in the sea of millions of data points, I can go back to the original data set and extract the "fine" values I actually need.
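A minimal sketch of that coarse-then-fine idea in Python, assuming the "interesting" point is a single maximum and that plain decimation (keeping every k-th sample) is good enough; the function name is hypothetical, and a real evaluation would use its own criteria. Features narrower than the decimation step can be missed, so a min/max decimation or a low-pass filter before decimating may be safer in practice.

```python
def coarse_to_fine_peak(signal, factor):
    """Locate the largest sample by searching a decimated copy first, then refining.

    Plain decimation (keep every `factor`-th sample) is assumed here; features
    narrower than `factor` samples can be missed by it.
    """
    coarse = signal[::factor]
    i_coarse = max(range(len(coarse)), key=coarse.__getitem__)

    # Map the coarse hit back to the original data and search one coarse step either side.
    lo = max(0, (i_coarse - 1) * factor)
    hi = min(len(signal), (i_coarse + 1) * factor + 1)
    window = signal[lo:hi]
    i_fine = lo + max(range(len(window)), key=window.__getitem__)
    return i_fine, signal[i_fine]


if __name__ == "__main__":
    # Synthetic example: 10,000 samples with a single broad peak at index 4321.
    signal = [-abs(i - 4321) for i in range(10_000)]
    print(coarse_to_fine_peak(signal, 100))  # (4321, 0)
```

Here only 100 coarse samples and about 200 fine samples are examined instead of all 10,000.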

As for attaching it twice, I'm sorry, but it looked like it didn't go through the first time I attached it, and when the post was online I saw it was there twice; apparently there is nothing I can do about it once it is up. I looked for a way to remove it.

As for Request Deallocation, is it called every time a loop finishes? It was my understanding that it is only called once the VI has finished its work. I saw it being used in the code I was working on and started using it too.

Thanks for your points on Request Deallocation. I will think about how to get rid of it in the existing code, or at least in the parts I'm using. I will also try to get a better understanding of how it works and what actually happens when I call it.

@Ben wrote:

> @thols wrote:
>
> > Not the core issue with the code, but: After seeing AQ's E-CLA presentation, I learned that the use-case for "request deallocation" is so extreme that it should never be used. How it ended up in the palette is a mystery. The context help misguides the user into thinking that it is a good thing to sprinkle the code with this node.
>
> To see the effects of "Request Deallocation" in reverse, all we have to do is:
>
> - Open Task Manager.
> - View >> Show Kernel Times.
> - Select the Performance tab.

I found that on Windows 10 you need to go to the Performance tab first, select the CPU graph, and right-click on the graph -> Show kernel times.

08-22-2019 10:29 AM


@Kiwi6 wrote:

> First of all thank you for your replies and you helping me understand what I was doing wrong.
>
> ... I can retrieve the data I need by using an identifier (basically a string). I don't need to keep track of where in the array the data I need is, it just tell the SubVI I want "distance" for example.
>
> ...

Look at Variant Attributes

They can be used as a fast look-up by name.
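A rough Python analogue of the two approaches discussed in this thread. The class names are hypothetical, and the Python dict only stands in for a name-keyed lookup like Variant attributes; it is not how LabVIEW implements them. The colleague's scheme appends everything into one growing buffer and remembers each name's offset, while a keyed store gives every named data set its own allocation, so no single contiguous block has to hold everything.

```python
from array import array


class AppendIndexStore:
    """One growing flat buffer plus a name -> (offset, length) index."""

    def __init__(self):
        self.data = array("d")
        self.index = {}

    def add(self, name, values):
        self.index[name] = (len(self.data), len(values))
        self.data.extend(values)   # may force the whole buffer to be reallocated and copied

    def get(self, name):
        start, length = self.index[name]
        return self.data[start:start + length]


class KeyedStore:
    """Name -> separate array, in the spirit of a lookup table keyed by name."""

    def __init__(self):
        self.by_name = {}

    def add(self, name, values):
        self.by_name[name] = array("d", values)   # independent allocation per name

    def get(self, name):
        return self.by_name[name]
```

Both give "give me `distance`" retrieval by name; only the first one ties the size of every data set to one ever-growing contiguous block.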

Ben