
Lexicographic image processing

04-03-2014 09:35 AM


I want to process the array of an image lexicographically (in the order you read text: left to right, then top to bottom). Normal arithmetic functions (division, multiplication, etc.) operate on the entire array at once. Instead, I want to push the top-left pixel through my algorithm, then the one to its right, then the one to the right of that, up to the last pixel in the row, and then continue with the leftmost pixel one row below the first one.

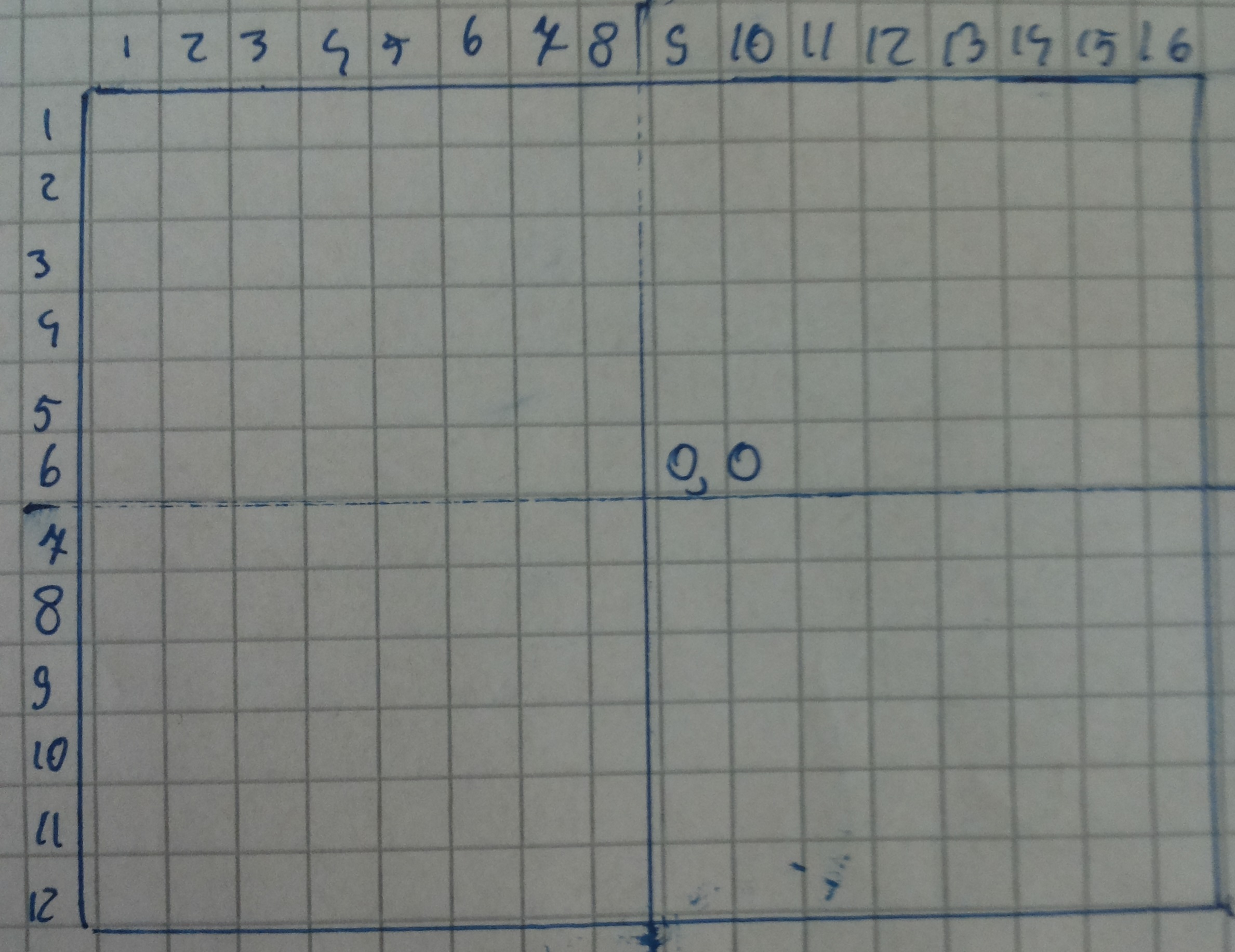

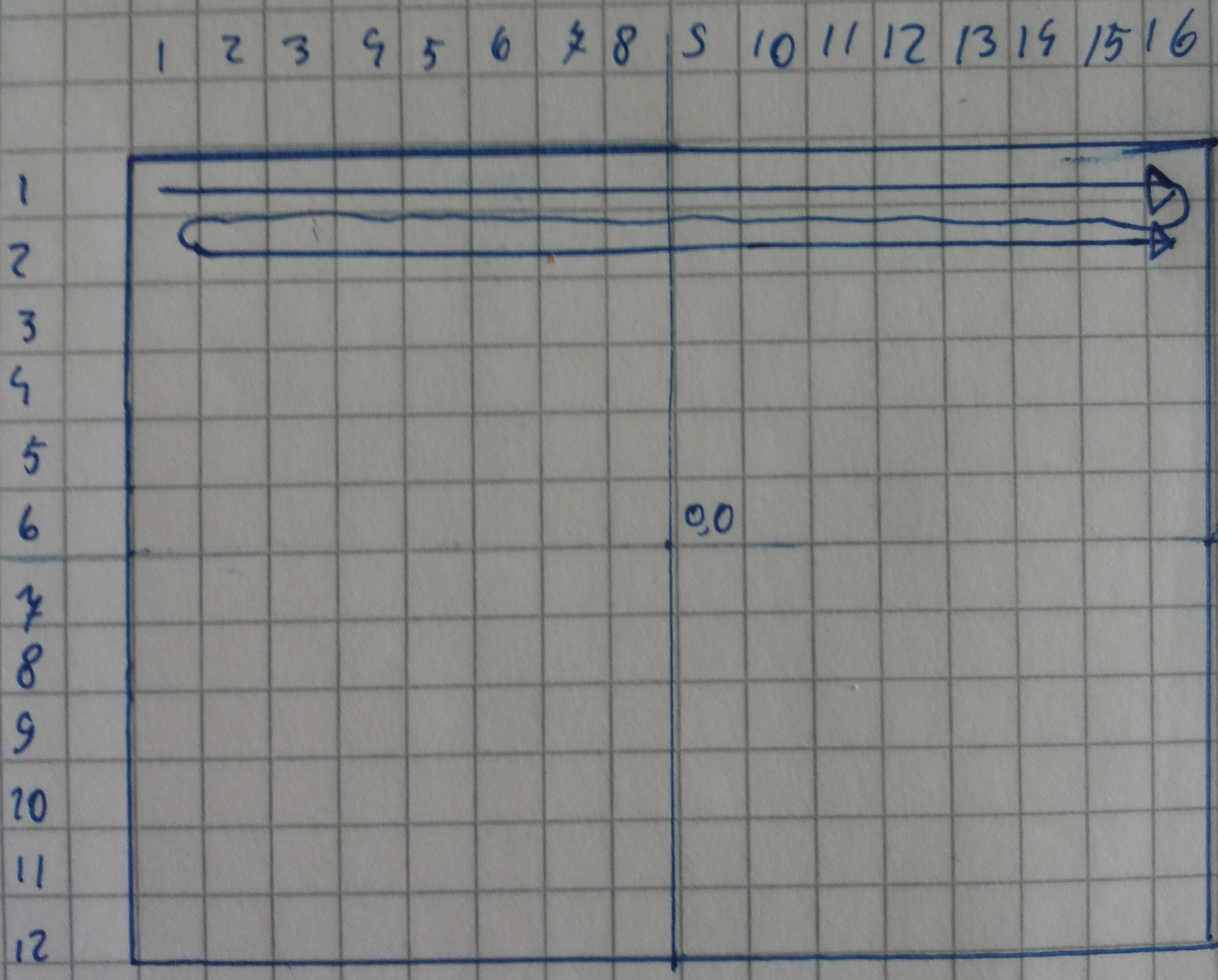

To illustrate, imagine an image of 12 X 16 pixels:

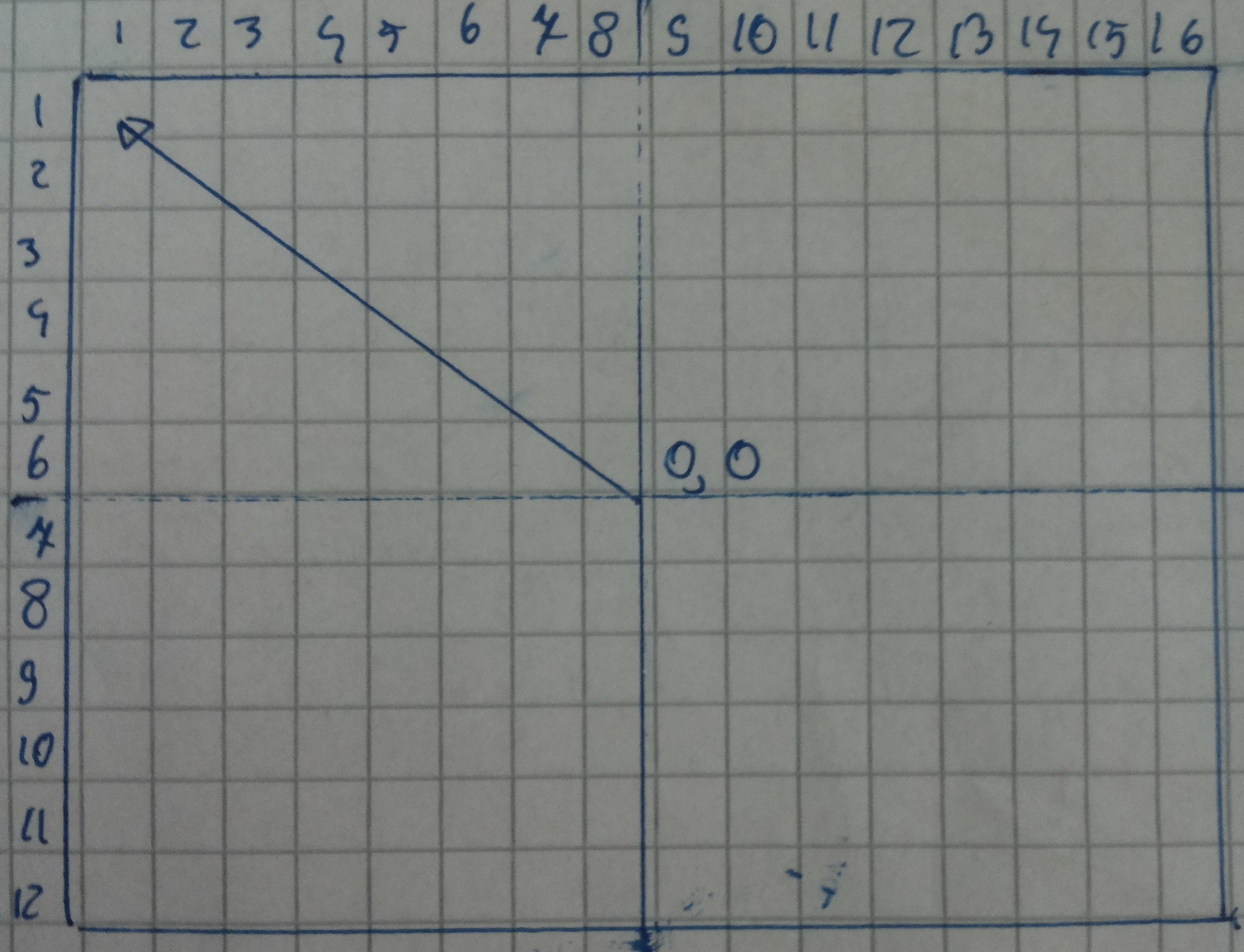

I want to calculate what value each pixel is supposed to have. I do this by comparing the actual value with the value I want. The value a pixel needs to have depends on how far it is from the origin, which is in the center:

The distance from the origin to the actual pixel is then:

The length of this 'vector' is calculated by splitting it into a horizontal and a vertical part:

The length is the square root of the x-coordinate squared plus the y-coordinate squared, which is basically the Pythagorean theorem. I know that, taken as-is, this is not the real length, because the pixel coordinates (for example x = 1 and y = 1) are counted from the top-left corner rather than from the center. So first I subtract 8.5 from the x-value and 6.5 from the y-value before going any further.
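As a worked example of this step for the 16 x 12 image above (x = 1 to 16 across, y = 1 to 12 down), the distance of the top-left pixel (1, 1) from the center is:

$$
r(x, y) = \sqrt{(x - 8.5)^2 + (y - 6.5)^2},
\qquad
r(1, 1) = \sqrt{(-7.5)^2 + (-5.5)^2} = \sqrt{56.25 + 30.25} = \sqrt{86.5} \approx 9.30
$$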

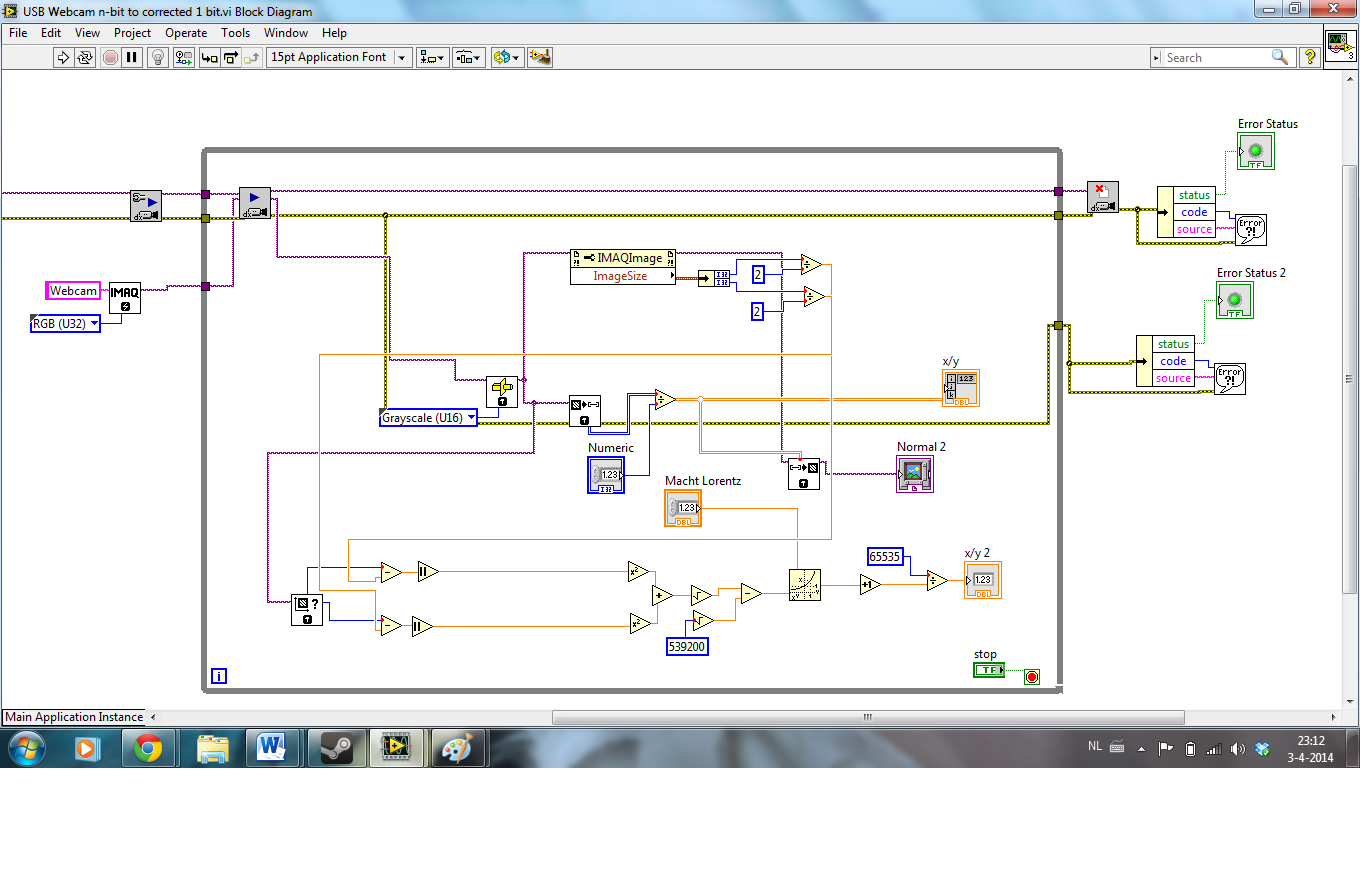

But I want to process the pixels in the order y = 1, x = 1, 2, 3, 4, 5, 6, 7, 8, etc., and then y = 2, x = 1, 2, 3, 4, 5, 6, 7, etc. That is exactly what lexicographic processing is. But how can I get this to work? In my VI you can see that I am currently only outputting the overall size of the image (or video frame), but I want to process the pixels one by one. I looked at some LabVIEW files that do something similar, like the 'Check Pixel Value' VI, but there you have to scroll through the data manually. I want it to happen automatically.

So how can I process pixels of an image lexicographically?


04-03-2014 10:18 AM - edited 04-03-2014 10:20 AM


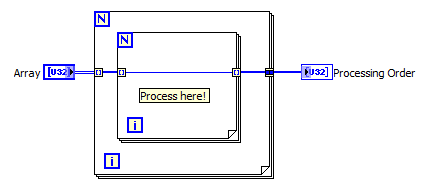

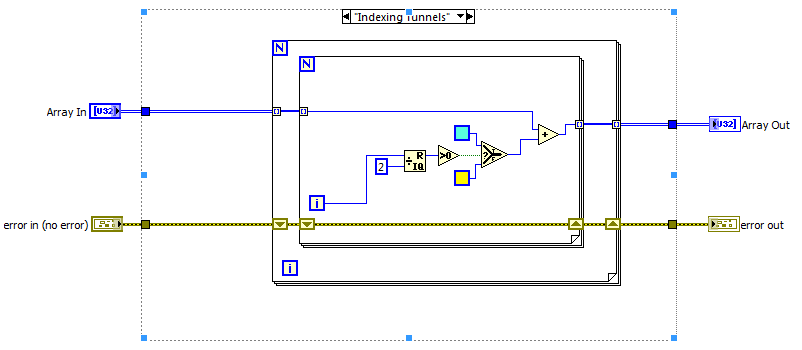

Forgive me if I'm missing the obvious, but I've read your post a few times: isn't the solution as simple as this?

When you feed a 2D array into nested For Loops with auto-indexing, it is iterated in left-to-right reading order. You can then either index the results back out to build another 2D array of calculated values, or do what I've done and set the second (outer) output tunnel to concatenate, which puts the items into a 1D array.

I can't open your VIs because I don't have LV2013, but I'm assuming you have a 2D array of something and want to process each item in lexicographic order?
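For readers more at home in a text language, here is a minimal Python sketch of the nested-loop idea from this reply (LabVIEW itself is graphical, so this is only an analogy). The function name `raster_order` is hypothetical. The outer loop walks the rows and the inner loop walks the columns, so elements come out in lexicographic order; collecting them into one flat list plays the role of the concatenated 1D output.

```python
def raster_order(image):
    """Return (row, col, value) for every element of a 2D list, row by row.

    This is the order two nested, auto-indexed For Loops produce:
    the outer loop walks the rows, the inner loop walks the columns.
    Indices are 0-based, like LabVIEW's loop counter i.
    """
    visited = []
    for row_index, row in enumerate(image):        # outer loop: top to bottom
        for col_index, value in enumerate(row):    # inner loop: left to right
            visited.append((row_index, col_index, value))
    return visited


if __name__ == "__main__":
    image = [[1, 2, 3],
             [4, 5, 6]]
    for r, c, v in raster_order(image):
        print(r, c, v)
```

Any per-pixel calculation would go where `visited.append(...)` is; the visiting order itself does not need any extra bookkeeping.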

04-03-2014 04:19 PM


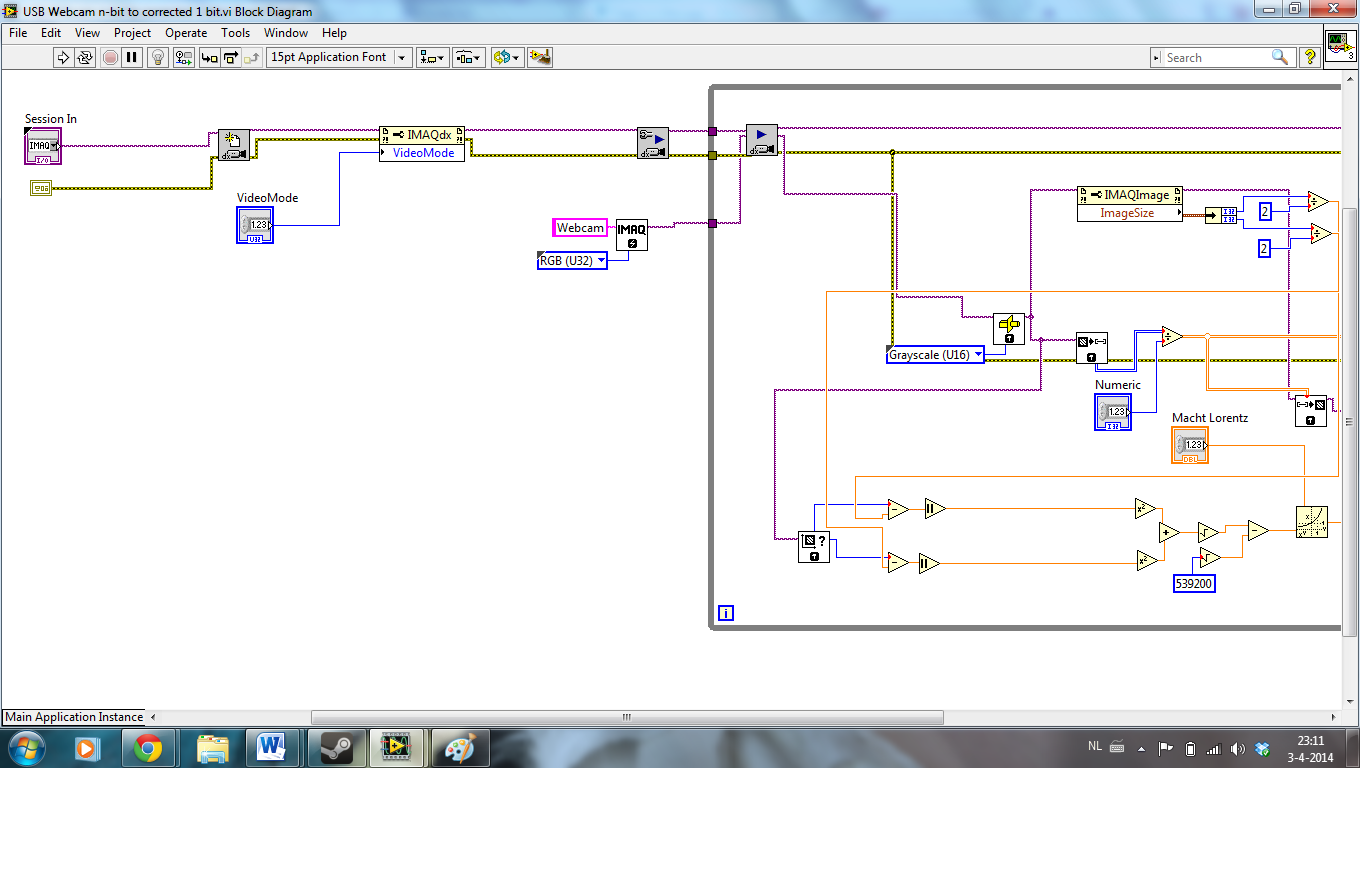

Yes, I do have a 2D array. I use the IMAQ Vision VIs to get my webcam running. In that sense the solution is rather simple, yes.

But here is the catch: comparing with the Lorentzian function returns a value, which is rounded to either 0 or 1 because it will be the pattern for a Digital Micromirror Device (DMD). This rounding introduces an error. To make up for it, I need to correct it using neighbouring pixels (if a pixel is surrounded by 8 pixels, the diagonal pixels are primarily used for this). I tried something similar in which I split the image into rows and processed those, but that of course removes the option to correct the introduced error.

The 'easiest' way would be to split the image into 1024 x 768 individual pixels, wire each of them up to the formula and connect each result to its corresponding pixel, but that would take several months, so that's not an option.

Could you be more concrete about how you would do this? I tried something similar, but I thought that, because of the error correction, this approach wouldn't work, since it doesn't allow 'inter-row interaction'.

To give you some background:

I want to use a DMD to create a laser beam with a top-hat wavefront, meaning its intensity is equal at every point of the wavefront. For this we connect a CCD camera to our computer to measure the intensity distribution. This image is then sent to our LabVIEW program, which processes it and turns it into a pattern for the DMD. The DMD, or Digital Micromirror Device, is made up of thousands of tiny mirrors, each of which can be tilted to either +12 or -12 degrees.

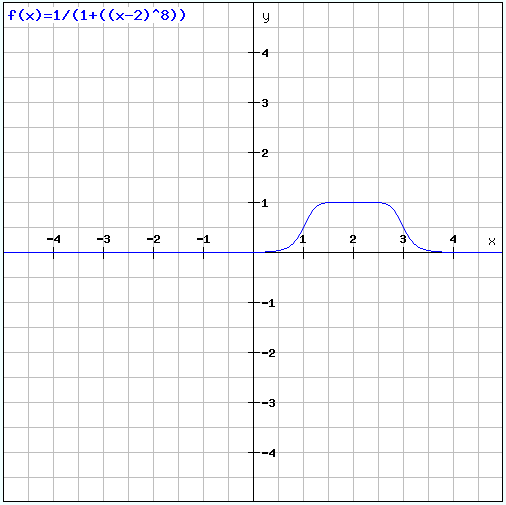

We look at the measured profile and compare it to a profile simulated with a Super Lorentzian function.

A Super Lorentzian function looks like this:

$$
f(X) = \frac{A}{B + \left(\frac{X - C}{X_0}\right)^{n}} + D
$$

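- A/B sets the top value (the height of the peak above D, reached at X = C)
- C is the horizontal translation
- X0 sets the width of the function
- n is the power (the order)
- D is the vertical translation (offset)

Even values of n keep the term ((X - C)/X0)^n non-negative, which gives a symmetric profile whose top becomes flatter and whose edges become steeper as n increases, i.e. a top-hat shape (n = 2 is the ordinary Lorentzian). In our case we want to simulate an eighth-order Super Lorentzian, so n = 8.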
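A minimal Python sketch of the Super Lorentzian above, to make the role of each parameter concrete. The function name and the default values (A = B = 1, C = 0, X0 = 1, D = 0) are illustrative assumptions, not the project's actual settings. Using the radial distance r from the image center in place of X - C (with C = 0) to get a 2D target is also an assumption, based on the distance calculation described earlier in the thread.

```python
def super_lorentzian(x, a=1.0, b=1.0, c=0.0, x0=1.0, n=8, d=0.0):
    """Evaluate A / (B + ((x - C) / X0) ** n) + D.

    The peak value A/B + D is reached at x = C. Use an even n so the
    power term is never negative and the profile stays symmetric.
    """
    return a / (b + ((x - c) / x0) ** n) + d


if __name__ == "__main__":
    for x in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(f"x = {x:3.1f}  f(x) = {super_lorentzian(x):.4f}")
```

With n = 8 the value stays close to the peak for |x - C| well below X0, drops to half the peak height at |x - C| = X0 (when B = 1), and falls off steeply after that, which is the top-hat behaviour described above.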

The CCD camera produces 12-bit images. LabVIEW stores these as 16-bit images, which can hold 2^16 = 65,536 grayscale levels, although 12-bit data only uses 2^12 = 4,096 of them.

I don't have the CCD camera yet so for now I use the webcam of my laptop and turn that into a 16-bit image.

Here are some screenshots of my program so far:

And a Super Lorentzian (SL) function looks like this:

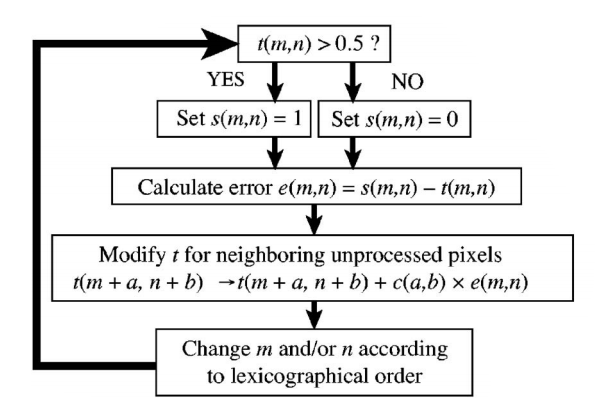

The error correction we use comes from an error-diffusion algorithm developed by Dorrer and Zuegel, two German physicists.

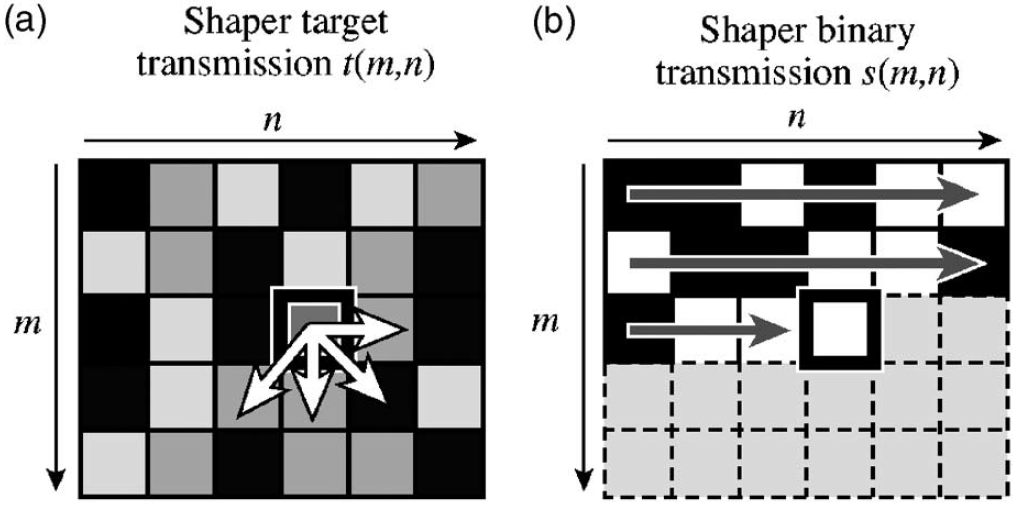

A screenshot of their paper concerning binary spatial light modulation:

But my main concern is this error correction. Doesn't normal array processing rule it out? And if not, how can I do it?

04-04-2014 04:08 AM


When you talk about error inducing, are you talking about LabVIEW errors? You'll need to decide how to handle those: either ignore them or stop execution.

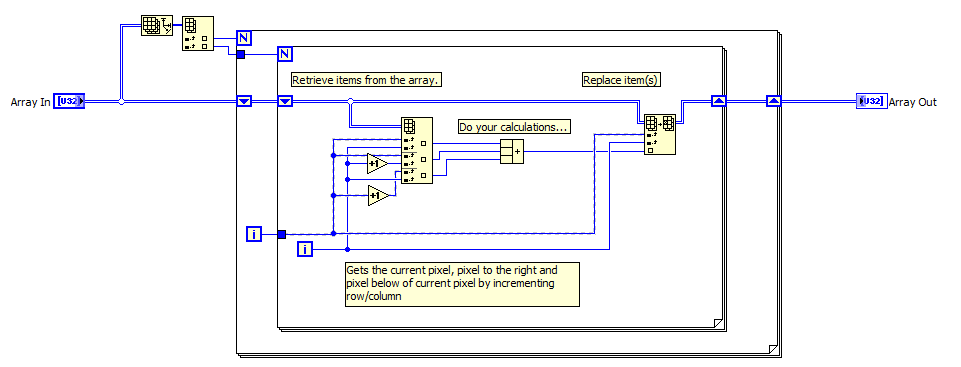

If you need to do further array manipulation, you can use functions from the Array palette such as Array Subset and Replace Array Subset. These also let you pull out the surrounding elements by incrementing or decrementing the row/column index. You'll of course need to check for edge conditions (when there is no surrounding pixel).
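As a text-language analogue of indexing around the current element, here is a minimal Python sketch (the function name `neighbours` is hypothetical). It reads the up-to-8 pixels around a position by adding -1, 0 or +1 to the row and column index, and it performs the edge check by skipping positions that fall outside the image.

```python
def neighbours(image, row, col):
    """Return {(dr, dc): value} for the up-to-8 pixels around (row, col).

    dr and dc are the row and column offsets (-1, 0 or +1). Positions
    outside the image are skipped, which is the edge-condition check.
    """
    rows, cols = len(image), len(image[0])
    found = {}
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue  # skip the centre pixel itself
            r, c = row + dr, col + dc
            if 0 <= r < rows and 0 <= c < cols:
                found[(dr, dc)] = image[r][c]
    return found


if __name__ == "__main__":
    img = [[1, 2, 3],
           [4, 5, 6],
           [7, 8, 9]]
    print(neighbours(img, 0, 0))  # corner pixel: only 3 neighbours exist
```

Returning the offsets together with the values makes it easy to pick out particular neighbours (for example only the diagonal ones) afterwards.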

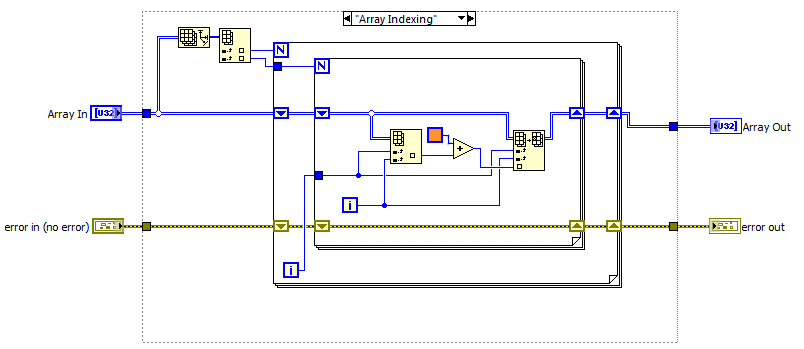

I've attached an example (in LV2012) that shows how you could wire the errors through the For Loops, plus a couple of ways of manipulating items in an array. (For performance, you could replace the Index Array / Replace Array Subset pair with an In Place Element structure.)

Screenshots from the VI:

04-04-2014 04:51 AM


Thank you for your quick reply. It is very helpful.

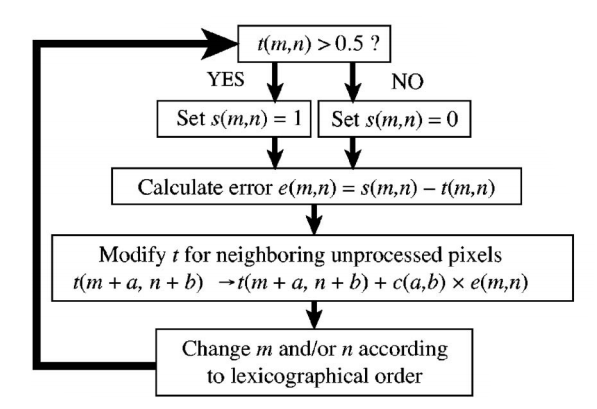

What I meant by error inducing is this:

We use a Digital Micromirror Device. That is a type of spatial light modulator with tiny mirrors, each of which can be either 'on' or 'off', so it's a binary spatial light modulator.

What we do is calculate what value each pixel is supposed to have and what value it currently has.

In the picture, this is determined by the reflection of the pixel (or, in reality, the mirror). This reflection function is called t(m,n).

However, since the mirrors can only be on or off, we must make a decision: is the reflection more than 0.5? Then we turn the mirror on. Is it equal to or less than 0.5? Then we turn it off.

This actual value that we give to the mirror (on or off, 1 or 0) is what we send to the DMD, and it is called s(m,n).

But you can probably see that this alone is not really the right way to go. For example, we may need a reflection of 0.6 for a certain pixel, not 1.0. This means we are somewhat off from the value the mirror is supposed to have. The amount we are 'off' is an error, and we must make up for it by taking the error of one pixel into account when we calculate the next one.

This is done by adding the error of the previous pixel(s) to the normal calculation of the value. The error, e(m,n), is the difference between the actual value s(m,n) and the value it is supposed to have, t(m,n).
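In symbols, the rounding and the error described above read as follows (once errors from previous pixels are added in, t(m,n) here stands for that corrected value). The 0.6 example gives s = 1 and e = 1 - 0.6 = 0.4:

$$
s(m,n) =
\begin{cases}
1, & t(m,n) > 0.5 \\
0, & t(m,n) \le 0.5
\end{cases}
\qquad
e(m,n) = s(m,n) - t(m,n)
$$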

This process is shown here:

The constant C depends on the position of the neighbouring pixel, relative to the current one, that you are calculating the error for.
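A minimal Python sketch of error diffusion in plain lexicographic order, to show that a nested row/column loop can still pass each pixel's error on to its neighbours. The Dorrer and Zuegel coefficients C only appear in the screenshot, so this sketch substitutes the classic Floyd-Steinberg weights (7/16 right, 3/16 below-left, 5/16 below, 1/16 below-right) as an assumption; the function name `error_diffuse` is hypothetical. The trick is to keep a working copy of the target array that is updated in place as the loop runs; in LabVIEW that would roughly correspond to carrying the 2D array in a shift register rather than only auto-indexing it.

```python
def error_diffuse(target, threshold=0.5):
    """Binarise a 2D list of target reflectances t(m, n) in [0, 1].

    Pixels are visited in lexicographic (row-major) order. Each pixel's
    error e = s - t' is subtracted, with Floyd-Steinberg weights, from the
    neighbours that have not been visited yet. (Assumed weights; the
    Dorrer-Zuegel coefficients C may differ.)
    """
    rows, cols = len(target), len(target[0])
    work = [list(row) for row in target]   # t'(m, n): target plus diffused error
    s = [[0] * cols for _ in range(rows)]  # binary mirror pattern s(m, n)
    # (row offset, column offset, weight) for not-yet-visited neighbours
    weights = ((0, 1, 7 / 16), (1, -1, 3 / 16), (1, 0, 5 / 16), (1, 1, 1 / 16))
    for m in range(rows):                  # outer loop: rows
        for n in range(cols):              # inner loop: columns
            s[m][n] = 1 if work[m][n] > threshold else 0
            e = s[m][n] - work[m][n]       # e(m, n) = s(m, n) - t'(m, n)
            for dm, dn, w in weights:
                mm, nn = m + dm, n + dn
                if 0 <= mm < rows and 0 <= nn < cols:  # drop error at edges
                    work[mm][nn] -= w * e
    return s


if __name__ == "__main__":
    pattern = error_diffuse([[0.6] * 64 for _ in range(64)])
    on_fraction = sum(map(sum, pattern)) / 64 ** 2
    print(f"fraction of mirrors on: {on_fraction:.3f}")
```

For a uniform target of 0.6, roughly 60 % of the mirrors end up on, instead of all of them as plain rounding would give. Diffusing the error to pixels that have not been visited yet is equivalent to collecting, at each pixel, the errors of the already-visited neighbours, so the same loop structure works for either formulation.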

About Lexicographical processing

That is not that big of a deal so far, since lexicographical processing is, as you already said, the natural way of processing arrays if you put your loop inside a loop and turn on indexing.

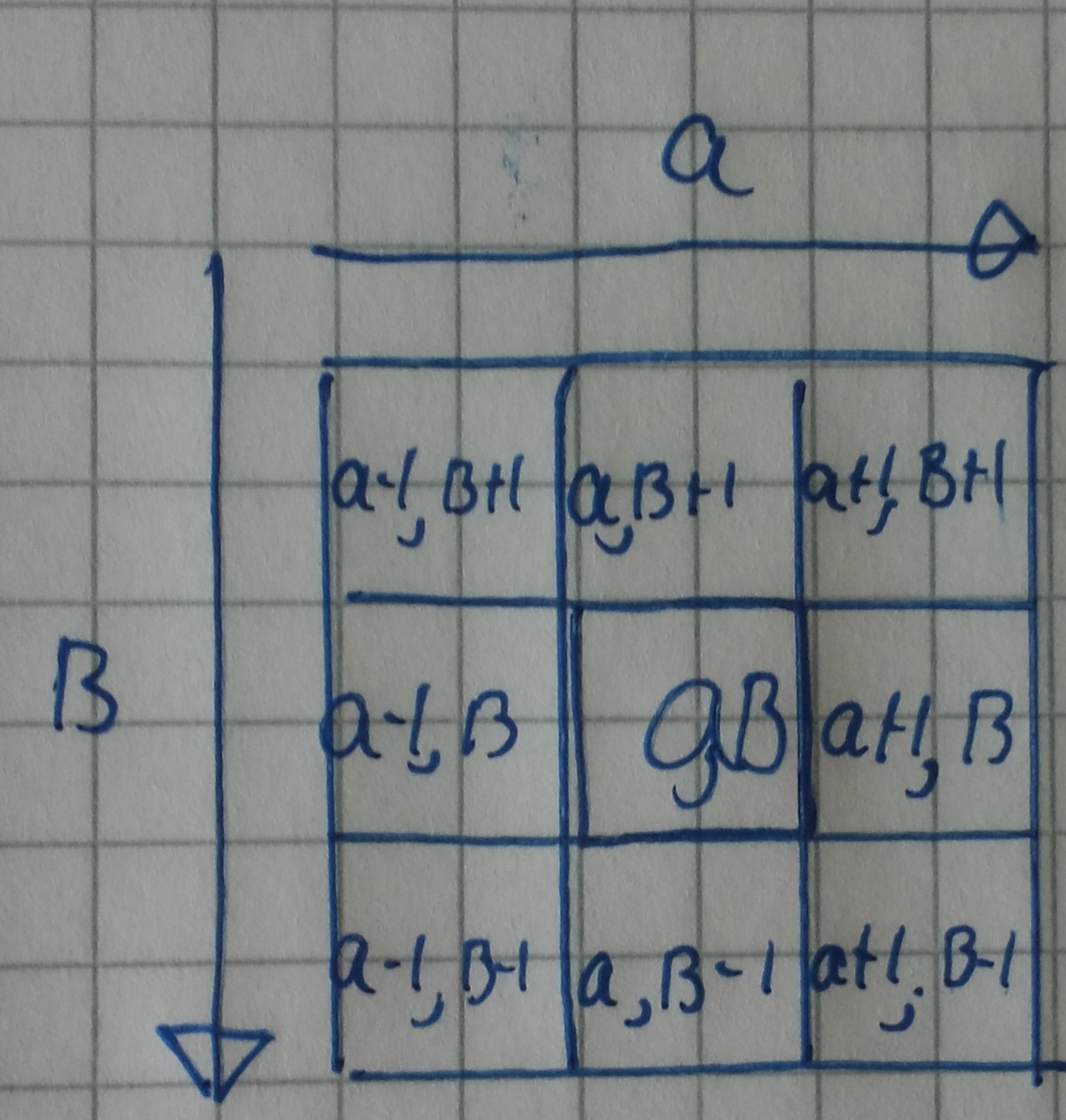

But as the algorithm shows, I need to be able to modify the pixels bordering the current one before they are actually processed.

To illustrate, normal processing does this:

But the control I want is shown here:

I need to be able to control these neighbouring pixels too. To be more specific, I need to control (a-1,b-1), (a,b-1), (a+1,b-1) and (a+1,b).

This is also shown in this screenshot from a paper by Dorrer and Zuegel:

The arrows represent which pixels we want to use to compensate for the error introduced by rounding to 0 or 1.

Doesn't this 'loop inside a loop with indexing' remove the possibility of passing the error on to these pixels? That information is then outside the loop, not inside it.

04-04-2014 05:57 AM


OK, this is quite difficult for me because I know nothing about your application, but I can help with the LabVIEW side. To help me help you, try to pinpoint exactly what it is you are struggling to do in LabVIEW.

From what I understand you want to iterate through all of the pixels, but to do calculations based on the pixels around the current pixel.

You can do that by indexing as many items as you want from out of the array and adjusting the row/column indexes accordingly. I've attached an updated VI that demonstrates this.

04-04-2014 08:46 AM


This helps me a lot, thanks! I will try to work out my project in more detail over the weekend, so you will probably get another reply from me next Monday 😉

04-07-2014 04:35 AM


I promised to give you an update after the weekend, but I haven't made any progress so far. I will come back with questions for you, though, so don't worry about that!

04-07-2014 08:08 AM


Okay, I tried a couple of things, all of which failed utterly.

There are two issues:

- The distance calculation
- Error diffusion

The latter is the more difficult one, so let's focus on the former first.

As shown in my beautiful drawings, I want to calculate the distance of each pixel to the center, of course in lexicographic order. Since you have shown me that LabVIEW's standard way of processing arrays is lexicographic (and I must add that I am very grateful for the insight you have given me), that is no longer an issue.

For the 'distance to center' calculation, I want to try the following:

Since I know it's going to calculate from left to right and starting in the top left corner and I know the resolution it will need, why not use that information to 'count down' from the resolution?

By that I mean the following:

I know the resolution is 1024 x 768. Half of 1024 is 512, but because the width is even there is no single center pixel: with pixels numbered 1 to 1024, the middle lies halfway between pixels 512 and 513, at 512.5.

The same goes for 768: 768 / 2 = 384, so the middle is at 384.5.

The center of the image is therefore at 512.5 x 384.5. If you disagree with this way of finding the middle of the image, please say so; I can't quite shake my doubts, even though I have the proof laid out in front of me in the form of a drawing.

The pixel in the top-left corner (pixel 1, 1) is then 512.5 - 1 = 511.5 away from the center horizontally and 384.5 - 1 = 383.5 vertically, so its distance is:

square root of (511.5² + 383.5²) = square root of 408704.5 ≈ 639.3000078. I then use that in my formula (the Super Lorentzian) to calculate what value the pixel should have. The same distance of course also applies to the bottom-right, bottom-left and top-right corners, since they are equally far from the center.

Then I perform the same calculation for the pixel next to it: its horizontal and vertical distance components are also known.

The first pixel is at a distance of 511.5 horizontal and 383.5 vertical.

The second pixel is at 510.5 horizontal and 383.5 vertical.

The third is at 509.5 horizontal and 383.5 vertical.

The fourth is at 508.5 horizontal and 383.5 vertical.

Etc.

So I am 'counting down' from the known coordinates of the top-left pixel all the way to -511.5 horizontal and 383.5 vertical.

When that point (-511.5 x 383.5) is reached, I go down one row, so I then use 511.5 horizontal and 382.5 vertical. That way I count down from 511.5 x 383.5 (top-left corner) all the way to -511.5 horizontal and -383.5 vertical (bottom-right corner).

This is, however, more of a 'brute force' method. Is this the most efficient way to do it? Or can LabVIEW give me the values directly, without me having to presume their location (which is basically what I am doing right now)?
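A minimal Python sketch of an alternative to counting down by hand: derive each pixel's offset directly from its loop index, as offset = index - center. The generator name `centre_offsets` is hypothetical, and it uses 1-based pixel numbers to match the drawings. LabVIEW's iteration terminal i starts at 0, so with i the equivalent would be i - (width - 1) / 2, which gives the same offsets. For a 1024 x 768 image the top-left pixel comes out at offsets -511.5 and -383.5, with a distance of about 639.30 from the center.

```python
import math


def centre_offsets(width, height):
    """Yield (x, y, dx, dy, r) for every pixel, in lexicographic order.

    x and y are 1-based pixel numbers. dx and dy are the offsets from the
    image centre ((width + 1) / 2, (height + 1) / 2), and r is the distance
    from the centre (Pythagoras, via math.hypot).
    """
    cx = (width + 1) / 2
    cy = (height + 1) / 2
    for y in range(1, height + 1):        # outer loop: rows, top to bottom
        for x in range(1, width + 1):     # inner loop: columns, left to right
            dx, dy = x - cx, y - cy
            yield x, y, dx, dy, math.hypot(dx, dy)


if __name__ == "__main__":
    x, y, dx, dy, r = next(centre_offsets(1024, 768))
    print(x, y, dx, dy, round(r, 4))
```

Because the offsets are computed from the indices, nothing has to be presumed about where a pixel sits, and the same code works unchanged for the 16 x 12 example (where the center is at 8.5 x 6.5).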

04-07-2014 08:55 AM


Hey there! I made a short video in which I explain how I want to calculate the 'length' of the vector, and I've attached it to this message. I recorded it with my cellphone, so sorry for the shakiness.

So instead of reading my previous lengthy reply, you can also watch the video.

However, my phone couldn't record the whole thing, so it cuts off partway through my explanation. I will record and upload the next part in a moment.