LabVIEW libtiff implementation

01-09-2015 03:08 AM - edited 01-09-2015 03:10 AM

Hi Peter,

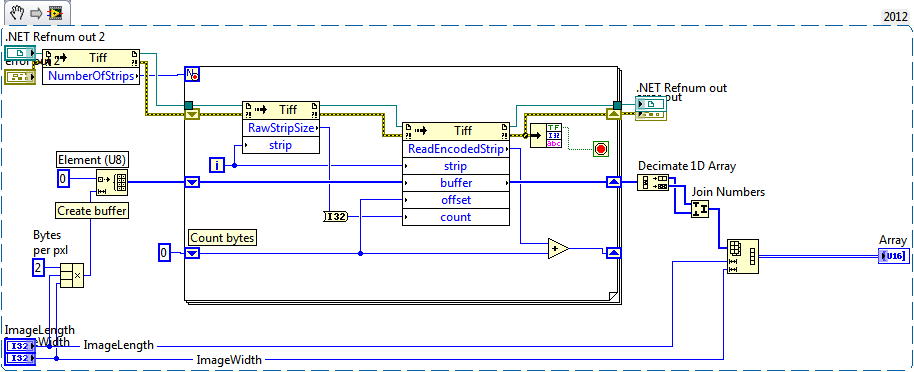

apparently one needs to access the value of the .NET refnum via a property node before transforming it into a variant. See attached snippet.

Best,

stran

PS: And you should close all refs. Apparently this is not always done automatically when working with external libraries.

01-09-2015 03:48 PM

Thank you for your help, stran.

I am attaching the converted VI along with the original from the libtiff2 library. One problem I ran into is that the ReadEncodedStrip function only provides a U8 buffer, while the original in the libtiff2 library seems to adjust to the data type. I suspect that either there is another function for U16 and U32 values, or a function that allows setting the data type, but I was unable to find either. Any hints?

Thank you.

01-20-2015 04:57 AM

Hi Peter,

it looks like it did not take care of that itself.

Here you go:

Best,

stran

01-20-2015 01:28 PM - edited 01-20-2015 01:43 PM

Thank you so much, stran.

Since I have LabVIEW 2011, I cannot open it, though. I recreated the code from your attached block diagram image. It works, but I noticed that it executes slowly, taking 54 seconds to load a 2560x2160 TIFF file.

I uploaded an example tiff file of the kind I am trying to open to https://www.dropbox.com/s/pjhts95npvpvnzf/New_Channel_0__B_003_r_0001_c_0002_t_0000_z_0004.tif?dl=0, since it is too large to send as an attachment.

Is there any more efficient way to load this? Thank you so much for your help.

Regards,

Peter

01-20-2015 01:50 PM

This won't solve the whole performance problem, but try "swap bytes" instead of decimating and joining. It's probably faster and more efficient.

01-20-2015 02:05 PM - edited 01-20-2015 02:28 PM

Thanks, Nathand. Swapping bytes reduces the execution time only from 54 s to 53 s, and the image comes out corrupted (it looks similar to a solarized image). The bottleneck is the ReadEncodedStrip node, which takes 18 ms to execute for each of the 2560 strips. Decimating and joining the array adds 253 ms to the total execution time, so it is negligible in comparison.

(The 32-bit libtiff version takes 119ms to load the same image.)
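As a rough sanity check of these figures, here is a small Python sketch of the arithmetic. The variable names are only illustrative; the inputs are the numbers quoted in this post (2560 strips, 18 ms per strip, 253 ms for the conversion, 54 s total, 119 ms for the 32-bit libtiff version).

```python
# Back-of-the-envelope breakdown of the measured load time.
strips = 2560            # number of strips read, as reported above
ms_per_strip = 18        # time per ReadEncodedStrip call, in milliseconds
convert_ms = 253         # time spent decimating and joining the array
total_s = 54             # total measured load time, in seconds
native_ms = 119          # load time of the 32-bit libtiff version

read_s = strips * ms_per_strip / 1000      # time spent in ReadEncodedStrip
read_share = read_s / total_s              # fraction of the total load time
convert_share = (convert_ms / 1000) / total_s
slowdown = total_s * 1000 / native_ms      # .NET path vs. 32-bit libtiff

print(f"ReadEncodedStrip: {read_s:.2f} s ({read_share:.0%} of total)")
print(f"Conversion:       {convert_ms / 1000:.3f} s ({convert_share:.1%} of total)")
print(f"Slowdown vs. 32-bit libtiff: about {slowdown:.0f}x")
```

The per-strip calls alone account for roughly 46 of the 54 seconds, so any speedup in the byte conversion can only touch the remaining fraction.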

Best,

Peter

01-20-2015 03:05 PM

@pbuerki wrote:

Thanks, Nathand. Swapping bytes reduces execution time from 54 to 53s, and the image is corrupted (looking similar to a solarized image).

Oops! Sorry, yes. That was sloppy of me, didn't look carefully enough at what's happening. You would need to Type Cast the array of U8 to an array of U16, then swap bytes... but at that point, it would be better to convert the array of U8 to a string (this is essentially free, shouldn't require reallocating memory), then unflatten from string into an array of U16 with the endianness set properly. Not sure if that would be any faster, but it's easy to try.
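To make the idea concrete outside of LabVIEW, here is a minimal Python sketch of reinterpreting a raw byte buffer as 16-bit unsigned values with an explicit byte order. It is only an illustration of the concept, not the LabVIEW or LibTiff.Net code; the function name `bytes_to_u16` and the sample bytes are made up for the example.

```python
import struct


def bytes_to_u16(buf: bytes, little_endian: bool = True) -> list[int]:
    """Reinterpret a byte buffer as unsigned 16-bit integers.

    Each pair of bytes becomes one value; the byte order decides which
    byte of the pair is the most significant one.
    """
    if len(buf) % 2:
        raise ValueError("buffer length must be a multiple of 2")
    count = len(buf) // 2
    fmt = ("<" if little_endian else ">") + f"{count}H"
    return list(struct.unpack(fmt, buf))


# Hypothetical 4-byte strip holding two 16-bit pixels.
strip = bytes([0x34, 0x12, 0xFF, 0x00])
print(bytes_to_u16(strip, little_endian=True))   # [4660, 255]
print(bytes_to_u16(strip, little_endian=False))  # [13330, 65280]
```

The same four bytes give very different pixel values depending on the byte order, which is one way an image can end up looking wrong even though every byte was read correctly.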

I'm curious if a pure LabVIEW solution like the one I posted earlier is any faster, but I don't have time to investigate right now; maybe this evening. I don't know how efficient LabVIEW is at handing large data structures back and forth to .NET code.

01-20-2015 04:36 PM - edited 01-20-2015 04:44 PM

Hello again, Nathand.

I tried flattening the U8 array to string and unflatten to U16 array, but this produced:

Error 116 occurred at Unflatten From String in Read Tiff File (Bitmiracle libtiff.net) Fixed.vi

Possible reason(s):

LabVIEW: Unflatten or byte stream read operation failed due to corrupt, unexpected, or truncated data.

Typecasting the U8 array to string and unflattening to U16 array produced the same result. As I said, the conversion is currently not the bottleneck.

Sorry, I was unable to open your earlier code since I need it in LV2011. When I looked at your block diagram picture, I decided to continue pursuing the .NET solution first. But now, if you send me the code in LV2011, I am ready to give it a try and convert it.

Thanks,

Peter

01-20-2015 04:48 PM

@pbuerki wrote:

Sorry, I was unable to open your earlier code since I need it in LV2011. When I looked at your block diagram picture, I decided to continue pursuing the .NET solution first. But now, if you send me the code in LV2011, I am ready to give it a try and convert it.

I'll try to do that later this evening; I don't have access to LabVIEW 2013 to do the conversion right now but I will later.

@pbuerki wrote:

I tried flattening the U8 array to string and unflatten to U16 array, but this produced:

Error 116 occurred at Unflatten From String in Read Tiff File (Bitmiracle libtiff.net) Fixed.vi

Possible reason(s):

LabVIEW: Unflatten or byte stream read operation failed due to corrupt, unexpected, or truncated data.

Typecasting the U8 array to string and unflattening to U16 array produced the same result. As I said, the conversion is currently not the bottleneck.

Did you set the Unflatten input for "data contains array or string size?" to False? If not, you'd almost definitely get that error. Otherwise I'd have to see what you're doing to understand the problem, but it sounds like that's not worth pursuing right now.
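For anyone wondering why that input matters: as far as I know, flattened LabVIEW arrays start with a 32-bit element count, so with the input left at True the first four bytes of raw pixel data get read as a size. The Python sketch below is a simplified model of that behavior, not LabVIEW's actual implementation; `unflatten_u16` and the sample bytes are hypothetical.

```python
import struct


def unflatten_u16(data: bytes, has_size_prefix: bool) -> list[int]:
    """Decode big-endian U16 values from a byte string (simplified model).

    If has_size_prefix is True, the first 4 bytes are read as a signed
    32-bit element count, and decoding fails when the remaining data is
    too short for that count.
    """
    if has_size_prefix:
        if len(data) < 4:
            raise ValueError("truncated data: missing size header")
        (count,) = struct.unpack(">i", data[:4])
        payload = data[4:]
        if count < 0 or count * 2 > len(payload):
            raise ValueError("corrupt, unexpected, or truncated data")
        payload = payload[: count * 2]
    else:
        if len(data) % 2:
            raise ValueError("buffer length must be a multiple of 2")
        payload = data
        count = len(payload) // 2
    return list(struct.unpack(f">{count}H", payload))


# Raw pixel bytes with no size header in front (made-up values).
raw = bytes([0x12, 0x34, 0x56, 0x78, 0x9A, 0xBC])

print(unflatten_u16(raw, has_size_prefix=False))  # [4660, 22136, 39612]

try:
    unflatten_u16(raw, has_size_prefix=True)
except ValueError as err:
    # The first 4 bytes decode to a count of 305419896 elements,
    # far more than the 2 bytes that remain.
    print("failed:", err)
```

In this model the failure comes purely from treating pixel bytes as a length header, which matches the kind of "corrupt, unexpected, or truncated data" message reported above.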

01-20-2015 05:45 PM

Yep. The "data contains array or string size?" input was still TRUE. The error went away, but the image still looks corrupted. I will wait until you find time to convert your VI. Thank you so much.

Peter