Labview 2021 'Replace Array' Data Issues

Solved! 09-24-2021 12:27 PM

Good afternoon!

I'm part of a group that's currently working on pump-probe spectroscopy, and to that end we're using LabVIEW to collect data from a lock-in amplifier. It essentially gives us a 1D array of double values, which we collect as the 'y' values.

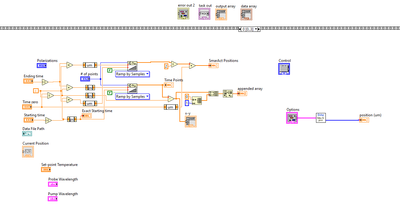

Our issue currently is that these values should be connected with time positions; we're using another piece of equipment, a Smaract stage, that moves in increments of microns, which we have converted to picoseconds to get the time. We take 130 data points for this general lab, with the collected data averaged within that loop 384 times, to go over our entire spectral range. Our problem is that the Intensity Graph, labeled 'Intensity Graph 2', is displaying our values incorrectly. I'm not sure exactly how, but my assumption is that the indexing only allows the values to be displayed through half of the data, and it seems that it is assuming our 'x' data is the time domain we want to get in terms of picoseconds. I'll attach images below from the block diagram, to help visualize.
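The micron-to-picosecond conversion mentioned above can be written as one explicit function, which also avoids attaching units to wires. This is only a sketch in Python, not the poster's LabVIEW code: the name `stage_um_to_delay_ps`, the `zero_um` offset, and especially the default `passes=2` (a retroreflector in which the beam travels the stage displacement twice) are assumptions, not details given in the thread.

```python
# Speed of light in vacuum in m/s (exact by SI definition).
C_M_PER_S = 299_792_458


def stage_um_to_delay_ps(position_um, zero_um=0.0, passes=2):
    """Convert a stage position in microns to an optical delay in picoseconds.

    passes=2 is an ASSUMPTION (double pass through a retroreflector);
    use passes=1 if the beam travels the displacement only once.
    zero_um is a hypothetical stage position that corresponds to zero delay.
    """
    path_m = passes * (position_um - zero_um) * 1e-6  # extra optical path in meters
    return path_m / C_M_PER_S * 1e12                  # seconds -> picoseconds


# A 150 um move with a double pass adds 300 um of path, about 1 ps of delay.
print(stage_um_to_delay_ps(150.0))
```

Keeping the conversion in one place makes it easy to check against a known value: 299.792458 µm of single-pass path corresponds to 1 ps.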

Essentially, we want our 'x' values on the Intensity graph to consist of the 'Time Points' double data, while we want our 'y' values to be the values obtained from the DAQmx acquisition loop, averaged over a set of 384 and then displayed there, while the 'z' values in the amplitude should be the variation of that y-data. Is there something wrong with our indexing? Or are we putting data in the incorrect places? For reference, we obtain and collect our Time Points in Case 0 of our structure, as shown below:

Sorry if this message is too vague, I will try to be as specific as possible but I am not intimately familiar with the graphical capabilities or indexing rules of LabVIEW. Any help is greatly appreciated!

09-24-2021 12:34 PM - edited 09-24-2021 12:43 PM

You seem to have a more generic problem with array operations, so unless this is something that worked fine in the past but now fails in 2021, you should leave the version information out of the subject, or else people will think you discovered a bug introduced with LabVIEW 2021.

We cannot debug pictures, so attaching a simplified VI containing some simulated data would bring us to a solution much faster. Tell us what result you expect.

(What we can tell from the pictures is that your code is quite poor. Stacked sequences, disconnected controls, and an abundance of local variables are all strong indicators of fragile code. Do you really need EXT for parts of the data?)

09-24-2021 12:55 PM

As I mentioned, I'm not that experienced in LabVIEW, and I have focused on workable code rather than a holistically foolproof approach. It is true that the problem is likely not version specific; apologies on that end. I'm unsure what specifically is needed for simulated data, but I am attaching the VI along with our most recent set of half-complete data, which should hopefully allow for viewing of the program.

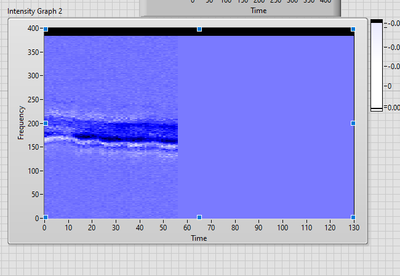

For our results, we currently view a graph of the form below:

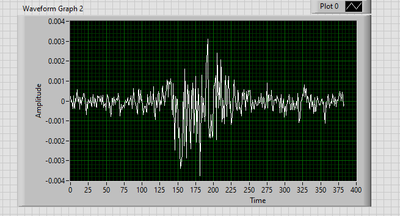

This has an x-axis of the data points specifically, while the y-axis refers to the number of times we run through the averaged y-data. We'd like to have an intensity graph where the x-axis reflects the time points we are shifting to (in order of -1 ps to 3 ps over 130 steps) while the y-axis bars will display the averaged y-data in a form akin to the graph below, with the trend then continuing for each subsequent time position we shift to.

I am unsure what you mean by 'ext for parts of the data', if that is just general externally saved data or some specific structural component.

09-24-2021 01:11 PM - edited 09-24-2021 01:14 PM

Hi wesdeeg,

@wesdeeg wrote:

I am unsure what you mean by 'ext for parts of the data', if that is just general externally saved data or some specific structural component.

"EXT" is the abbreviation for a datatype: "extended floating point". There are other datatypes like DBL, SGL, I32, …

Example:

You use a "Starting time" control set to EXT datatype. Then you apply a "ps" unit to this data and display it in a DBL indicator "Exact starting time": why do you think the DBL value is more exact than the EXT value before?

Did you notice the coercion dots? (Do you know what they indicate?)

Did you notice that many/most functions in LabVIEW don't support EXT inputs, like the Ramp function in the same image?

You are using units quite a lot: while this might be handy it often gives other problems and is not supported by LabVIEW very well. Usually you will get the recommendation not to use those units…

My best example (for a long time) was this: calculating the square of "1m" still gives you "1m" instead of "1m²". (This was sadly true for a decade or so, LabVIEW learned to calculate this correctly only in the latest versions…)

09-24-2021 01:42 PM

Ah, that does make sense. I assumed the coercion dots were for abbreviating data, though I didn't know the cause if that is the case. Would you recommend then that I try to bind all data to specific non-floating datatypes?

You are correct that we use a lot of units; we want to prepare our measurements for the values we intend to measure, so it is a little frustrating. Is it fine then to do the proper mathematical conversion and manually assign the units, or is there another method of accounting for this?

09-24-2021 03:17 PM

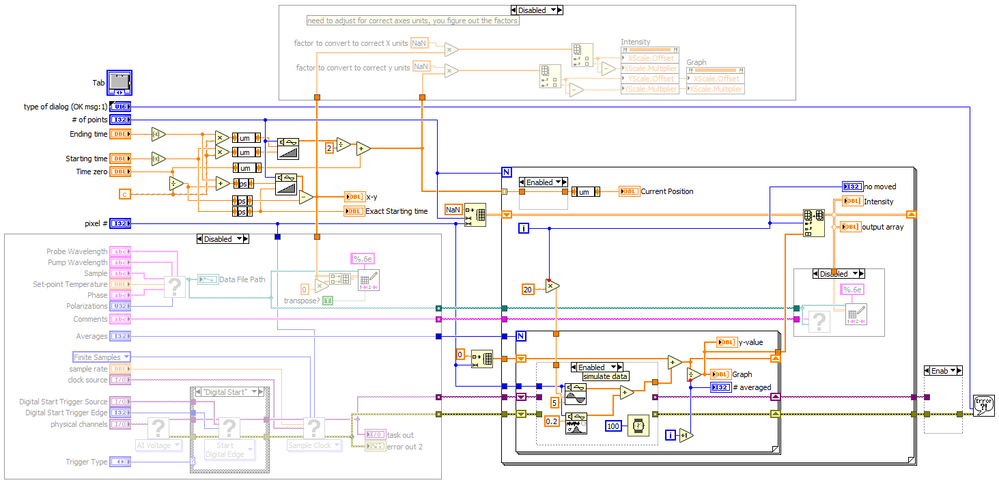

An intensity graph displays a 2D array, which of course has integer indices in both dimensions. Both axes are equally spaced, and the translation to real units is set by the x0 and dx of each axis, which you can calculate from your linear x and y ramps.
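To make the x0/dx idea concrete, here is a minimal sketch in Python (not LabVIEW) that derives the offset and increment from an evenly spaced ramp. The helper name `axis_scale` is hypothetical, and treating the thread's "-1 ps to 3 ps over 130 steps" as 130 points with both endpoints included is an assumption.

```python
def axis_scale(ramp):
    """Return (x0, dx) for an evenly spaced ramp of axis values."""
    if len(ramp) < 2:
        raise ValueError("need at least two points to define a spacing")
    x0 = ramp[0]                                  # real-world value at index 0
    dx = (ramp[-1] - ramp[0]) / (len(ramp) - 1)   # real-world step per index
    return x0, dx


# ASSUMPTION: 130 time points from -1 ps to 3 ps, endpoints included.
n_steps = 130
start_ps, stop_ps = -1.0, 3.0
time_points = [start_ps + i * (stop_ps - start_ps) / (n_steps - 1)
               for i in range(n_steps)]

x0, dx = axis_scale(time_points)
print(x0, dx)  # offset and multiplier for the time axis

# Index i on the axis then corresponds to x0 + i * dx picoseconds.
```

The same calculation applies to the other axis with its own ramp; the 2D array itself only ever carries the amplitudes.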

Currently you are replacing each row twice with different data, which makes of course no sense at all.
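As a sketch of what "one write per row" could look like, the Python below averages repeated acquisitions at each time position and stores the result in exactly one row of the 2D array. It is not the poster's VI: `acquire`, `fake_acquire`, and the tiny sizes are placeholders, and reading the thread's 130 and 384 as the two array dimensions or the averaging count is left open.

```python
def average_traces(traces):
    """Element-wise mean of several equally long traces."""
    n = len(traces)
    return [sum(column) / n for column in zip(*traces)]


def build_intensity_array(acquire, n_time_points, n_averages):
    """Build a 2D list with one averaged trace per time position.

    acquire(t_index) is a hypothetical stand-in for the real acquisition
    and must return a list of floats of constant length.
    """
    rows = []
    for t_index in range(n_time_points):
        traces = [acquire(t_index) for _ in range(n_averages)]
        rows.append(average_traces(traces))  # each row is written exactly once
    return rows


def fake_acquire(t_index, n_points=4):
    """Simulated data: a short ramp shifted by the time index."""
    return [float(t_index + k) for k in range(n_points)]


image = build_intensity_array(fake_acquire, n_time_points=3, n_averages=2)
for row in image:
    print(row)
```

Because each row index is touched once per time step, no row is overwritten with a second, different data set.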

09-24-2021 03:40 PM

So what do you suggest as the fix? I assume you are referring to the Replace Array Subset function that builds the data for the intensity graph. Do you believe this will be fixed by adjusting one index to point at columns instead?

As a note, I am aware that the indices are not entirely proper; I was given the program to work on. One of the indices, whose data has 384 entries as opposed to 130, I assume needs its own loop, separate from the major for loop around the lab creating code, which I plan to try to fix in the future.

09-24-2021 03:46 PM

Here's a very quick attempt at some code cleanup. I don't have any of your hardware or subVIs, so I simulate data instead. See if it can give you some ideas. It is a "literal" cleanup with some glaring redundancies corrected. I am sure there are many more places to improve. Notice the difference in diagram size!

(NOTE: No sequences, no local variables, and no hidden controls/indicators to hold data.)

(And yes, get rid of these units and do explicit conversions. For example x0,dx don't understand units. Not shown here, but look at the disable structure on top)

09-24-2021 03:54 PM

Alright, I'll go over this all and see how effective it is, thank you for the prompt response!

09-25-2021 10:17 AM

On a side note, the presence of EXT datatypes and UNITS in your code was most likely caused by NI's decision to make most of the Math & Scientific Constants (e.g. "speed of light") have units and be EXT by default. This does not really gain anything: for example, the speed of light is exactly defined as an integer with 9 digits (no fractional part) and would have fit equally well into DBL or even I32 (SGL, with its 24-bit significand, would round it slightly). 😄

I probably would have preferred DBL. Users that think they really need EXT can always change the representation. (internally, the values need to utilize the full precision of each datatype of course, but be placed as DBL by default).

(Side note of the side note: We had a similar issue with "machine epsilon" and that got fixed in LabVIEW 2010 already)
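The speed-of-light remark in this post is easy to check outside LabVIEW. The Python sketch below round-trips the value through fixed-width binary formats using the standard `struct` module. It assumes that LabVIEW's I32, SGL, and DBL correspond to a 32-bit signed integer and the IEEE 754 single and double formats; `roundtrip` is a hypothetical helper name.

```python
import struct

C = 299_792_458  # speed of light in m/s, an exact 9-digit integer


def roundtrip(fmt, value):
    """Pack a value into the given binary format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


as_i32 = roundtrip("<i", C)         # 32-bit signed integer
as_dbl = roundtrip("<d", float(C))  # 64-bit IEEE 754 double
as_sgl = roundtrip("<f", float(C))  # 32-bit IEEE 754 single

print(as_i32 == C)  # True
print(as_dbl == C)  # True
print(as_sgl)       # 299792448.0
```

The 32-bit integer and the double hold the constant exactly. The single-precision value is off by 10 because a 24-bit significand can only represent multiples of 32 at this magnitude.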