LabVIEW Memory is full error using To .NET Object.vi

06-26-2017 02:41 PM

We are using the Microsoft.Office.Interop.Excel(15.0.0.0) .NET Constructor to create *.xlsx reports in production. We use LabVIEW to reduce the data. Normally *.csv files created in production contain only 96,320 or so cells. However, we need to make longer test runs for QA/QC.

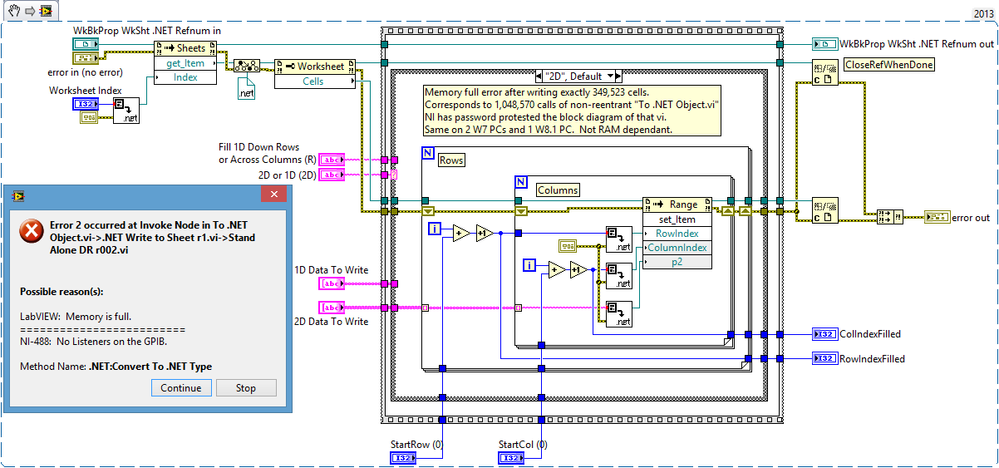

The problem is: after exactly 1,048,570 calls of the non-reentrant 'To .NET Object.vi', we encounter the 'memory is full' error in the VI snippet.

The questions are:

- Does NI limit the number of calls to this VI? It has a password-protected block diagram.

- Does anyone out there know another way to do this, other than the NI Report Generation package, please?

06-26-2017 04:42 PM

Excel 15 (Excel 2013) has a limit of 1,048,576 rows per worksheet, so I'd guess that is the cause of the error. You'll have to break up the data into multiple files if Excel is required.
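A minimal sketch of the splitting suggested above, written in Python since the original is a LabVIEW diagram. `split_rows` is a hypothetical helper; the only fact it relies on is the 1,048,576-row worksheet limit, and a header row, if you write one, uses up one of those rows.

```python
# Excel 2007 and later (including Excel 15 / 2013) allow 1,048,576 rows per worksheet.
EXCEL_MAX_ROWS = 1_048_576


def split_rows(rows, max_rows=EXCEL_MAX_ROWS):
    """Yield consecutive slices of `rows`, each small enough for one worksheet/file.

    Hypothetical helper: `rows` can be any sliceable sequence (list, range, ...).
    """
    if max_rows < 1:
        raise ValueError("max_rows must be positive")
    for start in range(0, len(rows), max_rows):
        yield rows[start:start + max_rows]


# Usage: 2,500,000 data rows would need three files.
chunks = list(split_rows(range(2_500_000)))
print([len(chunk) for chunk in chunks])   # [1048576, 1048576, 402848]
```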

06-26-2017 07:35 PM

Thank you for your response, Chris. The *.csv data file is 19.9 MB, with 32,705 rows and 80 columns, so the number of cells to write is R × C = 2,616,400. That means 7,849,200 calls (plus 2 earlier calls) to the non-reentrant 'To .NET Object.vi', because each cell needs one call each for the row number, the column number, and the data to write. Since we can manually copy and paste the *.csv data into an Excel data reduction spreadsheet template (*.xltx), and the data array is small by modern Excel standards, I do not think we are hitting an Excel row or column limit.
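The arithmetic above can be checked with a few lines of Python. The constants come straight from the post; the figure of 3 calls per cell assumes every call creates a new .NET object, as described.

```python
ROWS, COLS = 32_705, 80      # size of the QA/QC *.csv file
CALLS_PER_CELL = 3           # row number, column number, data value
SETUP_CALLS = 2              # the "2 previous calls" mentioned in the post
EXCEL_MAX_ROWS = 1_048_576   # worksheet row limit quoted in the previous reply
FAILED_AT_CALL = 1_048_570   # call count at which the error appeared

cells = ROWS * COLS
calls_needed = cells * CALLS_PER_CELL + SETUP_CALLS

print(f"cells to write:          {cells:,}")                 # 2,616,400
print(f"To .NET Object.vi calls: {calls_needed:,}")          # 7,849,202
print(f"rows fit in one sheet:   {ROWS <= EXCEL_MAX_ROWS}")  # True
print(f"calls done before error: {FAILED_AT_CALL / calls_needed:.1%}")
```

So the row count is far below the worksheet limit, while the call count is several times larger than the point where the error appeared.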

The objective is to do everything with LabVIEW at the push of a button. That is why NI customers purchase their product. Valued customers do not expect any aspect of the product they purchased to be intentionally limited in some way.

In this case it seems NI has limited the number of calls to that VI by making it non-reentrant, and so the memory LabVIEW can use fills up. We are presently using LabVIEW 2013 32-bit and will try the 64-bit version next, but I am not sure that will help.

Since data coercion affects memory utilization, I tried a 'To Variant' function in front of each 'To .NET Object.vi' call. That got rid of the coercion dots, but produced exactly the same result, stopping at exactly the same point.

I need to know directly from NI engineers if I am wasting my time trying to use a product they have limited. Good for LabVIEW Report Generation Toolkit sales though. Perhaps I will try a trial license (if available) of this add-on toolkit. If it works for 2,616,400 cells then we may understand more about what NI did with 'To .NET Object.vi'.

Warm Regards,

Lloyd

06-26-2017 09:31 PM - edited 06-26-2017 09:32 PM

I'm not sure I really want to weigh in on this. But I assume you have at least attempted to use the profiler to examine the memory consumption.

I changed my mind. Best of luck.

06-26-2017 11:24 PM

With such a huge amount of data, I really think Excel files are not good candidates! Why make your life harder? If you need to generate reports with large amounts of data, there are much better choices. The one I use most often is TDMS files. Store your data properly in channels under different groups; you can structure data saving in a very nice way. Plus, you can set properties on the TDMS file, such as operator names, product-specific info for that particular test, etc. You could even generate a text report of a few pages, and the TDMS file would hold the large data part.

Additional benefit of TDMS files: you can create custom LabVIEW code for very fast access to your data! If you do not want to write your own data access tools for your TDMS "database", you can consider NI's software tool NI DIAdem: https://www.ni.com/en/shop/data-acquisition-and-control/application-software-for-data-acquisition-an...

Edit: also see this page: https://www.ni.com/en/shop/labview/data-storage-reporting-labview.html

Edit2: another option to consider is using a real database alongside LabVIEW, but I am not familiar with this topic...

06-27-2017 02:15 AM

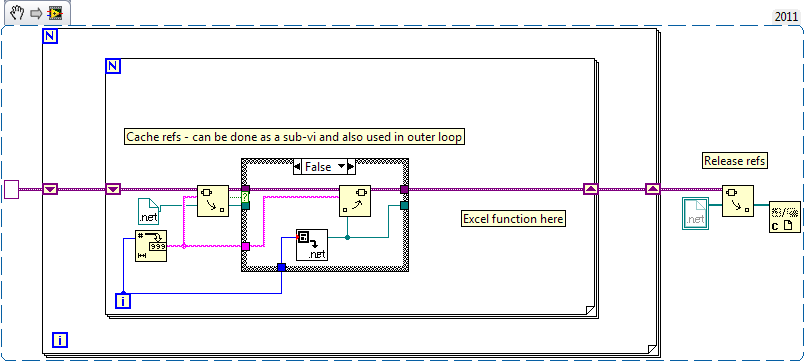

I've noticed something similar before: it seems there's a limit to the number of .NET objects that can be in memory. What you'll need to do is close the reference after using it and/or cache it so you don't create a new one every time.

It seems to be a Windows/.NET problem.

/Y

06-27-2017 05:30 AM - edited 06-27-2017 05:36 AM

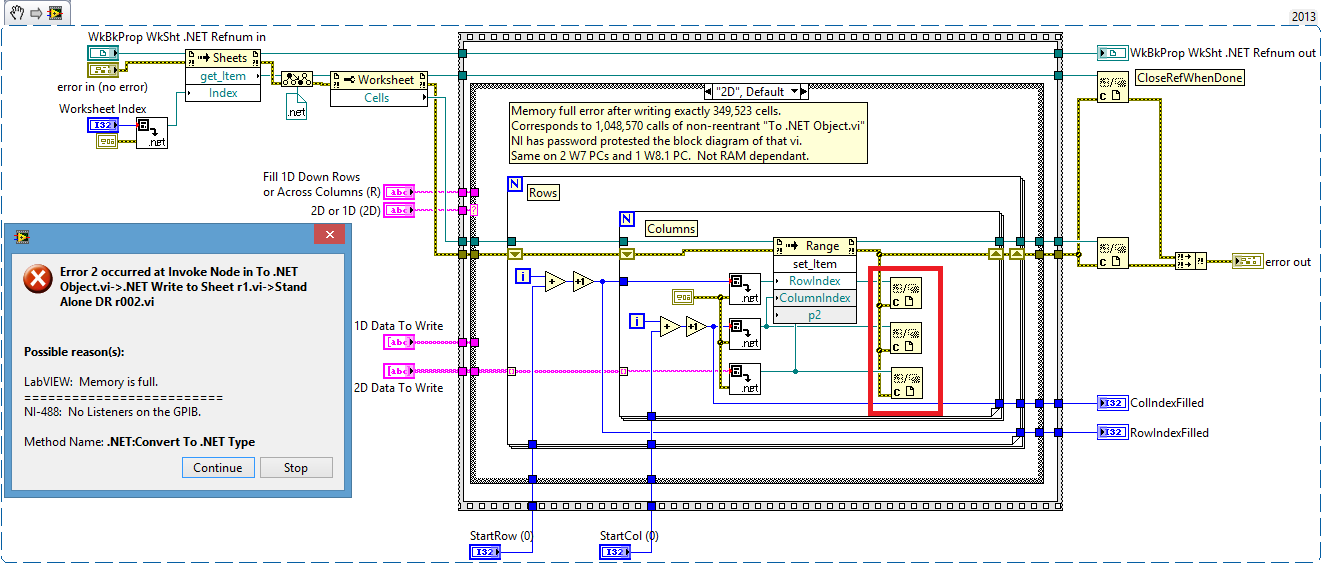

Actually, it's most likely a LabVIEW problem, though it can easily be worked around. The greenish wires are all LabVIEW refnums. LabVIEW can only create 2^20 refnums of each type (all .NET refnums are one type, file refnums another, etc.). This means that after creating 1,048,576 open refnums of any particular type, LabVIEW hits an out-of-refnums error, which, because there is no specific error code for it, is reported as an out-of-memory error.

The solution is to close each refnum after it is not used anymore, which will return that refnum to the resource manager for reuse.
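To illustrate the mechanism described above, here is a toy Python model of a per-type refnum table with a fixed number of slots. `RefnumPool` is hypothetical and is not LabVIEW's actual resource manager; it only shows why never-closed refnums eventually fail while closed ones get recycled. The usage part uses a small capacity so it runs quickly.

```python
class RefnumPool:
    """Toy model of a per-type refnum table with a fixed number of slots.

    Hypothetical sketch of the behaviour described in this thread,
    not LabVIEW's real resource manager.
    """

    def __init__(self, capacity=2 ** 20):   # 2**20 = 1,048,576 slots per type
        self.capacity = capacity
        self._next = 0        # lowest refnum that has never been handed out
        self._free = []       # closed refnums waiting to be reused
        self._open = set()    # refnums currently in use

    @property
    def open_count(self):
        return len(self._open)

    def open(self):
        if self._free:
            ref = self._free.pop()          # reuse a closed slot first
        elif self._next < self.capacity:
            ref = self._next
            self._next += 1
        else:
            # No dedicated "out of refnums" error, so report it as out of memory.
            raise MemoryError("Memory is full (refnum table exhausted)")
        self._open.add(ref)
        return ref

    def close(self, ref):
        self._open.remove(ref)   # KeyError if ref is not currently open
        self._free.append(ref)   # slot goes back to the pool for reuse


# Leaking: open a refnum per call and never close it.
leaky = RefnumPool(capacity=1_000)
opened = 0
try:
    while True:
        leaky.open()
        opened += 1
except MemoryError as err:
    print(f"leaky pool failed after {opened} opens: {err}")

# Closing each refnum right after use keeps the table from filling up.
tidy = RefnumPool(capacity=1_000)
for _ in range(10_000):
    tidy.close(tidy.open())
print("tidy pool open refnums:", tidy.open_count)
```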

There are likely some serious performance optimization opportunities: create the corresponding refnums only once outside the loops, cast them to the specific .NET object type (Numeric/String), then inside the loop assign the actual value through a property or method access node and pass the refnum like that to the set_item method, and of course close the refnums after the loop.
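A hedged Python sketch of that restructuring. `DotNetBox` stands in for a refnum to a .NET Numeric or String object and `set_item` for the Excel method; both are hypothetical stand-ins. The point is only the number of objects created: three per cell in the original pattern versus three in total.

```python
class DotNetBox:
    """Hypothetical stand-in for a refnum to a .NET Int32 or String object."""
    opened = 0   # class-wide count of boxes ever created

    def __init__(self):
        DotNetBox.opened += 1
        self.value = None
        self.closed = False

    def close(self):
        self.closed = True


def set_item(sheet, row_ref, col_ref, data_ref):
    """Hypothetical set_item: copies the boxed values into a dict 'sheet'."""
    sheet[(row_ref.value, col_ref.value)] = data_ref.value


def write_per_cell(data):
    """Original pattern: three new objects per cell, never closed."""
    sheet = {}
    for r, row in enumerate(data):
        for c, x in enumerate(row):
            boxes = [DotNetBox() for _ in range(3)]
            for box, v in zip(boxes, (r, c, x)):
                box.value = v
            set_item(sheet, *boxes)
    return sheet


def write_reused(data):
    """Create the three objects once, update their values, close after the loop."""
    sheet = {}
    row_ref, col_ref, data_ref = DotNetBox(), DotNetBox(), DotNetBox()
    try:
        for r, row in enumerate(data):
            row_ref.value = r
            for c, x in enumerate(row):
                col_ref.value = c
                data_ref.value = x
                set_item(sheet, row_ref, col_ref, data_ref)
    finally:
        for ref in (row_ref, col_ref, data_ref):
            ref.close()
    return sheet


data = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
DotNetBox.opened = 0
a = write_per_cell(data)
per_cell = DotNetBox.opened
DotNetBox.opened = 0
b = write_reused(data)
reused = DotNetBox.opened
print(a == b, per_cell, reused)   # True 18 3
```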

06-27-2017 06:07 AM

Some caching would be nice, as mentioned.
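One way to picture the caching idea, as a Python sketch: create one object per distinct column index on first use and look it up afterwards. `RefCache` and its factory are hypothetical; the key is the string form of the index, mirroring LabVIEW variant attribute names, which are strings.

```python
class RefCache:
    """Hypothetical cache: one object per distinct index, created on first use."""

    def __init__(self, factory):
        self._factory = factory   # e.g. something that wraps To .NET Object
        self._items = {}
        self.misses = 0           # how many objects were actually created

    def get(self, index):
        key = str(index)          # variant attribute names are strings
        ref = self._items.get(key)
        if ref is None:
            ref = self._factory(index)
            self._items[key] = ref
            self.misses += 1
        return ref

    def close_all(self, closer):
        """Close every cached object once, after the loops are finished."""
        for ref in self._items.values():
            closer(ref)
        self._items.clear()


cache = RefCache(lambda i: ("net-object", i))   # hypothetical stand-in objects
for row in range(100):
    for col in range(80):
        ref = cache.get(col)
print(cache.misses)   # 80 objects created instead of 8,000
```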

06-27-2017 06:44 AM

While this caching would help somewhat for the column index, it has its own overhead of converting the index to a string to then do a variant attribute lookup, so I would guess it is not really that beneficial.

And as an extra note, there is another To .NET Object refnum at the beginning of the function that needs to be closed too!

06-27-2017 08:46 AM

@rolfk wrote:

While this caching would help somewhat for the column index, it has its own overhead of converting the index to a string to then do a variant attribute lookup, so I would guess it is not really that beneficial.

And as an extra note, there is another To .NET Object refnum at the beginning of the function that needs to be closed too!

I actually recreated the 'bug' earlier today simply by having a loop create .NET objects from the loop counter (i). It only created some 1-200 objects/second, so the overhead of a variant lookup is negligible.

/Y