- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Is there a file size limit when using Read From Spreadsheet File?

Solved!02-18-2014 01:41 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

I'm trying to read in a large file, about 52mb, 525600 lines with 27 fileds in each line using "Read From SpreadsheetFile.vi". I then search the 2D array for -999, which represents bad/no data in that field and total the number of fields with -999 in it. This all works on 3 months worth of data. The program is giving me an out of memory error and stopping in the case where the read takes place on the large one year file. So my question is does the Read from Spreadsheet file have size limitations? Is there a better way to do this? Thanks in advance for the help.

ssmith

Solved! Go to Solution.

02-18-2014 02:45 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

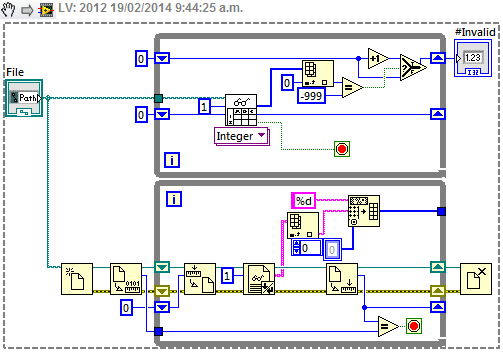

You could read one line at a time, such as in the example here. For large files, you should only open the file once and then extract each line as shown in the lower part of the diagram, without creating a 2D array.

02-18-2014 03:08 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

@ssmith490D wrote:

I'm trying to read in a large file, about 52mb, 525600 lines with 27 fileds in each line using "Read From SpreadsheetFile.vi". I then search the 2D array for -999, which represents bad/no data in that field and total the number of fields with -999 in it. This all works on 3 months worth of data. The program is giving me an out of memory error and stopping in the case where the read takes place on the large one year file. So my question is does the Read from Spreadsheet file have size limitations? Is there a better way to do this? Thanks in advance for the help.

ssmith

You sure it's 52 MB? I was building a file with similar dimensions (all cells filled with a 14-place number) and I get over 500 MB. This might be too much for your computer to swallow whole without choking unless you've got a lot of RAM (> 4GB). Even then I guess you'd have to run it in 64-bit mode, which may be a bugger.

Cameron

The optimist believes we are in the best of all possible worlds - the pessimist fears this is true.

Profanity is the one language all programmers know best.

An expert is someone who has made all the possible mistakes.

To learn something about LabVIEW at no extra cost, work the online LabVIEW tutorial(s):

LabVIEW Unit 1 - Getting Started</ a>

Learn to Use LabVIEW with MyDAQ</ a>

02-18-2014 04:29 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Camerond--

Thanks for the help. I recreated the VI you posted and unfortunately it doesnt work. It looks like the index array vi is only looking at the zero index and not the other 26 fields in each row. This would probably require a FOR LOOP to go through each field index. This is what I have and it works on a smaller version of the file. For some reason LV is using up bucket loads of memory to run this smaller file and crashes running the 60MB file. I've attached one of my vi's to solve this problem and the smaller data set. You'll see in the beginning that I trim some columns and rows to leave just the data I want to scan. Thanks again for the help.

I just tried send 3 months worth of one minute data and it failed. So here is a really small version, 20 minutes worth of data. Does anyone see anything that would cause memory usage issues here?

ssmith

02-18-2014 04:30 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Sorry Greg, gave credit to the wrong guy on posted vi. Thanks for the help.

ssmith

02-18-2014 05:05 PM - edited 02-18-2014 05:10 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Well, maybe you should autoindex over the 2D array of strings and only convert a row (or column) with each iteration, limiting the need to allocate a huge 2D array that needs to be contiguous in memory. (code not shown)

Here's a loop-free version. Of course you could use similar code one row at a time, autoindexing on the 2D array of strings...

No need for OGTK tools (that do much more than really needed in this case)

02-18-2014 06:38 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

I would probably operate on one raw string row at a time using a FOR loop and convert the times to a single timestamp, which will give a 1D array of timestamps at the output tunnel. Then simply take the array subset of data for each row and count the equals as shown earlier.

02-18-2014 07:00 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Read from Spreadsheet File.vi is not very memory efficient with large files. It reads the entire file as a string and then converts it to a 2D array of DBL. The numeric array occupies 525600*27*8 = 113 MB. The string is of the same order of magnitude, with the exact size depending on the format and how many digits are stored for each value. All the elements of an array must occupy contiguous memory. All the characters of a string must occupy contiguous memory. So if the memory allocated to LV has become fragmented by other operations before trying to read a file of that size, there is a significant probability that a memory error will occur.

Reading in segments as others have suggested is the most reliable way to read large files.

Lynn

02-18-2014 08:46 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Altenbach--

I've incorporated you segment of code in to my vi which I have attached. So can you help me understand what exactly is happening after the Transpose Array vi up to the Add Array Elements. I have an idea but I dont see how the = vi is converting or creating an array of 0,1 based on the comparison? It works fine on the smaller files but still crashes on the large file. When I open LV and the vi it is using 48mb of memory. 3 months of data is 15 Mb in size. When I run this vi on the 3 month file the LV memory usage goes up to 446.8mb and does not come down after the vi stops. If I try running it on the 60mb file the memory usage goes from 48mb to over 1.2GB and I run out of memory and it fails. This I dont understand at all. Anyone have any ideas???

ssmith

02-18-2014 11:04 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

OK, so could you clarify what you actually need in the end? If you just need the two counts, I would not even do the scanning into a numeric. looking for a string "-999" in the string array is probably sufficient. Do you need all the headers? Do you need the rest, i.e. the (valid) data?