How to set High priority execution on part of block diagram

02-05-2023 12:14 AM

I have a VI that has two main "recording" loops. One reads FPGA data and the other reads USB serial device data.

Since the VI also contains other code (GUI updates, an event handler, etc.), there are sometimes strange delays in the "USB recording loop". My question: is there any way to tell LabVIEW to execute the USB reading loop at "High priority", so that it can't be interrupted and runs more consistently?

In other languages such as C, C++, and Delphi, you can define a thread and start it on a specific processor with a "High" priority parameter.

02-05-2023 12:56 AM

Manually assigning thread priorities is almost never the answer in LabVIEW. More often it is the coding that is the issue. Are you trying to do too much in the USB loop? Could you upload the VI?

(Mid-Level minion.)

My support system ensures that I don't look totally incompetent.

Proud to say that I've progressed beyond knowing just enough to be dangerous. I now know enough to know that I have no clue about anything at all.

Humble author of the CLAD Nugget.

02-05-2023 07:07 AM

Don't put any GUI objects in the high priority loop.

Certified LabVIEW Architect, Certified Professional Instructor

ALE Consultants

Introduction to LabVIEW FPGA for RF, Radar, and Electronic Warfare Applications

02-05-2023 09:06 AM

Priorities are set on VIs, not "parts of VIs". So make your USB Loop a VI (well, a "sub-VI"). Then you can assign it a high priority. There are other ways you can optimize the code that we might be able to suggest if we could see the code (and I mean code, not "pictures of code", which don't allow us to see how you've set up the VIs). Note that if your USB Loop, itself, calls other VIs, we'd need to see those, as well. Best is to compress the Project Folder to a Zipped file and attach the entire Project.

Bob Schor

02-05-2023 10:18 AM - edited 02-05-2023 10:24 AM

I agree with the others that messing with priorities will typically make things worse. The compiler usually knows better what to do. A properly architected program should not need it.

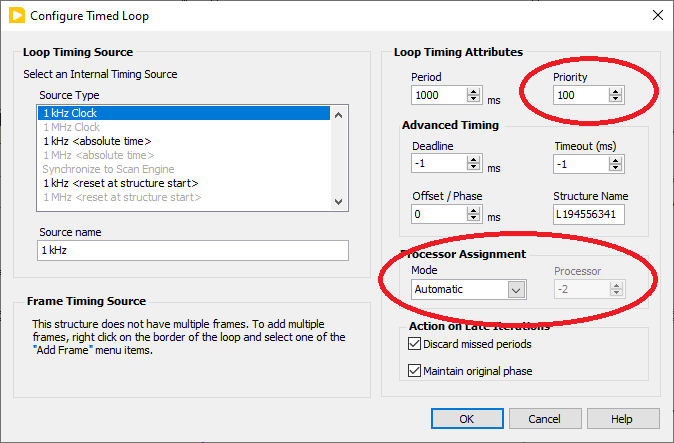

Still, timed loops do have a priority setting, so that would be one way to get lost in the swamp. 😄 I recommend looking elsewhere.

Don't tax the UI thread (e.g. trying to graph millions of points in a few thousand vertical pixels, overuse of property nodes, etc.) and memory manager (don't constantly grow and shrink arrays or make data copies). Run your USB code in an independent loop and push the data into a queue to be processed elsewhere.
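LabVIEW code is graphical, so the queue idea above is sketched here in Python purely as an illustration. It assumes a hypothetical `fake_read` function standing in for the USB read; the names `acquire`, `process`, and `SENTINEL` are made up for this example. The point is only that the acquisition loop does nothing except read and enqueue, while the processing loop blocks on the queue.

```python
import queue
import threading

SENTINEL = None  # tells the consumer that acquisition has finished


def acquire(read_chunk, out_q, n_reads):
    """Acquisition loop: read and enqueue, nothing else (no UI, no parsing)."""
    for _ in range(n_reads):
        out_q.put(read_chunk())
    out_q.put(SENTINEL)


def process(in_q, results):
    """Processing loop: blocks on the queue, so it uses no CPU while idle."""
    while True:
        chunk = in_q.get()
        if chunk is SENTINEL:
            break
        results.append(len(chunk))


def fake_read():
    """Hypothetical stand-in for the USB read; a real device call would go here."""
    return b"\x00" * 64


q = queue.Queue()
results = []
producer = threading.Thread(target=acquire, args=(fake_read, q, 5))
consumer = threading.Thread(target=process, args=(q, results))
producer.start()
consumer.start()
producer.join()
consumer.join()
print(results)
```

Because the queue is unbounded here, a slow consumer never stalls the acquisition side; it only lets the backlog grow, which is usually easier to notice and diagnose than dropped samples.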

If you show us your code, we can probably tell if there are any poorly implemented sections.

Do you even know what causes the USB delays? The cause might be different from what you think. 😄

02-05-2023 12:40 PM

I always get wary when someone asks a very specific question about something obscure like this because it always means they are focusing too much on the "how" and not enough on the "why".

Like "How do I flush the UART buffer" instead of "Why am I getting extra characters?"

02-05-2023 02:57 PM

If the loops are in fact all in one VI, that's likely leading to some of the issues. It's really tempting to do UI stuff in acquisition and processing loops. The UI should only be updated in UI-specific code, and messaging or some other mechanism should be used to get data that needs to be displayed out of other code.

So, without knowing the OP's code structure, the real issues, or whether they're even overusing VI Server, I'll soapbox a bit:

DO NOT USE VI SERVER CALLS INSIDE OF ACQUISITION AND PROCESSING LOOPS. It can be tempting to pass around references to front panel elements and constantly tweak properties and update values with them. Just. Don't. Even in a small program: Don't. It's a bad habit to start, and unless you practice the cleaner design on small programs where it is easy to do, you won't do it on larger programs where the slowness of operating on UI elements by reference has a real impact.

VI Server is many orders of magnitude slower than terminals and local variables, and that's in an ideal situation where VI Server isn't being accessed in several places simultaneously. VI Server access times get worse linearly with the number of accesses being attempted in parallel (due to properly handling priorities of calling VIs) and can quickly go from "it works in the timing I need" to "holy **bleep** all my loops got slow all at once" once a delay threshold is passed, and it can be difficult to find out which loop is actually the cause.

LabVIEW is multi-threaded without having to try, and with today's multi-core processors there's oodles of CPU time to go around. However, there are many tripping points (VI Server, Functional Globals, global variables with multiple writers, etc.) that will all cause calling code to execute as if the loops were in a single-threaded program, because they're all waiting on access to a shared resource and so can't really run in parallel. THAT's why it's important to modularize, encapsulate, and ensure what I'll call "clean" mechanisms for getting data in and out of modules, so that it's much more difficult to accidentally share a resource between them.

Frameworks like DQMH and Actor Framework exist specifically to make it easy to enforce this design constraint, whether you like their techniques or not. People spent the time implementing those because it's very good practice and even if you don't use them (I don't) you should program with similar goals in mind. Having a single VI with loops for acquisition, processing, logging, and UI all on the block diagram is a BadIdea™ and while it may seem at first that more code and more communication mechanisms would make the problem worse, what often is the real problem is misunderstandings around mechanisms that seem easy to use (VI Server references, FGs, etc.). Loops for different "tasks" should definitely be on different block diagrams and avoid the temptation to pass UI references into subVIs except if it's specifically UI related code. e.g. Logging is not UI even if you want to display timing and other statistics to a UI. Logging doesn't do UI stuff but it does have measurable statistics that can be made available to other code for display.
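As a rough illustration of the "logging is not UI" point, here is a minimal Python sketch (LabVIEW itself is graphical, so this is only an analogy). The `Logger` class, the `LogStats` record, and the `latest` helper are all hypothetical names invented for the example. The logger never receives a UI reference; it only publishes statistics on a queue that UI code may read whenever it likes.

```python
import queue
from dataclasses import dataclass


@dataclass(frozen=True)
class LogStats:
    records_written: int
    bytes_written: int


class Logger:
    """Hypothetical logging module: holds no UI reference, only publishes stats."""

    def __init__(self, stats_out):
        self._stats_out = stats_out
        self._records = 0
        self._bytes = 0
        self._lines = []  # stand-in for a file on disk

    def write(self, line):
        self._lines.append(line)
        self._records += 1
        self._bytes += len(line.encode("utf-8"))
        # Publish a snapshot; whoever displays it is not the logger's concern.
        self._stats_out.put(LogStats(self._records, self._bytes))


def latest(stats_in):
    """UI side: drain the queue and keep only the newest snapshot (or None)."""
    newest = None
    while True:
        try:
            newest = stats_in.get_nowait()
        except queue.Empty:
            return newest


stats = queue.Queue()
log = Logger(stats)
for line in ("a", "bc", "def"):
    log.write(line)
snapshot = latest(stats)
print(snapshot)
```

The UI loop decides how often to call `latest`, so a slow or busy display cannot hold up the logging code.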

Once you get cleaner interfaces (mechanisms for getting data in/out) to modules of code it becomes much easier to determine where issues are and what's really causing any timing issues. If there are still performance issues once modularized (even if done poorly) it can be much easier to identify and fix issues since it'll be less likely to influence other code that's running for other tasks.

There is nearly zero reason a program doing some FPGA acquisition and USB comms should need to worry about priorities unless things are extensively handled by polling. FPGA has mechanisms like DMA FIFOs and interrupts to let that part of the host-side code "sleep" until action is needed (unless there's gigabytes of data being thrown around; those RF folk get a bit rowdy with their data slinging). USB might be trickier depending on the communication protocol and timing requirements, but USB should be slow in comparison to how fast data can be thrown around with FPGA.

02-06-2023 11:22 PM

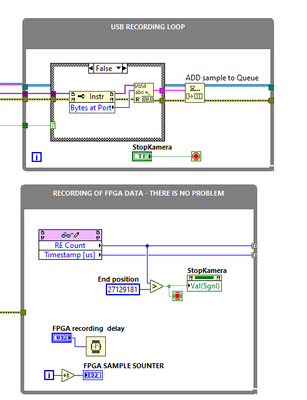

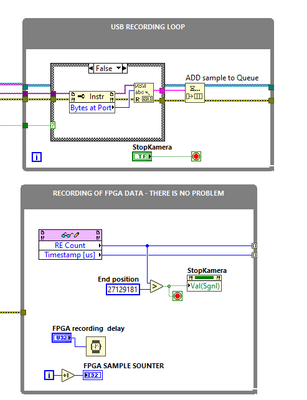

Thanks for the answers. This is a picture of the two loops. The upper loop is the USB recording loop, and it just does not run smoothly because other parts of the program interfere with its performance. The USB recording loop should run on a separate processor...

02-06-2023 11:29 PM

Thanks for the idea of a timed loop, I will try it (maybe 😉 ). As you can see in the image I posted, the USB recording loop asks the device how many bytes are at the port, then reads that many bytes and puts them in a queue to be processed elsewhere, as you suggested.

The USB device sends data that consists of timestamp + data + counter. The sample counter should increment monotonically: 1,2,3,4.... but that is not the case! Sometimes samples are lost, so the counter goes 1,2,3,8,9,10... There are no such problems in other software that we use, and the implementation is similar: ask for the number of bytes at the port, then read them.
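To pin down where samples go missing, it can help to log every jump in the counter rather than just noticing that gaps exist. A minimal Python sketch of that check follows; the function name `find_gaps` is hypothetical, and it assumes the counter increments by exactly 1 and never wraps around, which the device may or may not guarantee.

```python
def find_gaps(counters):
    """Return (last_seen, next_seen, missing_count) for every jump in the counter.

    Assumes the counter should increase by exactly 1 and does not wrap around.
    """
    gaps = []
    previous = None
    for value in counters:
        if previous is not None and value != previous + 1:
            gaps.append((previous, value, value - previous - 1))
        previous = value
    return gaps


gaps = find_gaps([1, 2, 3, 8, 9, 10])
print(gaps)  # [(3, 8, 4)]
```

Recording the gaps alongside what the rest of the program was doing at the time is one way to see whether the losses line up with UI activity or with something else entirely.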

02-06-2023 11:58 PM - edited 02-07-2023 11:07 AM

Are you aware that writing to a Value (Signaling) property fires an event even if the value did not change? Your lower loop hammers the event structure (... and we have no idea where that even is!).

(If you don't have an event structure, the use of a signaling property is completely pointless. Even a plain value property is expensive. Use a local variable instead.)
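The difference between signaling on every write and signaling only on a change can be shown with a small Python analogy. The `Indicator` class and its method names are hypothetical and are not LabVIEW API; they only mimic the behavior described above.

```python
class Indicator:
    """Hypothetical stand-in for a control that has a value-change event."""

    def __init__(self, value=None):
        self.value = value
        self.events_fired = 0

    def set_signaling(self, value):
        # Mirrors the behavior described above: fires on every write.
        self.value = value
        self.events_fired += 1

    def set_if_changed(self, value):
        # Fires only when the new value differs from the stored one.
        if value != self.value:
            self.set_signaling(value)


always = Indicator()
guarded = Indicator()
for v in [0, 0, 0, 1, 1, 2]:
    always.set_signaling(v)
    guarded.set_if_changed(v)
print(always.events_fired, guarded.events_fired)  # 6 3
```

In a loop that runs thousands of times per second with a mostly constant value, the unguarded version floods the event handler with work that changes nothing.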

What determines the boolean going to the case structure? Are you sure you need "bytes at port"?

We really need to see a bit more of the code and the architecture, and to know what runs where.