How to make a LabVIEW program run faster? Dedicate more CPU to the program?

12-23-2019 07:58 PM

Hi guys,

This is a concept discussion.

We know that modern PC CPUs have more power, higher clock frequencies, and multiple cores. LabVIEW programs mostly run in industrial environments, and the typical application is an ACQUISITION -> PROCESSING -> DISPLAY loop.

I find that CPU usage is only 20-30% while such a loop is running. That is normal and not a problem, but personally I wonder whether the loop time could be optimized by dedicating more of the CPU to the program, like 80-90% or even 100%, to achieve the fastest possible loop.

Is that possible, or will Windows prevent it? How can I make the PC focus more on the LabVIEW program?

I'd appreciate your ideas, thanks.

12-23-2019 08:24 PM

You are, I believe, Asking the Wrong Question, or Worrying about the Wrong Thing. A well-designed LabVIEW routine "doing what LabVIEW is designed to do", i.e. serving as a Laboratory Virtual Instrument Engineering Workbench and handling such Engineering Tasks as acquiring "real-world" data from multiple sensors, analyzing it, and using it to make decisions, control other processes, and save data for later analysis, is, in most cases, running at exactly the right speed and using exactly the right amount of computer resources.

Consider, for example, acquiring data and using those data to control a process. If the time-scale of the data and the process under control is on the order of milliseconds to seconds, it makes no sense to run the CPU at 100% utilization, collecting data at a MHz rate, "just because the CPU can handle it". What makes more sense is to design the routine so that it is easy to create, easy to understand, easy to debug, easy to modify and maintain, and easy to document and "hand off" to another person. LabVIEW (and a good LabVIEW developer) can do that.

Bob Schor

12-24-2019 04:50 AM

Aside from what Bob already mentioned, with which I wholeheartedly agree, there is one other gotcha that may apply to you. The reason that you see "only" 25% CPU usage might be that you have a dual-core CPU with hyper-threading (and a badly behaving LabVIEW loop spinning at maximum speed, usually doing nothing).

A LabVIEW loop without any asynchronous nodes in it (Wait, asynchronous I/O, etc.) will execute at the maximum speed that a single thread can provide. Generally you can't split a loop up to distribute it over multiple threads or cores, although if the loop contains only certain types of functions, including mathematical functions, you can tell LabVIEW to parallelize it over multiple threads/cores.
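LabVIEW's iteration parallelism is a setting on the For Loop itself, so there is no text code to show for it. As a rough, hedged analogue in Python, the sketch below does roughly what a parallelized loop has to do: split the iteration range into contiguous chunks, run each chunk on a worker, and stitch the results back together in order. That only gives the right answer when no iteration needs another iteration's result. `partition` and `parallel_map` are hypothetical names. Note that CPython threads don't execute pure-Python code on several cores at once (the GIL), so this shows the structure rather than a speedup; swapping in `concurrent.futures.ProcessPoolExecutor` is the usual route to real multi-core execution in Python.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, Tuple


def partition(n: int, parts: int) -> List[Tuple[int, int]]:
    """Split range(n) into `parts` contiguous (start, stop) chunks of near-equal size."""
    parts = max(1, min(parts, n)) if n else 1
    base, extra = divmod(n, parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks get one additional iteration each.
        stop = start + base + (1 if i < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def parallel_map(fn: Callable[[float], float], data: Sequence[float],
                 workers: int = 4) -> List[float]:
    """Apply fn to every element; only valid if iterations are independent."""
    def run_chunk(bounds: Tuple[int, int]) -> List[float]:
        start, stop = bounds
        return [fn(x) for x in data[start:stop]]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields chunk results in submission order,
        # so the flattened output keeps the input order.
        results = pool.map(run_chunk, partition(len(data), workers))
        return [y for chunk in results for y in chunk]
```

For example, `parallel_map(lambda x: x * x, [float(i) for i in range(10_000)])` returns the same list as the plain serial comprehension, which is a useful check to keep next to any parallelized loop.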

For short-running loops that is acceptable and sometimes desirable, but you NEVER/EVER want a LabVIEW loop that runs for the lifetime of your application to run at this maximum speed. All it will do 99% of the time is heat up the CPU by doing nothing very, very fast.
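To see this difference outside LabVIEW, the hedged Python sketch below runs the same fixed-rate loop two ways: paced, where each iteration sleeps until its next deadline (roughly what a Wait or Wait Until Next ms Multiple gives a LabVIEW loop), and greedy, where it spins polling the clock. Both finish in about the same wall-clock time, but the greedy version burns CPU the whole way. `run_loop` is a hypothetical helper, not a LabVIEW API.

```python
import time
from typing import Tuple


def run_loop(iterations: int, period_s: float, paced: bool) -> Tuple[float, float]:
    """Run a fixed-rate loop and return (wall_seconds, cpu_seconds) it consumed."""
    wall0, cpu0 = time.perf_counter(), time.process_time()
    next_tick = wall0
    for _ in range(iterations):
        # ... the real work of one iteration would go here ...
        next_tick += period_s
        if paced:
            # Like a LabVIEW Wait: hand the core back to the OS until the next tick.
            delay = next_tick - time.perf_counter()
            if delay > 0:
                time.sleep(delay)
        else:
            # Greedy: spin on the clock, keeping a core busy doing nothing.
            while time.perf_counter() < next_tick:
                pass
    return time.perf_counter() - wall0, time.process_time() - cpu0
```

Comparing `run_loop(100, 0.01, paced=True)` with `paced=False` typically shows the paced loop using a small fraction of its wall time as CPU time, while the greedy loop uses roughly all of it.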

12-24-2019 06:48 AM - last edited on 05-12-2025 03:19 PM by Content Cleaner

@avater wrote:

I find that CPU usage is only 20-30% while a loop is running. This is normal and not a problem, but personally I think the loop time could be optimized by dedicating more CPU to the program, like 80-90% or even 100%, to achieve the fastest loop.

If you are using only 20-30% of your CPU, trying to single-thread your code will not make it run any faster. Often I see it slow down when people try that. Most commonly, I see speedups from doing what LabVIEW is really good at: parallelization. The most common way I see this improve performance is by moving your File I/O (i.e. logging) into a loop of its own, leaving all of your other threads free to run as fast as they need to or can (Producer/Consumer).
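A minimal Python sketch of the Producer/Consumer split, assuming one acquisition loop and one logging loop that share a FIFO queue (in LabVIEW this would be a queue created with Obtain Queue; here it is Python's `queue.Queue`). The function names and the stop sentinel are illustrative only. The point is that the producer never waits on disk, and the consumer blocks on the queue, so it uses no CPU while idle.

```python
import queue
import threading
from typing import List

_STOP = object()  # sentinel telling the consumer that acquisition has finished


def acquire(q: queue.Queue, n_blocks: int) -> None:
    """Producer: stands in for the acquisition loop; it only enqueues data."""
    for i in range(n_blocks):
        block = [float(i)] * 4  # placeholder for one block of samples
        q.put(block)
    q.put(_STOP)


def log(q: queue.Queue, sink: List[list]) -> None:
    """Consumer: stands in for the logging loop; q.get() blocks while idle."""
    while True:
        item = q.get()
        if item is _STOP:
            break
        sink.append(item)  # a real logger would write the block to a file here


def run(n_blocks: int) -> List[list]:
    q: queue.Queue = queue.Queue()
    logged: List[list] = []
    consumer = threading.Thread(target=log, args=(q, logged))
    consumer.start()
    acquire(q, n_blocks)  # the producer runs in the calling thread
    consumer.join()
    return logged
```

Because the queue is FIFO, the logger receives blocks in acquisition order even if it temporarily falls behind.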

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

12-24-2019 01:22 PM - edited 12-24-2019 02:53 PM

My guess is that you have a 4-core CPU and one of the cores is running at 100% due to poor programming (a greedy loop, hammering the UI thread, excessive use of Value (and other) property nodes, etc.).

For example, code made of several parallel greedy loops (add more loops if you have even more cores!) can use all the real CPUs, so you'll get near 100% on a regular CPU (and ~50% on a hyper-threaded CPU). What is the exact model of your processor?

Analogy: Your car's wheels spin fastest if you put the car on blocks with the wheels in the air, but what would be the purpose? You'll just ruin your engine!

Do you really have performance problems? Does the program "feel slow"? (Sluggish graph update? Slow response to mouse interactions?)

Can it keep up with the incoming data?

What is your program architecture?

A good programmer does not try to maximize CPU usage (unless you need to heat the room in winter with the excess thermal output!). A sustained CPU usage of 25% is way too high! There is insufficient headroom for the short moments when you actually need to be fast. As soon as the customer wants you to increase the data rate or run on a less powerful CPU, you are out of luck and need to re-architect from scratch.

A good programmer tries to minimize CPU use. 20+ years ago, our CPUs were hundreds of times slower and had only a single core, and we were still able to do what needed to be done. I'd be worried if a program used more than a few %.

If acquisition is involved, that's probably the time limiting step anyway.

I am sure we can help you optimize your program if you could give us more information.

- Acquisition: What rate? How many channels? How exactly (communicating with an external device, DAQ card, USB, software-timed, hardware-timed, etc.)? Are you using mostly Express VIs or low-level functions?

- Processing: What kind of processing (simple scaling, averaging, big FFTs, etc.)? Who wrote the algorithm?

- Display: A simple chart with a limited history length? Ever-growing data in an XY graph? Complicated graphs with millions of points? Many overlapping items on the front panel?

What is the time-limiting step? Only the "processing" can potentially be parallelized, but if it already keeps up with the acquisition, there is no need for that, and parallelizing could actually slow you down due to the extra overhead.
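Finding the time-limiting step is a measurement, not a guess. A hedged Python sketch, assuming each stage can be called on its own as a function; `time_stages` and `bottleneck` are hypothetical helpers. In LabVIEW you would more likely use the Profile Performance and Memory tool or Tick Count (ms) timestamps around each stage, but the logic is the same.

```python
import time
from typing import Callable, Dict, Tuple


def time_stages(stages: Dict[str, Callable[[], None]], repeats: int = 5) -> Dict[str, float]:
    """Return the mean wall-clock seconds per call for each stage."""
    results: Dict[str, float] = {}
    for name, stage in stages.items():
        t0 = time.perf_counter()
        for _ in range(repeats):
            stage()
        results[name] = (time.perf_counter() - t0) / repeats
    return results


def bottleneck(timings: Dict[str, float], block_period_s: float) -> Tuple[str, bool]:
    """Name the slowest stage and whether one pass of all stages fits in one block period.

    Summing assumes the stages run one after another in a single loop; with a
    producer/consumer split, each stage only needs to fit in the period on its own.
    """
    slowest = max(timings, key=lambda name: timings[name])
    keeps_up = sum(timings.values()) < block_period_s
    return slowest, keeps_up
```

If the slowest stage turns out to be the acquisition itself, parallelizing the processing will not make the loop any faster.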

So, feel free to show us your code and we can help you minimize your very excessive current CPU usage. 😄

12-24-2019 02:06 PM

The OP's question is interesting to me, but maybe not for its intended purpose.

On Unix OSes, there is a "nice" command that lets you dedicate more or less CPU time to a particular program; that is, the program can be greedy or nice. I imagine it has to do with the thread scheduler, but I'll leave that to @rolfk. Windows does not really have a nice command. LabVIEW lets you set priorities for VIs, but I haven't seen anything that suggests they are really needed; please correct me if I am wrong.
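For reference, a hedged Python sketch of what nice does, using `os.nice`, which is Unix-only (on Windows the rough counterpart is the process priority class, set from Task Manager or via the Win32 `SetPriorityClass` call). Raising niceness (lowering priority) is always allowed; lowering it (becoming greedier) normally needs root on Linux and otherwise fails with `PermissionError`. Priority only changes how the scheduler splits time when processes compete for a core; it does not make an otherwise idle core run your code any faster. The helper names are hypothetical.

```python
import os
from typing import Optional


def current_niceness() -> int:
    """os.nice(0) changes nothing and returns the process's current niceness (Unix only)."""
    return os.nice(0)


def adjust_niceness(increment: int) -> Optional[int]:
    """Add `increment` to this process's niceness and return the new value.

    Positive increments (less greedy) always succeed; negative ones (greedier)
    usually need root on Linux, in which case None is returned instead.
    """
    try:
        return os.nice(increment)
    except PermissionError:
        return None
```

From a shell, the equivalent is starting a program with `nice -n 10 <command>`.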

But it would be nice, if not on a real-time OS, to be able to tell the OS that my program has the highest priority, not whatever those IT guys try to push onto my system.

Cheers,

mcduff

12-24-2019 02:24 PM - edited 12-24-2019 02:35 PM

@mcduff wrote:

On Unix OSes, there is a "nice" command that lets you dedicate more or less CPU time to a particular program; that is, the program can be greedy or nice. I imagine it has to do with the thread scheduler, but I'll leave that to @rolfk. Windows does not really have a nice command. LabVIEW lets you set priorities for VIs, but I haven't seen anything that suggests they are really needed; please correct me if I am wrong.

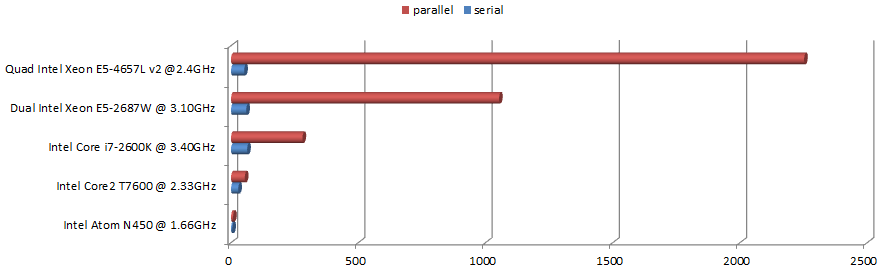

While the LabVIEW compiler is great at running things in parallel, using 100% CPU for real work (i.e. not just a collection of greedy loops running in parallel 🐵) requires significant programming effort to keep all cores busy for extended periods. A typical program set to "greedy" will not be able to use more than 25% on a quad core system.
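The 25% figure is simple arithmetic: one saturated thread divided across the logical processors that the OS reports. A quad-core CPU without hyper-threading and a dual-core CPU with it (the case mentioned earlier in the thread) both show 4 logical processors, so one pegged thread reads as 100/4 = 25% overall. A tiny Python sketch; `single_thread_ceiling` is a hypothetical name, and `os.cpu_count()` returns the logical processor count.

```python
import os
from typing import Optional


def single_thread_ceiling(logical_cpus: Optional[int] = None) -> float:
    """Overall CPU % that one fully busy thread can show in an 'overall utilization' view."""
    n = logical_cpus or os.cpu_count() or 1  # fall back to 1 if the count is unknown
    return 100.0 / n
```

So `single_thread_ceiling(4)` gives 25.0 and `single_thread_ceiling(8)` gives 12.5; an overall reading stuck near this value is a hint that a single greedy loop is pegging one logical processor.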

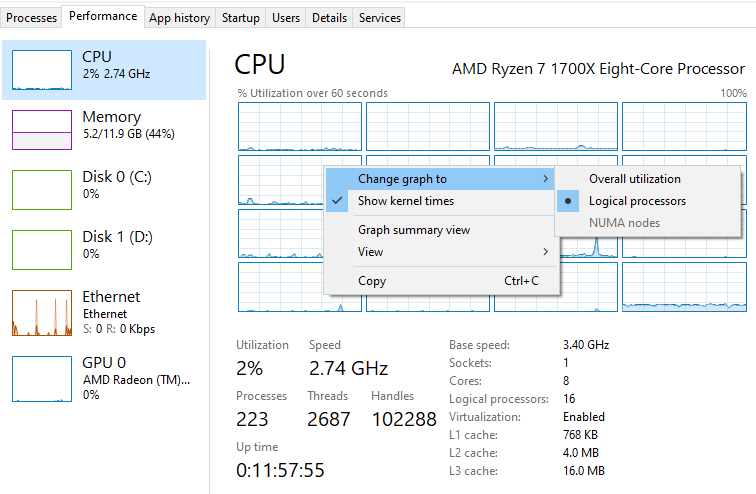

As a first step, the OP should set the Task Manager > Performance > CPU display to "Logical processors" instead of the default "Overall utilization" (right-click on the graph, see picture). This way we can easily tell if one CPU is pegged at 100%. Of course, the OS will most likely shuffle the process around from core to core to even out the thermal load.

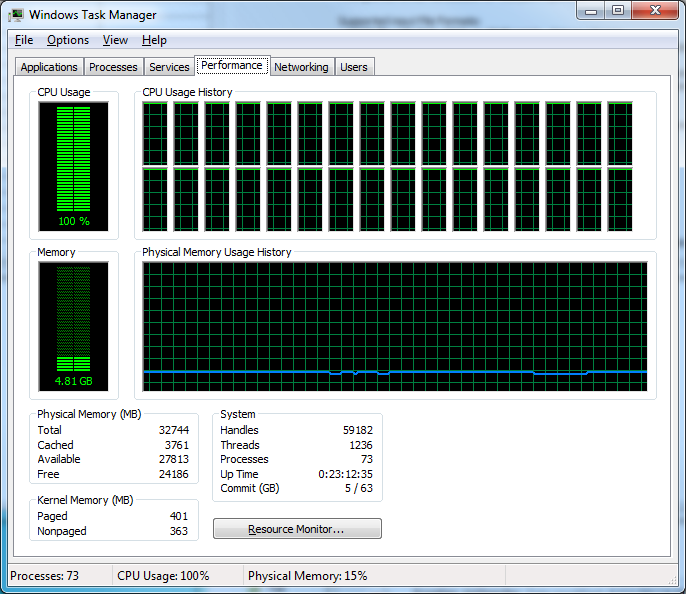

Yes, properly programmed, we can use 100% of all cores. Here's my 16-core (32 hyper-threaded logical cores) machine running one of my analysis programs 16+ times faster than on a single core (details).

12-24-2019 02:52 PM

Can't quote on this browser.

Nice works well when there is a lot of CPU activity going on. Sometimes IT does something to my computer and the CPU goes crazy, nothing LabVIEW-related. Nice lets you partition the CPU resources unequally during such times: rather than sharing resources equally, it lets you give a process more or less CPU time than other processes.

There are times when USB connections get knocked out by these IT intrusions; I would like to prevent that in my programs by making my program less nice.

Here's an example of something I do to lessen the IT tax. Our computers all have passwords, which we cannot share, and screen savers, which we cannot turn off. So during field tests, if the owner of the laptop is not nearby and the screen saver comes on, others may not be able to check how our data acquisition is proceeding.

The VIs here allow one to temporarily disable the screen saver by telling Windows that a presentation/movie/etc. is playing, so it should not turn on the screen saver.

cheers,

mcduff

12-25-2019 02:18 AM

Thanks for your kind reply.

I know the execution time is determined by a well-designed structure, like a parallel structure.

What my post is trying to figure out is whether, besides the structure issue, there is a way to let the CPU know that the PROCESSING needs to be done ASAP.

For example, I have an array of 10k elements that needs to be processed, so I put it in a For Loop. Each iteration takes a tiny amount of time, but the total time is significant.

So if the CPU could dedicate itself to this For Loop, maybe the time cost could be shorter.

Maybe this question is OS-related, which I'm not familiar with. Now I think I've got something.

12-25-2019 02:22 AM

Thanks for your PROFESSIONAL replies. This is great for LabVIEW users.

I don't have any CODE that needs to be fixed, just an idea I want to figure out.

My superficial thought was that if the CPU ran a LabVIEW program at its highest frequency, the execution time could be shorter.