
Enabling Loop Parallelism seems to take longer

11-25-2015 04:13 PM - edited 11-25-2015 04:15 PM


Hi guys,

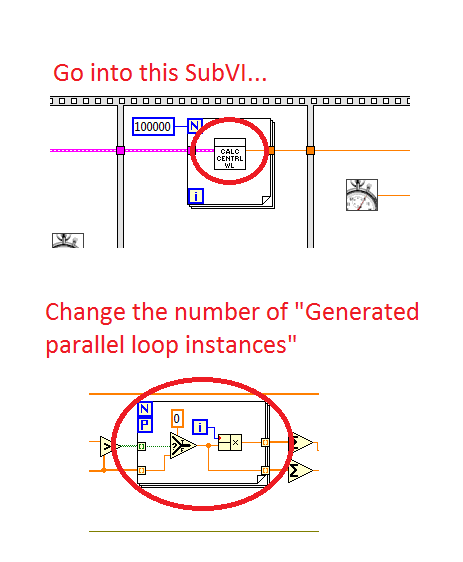

I was benchmarking a VI to see how much time enabling loop parallelism would save. The loop only uses Select and Multiply on a 2048-element array. To my surprise, enabling parallelism increased the execution time! Please see whether you can recreate what I am seeing: change the loop parallelism setting in the attached subVI (Calc Central Wavelength.vi), vary the number of parallel loop instances allowed, and then run Test Calc Central Wavelength.vi to see how long it takes. The more loop instances I allow, the longer it takes! Thank you for any insight.


11-25-2015 04:52 PM - edited 11-25-2015 04:52 PM


What CPU are you running this with? Single core?

11-25-2015 04:54 PM


Doing it without a loop is even faster: 3.8 seconds compared to 5.8 seconds. Further refinements may be possible.

Lynn

11-25-2015 05:05 PM


Can that code really run fully in parallel? It looks to me like the compiler basically has to perform the work sequentially, since you rely on the loop index and combine the results back in order via the tunnels. Given the number of iterations you are running (thousands), it's likely that the context-switching overhead is being clearly exposed.

11-25-2015 05:21 PM - edited 11-26-2015 02:55 AM


Parallelism requires some overhead to split and reassemble the data, etc. If your loop code does not do much work per iteration, the parallelism overhead is relatively large and can even slow you down.
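As a rough analogy only (LabVIEW's parallel For Loop scheduling is internal and not shown in the thread), the Python sketch below makes the split, compute, and reassemble steps explicit for a tiny per-element body. The names `work`, `sequential`, and `parallel`, the threshold of 50.0, and the worker count are all hypothetical. Note that CPython's GIL prevents threads from speeding up pure-Python arithmetic, so any timings it prints show bookkeeping overhead, not LabVIEW numbers.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def work(x: float, threshold: float) -> float:
    # Hypothetical tiny per-element body, roughly "select + multiply".
    return x * 2.0 if x > threshold else 0.0


def sequential(data: list[float], threshold: float) -> list[float]:
    return [work(x, threshold) for x in data]


def parallel(data: list[float], threshold: float,
             pool: ThreadPoolExecutor, workers: int) -> list[float]:
    # 1. Split: one contiguous chunk per worker (ceiling division).
    size = max(1, -(-len(data) // workers))
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    # 2. Compute: each chunk is a separately scheduled task, which costs
    #    time no matter how little work the chunk contains.
    parts = pool.map(lambda chunk: [work(x, threshold) for x in chunk], chunks)
    # 3. Reassemble: pool.map yields results in submission order, so
    #    concatenation restores the original element order.
    out: list[float] = []
    for part in parts:
        out.extend(part)
    return out


if __name__ == "__main__":
    data = [float(i % 100) for i in range(2048)]  # 2048 elements, as in the thread
    with ThreadPoolExecutor(max_workers=4) as pool:
        for name, fn in (("sequential", lambda: sequential(data, 50.0)),
                         ("parallel", lambda: parallel(data, 50.0, pool, 4))):
            t0 = time.perf_counter()
            for _ in range(200):
                fn()
            print(f"{name}: {time.perf_counter() - t0:.3f} s")
```

The pool is created once and reused so that thread start-up is not counted per call; even so, the per-chunk scheduling and the final concatenation are pure overhead when each element needs only a comparison and a multiply.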

11-25-2015 05:52 PM - edited 11-25-2015 05:53 PM


See if this is any faster. 😄

I would also use "Interpolate 1D Array" to get the interpolated value at a fractional index. Modify as needed.
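For readers unfamiliar with the idea, here is a small Python sketch of linear interpolation at a fractional index, which is the operation suggested above for a plain numeric array. It assumes the VI's result is a fractional index into a wavelength array (the thread doesn't show the exact math). The helper name `interpolate_at` is hypothetical, and clamping out-of-range indices to the array ends is an assumption of this sketch, not documented LabVIEW behavior.

```python
import math


def interpolate_at(values: list[float], fractional_index: float) -> float:
    """Linearly interpolate `values` at a (possibly fractional) index."""
    if not values:
        raise ValueError("values must be non-empty")
    # Assumption: clamp out-of-range indices to the first/last element.
    x = min(max(fractional_index, 0.0), float(len(values) - 1))
    lo = math.floor(x)
    hi = min(lo + 1, len(values) - 1)
    frac = x - lo
    # Weighted blend of the two neighbouring elements.
    return values[lo] + frac * (values[hi] - values[lo])


wavelengths = [500.0, 501.0, 502.0]
print(interpolate_at(wavelengths, 1.25))  # 501.25
```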

11-30-2015 10:25 AM


Thank you everyone for the suggestions.

Altenbach, yours did indeed run much faster. I started changing my function piece by piece to look like yours, running it after every change to see which changes gave the biggest improvement (it seemed to be the use of shift registers, which puzzles me). After all of the changes my function was still a factor of 2 slower, and I realized that the "Allow debugging" checkbox was to blame. Thank you for your help,

Gregory

11-30-2015 11:11 AM


Yes, debugging adds some overhead. Also make sure the subVI front panel is closed when testing. If performance is important, you could also try inlining the subVI (not tested).

One big difference between the code versions is that yours uses significantly more memory: it creates a Boolean array and two DBL arrays, all the same size as the input array.

Mine operates in place and uses only scalars held in shift registers. The only arrays are the inputs.

Sometimes array operations can be faster because they are better suited to SSE instructions, but here, creating all these extra large data structures just to throw them away a nanosecond later seems pointless. My code has an extremely small memory footprint, which is desirable even if there is no speed advantage. For extremely large inputs, yours will run out of memory much sooner because each of these arrays requires a contiguous block of free memory.
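To illustrate the memory argument in text form, the Python sketch below contrasts the two styles on a hypothetical thresholded, intensity-weighted centroid (the actual formula in Calc Central Wavelength.vi is not shown in the thread, so treat it as an assumption). The first function allocates a Boolean array and two float arrays the size of the input; the second keeps two running scalars, playing the role of shift registers. Both function names are hypothetical.

```python
def centroid_with_arrays(wavelengths: list[float], intensities: list[float],
                         threshold: float) -> float:
    # Three temporaries, each as long as the input:
    mask = [i > threshold for i in intensities]                      # Boolean array
    weighted = [w * i if m else 0.0
                for w, i, m in zip(wavelengths, intensities, mask)]  # DBL array
    kept = [i if m else 0.0 for i, m in zip(intensities, mask)]      # DBL array
    total = sum(kept)
    return sum(weighted) / total if total else float("nan")


def centroid_in_place(wavelengths: list[float], intensities: list[float],
                      threshold: float) -> float:
    # Two running scalars stand in for shift registers; no temporary arrays.
    weighted_sum = 0.0
    total = 0.0
    for w, i in zip(wavelengths, intensities):
        if i > threshold:
            weighted_sum += w * i
            total += i
    return weighted_sum / total if total else float("nan")


wl = [500.0, 501.0, 502.0, 503.0]
intensity = [0.0, 1.0, 3.0, 0.0]
print(centroid_with_arrays(wl, intensity, 0.5))  # 501.75
print(centroid_in_place(wl, intensity, 0.5))     # 501.75
```

Both give the same answer; the difference is that the scalar version's extra memory does not grow with the input size.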

In any case, all code versions are probably fast enough, even on a tiny Atom processor. What are your performance requirements?

11-30-2015 11:16 AM


Thank you, I will keep these points about memory in mind. There are no strict requirements; the VI Analyzer just told me that I had missed some opportunities to parallelize For Loops, and I was surprised that enabling parallelism slowed it down!

03-01-2018 07:27 AM


A little late to the party, but something in your post caught my attention:

According to LabVIEW, enabling 'Allow debugging' actually forces the loop to execute sequentially. I'm not sure whether this tip was shown in your version, but I suspect this was another reason why parallelism was slower.

Sorry to have woken up a sleeping post.

Greetings,

D