Doubt regarding Graphs

03-13-2020 08:36 AM

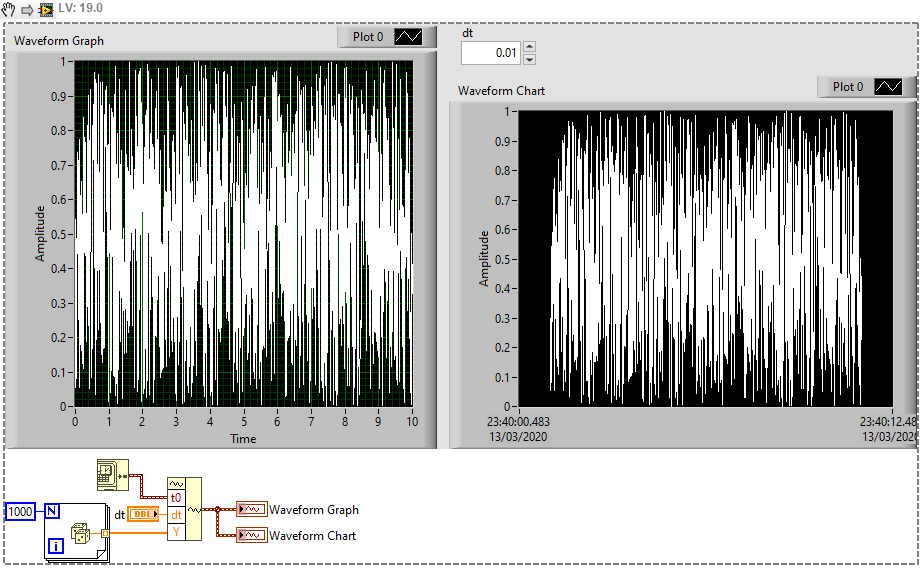

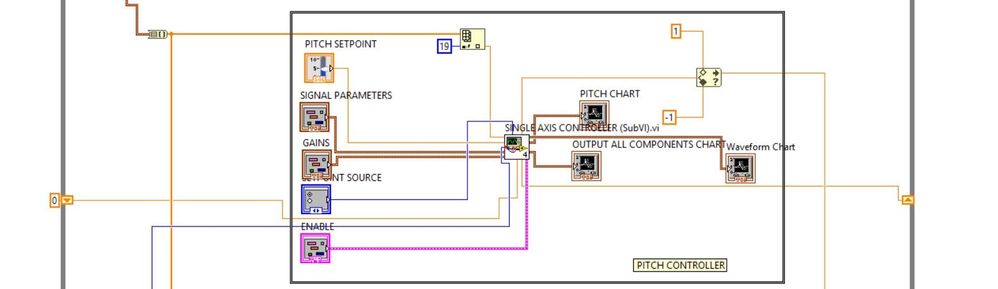

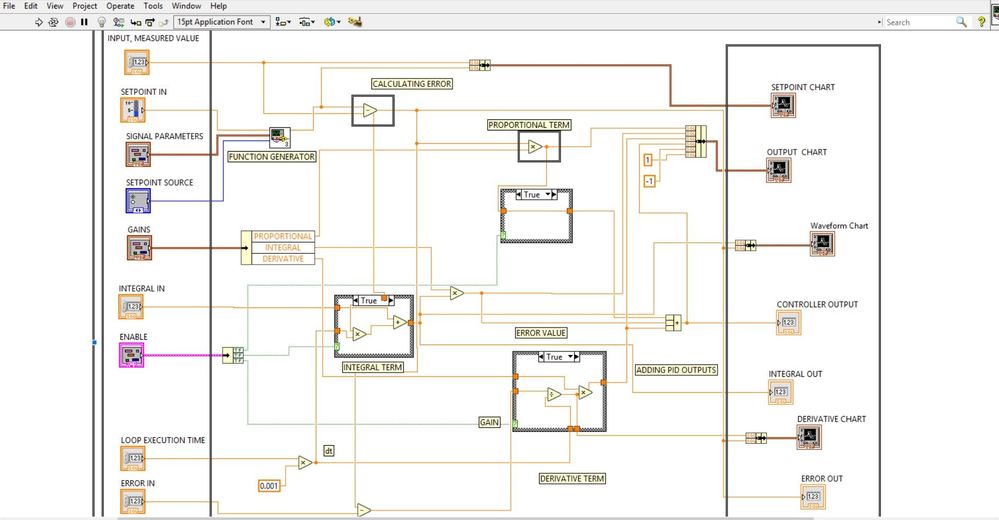

Okay, so I've posted the whole code here and I'm confused. It's basically a PID controller. I get data from the simulator at 20 packets/second (20 Hz, so dt = 50 ms), but the dt is not exact, so I measure the loop iteration time as shown below and get values between 45 and 55 ms. I pass this value to my subVI, and it shows similar values there. Since this value feeds the integral and derivative parts of my subVI, should I be multiplying it by 0.001 as I have done below?

And looking at the waveform chart below, what is the unit of time here? Is it seconds or milliseconds?

03-13-2020 09:42 AM

If you are plotting a Waveform Chart or Waveform Graph (not an XY Graph) and you haven't changed the "X Scale.Offset" or "X Scale.Multiplier" properties (either via a property node or the right-click Properties dialog of the chart/graph), then the X axis shows the sample number when you plot an array of doubles (or a bundle of arrays, which appears to be what you're doing).

If you have a known (constant) dt, you can use it (in seconds) as the "multiplier" so that your X axis displays time in seconds.
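The offset/multiplier behaviour can be modelled in a few lines of Python (an analogy, not LabVIEW code): the X value shown for point i is offset + i × multiplier. The helper name `x_axis_values` is hypothetical, and the sketch assumes a constant dt.

```python
def x_axis_values(num_samples, offset=0.0, multiplier=1.0):
    """Model of a Waveform Chart/Graph X scale (hypothetical helper).

    With the defaults (offset 0, multiplier 1) the X value is just the
    sample number; setting multiplier = dt in seconds gives time in s.
    """
    return [offset + i * multiplier for i in range(num_samples)]


print(x_axis_values(5))                   # default scale: sample numbers
print(x_axis_values(5, multiplier=0.05))  # dt = 50 ms -> X axis in seconds
```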

Alternatively, if you use a Waveform input type, it will automatically set the axis values:

Here I extended the timestamps on both sides so you can see that it is recognised as a 10-second chunk of data.

Note that this code takes much less than 1 s to generate the data, so there's no guarantee that the times are real! The graph only plots what you tell it.

03-13-2020 09:59 AM

Thank you for the fast reply.

What will the sample number be? My dt is continuously changing (it's the loop iteration time). What should I do now?

Also, is the 0.001 that I've multiplied my dt by correct?

03-13-2020 10:38 AM

So multiplying your "loop time" from the tick count/wait functions by 0.001 will indeed give times in seconds.
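To illustrate where that 0.001 ends up, here is a minimal discrete PID update written in Python rather than LabVIEW, assuming the measured loop time arrives in milliseconds and the integral and derivative terms expect seconds. The helper `pid_step`, its gains, and the `(integral, previous_error)` state tuple are hypothetical names for this sketch, not anything taken from the original VI.

```python
def pid_step(error, dt_ms, state, kp=1.0, ki=0.5, kd=0.1):
    """One discrete PID update (hypothetical helper).

    error -- setpoint minus measured value for this iteration
    dt_ms -- measured loop time in milliseconds (e.g. 45-55 ms)
    state -- (integral, previous_error) carried between iterations,
             much like a shift register in a LabVIEW loop
    """
    integral, prev_error = state
    dt = dt_ms * 0.001                      # ms -> s: the 0.001 factor
    integral += error * dt                  # rectangle-rule integral
    derivative = (error - prev_error) / dt  # backward-difference derivative
    output = kp * error + ki * integral + kd * derivative
    return output, (integral, error)


state = (0.0, 0.0)
for error, dt_ms in [(1.0, 50), (0.8, 45), (0.5, 55)]:
    out, state = pid_step(error, dt_ms, state)
    print(f"dt = {dt_ms} ms -> output = {out:.3f}")
```

Without the 0.001, the integral term would grow 1000 times too fast and the derivative term would be 1000 times too small, which would throw off any gains tuned for seconds.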

However, be careful: if you have hardware with timed acquisition (it doesn't look like you do yet, but maybe in the future?), the acquisition time and the loop iteration time don't have to be the same.

Consider the following:

Here I have a fictional task in which I acquire 1000 samples per iteration at 1 kHz. Assuming I have enough buffer space, this will acquire at exactly 1 kHz (within the timing accuracy of the DAQ device).

However, my loop will take different amounts of time.

In the first iteration, the loop takes 1200 ms. In the next iteration I need 1000 samples, but by the time it starts, 200 are already in the buffer. So I 'wait' for another 800 samples (taking 800 ms) while the loop wait runs for 700 ms; with this code the iteration takes as long as the longer of the two (so 800 ms). After two iterations, 2000 ms will have passed and the mean iteration time will be 1000 ms, as expected. But the iteration time alternates: 1200 ms, then 800 ms.
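The arithmetic of that alternating 1200/800 ms pattern can be reproduced with a small Python simulation. It is a hypothetical model, not NI-DAQmx code: it assumes acquisition starts at t = 0 into a large enough buffer, that the loop's other code alternates between 1200 ms and 700 ms, and that the read and the wait run in parallel, so each iteration lasts as long as the slower of the two.

```python
def hardware_timed_iterations(waits_ms, samples_per_read=1000, rate_hz=1000):
    """Simulate loop timing with a buffered, hardware-timed acquisition.

    Hypothetical model: samples stream into the buffer from t = 0 at
    rate_hz; each iteration's read and wait run in parallel, so the
    iteration ends when the slower of the two finishes.
    """
    ms_per_read = samples_per_read * 1000 / rate_hz  # 1000 samples @ 1 kHz = 1000 ms
    t = 0.0
    durations = []
    for i, wait in enumerate(waits_ms):
        data_ready = (i + 1) * ms_per_read  # when this block is fully in the buffer
        end = max(data_ready, t + wait)
        durations.append(end - t)
        t = end
    return durations


durations = hardware_timed_iterations([1200, 700] * 2)
print(durations, sum(durations) / len(durations))
```

Because the samples keep arriving on the device clock, the long and short iterations average out and the data itself has no gaps.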

If I switch to software-timed (on-demand) sampling, this is no longer true. Now each acquisition always takes 1000 ms (because acquisition only starts when requested), but half of the loop iterations are slower. As a result, there will be a 200 ms gap in the data between iterations 0 and 1, 2 and 3, 4 and 5... not ideal!
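The same kind of hypothetical model, with each read starting on demand instead of from a running buffer, shows where the 200 ms gaps come from. Again this is plain Python under stated assumptions (1000 ms per read, waits alternating 1200/700 ms, read and wait in parallel), not DAQ driver code.

```python
def software_timed_gaps(waits_ms, read_ms=1000):
    """Simulate on-demand (software-timed) reads and report data gaps.

    Hypothetical model: each read starts when its iteration starts and
    takes read_ms; the wait runs in parallel. Returns the iteration
    durations and the gap between the end of one block of data and the
    start of the next.
    """
    t = 0.0
    durations, blocks = [], []
    for wait in waits_ms:
        blocks.append((t, t + read_ms))  # samples cover [start, start + read_ms)
        end = t + max(read_ms, wait)
        durations.append(end - t)
        t = end
    gaps = [nxt[0] - prev[1] for prev, nxt in zip(blocks, blocks[1:])]
    return durations, gaps


durations, gaps = software_timed_gaps([1200, 700] * 2)
print("iteration times:", durations)
print("gaps in data:", gaps)
```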

So make sure you consider what you're actually timing. If you have hardware timing for acquisition, the loop rate might not be critical (as far as I know, this is basically the main advantage of hardware timing).

03-13-2020 10:50 AM

Thank you for your response. I only partly understood what you said, but I guess the multiplication by 0.001 is right.

Now, back to the graphs. Since my While Loop iteration time (which is my dt) continuously changes (I also added a 1 ms wait to reduce CPU load), how do I get the x-axis of my graph to show time in seconds? What should my multiplier be?

03-13-2020 11:07 AM - edited 03-13-2020 11:15 AM

@bartboi wrote:

Thank you for your response. But I partly understood what you said. However, that multiplication(0.001) is right I guess.

Now back to the graphs again. As my while loop iteration time continuously changes(also giving a wait time of 1ms to unload cpu), which is dt, how do I get the x-axis in my graph to show time in seconds? What should be my multiplier term?

Oops, sorry. I got distracted midway through my last reply, and when I came back to finish it I forgot what I meant to write.

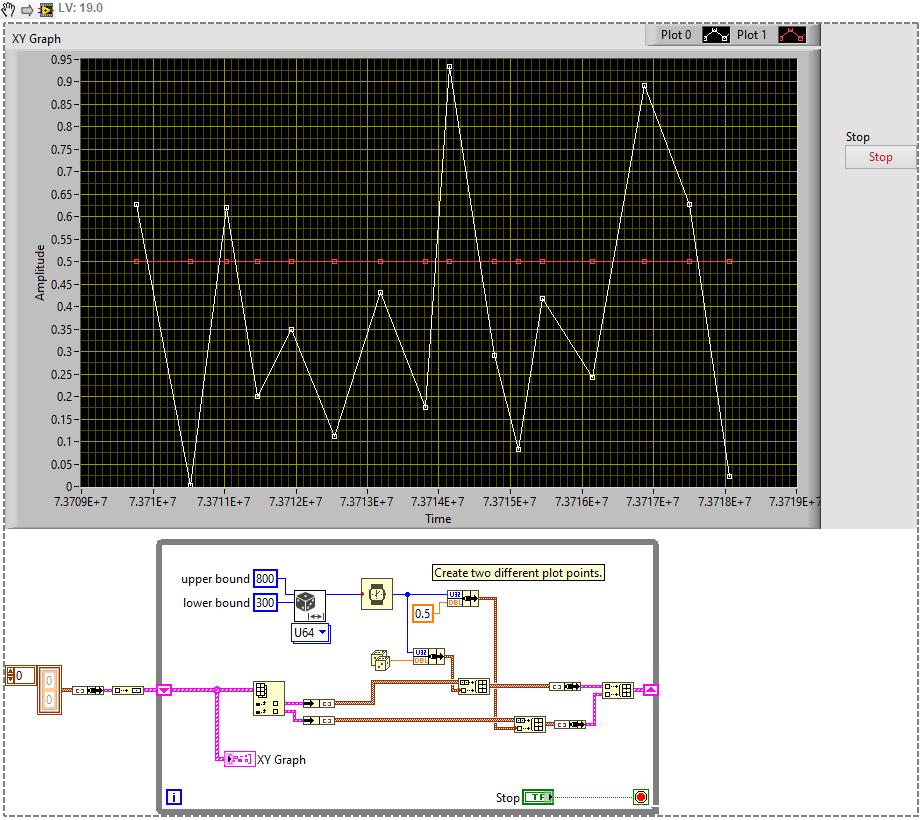

If you have a non-constant dt, you can't use a Waveform Graph or Chart (at least not easily). Instead, you should use an XY Graph.

XY Graphs take different inputs: you specify an X value for each Y value. You can do this using:

- An array of bundled pairs of values, X and Y (where each cluster is a single point)

- A cluster of two arrays, X and Y (one array of X values and one array of Y values). The two arrays should be the same length.

- An array of complex values (Re = x, Im = y).
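The three input layouts in the list above can be mirrored in Python as plain data structures. This is only an analogy (Python tuples stand in for LabVIEW clusters, and the variable names are hypothetical); it shows that all three carry the same (x, y) information and how to convert between them.

```python
# Three ways to describe the same XY plot, mirroring the list above.
xs = [0.0, 0.45, 1.0]  # X values (e.g. time in s, unevenly spaced)
ys = [1.0, 0.8, 0.5]   # Y values

# 1. Array of (x, y) pairs: each tuple is one point.
points = list(zip(xs, ys))

# 2. One pair of arrays (X array, Y array) of the same length.
arrays = (xs, ys)

# 3. Array of complex numbers: real part = x, imaginary part = y.
complex_points = [complex(x, y) for x, y in points]


def from_complex(cs):
    """Convert complex points back to separate X and Y lists."""
    return [c.real for c in cs], [c.imag for c in cs]


assert len(arrays[0]) == len(arrays[1]), "X and Y arrays must match in length"
print(points)
print(from_complex(complex_points))
```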

See the detailed help for more information, especially regarding multiple plots if you want them (this typically involves more bundling and array building).

Since you're limited to a graph (not a chart), you also need to store the history yourself, typically using a shift register.

Here's an example with two plots, using an array of clusters, each holding an array of points. I don't claim this is the easiest or most efficient way to store multiple plots for XY graphs (I'd suggest looking at complex numbers, or the separate X and Y arrays option), but it is convenient for an example.

Here I use the millisecond timer as the clock/time value, but you could instead calculate the offset from a start time, etc. The dt varies between 300 and 800 ms using the Random Number (Range) VI.
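A rough Python analogue of that example, assuming a simulated millisecond counter stands in for the LabVIEW millisecond timer: each iteration adds a random dt between 300 and 800 ms, and the growing X and Y lists play the role of the shift register. The function name and the per-plot `(X list, Y list)` layout (the separate X and Y arrays option) are choices made for this sketch, with times stored as seconds from the start.

```python
import random


def simulate_xy_history(iterations, seed=0):
    """Build XY-graph data for two plots with a varying dt (hypothetical).

    A simulated millisecond counter advances by a random dt in
    [300, 800] ms each iteration; the history lists grow like a shift
    register would. X values are seconds relative to the start.
    """
    rng = random.Random(seed)
    t_ms = 0.0                                          # simulated millisecond timer
    history = {"plot 1": ([], []), "plot 2": ([], [])}  # name -> (X list, Y list)
    for _ in range(iterations):
        t_ms += rng.uniform(300, 800)  # dt varies, like Random Number (Range)
        t_s = t_ms * 0.001             # ms -> s
        for name, y in (("plot 1", rng.random()), ("plot 2", rng.random() + 1)):
            x_list, y_list = history[name]
            x_list.append(t_s)
            y_list.append(y)
    return history


h = simulate_xy_history(5)
for name, (x, y) in h.items():
    print(name, [round(v, 3) for v in x])
```

Each plot keeps its own X values, so unevenly spaced points land at their true times on the X axis.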

03-13-2020 11:32 AM - edited 03-13-2020 11:35 AM

Thank you for replying, but I'm stuck again! I think this will complicate everything.

Okay, so if I treat my dt as a constant 50 ms, should I just set the graph's multiplier to 20? Will I then get the time in seconds?

03-14-2020 12:08 AM

If your dt is 50ms, then that's 0.05 seconds per sample. So set the multiplier to 0.05 and you'll get seconds.

More generally, if you choose the multiplier so that

$$t(\text{sample } N+1) - t(\text{sample } N) = (1\ \text{sample}) \times \text{multiplier} = dt$$

it will give you what you want. Not sure if that is a better explanation or not, but I hope it helps a bit.
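As a worked check with the numbers from this thread (dt = 50 ms, i.e. 20 samples per second), the multiplier is the time per sample, not the sample rate:

$$\text{multiplier} = dt = \frac{1}{f_s} = \frac{1}{20\ \text{Hz}} = 0.05\ \text{s}$$

$$x_{20} = 20 \times 0.05\ \text{s} = 1\ \text{s}, \qquad \text{whereas a multiplier of } 20 \text{ would place sample } 20 \text{ at } 20 \times 20 = 400.$$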