Displaying large data sets

Solved! 11-17-2016 01:06 PM - edited 11-17-2016 01:11 PM

Hello Everyone,

I am having some difficulty managing and displaying large data sets for post-test review. Attached is my .vi. It seems to get hung up at the For Loop that decimates the arrays, which I added so that I am not trying to display 22k points of data on a 1k-wide graph. I have the decimation algorithm inside a For Loop because I do not know the total number of columns in each file. Really I do, but the Engineering department changes it all the time, so I would like to make it adjustable/auto-configurable.

Is there something that I am missing when trying to decimate the arrays of data? I am new to displaying this many data points with LabVIEW. Typically I do this in MATLAB, but I don't have that option this time around.

Attached is a sample file that I am trying to read, as well as my .vi.

Any help would be appreciated.

Thanks,

~Dan

11-17-2016 01:37 PM

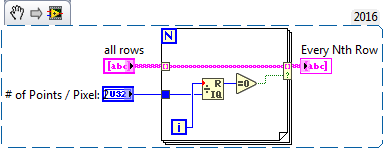

If what you want to do is to take your full array with all the rows and return a "decimated" array of every Nth row, a simple way to do this is with a For Loop with a conditional output tunnel:

Note that this returns the first, N+1th, 2N+1th, etc. points. I recommend making this a sub-VI, maybe called "Decimate All Rows" with the inputs and outputs as shown plus, of course, the Error Line in the lower left and lower right corners of the 4-2-2-4 connector pattern ...
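For readers who think in text-based code, the same idea can be sketched in Python. This is only an analogy to the For Loop with a conditional output tunnel, not LabVIEW code; the function name `decimate_rows` and the sample data are made up for illustration.

```python
def decimate_rows(rows, n):
    """Keep every n-th row of a 2D list, starting with the first row."""
    if n < 1:
        raise ValueError("n must be at least 1")
    kept = []
    for i, row in enumerate(rows):
        # Plays the role of the conditional tunnel: a row only passes
        # through when its index is a multiple of n (rows 0, n, 2n, ...).
        if i % n == 0:
            kept.append(row)
    return kept


# Hypothetical data: 10 rows, 2 columns.
data = [[i, i * 10] for i in range(10)]
print(decimate_rows(data, 4))  # [[0, 0], [4, 40], [8, 80]]
```

The output has the same number of columns as the input, so it works for an array that is several columns wide.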

Bob Schor

11-17-2016 02:03 PM

11-17-2016 02:55 PM

Bob,

Essentially this is what I would like to do. The only difference is that the array that I am looking to decimate is several columns wide and possibly millions of rows long.

Thanks,

~Dan

11-17-2016 03:00 PM

Thank you for the reply. I took a look at this, and I assume it does what I am trying to do. The issue is that when I replace my decimation algorithm with this function, my overall .vi still doesn't run. An updated version is attached, along with the data set again.

Thank you for the help, this does simplify the block diagram quite a bit.

~Dan

11-17-2016 09:28 PM

OK, so you have a file with multiple columns and millions of rows, and you want to "decimate" it by, let's say, isolating every hundredth row, all without reading the entire huge file into memory. I'll assume you have room in memory for 100 rows plus the 1% of the file that results from keeping one of every hundred rows.

Here's what you do: take advantage of Read Delimited Spreadsheet to read 100 rows at a time (in a While loop). Throw away all but the first row and export that through an Indexing tunnel. You now have the entire file read, but only the 1% that you "decimated" in memory to do with what you will. Here's an example:

If you read the Help on Read Delimited Spreadsheet, you should be able to figure out how this works.
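As a rough text-based analogy (Python, not LabVIEW), the chunked read could look like the sketch below. It assumes a tab-delimited file of numbers with no header row; the function name `decimate_stream` and the in-memory sample are hypothetical.

```python
import csv
import io
from itertools import islice


def decimate_stream(lines, chunk_rows=100, delimiter="\t"):
    """Read chunk_rows rows at a time and keep only the first of each chunk."""
    reader = csv.reader(lines, delimiter=delimiter)
    kept = []
    while True:
        # Pull at most chunk_rows rows; only this chunk is held in memory.
        chunk = list(islice(reader, chunk_rows))
        if not chunk:
            break  # end of file
        # Throw away all but the first row of the chunk.
        kept.append([float(value) for value in chunk[0]])
    return kept


# Hypothetical stand-in for a large file: 250 rows, 2 columns.
sample = io.StringIO("".join(f"{i}\t{i * 2}\n" for i in range(250)))
result = decimate_stream(sample, chunk_rows=100)
print(result)  # [[0.0, 0.0], [100.0, 200.0], [200.0, 400.0]]
```

With a real file, an open file handle can be passed in place of the `io.StringIO` object, since `csv.reader` accepts any iterable of lines.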

Bob Schor

11-18-2016 02:47 PM

Hello Bob,

I took a spin on your solution to find the overall problem and fix my original .vi. I made a rookie mistake: I had not realized that auto-indexing on a For Loop indexes the rows of a 2D array, not the columns. This was the root of my problems. Once I solved this, I took your solution and added a feedback loop that checks whether the graph's ranges have changed and updates the decimation factor of the array, so I get better resolution as I zoom in.

Thank you for the help,

~Dan

11-18-2016 02:55 PM

Hello All,

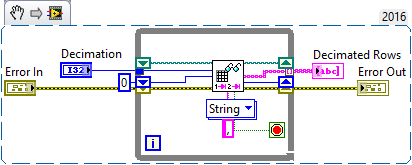

Ultimately I took a mixture of several different solutions to come up with the answer to my memory issue. I attached the decimation portion of the .vi to this post for others to use as they need to.

Since I didn't know how many columns I would need to plan on, I ended up using a For Loop to cycle through the columns of the input string array. The main issue was that I made a newbie mistake and assumed that the Auto-Indexing function on a For Loop would index the columns and not the rows. This is why I ended up getting an out-of-memory error: my smallest sample size is 22563 rows, and I was trying to auto-index, populate a 22563 x 22563 array, and graph it. This ends up being a total of 509,088,969 data points, all with 64-bit precision, a total of 32,581,694,016 bits (about 4.07 GB) of data.
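The row-versus-column behaviour has a close parallel in Python, shown here purely as an analogy: looping over a 2D list hands you one row per iteration, and you have to transpose to get one column per iteration. The small `table` is made-up data; the arithmetic at the end reproduces the numbers above.

```python
# Hypothetical 4-row, 3-column table.
table = [
    [1.0, 10.0, 100.0],
    [2.0, 20.0, 200.0],
    [3.0, 30.0, 300.0],
    [4.0, 40.0, 400.0],
]

# Looping over a 2D structure yields rows, so this runs 4 times, not 3.
rows_seen = [row for row in table]

# Transposing first makes each iteration yield one column instead.
columns_seen = [list(column) for column in zip(*table)]

print(len(rows_seen), len(columns_seen))  # 4 3
print(columns_seen[0])  # [1.0, 2.0, 3.0, 4.0]

# Size of the accidental 22563 x 22563 array of 64-bit values.
n_rows = 22563
points = n_rows * n_rows
bits = points * 64
gigabytes = bits / 8 / 1e9
print(points, bits, round(gigabytes, 2))  # 509088969 32581694016 4.07
```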

Once I solved this issue by transposing the array at the input, it worked great. I then used a while loop and a case statement with a feedback loop so that I could detect when the user zoomed in (or out) on the graph. It then recalculates the decimation factor, so the user gets better or worse resolution on the data set without losing the original data.
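One way the recalculation could work is sketched below in Python. This is an assumption about the logic, not a transcription of the attached .vi: the names `decimation_factor` and `decimate_visible`, the 1000-pixel plot width, and the use of sample indices for the visible range are all illustrative choices.

```python
import math


def decimation_factor(visible_points, plot_width_px=1000):
    """Smallest step that keeps the plotted points at or below the plot width."""
    if visible_points <= 0 or plot_width_px <= 0:
        raise ValueError("both arguments must be positive")
    return max(1, math.ceil(visible_points / plot_width_px))


def decimate_visible(samples, x_min, x_max, plot_width_px=1000):
    """Decimate only the samples inside the visible index range [x_min, x_max)."""
    start = max(0, x_min)
    stop = min(len(samples), x_max)
    window = samples[start:stop]  # the original samples are never modified
    if not window:
        return []
    factor = decimation_factor(len(window), plot_width_px)
    return window[::factor]


samples = list(range(22563))  # stand-in for one column of the data

zoomed_out = decimate_visible(samples, 0, 22563)   # whole data set, step of 23
zoomed_in = decimate_visible(samples, 5000, 5500)  # 500 points, step of 1
print(len(zoomed_out), len(zoomed_in))  # 981 500
```

Calling `decimate_visible` again whenever the graph's X range changes mirrors the feedback loop described above: zooming in shrinks the window, the factor drops toward 1, and more of the original detail is shown.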

Hope this helps someone else in the future.

~Dan