
Controlling Virtual Keyboard using eye signals

Solved! 07-12-2020 02:55 AM


Hello everyone,

I want to make a virtual keyboard that can be controlled using eye signals.

The virtual keyboard will have a cursor that moves up, down, left, and right depending on the eye movements, and it will click when the eyes blink.

I have searched for examples of virtual keyboards in LabVIEW, but almost all of them use buttons, so when a signal arrives, the corresponding button is clicked directly.

How can I make a virtual keyboard with a cursor that can move?

I haven't made the VI yet because I don't know how to do it, but I have attached an example of the virtual keyboard that I want to make.

Thank you.

Regards

Jenni


07-12-2020 06:59 AM


Hi, Jenni.

For a brief period, I was associated with the NIH's National Eye Institute, and I know a little bit about eyes and their movements (did you know that the eyes can rotate about all three spatial axes?). From the point of view of LabVIEW, coming up with a way to "push a virtual key" seems to me a much simpler problem than measuring "eye signals" (or maybe you mean something like "eye blinks" rather than "eye movements", i.e. direction of gaze). Sounds like an interesting Thesis Project in Biomedical Engineering.

Bob Schor

07-12-2020 07:21 AM


Hello Bob, thank you for replying.

Yes, my research is actually about electrooculography, so I want to control a virtual keyboard using gaze direction. But I have run into an obstacle building the virtual keyboard.

On the LabVIEW front panel, I only found buttons for building the keyboard, but buttons can't be moved; they can only be clicked. I need to know whether there are other options for making a virtual keyboard without using buttons.

I hope you can understand what I mean 😅

Regards,

Jenni

07-12-2020 08:09 AM


What makes your particular problem "tricky" is the various "mappings" that LabVIEW uses. If you go looking for "Set Cursor Position", you can find a function that will put the cursor at a particular position relative to the monitor screen, not relative to the LabVIEW object in the working LabVIEW window. I might not be saying this correctly, but assume you have a Front Panel that takes up a region 200 by 200 pixels, not counting the Title, Menu Bar, Scroll Bars, etc. Let's say you have this more-or-less in the middle of your Monitor. [I'm assuming a one-monitor system -- it gets more complicated with multiple monitors ...].

Now put an object, say a Boolean Control, in the middle of this window, at location 100, 100. You can use a Property Node of the Control to get its Position, and it will return a value near 100, 100. But if you do a SetCursorPosition, it will go to 100, 100 on your Monitor, near the upper-left-hand corner, and put the Cursor there, even though your control is in the center of your Monitor.

There are a bunch of other Property Nodes you can (and must!) play with to take into account the location of your VI's Front Panel relative to the Screen, and possibly other "complications", before you can put the cursor where you want it (LabVIEW will always put the cursor where LabVIEW wants to put it ...).
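LabVIEW code is graphical, so here is the arithmetic of that mapping sketched in Python instead. It is a minimal sketch assuming a single monitor and that you have already read, via Property Nodes, the screen position of the panel's content area (inside the title bar, menu bar, and borders) and the panel's current scroll origin. The class and function names are hypothetical, not LabVIEW APIs.

```python
from dataclasses import dataclass


@dataclass
class PanelGeometry:
    """Hypothetical geometry values, as you might read them from Property Nodes."""
    content_left: int  # screen x of the panel's content area (inside borders/title bar)
    content_top: int   # screen y of the panel's content area
    origin_x: int = 0  # panel x coordinate shown at the content area's left edge (scrolling)
    origin_y: int = 0  # panel y coordinate shown at the content area's top edge


def panel_to_screen(geom, x, y):
    """Convert panel coordinates (like a control's Position) to screen coordinates."""
    return (geom.content_left + x - geom.origin_x,
            geom.content_top + y - geom.origin_y)


def control_center_on_screen(geom, left, top, width, height):
    """Screen point at the center of a control, where you would move the cursor."""
    return panel_to_screen(geom, left + width // 2, top + height // 2)


# Example: a 200 x 200 panel whose content area starts at screen (860, 440)
geom = PanelGeometry(content_left=860, content_top=440)
print(panel_to_screen(geom, 100, 100))                 # (960, 540)
print(control_center_on_screen(geom, 90, 90, 20, 20))  # (960, 540)
```

The point of the example: a control reporting (100, 100) on the panel lands near the screen center, not near the upper-left corner, once the panel's own screen offset is added.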

This will be an "interesting" learning experience. Apply your Engineering talents and also "play scientist" -- form hypotheses, do some research, do some experiments (i.e. write little Test VIs and see what happens -- nothing is going to break ...).

Bob Schor

07-13-2020 11:40 AM


Hi Jenni,

Your research on electrooculography sounds very interesting. I just went through some SlideShare material and learned that electrooculography is a technique for measuring the standing potential between the cornea and the retina. This standing potential is in the millivolt range, so if you want to move LabVIEW controls on the front panel, it basically turns into a data acquisition problem.

Hence you need to choose a suitable instrumentation amplifier and feed the amplified signal to data acquisition hardware. In the LabVIEW program, you then need to map your eye movements to voltage.

The rest of the program uses the Position property of the control to place it correctly based on the voltage (which in turn corresponds to eye movement).
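One simple way to map voltage to a control position is a two-point linear calibration: record the voltage while the subject looks at two targets at known pixel positions, then interpolate between them. This Python sketch assumes the voltage-to-gaze relationship is roughly linear over the range used; the function name and calibration numbers are made up for illustration.

```python
def make_linear_map(v1, p1, v2, p2, p_min, p_max):
    """Return a function mapping a voltage to a pixel position.

    (v1, p1) and (v2, p2) are calibration points: the voltage measured while
    looking at a target placed at pixel position p1 (resp. p2).
    Results are clamped to [p_min, p_max] so the control stays on screen.
    """
    if v1 == v2:
        raise ValueError("calibration voltages must differ")
    gain = (p2 - p1) / (v2 - v1)  # pixels per unit of voltage

    def to_pixel(v):
        p = p1 + gain * (v - v1)
        return int(round(min(max(p, p_min), p_max)))

    return to_pixel


# Hypothetical calibration (voltages in mV): -0.5 mV at x = 0, +0.5 mV at x = 1000
to_x = make_linear_map(-0.5, 0, 0.5, 1000, 0, 1000)
print(to_x(0.0), to_x(0.25), to_x(2.0))  # 500 750 1000
```

The resulting pixel value is what you would write to the control's Position property; clamping keeps a large artifact (such as a blink) from throwing the control off the panel.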

Hope I am pointing you in the right direction.

07-13-2020 10:25 PM


Hello Srikrishna,

Thank you for replying.

I have finished the instrumentation; I use an analog filter to record the EOG signals, and now I have the EOG data.

I made a VI to classify the signals, using LEDs as indicators. I have attached the program that I made.

What confuses me now is building the virtual keyboard that these EOG signals will control.

Regards

Jenni

07-14-2020 07:04 AM


As someone who has recorded eye movements, I'm very curious about the "Up and Down Signal" that you attached. Could you please tell me what the two channels represent, whether (or not) they represent precisely-spaced samples, and if so, what sampling rate was used, and the nature of the "analog filter" used to record the EOG signals. Was the subject asked to do anything, i.e. stare at one point in space, track an object moving up and down, or left and right, etc.? Are the two channels "Horizontal" and "Vertical", or "Left Eye Horizontal" and "Right Eye Horizontal"?

I know that this isn't your question, but as a scientist, before I can make suggestions on how to use data (particularly data from human subjects), I need to have confidence that the data have "more signal than noise" ...

Bob Schor

07-14-2020 10:19 AM


Thank you for your reply, Bob.

I'll try to answer your questions one by one.

1. Could you please tell me what the two channels represent?

The two channels represent the voltage signals from the EOG sensor. When I record the EOG signal, I use 4 electrodes on my face (around my eyes). The electrodes are Ch1, Ch2, reference, and ground.

The data come from Ch1 and Ch2.

2. Ch1 and Ch2 can be used to classify the type of eye movement. There are many ways to do that.

The way that I understand best is by using the polarity of the signals:

| Ch1 | Ch2 | Movement |
|-----|-----|----------|
| + | + | Down |
| - | - | Up |
| + | - | Right |
| - | + | Left |

Note that blinks have the same polarity as an Up movement, but they can be distinguished by their amplitude.

3. The analog filter removes most of the noise, but some noise is caused by involuntary blinks. To avoid that, we usually use a threshold to discard signals within a predetermined range.

4. Was the subject asked to do anything, i.e. stare at one point in space, track an object moving up and down, or left and right, etc.?

The subject was only allowed to stare at the "normal position" and to move their eyes up, right, down, and left.

The head was fixed, and you must not overuse your facial muscles, because that can cause noise.
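The polarity table, the blink-by-amplitude rule, and the noise threshold can be combined into one small decision function. This Python sketch assumes each input is the peak amplitude of one detected eye-movement event on Ch1 and Ch2 (not a raw sample), and it interprets the threshold as a dead band around zero; `dead_band` and `blink_threshold` are placeholder values you would tune from your own recordings.

```python
def classify(ch1, ch2, dead_band=0.1, blink_threshold=1.0):
    """Classify one EOG event from the peak amplitudes of Ch1 and Ch2.

    Polarity rules (from the table): ++ Down, -- Up, +- Right, -+ Left.
    Blinks share the Up polarity but have a larger amplitude.
    dead_band and blink_threshold are placeholders (same units as ch1/ch2).
    Returns None when the event is too small to classify.
    """
    # Treat small amplitudes as noise: no confident polarity on that channel
    if abs(ch1) < dead_band or abs(ch2) < dead_band:
        return None
    if ch1 > 0 and ch2 > 0:
        return "Down"
    if ch1 < 0 and ch2 < 0:
        # Same polarity as Up; a larger excursion on either channel means a blink
        if min(ch1, ch2) <= -blink_threshold:
            return "Blink"
        return "Up"
    if ch1 > 0:  # here ch2 < 0
        return "Right"
    return "Left"  # ch1 < 0 and ch2 > 0


print(classify(0.5, -0.5))   # Right
print(classify(-1.5, -1.2))  # Blink
```

Keeping the rules in one function makes it easy to check the classification against recorded events before wiring it to a keyboard.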

My friend, who made the same virtual keyboard in Visual Studio, said that he made the keys using images arranged alphabetically, plus one transparent image that can be moved. Can this be done in LabVIEW?

Is it better to use a Ring or to import images in LabVIEW?
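Your friend's approach splits the keyboard into two parts: a grid of keys that never moves, and a cursor state (row, column) that eye events update. The Python sketch below shows only that state logic. The class name, the grid layout, and the idea of moving a transparent image by writing its Position property are assumptions for illustration, not a tested LabVIEW design. Event labels follow the polarity table above, with "Blink" selecting the highlighted key.

```python
MOVES = {"Up": (-1, 0), "Down": (1, 0), "Left": (0, -1), "Right": (0, 1)}


class GridKeyboard:
    """Hypothetical cursor model: static keys plus one movable highlight."""

    def __init__(self, rows):
        self.rows = [list(r) for r in rows]  # each string is one keyboard row
        self.row = 0
        self.col = 0

    def handle(self, event):
        """Apply one eye event; return the selected key on "Blink", else None."""
        if event == "Blink":
            return self.rows[self.row][self.col]
        d_row, d_col = MOVES.get(event, (0, 0))  # unknown events do nothing
        # Clamp so the highlight never leaves the keyboard
        self.row = min(max(self.row + d_row, 0), len(self.rows) - 1)
        self.col = min(max(self.col + d_col, 0), len(self.rows[self.row]) - 1)
        return None

    def highlight_position(self, key_width, key_height):
        """(left, top) for the highlight image, assuming equal-sized keys."""
        return (self.col * key_width, self.row * key_height)


kb = GridKeyboard(["ABCDEF", "GHIJKL"])
for event in ["Right", "Down"]:
    kb.handle(event)
print(kb.highlight_position(40, 40))  # (40, 40)
print(kb.handle("Blink"))             # H
```

Because the keys never move, the cursor logic can be tested with recorded events on its own; only the highlight's position needs to be written to the front panel.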

I hope you understand what I mean. Sorry for any grammatical errors.

Regards

Jenni

07-15-2020 08:03 AM


Well, while I remain skeptical that you are accurately recording eye positions (based on the data record you provided), I do have an idea about a Keyboard layout, which comes down to the task of moving the mouse cursor to a known position on the screen (i.e. over a Boolean Control labeled "A", or "B", or "C") and doing a Mouse Click on it.

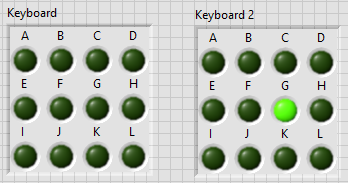

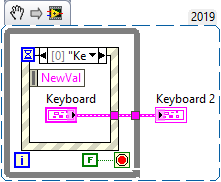

Here is a 12-letter "Keyboard" (I got lazy and stopped at 12 letters). The second image is actually a Snippet -- if you copy it to a LabVIEW 2019 (or 2020) Block Diagram, it should turn into runnable code.

The Keyboard is a cluster of Boolean controls. The controls are configured as Latch Until Released, so they stay off (except while you are "pressing" them), and the nature of the Event mechanism means that the "result", shown in Keyboard 2, has only the first key that was released lit (so you only need to look at the elements and figure out which one is True).

[Oops -- wire a True to the Stop indicator so the loop only runs once]. Since Keyboard 2 will only have a single Boolean "lit", it should be a simple exercise to figure out which one was pushed.
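Finding which element of Keyboard 2 is True amounts to searching an array of Booleans for its first True element. In LabVIEW that might be done with Cluster to Array followed by Search 1D Array; the Python below mirrors that idea, assuming the 12 keys are labeled A through L in cluster order (an assumption, since the labels appear only in the image).

```python
def pressed_key(states, labels):
    """Return the label of the first lit key, or None if no key is lit."""
    # Like Search 1D Array, look for the first True element
    try:
        index = states.index(True)
    except ValueError:
        return None  # no key lit (Search 1D Array would return -1)
    return labels[index]


labels = "ABCDEFGHIJKL"  # assumed key labels, in cluster order
states = [False] * 12
states[2] = True         # pretend the third key was released
print(pressed_key(states, labels))  # C
```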

Bob Schor

10-09-2021 03:40 PM


Hello jennichrni,

I would like to contact you about this topic. Please send me a message.

My email : sametgoktas9555@gmail.com