Consumer loop randomly slowing down and running out of memory

10-20-2020 12:09 AM - edited 10-20-2020 12:10 AM

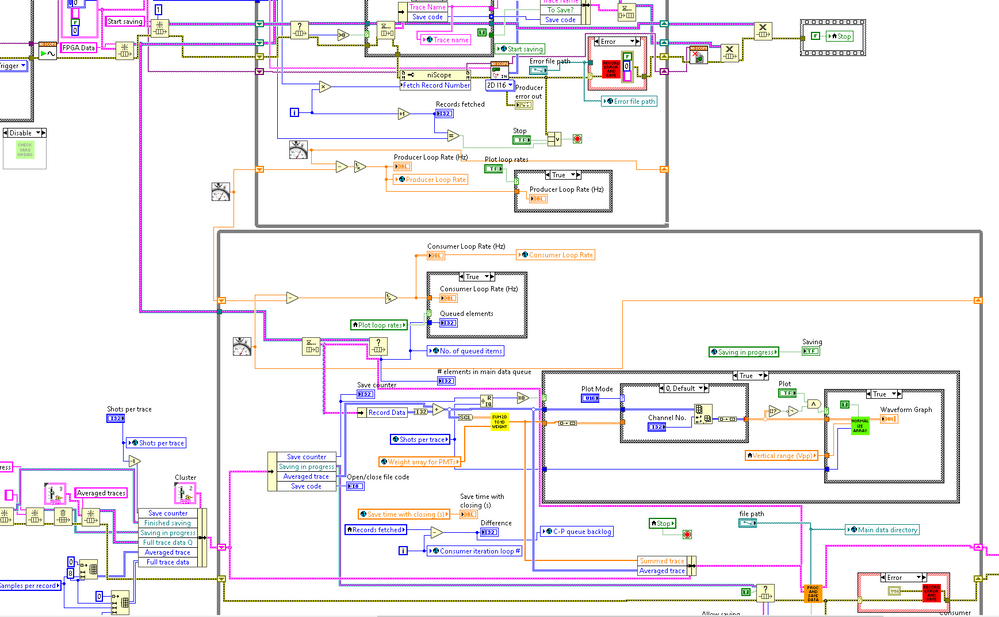

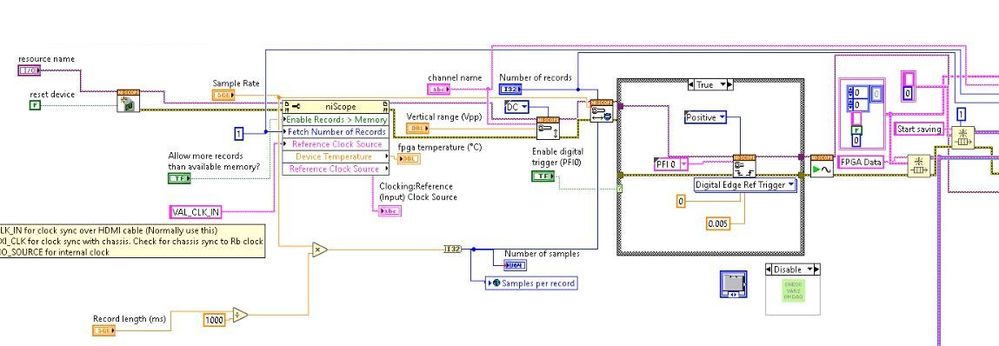

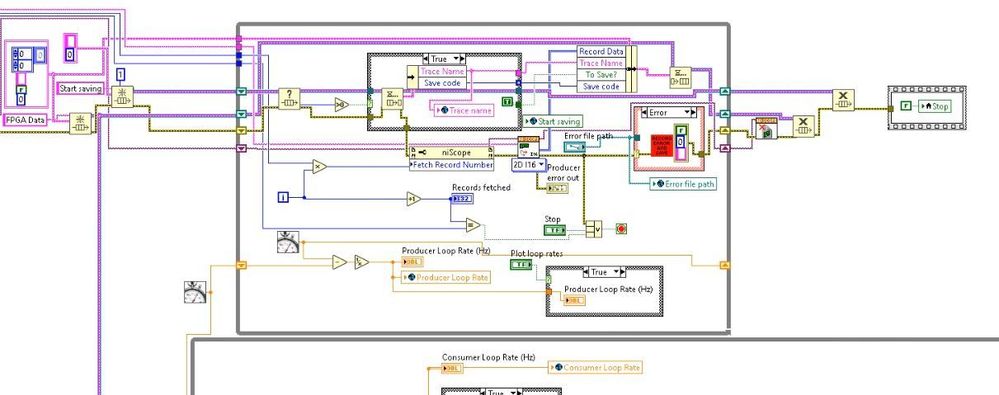

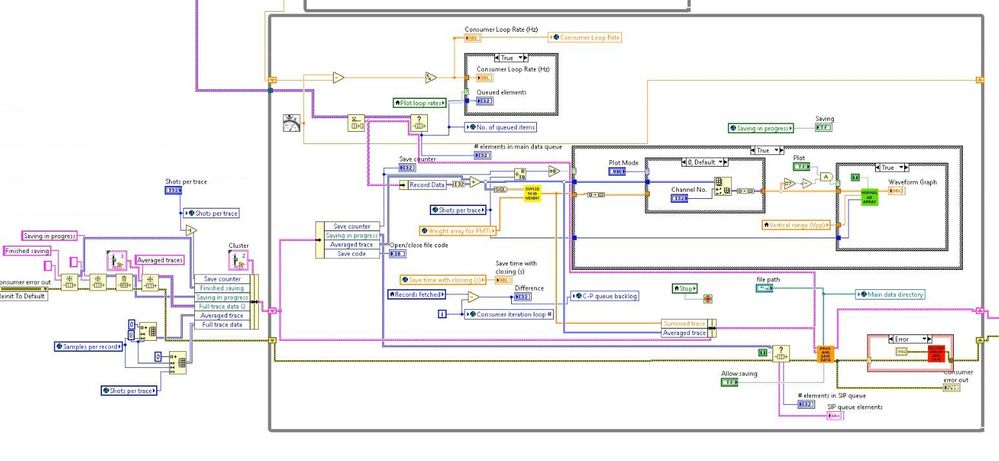

I have been working with a VI in LabVIEW 2019 to acquire and save data from a PXIe-5171R. The VI has many features that I am still developing, but it is basically a standard Producer-Consumer structure connected by a queue. Besides this main data queue, I also have several other queues running in the background to communicate with other top-level VIs. A steady 50 Hz trigger is sent to the DAQ. Here is a screenshot showing both the Producer and Consumer:

(The orange sub-VI in the bottom right of the Consumer loop is where the data processing and saving occurs.)

So far, the VI had been working smoothly for several months. However, I recently added a new, memory-intensive feature to the Consumer loop: placing the acquired data (1.4 million elements) into a larger array every loop iteration. This happens inside a sub-VI in the Consumer loop. After a few minutes of smooth running (with the Consumer looping at about the usual speed), the VI started behaving erratically: the Consumer loop would suddenly drop to about half the speed of the Producer, resulting in a growing backlog in the queue and finally an out-of-memory error. Even when I deactivated saving and disabled the new, memory-intensive feature, this behavior kept repeating itself, and the Consumer kept running slowly for no clear reason. I tried a few things to troubleshoot, none of which helped:

- Making sure to release all queues before restarting the VI.

- Trying to isolate the problem to a specific part of my Consumer loop code. I found that completely disabling the orange saving sub-VI would stop the behavior. However, I could not isolate any specific part of the sub-VI that was causing the problem: the moment I re-enabled any part of the block diagram in the sub-VI, the behavior would return.

- I found that a reliable way to "reset" is to quit LabVIEW and re-open the VI. It would then run smoothly again for a few minutes before repeating the same behavior.
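Because a LabVIEW block diagram can't be shown as text, the following Python sketch only does the arithmetic behind the symptom described above: when the Consumer dequeues at roughly half the Producer's 50 Hz rate, the queue backlog grows by the difference in rates. It assumes each 1.4M-sample record is stored as 2-byte I16 values; the 1 GB threshold is purely illustrative.

```python
# Arithmetic sketch: how fast a producer-consumer queue backlog grows when
# the consumer dequeues more slowly than the producer enqueues.
# Assumption: each record is 8 x 175k samples stored as 2-byte I16 values.
RECORD_SAMPLES = 8 * 175_000
BYTES_PER_SAMPLE = 2
RECORD_BYTES = RECORD_SAMPLES * BYTES_PER_SAMPLE


def backlog_growth(producer_hz: float, consumer_hz: float) -> float:
    """Backlog growth in bytes per second (0 if the consumer keeps up)."""
    net_records_per_s = max(producer_hz - consumer_hz, 0.0)
    return net_records_per_s * RECORD_BYTES


def seconds_until(limit_bytes: float, producer_hz: float, consumer_hz: float) -> float:
    """Seconds until the queued data alone reaches limit_bytes."""
    rate = backlog_growth(producer_hz, consumer_hz)
    return float("inf") if rate == 0 else limit_bytes / rate


if __name__ == "__main__":
    # Consumer at about half of the 50 Hz producer rate.
    print(f"backlog grows by {backlog_growth(50, 25) / 1e6:.0f} MB/s")
    print(f"1 GB queued after {seconds_until(1e9, 50, 25):.1f} s")
```

Under these assumptions the backlog grows by 70 MB/s, so even a large amount of free RAM only buys a few minutes once the Consumer falls behind.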

I have never encountered this sort of erratic behavior in LV before. In my previous experience, when I had memory leaks, I could usually solve them by disabling the memory-intensive parts of the VI. But here that doesn't seem to work. What could be causing this? Could it have something to do with the way LabVIEW executes sub-VIs (in this case, the saving sub-VI)? Or might it have to do with references to queues or other variables in memory that are not closed until LabVIEW quits?

10-20-2020 04:14 AM - edited 10-20-2020 04:15 AM

Nobody here can troubleshoot a truncated picture. What are the loop rates you see (in real units), and what do you want them to be? What determines the loop rate of the producer loop?

What hardware contains the PXIe-5171R? Where is the code running?

So you are growing an array by 1.4M elements at 50 Hz? How and why? How long do you think you can keep that up given your amount of RAM??? Is that data also shuffled around via shared variables? What is the CPU usage and network usage?
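To put rough numbers on the "how long can you keep that up" question, here is a Python sketch. It assumes an array that grows by one full record per 50 Hz trigger, either I16 (2-byte) or DBL (8-byte) samples, and the 24 GB of RAM the original poster lists later in the thread; real limits are lower, since the OS and LabVIEW itself also need memory.

```python
# Arithmetic sketch: how long an array that grows by one record per trigger
# can last. Assumptions: each trigger appends a full 1.4M-sample record and
# all 24 GB of RAM is available to the array (optimistic).
SAMPLES_PER_RECORD = 8 * 175_000
TRIGGER_HZ = 50
RAM_BYTES = 24e9


def seconds_to_fill(ram_bytes: float, bytes_per_sample: int,
                    trigger_hz: float = TRIGGER_HZ) -> float:
    """Seconds until the growing array alone occupies ram_bytes."""
    growth_bytes_per_s = SAMPLES_PER_RECORD * bytes_per_sample * trigger_hz
    return ram_bytes / growth_bytes_per_s


if __name__ == "__main__":
    for name, size in (("I16", 2), ("DBL", 8)):
        print(f"{name}: ~{seconds_to_fill(RAM_BYTES, size):.0f} s to fill 24 GB")
```

With these assumptions the I16 case fills 24 GB in roughly 171 s and the DBL case in roughly 43 s.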

10-20-2020 08:27 AM - edited 10-20-2020 08:34 AM

@altenbach wrote:

Nobody here can troubleshoot a truncated picture.

Sorry about that. I will include a fuller picture of the Producer and Consumer at the end of this post. However, the Consumer contains sub-VIs that I think are too complex to include in full, although I can include them as well if that is useful.

What are the loop rates you see (in real units), and what do you want them to be? What determines the loop rate of the producer loop?

The loop rate is determined by a 50 Hz trigger that is fed continuously to the PXIe-5171R. Every time this trigger is received, the device acquires a record lasting for 10 ms and waits for the next trigger. Each record has 1.4M elements (8 channels at 175k points each). Normally, the loop rate of the producer and consumer loops should both be 50 Hz.
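The figures in this answer can be cross-checked with a little arithmetic. The Python sketch below derives the per-channel sample rate, the size of one record, and the sustained data rate at 50 Hz; the 2-byte I16 sample size is an assumption, since the post doesn't state the data type.

```python
# Data-rate arithmetic for the acquisition described above.
# Assumption: samples are stored as 2-byte I16 values.
CHANNELS = 8
POINTS_PER_CHANNEL = 175_000
RECORD_LENGTH_S = 0.010        # each record lasts 10 ms
TRIGGER_HZ = 50
BYTES_PER_SAMPLE = 2           # assumed I16

samples_per_record = CHANNELS * POINTS_PER_CHANNEL
sample_rate_per_channel = POINTS_PER_CHANNEL / RECORD_LENGTH_S  # samples/s
record_bytes = samples_per_record * BYTES_PER_SAMPLE
sustained_bytes_per_s = record_bytes * TRIGGER_HZ
trigger_period_s = 1 / TRIGGER_HZ
duty_cycle = RECORD_LENGTH_S / trigger_period_s  # fraction of each period recording

if __name__ == "__main__":
    print(f"{samples_per_record:,} samples per record")
    print(f"{sample_rate_per_channel / 1e6:.1f} MS/s per channel")
    print(f"{record_bytes / 1e6:.1f} MB per record, "
          f"{sustained_bytes_per_s / 1e6:.0f} MB/s sustained")
    print(f"recording {duty_cycle:.0%} of each {trigger_period_s * 1000:.0f} ms period")
```

With these inputs, one record is 1.4M samples (2.8 MB as I16), each channel is sampled at 17.5 MS/s, the sustained stream is about 140 MB/s, and each 10 ms record occupies half of the 20 ms trigger period.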

What hardware contains the PXIe-5171R? Where is the code running?

The PXIe-5171R is contained in a PXIe-1075 chassis. The chassis communicates with a computer using a PXIe-8375 controller. The code is run on this computer.

Computer specs are as follows:

- CPU: 3.7 GHz Xeon E5-1630V3 (8 threads)

- DDR4 RAM: 24 GB

- 1 TB Samsung 850 EVO SSD

- Windows 10 Professional

So you are growing an array by 1.4M elements at 50 Hz? How and why? How long do you think you can keep that up given your amount of RAM??? Is that data also shuffled around via shared variables? What is the CPU usage and network usage?

In my system, data is taken in batches of 25 consecutive records. The Producer and Consumer loops run continuously at 50 Hz without saving the data to disk, but once in a while a "Start saving" command is received from a different VI, at which point the Consumer starts saving a batch of 25 consecutive records to disk (which should take about 500 ms: 25 records at one record per 20 ms trigger period).

What I want to do is to analyze this batch of 25 records as a group, so that I can compare different records with each other. In principle, 25 records * 1.4 M values/record * 16 bits/value = 70 MB of memory, which I thought should be manageable, as my system has 24 GB of memory.
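The 70 MB estimate holds only while the samples stay 16-bit integers. As a hedged sketch, the Python snippet below computes the batch size for a few LabVIEW numeric types; the I16/SGL/DBL element sizes are standard, but which type the Consumer actually holds is an assumption. Each additional copy of the batch held at the same time adds another allocation of the same size, which the `copies` parameter models.

```python
# Size of one 25-record batch for a few LabVIEW numeric types.
# Assumption: the post does not say which type the Consumer holds.
RECORDS_PER_BATCH = 25
SAMPLES_PER_RECORD = 8 * 175_000
TRIGGER_HZ = 50

ELEMENT_BYTES = {"I16": 2, "SGL": 4, "DBL": 8}


def batch_bytes(element: str, copies: int = 1) -> int:
    """Bytes needed to hold `copies` simultaneous copies of one batch."""
    return copies * RECORDS_PER_BATCH * SAMPLES_PER_RECORD * ELEMENT_BYTES[element]


batch_duration_s = RECORDS_PER_BATCH / TRIGGER_HZ  # 25 records x 20 ms

if __name__ == "__main__":
    for name in ELEMENT_BYTES:
        print(f"{name}: {batch_bytes(name) / 1e6:.0f} MB per batch")
    print(f"one batch spans {batch_duration_s * 1000:.0f} ms")
```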

Thus, at the beginning I initialized an empty 3D array of size 25 pages * 8 rows * 175000 columns. My plan was to use the Replace Array Subset function (in the Consumer loop) to replace a page of this giant array every loop iteration until all 25 pages are filled. Then the whole array will be sent to a different queue that is going to be read out by a different top-level VI for further analysis. This process will be repeated every time the system receives a "Start saving" command.
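The preallocate-then-replace plan can be sketched in Python as follows. This is an analogy for Initialize Array plus Replace Array Subset, not LabVIEW code: the 3D array is stored as one flat, preallocated buffer of 16-bit integers (an assumption), and the class `BatchBuffer` and its methods are hypothetical. What it illustrates is that each page is overwritten in place, so the buffer's size never changes after allocation.

```python
from array import array


class BatchBuffer:
    """Hypothetical fixed-size batch of pages x rows x cols I16 samples."""

    def __init__(self, pages: int, rows: int, cols: int) -> None:
        self.pages = pages
        self.page_size = rows * cols
        # Allocate the whole batch once, filled with zeros.
        self.data = array("h", [0]) * (pages * self.page_size)
        self.filled = 0

    def replace_page(self, page: int, record: array) -> None:
        """Overwrite one page in place (analogous to Replace Array Subset)."""
        if not 0 <= page < self.pages:
            raise IndexError("page out of range")
        if len(record) != self.page_size:
            raise ValueError("record size does not match page size")
        start = page * self.page_size
        # Equal-length slice assignment overwrites; the buffer is not resized.
        self.data[start:start + self.page_size] = record

    def add_record(self, record: array) -> bool:
        """Write the next page; return True once every page is filled."""
        self.replace_page(self.filled, record)
        self.filled += 1
        return self.filled == self.pages

    def reset(self) -> None:
        """Start a new batch; old samples are overwritten by the next one."""
        self.filled = 0


if __name__ == "__main__":
    # Small stand-in for 25 x 8 x 175000: 3 pages of 2 rows x 4 columns.
    buf = BatchBuffer(pages=3, rows=2, cols=4)
    for p in range(3):
        done = buf.add_record(array("h", [p] * 8))
    print(done, len(buf.data))
```

The page-range check matters here: in Python, assigning to a slice past the end of an `array` would append and grow the buffer, which is exactly the kind of unbounded growth the preallocation is meant to avoid.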

Now, the plan described in the paragraph above may not be the best way to do this; I was in the middle of brainstorming different strategies to do the same thing. However, the erratic behavior, which persisted even after I disabled the memory-intensive function, was concerning, because it could point to something about the way LV manages memory that I am unaware of and am not handling correctly in general. That is why I came here.

Diagram of initializing code:

Producer loop:

Consumer loop:

10-20-2020 12:53 PM

As altenbach alluded to, we cannot, and most won't, diagnose pictures/images. Can you upload your VI instead?

10-20-2020 01:42 PM

@Eric1977 wrote:

As altenbach alluded to, we cannot, and most won't, diagnose pictures/images. Can you upload your VI instead?

I would recommend zipping the entire project folder and uploading (use a standard zip file, not rar or 7z formats).

10-20-2020 02:04 PM

Here is a zip file generated by the command "Duplicate file hierarchy to new location". (Sorry for not neatly organizing everything into the LV project format.)

You should be able to open the main VI by going to the following path within the ZIP file:

FPGA Save all traces v6 add trace analysis Folder\Scopes\5171R Multirecord with Sample Filtering and Triggering\Save all traces\FPGA Save all traces v6 add trace analysis.vi.

10-21-2020 06:10 AM

I get an error from Windows that the file path name is too long when I try to open this file, even when I extract to the root directory.

10-21-2020 07:18 AM

10-27-2020 10:22 AM

An update: I've since decided to give up on this way of implementing what I wanted to do. I still don't understand the memory problems I described, but I simply reverted to an earlier version of the VI, which doesn't have the same problems.

In the end, instead of sending out each record of 1.4M elements to fill a gigantic 3D array every 20 ms (which is what I originally wanted to do), I send each record to a separate queue that is read by a parallel while loop (separate from the Producer and Consumer) and immediately reduced to a more tractable set of values (the mean of the data). After a series of 25 such records has been processed, I can still compare the records with each other, though using the mean values instead of the whole arrays. For now, this is sufficient for my purposes, even if it is not as versatile as my original scheme would have been had it worked. It also no longer causes any memory problems.
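This final approach can be sketched as follows, with Python threads and `queue.Queue` standing in for the LabVIEW loops and queues. The record layout (a list of 8 channel sample lists), the per-channel means, and the `channel_spread` comparison are hypothetical choices for illustration; the post only says that the mean of the data is calculated and compared across 25 records.

```python
import queue
import threading
from statistics import fmean

RECORDS_PER_BATCH = 25


def analysis_loop(records: queue.Queue, results: queue.Queue) -> None:
    """Reduce each record to per-channel means; emit one list per 25 records."""
    batch = []
    while True:
        record = records.get()
        if record is None:  # sentinel value stops the loop
            break
        # Keep 8 means instead of the 1.4M raw samples.
        batch.append([fmean(channel) for channel in record])
        if len(batch) == RECORDS_PER_BATCH:
            results.put(batch)
            batch = []


def channel_spread(batch: list) -> list:
    """Per-channel range (max - min) of the record means across one batch."""
    return [max(means) - min(means) for means in zip(*batch)]


if __name__ == "__main__":
    records, results = queue.Queue(), queue.Queue()
    worker = threading.Thread(target=analysis_loop, args=(records, results))
    worker.start()
    for i in range(RECORDS_PER_BATCH):
        # Tiny fake records: 8 channels x 4 samples, offset by record index.
        records.put([[i + ch] * 4 for ch in range(8)])
    records.put(None)
    worker.join()
    print(channel_spread(results.get()))
```

The memory held by the analysis loop is bounded at 25 x 8 means per batch, regardless of how long acquisition runs.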