Complex cluster as input to calling a dll, vimba, allied vision camera, frame announce

04-02-2017 07:18 PM

Hello,

I need some help, please.

I have a Mako camera from Allied Vision, which does not supply a LabVIEW driver, and IMAQdx is not allowed at my company.

So I am trying to create a driver in LabVIEW.

I was able to use the Import Shared Library tool to generate VIs for the corresponding DLL functions, except for a few.

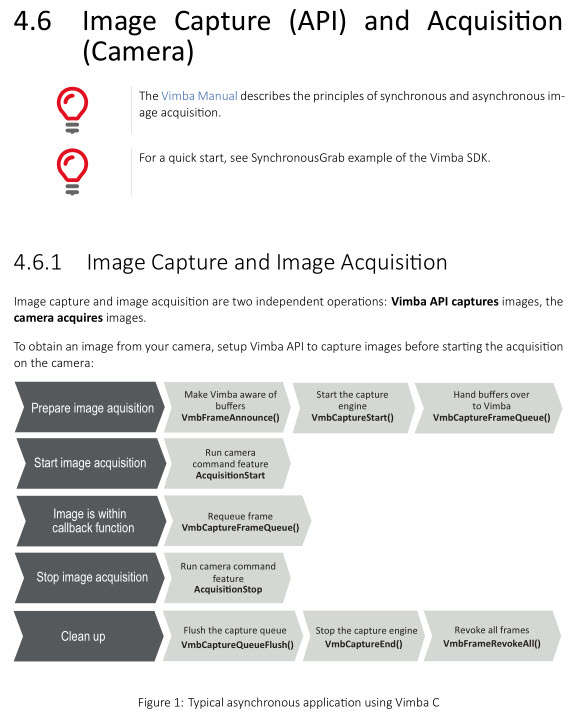

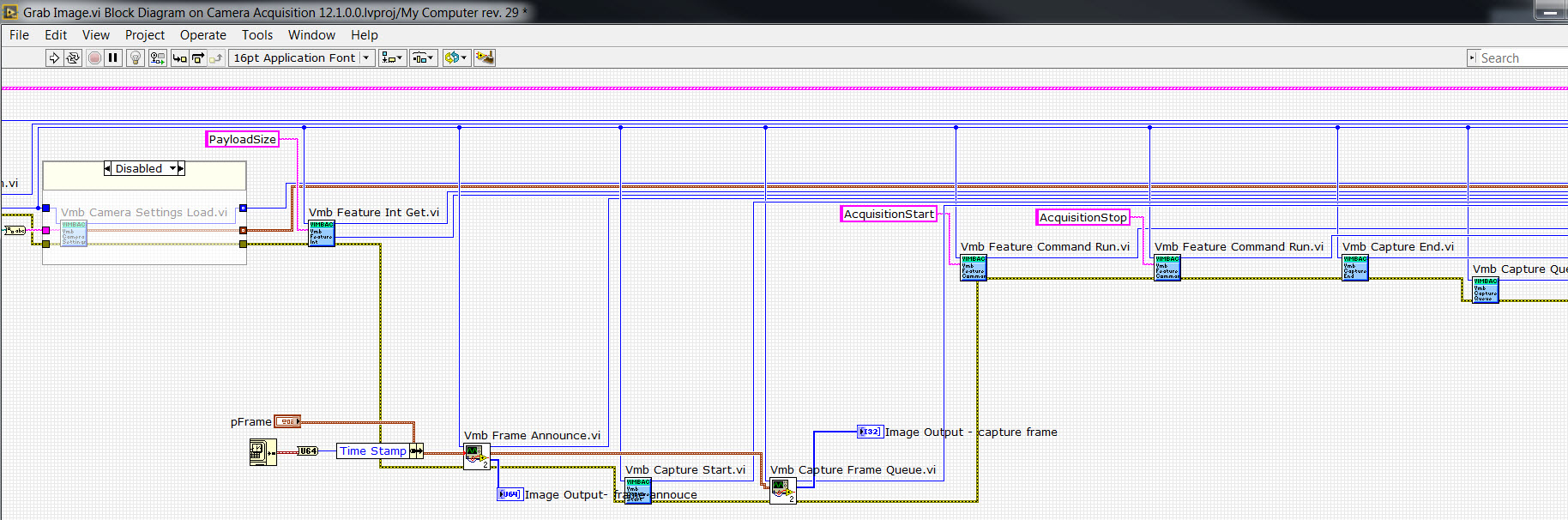

I was able to use these VIs to successfully enumerate cameras, get camera information, and load settings onto the camera, but I am stuck on a couple of the functions needed to acquire an image. I am following the Vimba C API manual from the SDK to build the VIs, using the streaming example (Listing 7 in the attached manual) and "asynchronous grab.c".

I am wiring the inputs as shown in the attached VI, but I am unable to get any data out, and none of the functions return an error. Could someone be kind enough to look at my code and see what I am missing, especially starting from frame announce()?

The attached zip has all the VIs and DLL functions, with images, examples, and the API manual.

Thanks

Listing 7: Streaming

#define FRAME_COUNT 3 // We choose to use 3 frames

VmbError_t err; // Vimba functions return an error code that the

// programmer should check for VmbErrorSuccess

VmbHandle_t hCamera; // A handle to our opened camera

VmbFrame_t frames[FRAME_COUNT]; // A list of frames for streaming

VmbInt64_t nPLS; // The payload size of one frame

// The callback that gets executed on every filled frame

void VMB_CALL FrameDoneCallback( const VmbHandle_t hCamera, VmbFrame_t *pFrame )

{

if ( VmbFrameStatusComplete == pFrame->receiveStatus )

{

printf( "Frame successfully received\n" );

}

else

{

printf( "Error receiving frame\n" );

}

VmbCaptureFrameQueue( hCamera, pFrame, FrameDoneCallback );

}

// Get all known cameras as described in chapter "List available cameras"

// and open the camera as shown in chapter "Opening a camera"

// Get the required size for one image

err = VmbFeatureIntGet( hCamera, "PayloadSize", &nPLS ); (A)

for ( int i=0; i<FRAME_COUNT; ++i )

{

// Allocate accordingly

frames[i].buffer = malloc( nPLS ); (B)

frames[i].bufferSize = nPLS; (B)

// Announce the frame

VmbFrameAnnounce( hCamera, &frames[i], sizeof(VmbFrame_t) ); (1)

}

// Start capture engine on the host

err = VmbCaptureStart( hCamera ); (2)

// Queue frames and register callback

for ( int i=0; i<FRAME_COUNT; ++i )

{

VmbCaptureFrameQueue( hCamera, &frames[i], (3)

FrameDoneCallback ); (C)

}

// Start acquisition on the camera

err = VmbFeatureCommandRun( hCamera, "AcquisitionStart" ); (4)

// Program runtime ...

// When finished, tear down the acquisition chain, close the camera and Vimba

err = VmbFeatureCommandRun( hCamera, "AcquisitionStop" );

err = VmbCaptureEnd( hCamera );

err = VmbCaptureQueueFlush( hCamera );

err = VmbFrameRevokeAll( hCamera );

err = VmbCameraClose( hCamera );

err = VmbShutdown();

//----- Image preparation and acquisition ---------------------------------------------------

//

// Method: VmbFrameAnnounce()

//

// Purpose: Announce frames to the API that may be queued for frame capturing later.

//

// Parameters:

//

// [in ] const VmbHandle_t cameraHandle Handle for a camera

// [in ] const VmbFrame_t* pFrame Frame buffer to announce

// [in ] VmbUint32_t sizeofFrame Size of the frame structure

//

// Returns:

//

// - VmbErrorSuccess: If no error

// - VmbErrorApiNotStarted: VmbStartup() was not called before the current command

// - VmbErrorBadHandle: The given camera handle is not valid

// - VmbErrorBadParameter: The given frame pointer is not valid or sizeofFrame is 0

// - VmbErrorStructSize: The given struct size is not valid for this version of the API

//

// Details: Allows some preparation for frames like DMA preparation depending on the transport layer.

// The order in which the frames are announced is not taken into consideration by the API.

//

IMEXPORTC VmbError_t VMB_CALL VmbFrameAnnounce ( const VmbHandle_t cameraHandle,

const VmbFrame_t* pFrame,

VmbUint32_t sizeofFrame );

------------------------------------------------------------------------------------------

// Frame delivered by the camera "VmbFrame_t"

//

typedef struct

{

//----- In -----

void* buffer; // Comprises image and ancillary data --> pointer generated from Imaq

VmbUint32_t bufferSize; // Size of the data buffer ---> 1936*1216=payload size

void* context[4]; // User context filled during queuing --> creating array of 4 pointers and inserting into cluster

//----- Out -----

VmbFrameStatus_t receiveStatus; // Resulting status of the receive operation

VmbFrameFlags_t receiveFlags; // Resulting flags of the receive operation

VmbUint32_t imageSize; // Size of the image data inside the data buffer

VmbUint32_t ancillarySize; // Size of the ancillary data inside the data buffer

VmbPixelFormat_t pixelFormat; // Pixel format of the image

VmbUint32_t width; // Width of an image

VmbUint32_t height; // Height of an image

VmbUint32_t offsetX; // Horizontal offset of an image

VmbUint32_t offsetY; // Vertical offset of an image

VmbUint64_t frameID; // Unique ID of this frame in this stream

VmbUint64_t timestamp; // Timestamp of the data transfer

} VmbFrame_t;

04-03-2017 04:45 AM - edited 04-03-2017 05:13 AM

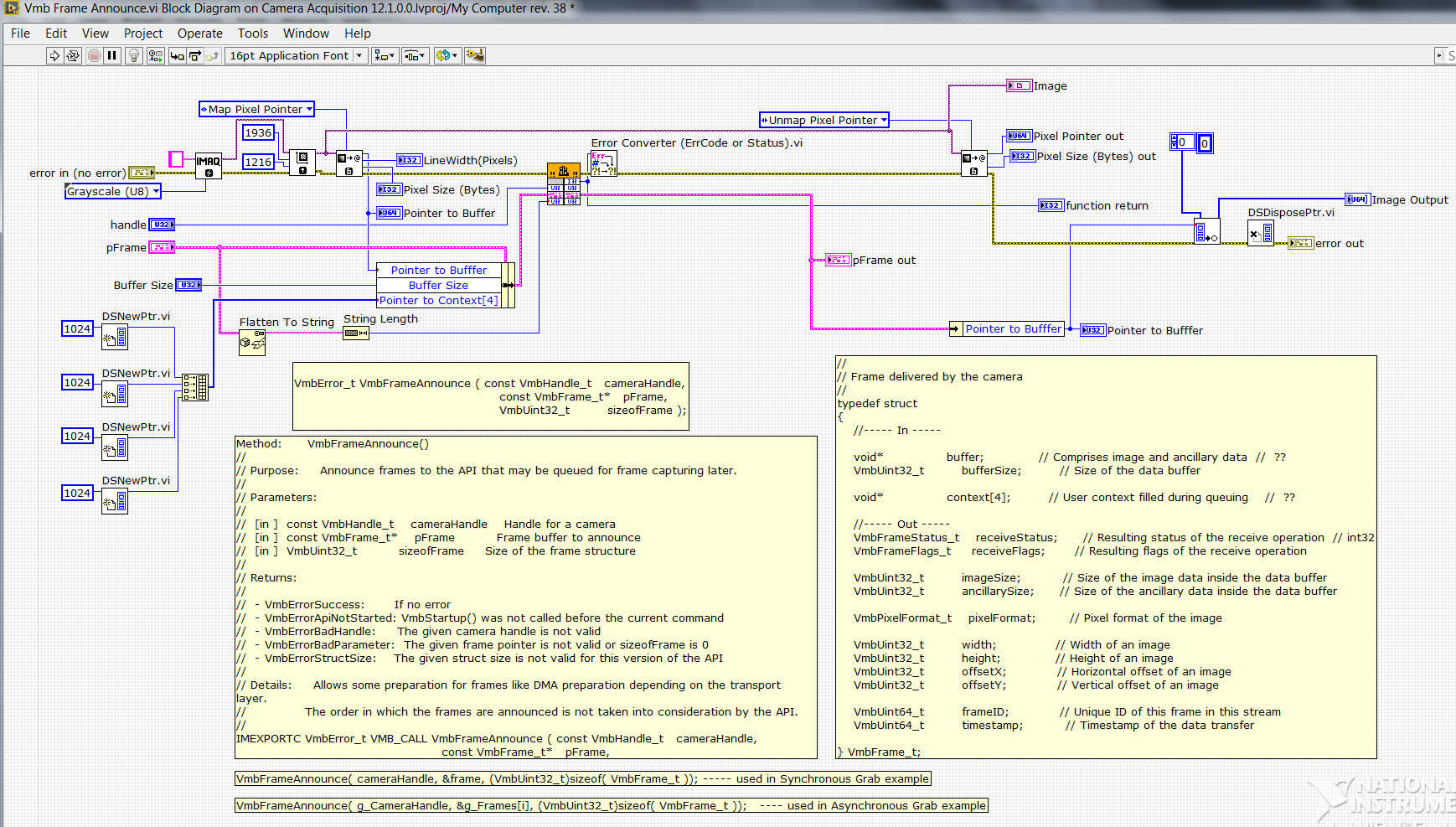

One potential problem you have is the disconnect between the buffer size and the IMAQ image buffer. You retrieve the payload size from the camera and pass it to the Frame Announce.vi as buffer size, but inside the Frame Announce.vi you create an IMAQ image with a certain width and height and pass its buffer pointer to the DLL. There is no guarantee that this will actually work out, since the payload size that the driver thinks it needs has nothing to do with the buffer that IMAQ allocates for the image, whose pointer you retrieve with the IMAQ Get Image PixelPtr.vi. (They should of course be related, but the only thing tying them together is the hope that the image size you set on the IMAQ image happens to produce a buffer that matches what the driver thinks it needs.)

Instead you should retrieve the image size the camera can support and pass that to the Announce Frame.vi to be used to create the IMAQ image. Then, from the return values of the IMAQ Get Image PixelPtr.vi, take the Line Width (Pixels), multiply it by the Pixel Size (Bytes), multiply that result by the height of the image, and pass this as the buffer size. Also, IMAQ by default uses a border of 3 pixels around the entire image as extra working space for various image analysis algorithms. The PixelPtr is adjusted to point to the upper left corner of the real image, but the Line Width (Pixels) is the actual stride across a full image line and includes the border pixels. You either have to set the border size to 0, or find a way to tell the DLL function that the line stride is different from the actual image width. Possibly the DLL itself also does some default padding, as many image routines assume a word or even long word padded line width in images.
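As a rough illustration of that arithmetic, here is a minimal C sketch. The helper names are made up for this example and are not part of Vimba or IMAQ; the inputs stand for the values returned by the pixel pointer VI plus the image height.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: line_width_px and pixel_size_bytes stand for the
   values reported by the IMAQ pixel pointer VI; height_px is the image
   height. None of these names come from the Vimba API. */
size_t imaq_buffer_bytes(uint32_t line_width_px,
                         uint32_t pixel_size_bytes,
                         uint32_t height_px)
{
    assert(pixel_size_bytes > 0);
    /* One line occupies the full stride, border and padding included. */
    size_t stride_bytes = (size_t)line_width_px * pixel_size_bytes;
    return stride_bytes * height_px;
}

/* Returns 1 when a driver-reported payload fits into the buffer we own. */
int payload_fits(uint64_t payload_bytes, size_t buffer_bytes)
{
    return payload_bytes <= (uint64_t)buffer_bytes;
}
```

The point of the second helper is that the size handed to the DLL comes from the buffer you allocated, and the driver's payload size is only checked against it.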

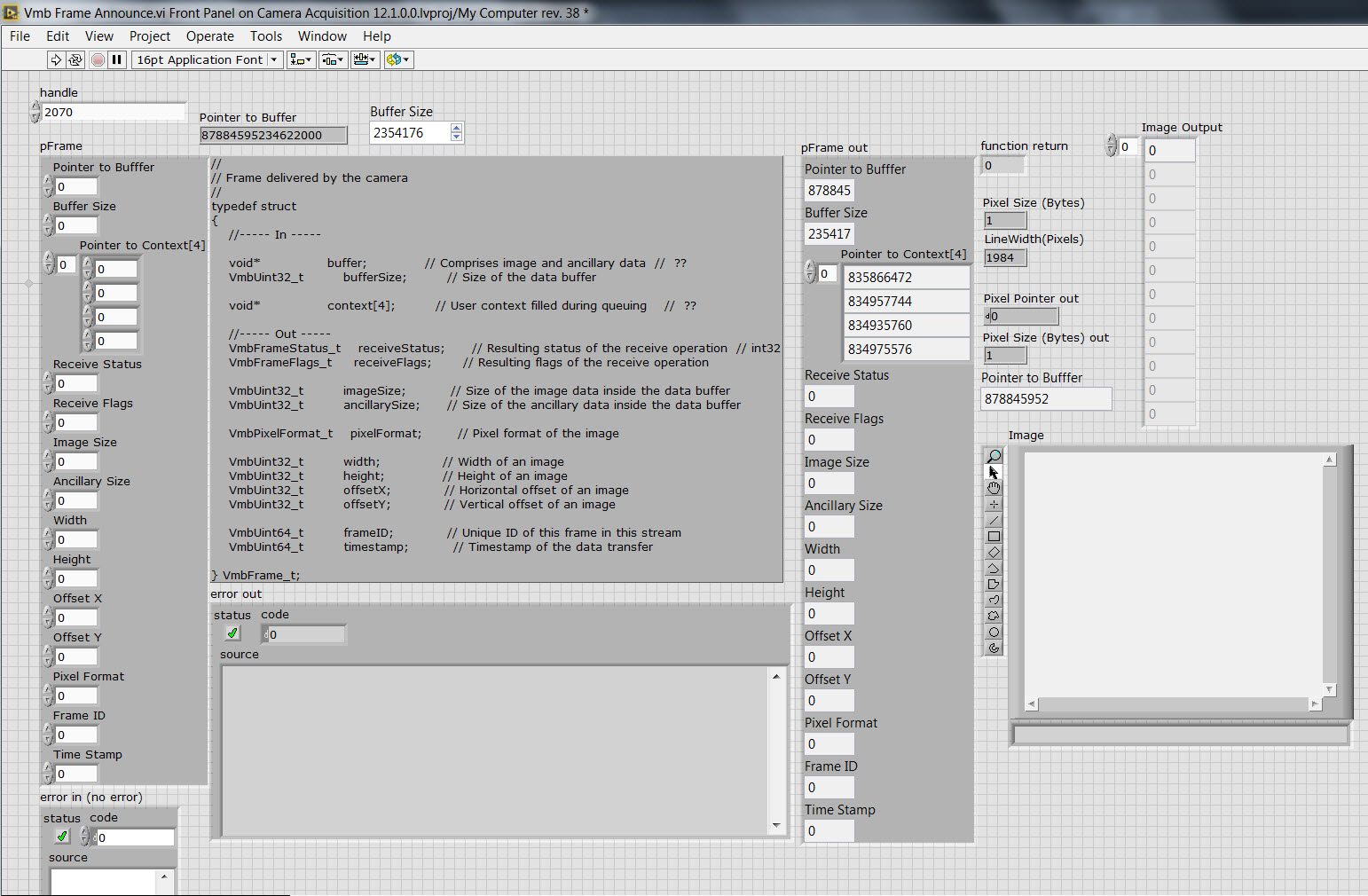

But an even more grave bug is that the Call Library Node in the Frame Announce.vi is not set to call the VmbFrameAnnounce() function in the DLL but rather the VmbFeatureIntGet(). Apparently the function doesn't crash in this particular case and doesn't cause an error either but it definitely is not right.

Also the order of elements in the pFrame cluster doesn't match the C definition of that structure as shown in your diagram. Namely, pixelFormat should come before width and height in that structure according to the C declaration shown, but in your cluster it comes after them. Anyhow, I think your ZIP archive actually contains a different, older version of your VIs, since the Frame Announce.vi diagram you show doesn't match what is inside the archive. And I'm pretty sure that you actually need to fill in width, height, offsetX, offsetY, pixelFormat and possibly other values in that structure with meaningful values too, for the function to work properly.

Another problem you have is the handling of the 4 pointers for the context. This is a fixed size array and not a pointer to an array. So you have to put in 4 pointer sized integers into the cluster and not an array of pointer sized integers. But even if it was a pointer to pointers you couldn't use a LabVIEW array inside the cluster since a LabVIEW array is not a pointer to memory area but a pointer to a pointer to a memory area.
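The difference between an array embedded in a struct and a pointer to an array can be seen in a small C sketch. Both structs below are illustrative only and are not taken from VimbaC.h.

```c
#include <assert.h>
#include <stddef.h>

/* Illustrative structs only; neither is taken from VimbaC.h. */
typedef struct {
    void *context[4];   /* four pointers stored inside the struct itself */
} InlineContext;

typedef struct {
    void **context;     /* one pointer to storage that lives elsewhere */
} PointerContext;

/* Bytes the context member contributes to each layout. */
size_t inline_context_bytes(void)  { return sizeof(InlineContext); }
size_t pointer_context_bytes(void) { return sizeof(PointerContext); }

/* The inline form occupies four pointer slots, the other form just one. */
void check_context_layout(void)
{
    assert(sizeof(InlineContext) == 4 * sizeof(void *));
    assert(sizeof(PointerContext) == sizeof(void *));
}
```

In cluster terms, the inline form corresponds to four pointer-sized integers placed directly in the cluster, one after the other.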

Next problem is that you Unmap the pointer after the Frame Announce call. I believe the Unmap isn't strictly necessary under Win32 and Win64 anymore (this function dates back to the original Graftek toolkit, before it was acquired by National Instruments, which was developed for LabVIEW for Windows 3.1; that version had a special 32-bit flat memory model, but pointers passed to DLLs were 16-bit segment:offset pointers that had to be explicitly mapped and unmapped between the 32-bit flat memory space and the 16-bit segment:offset model to be accessible in a 16-bit DLL). Even so, it's still not a good idea to unmap the pointer before your frame grabber DLL has properly written to it.

Which leads us right to the next error.

VmbCaptureStart() tells the DLL to start capturing an image and fill the buffer. It normally does not mean that the frame is filled when this function returns. This would have to be determined by some other means before you can safely assume that the IMAQ image pointer has been written to. Part of this seems to be the Capture Frame Queue.vi which also suffers from the problem that it calls VmbFeatureIntGet() in the DLL rather than the intended VmbCaptureFrameQueue() function. But since you don't use the callback pointer which would tell you when the frame has been fully captured (and you can't use a callback pointer without writing an intermediate DLL that translates such a callback into a more LabVIEW friendly asynchronous event such as a User Event) you have to find some other means to poll the session for the completion of the frame capture by calling some function in the DLL until it returns "frame capture complete" status or an error condition.

Only after that should you unmap the IMAQ pixel pointer and continue to use the image inside LabVIEW!
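A polling loop of that kind might look like the following C sketch. Everything here is hypothetical: the status codes are not the Vimba enum values, and `fake_driver_poll` merely stands in for whatever DLL call reports the frame state (the Vimba C header appears to offer a blocking `VmbCaptureFrameWait()` for this purpose, but verify that against VimbaC.h before relying on it).

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical status codes for this sketch; not the Vimba enum values. */
typedef enum { POLL_PENDING = 0, POLL_COMPLETE = 1, POLL_ERROR = -1 } PollStatus;

/* The caller supplies the function that asks the driver for the frame state. */
typedef PollStatus (*PollFn)(void *user);

/* Calls poll() up to max_polls times. Returns POLL_COMPLETE or POLL_ERROR as
   soon as the driver reports it, or POLL_PENDING if the budget ran out.
   A real loop would sleep between polls instead of spinning. */
PollStatus wait_for_frame(PollFn poll, void *user, uint32_t max_polls)
{
    assert(poll != 0);
    for (uint32_t i = 0; i < max_polls; ++i) {
        PollStatus s = poll(user);
        if (s != POLL_PENDING) {
            return s;
        }
    }
    return POLL_PENDING;
}

/* Stand-in driver query for demonstration: reports pending *remaining times,
   then complete. */
PollStatus fake_driver_poll(void *user)
{
    int *remaining = (int *)user;
    if (*remaining > 0) {
        --*remaining;
        return POLL_PENDING;
    }
    return POLL_COMPLETE;
}
```

In LabVIEW the same structure would be a While Loop around the Call Library Node with a timeout, and the image would only be read after the loop reports completion.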

04-03-2017 05:33 AM

I took a quick look at the C source code example, and the killer in there is that the pFrame structure is supposed to persist across the entire call chain of VmbFrameAnnounce(), VmbCaptureStart(), VmbCaptureFrameQueue(), etc. until VmbFrameRevoke(). That will require you to allocate it explicitly using the same DSNewPtr.vi and keep it alive until it's not needed anymore.
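On the C side, keeping the structure alive means allocating it on the heap rather than on a stack that disappears when the call returns. A minimal sketch, using a cut-down stand-in for VmbFrame_t that contains only the input fields quoted in this thread (the type and function names are invented for the example):

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Simplified stand-in for VmbFrame_t: only the input fields from the post. */
typedef struct {
    void    *buffer;
    uint32_t bufferSize;
    void    *context[4];
} FrameStub;

/* Allocates the struct and its image buffer on the heap so both outlive
   the call that created them. Returns NULL on failure. */
FrameStub *frame_create(uint32_t buffer_bytes)
{
    assert(buffer_bytes > 0);
    FrameStub *f = calloc(1, sizeof *f);
    if (f == NULL) {
        return NULL;
    }
    f->buffer = malloc(buffer_bytes);
    if (f->buffer == NULL) {
        free(f);
        return NULL;
    }
    f->bufferSize = buffer_bytes;
    return f;
}

/* Call only after the frame has been revoked from the driver. */
void frame_destroy(FrameStub *f)
{
    if (f == NULL) {
        return;
    }
    free(f->buffer);
    free(f);
}
```

The pointer returned by `frame_create` is what would be passed to every call in the chain, and `frame_destroy` would run only after the revoke step.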

And this is the point where I declare this API as not suitable for direct interfacing from LabVIEW, but definitely requiring the development of a more LabVIEW friendly intermediate DLL in C!
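To give an idea of what such an intermediate DLL could expose, here is a hedged sketch of a flat, LabVIEW-friendly interface: open, start, stop, copy the latest image into a caller-supplied array, close. All names are hypothetical, the camera SDK calls are left out, and a real wrapper would need a lock around the shared buffer because the SDK delivers frames on its own thread.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* All names below are hypothetical; this is not part of any vendor SDK. */
typedef struct {
    uint8_t *latest;      /* copy of the most recent complete frame */
    uint32_t size;        /* bytes in one frame */
    uint64_t frame_count; /* frames accepted since grab_open */
    int      running;
} Grabber;

Grabber *grab_open(uint32_t frame_bytes)
{
    assert(frame_bytes > 0);
    Grabber *g = calloc(1, sizeof *g);
    if (g == NULL) return NULL;
    g->latest = calloc(frame_bytes, 1);
    if (g->latest == NULL) { free(g); return NULL; }
    g->size = frame_bytes;
    return g;
}

void grab_start(Grabber *g) { g->running = 1; }
void grab_stop(Grabber *g)  { g->running = 0; }

/* Would be called from the SDK's frame callback inside the wrapper.
   Frames are dropped while stopped or when they exceed the buffer. */
void grab_on_frame(Grabber *g, const uint8_t *data, uint32_t bytes)
{
    if (!g->running || bytes > g->size) return;
    memcpy(g->latest, data, bytes);
    g->frame_count++;
}

/* LabVIEW passes a preallocated U8 array and its length. Returns the
   running frame count, or -1 if no frame exists or dst is too small. */
int64_t grab_copy_latest(const Grabber *g, uint8_t *dst, uint32_t dst_bytes)
{
    if (g->frame_count == 0 || dst_bytes < g->size) return -1;
    memcpy(dst, g->latest, g->size);
    return (int64_t)g->frame_count;
}

void grab_close(Grabber *g)
{
    if (g != NULL) { free(g->latest); free(g); }
}
```

Each of these functions takes only scalars, an opaque pointer, and a plain byte array, which is what the Call Library Node handles comfortably.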

04-03-2017 08:18 PM

Thanks rolfk,

That was a great explanation. I got some more clarity but still have questions, if you don't mind.

1.Instead you should retrieve the image size the camera can support

the max size is 1936*1216 = 2354176 happens to be displayed as payload size even in vimba viewer software provided by allied vision. thats why i chose this value.

2. and pass that to the Announce Frame.vi to be used to generate the IMAQ image and then from the return values of the IMAQ Get Image PixelPtr.vi, use the Line Width(Pixels), and then multiply this with the Pixel Size (Bytes), and then multiply this result with the Height of the image and pass this as buffer size.

The math you suggest comes out exactly to match transfer max size output of the image pixel pointer = 2412544 regardless of the border size input value, which is 58,368 more than 2354176, so i give this as input border size to frame announce now..

3. But an even more grave bug is that the Call Library Node in the Frame Announce.vi is not set to call the VmbFrameAnnounce() function in the DLL but rather the VmbFeatureIntGet(). Apparently the function doesn't crash in this particular case and doesn't cause an error either but it definitely is not right.

i am using frameannounce and captureframequeue in the call library node, but the zip file i sent had the older version by mistake, i copied the featureintget node to create these 2 new, thats why it showed that.

4.Also the order of elements in the pFrame cluster doesn't match with the C definition of that structure as shown in your diagram. Namely the pixelFormat should be before the height and width in that structure according to the C declaration shown, but it is after that

i changed the pixel format order, and do i give these values as input even though in the function prototype in .C they are commented as //----- Out ----- ?

i dont know the receive status, receive flags,ancillary size, i entered them 0 as per vimbaC.h, the rest of the values as per dimensions and offset but nothing happened. they output what ever my inputs were. i dont think the function is returning any thing as per its own calculations. so i tried some random numbers , gives same outputs.

5. Another problem you have is the handling of the 4 pointers for the context. This is a fixed size array and not a pointer to an array. So you have to put in 4 pointer sized integers into the cluster and not an array of pointer sized integers. But even if it was a pointer to pointers you couldn't use a LabVIEW array inside the cluster since a LabVIEW array is not a pointer to memory area but a pointer to a pointer to a memory area.

i am little confused about this, do i just place four separate U32s in the cluster instead of the array ? but the function is expecting "void* context[4];" No ? i thought this array is being used by the captureframequeue internally to do some operation in those locations. so i did insert these 4 as separate U32s once and then I32s again, both times i get the following error. i guess the array of pointers seems to be correct input, No ?

VimbaC-stdwinapi.lvlib:Error Converter (ErrCode or Status).vi<ERR>

VmbErrorStructSize

The given struct size is not valid for this version of the API

<b>Complete call chain:</b>

VimbaC-stdwinapi.lvlib:Error Converter (ErrCode or Status).vi

Vmb Frame Announce.vi

Grab Image.vi

6.Next problem is that you Unmap the pointer after the Frame Announce call.

I removed the unmap..

7. But since you don't use the callback pointer which would tell you when the frame has been fully captured (and you can't use a callback pointer without writing an intermediate DLL that translates such a callback into a more LabVIEW friendly asynchronous event such as a User Event) you have to find some other means to poll the session for the completion of the frame capture by calling some function in the DLL until it returns "frame capture complete" status or an error condition.

yes there is a callback function that tells the frame has been acquired and then goes to capture the next frame in the asynchronousgrab.c example in the attached folder, but i am not sure how to implement this in labview as the API does not expose this function to LV call library drop down. and i am unsure of how to create an intermediate dll to do this.

// Method: FrameCallback

//

// Purpose: called from Vimba if a frame is ready for user processing

//

// Parameters:

//

// [in] handle to camera that supplied the frame

// [in] pointer to frame structure that can hold valid data

//

void VMB_CALL FrameCallback( const VmbHandle_t cameraHandle, VmbFrame_t* pFrame )

{

//

// from here on the frame is under user control until returned to Vimba by re queuing it

// if you want to have smooth streaming keep the time you hold the frame short

//

VmbBool_t bShowFrameInfos = VmbBoolFalse; // showing frame infos

double dFPS = 0.0; // frames per second calculated

VmbBool_t bFPSValid = VmbBoolFalse; // indicator if fps calculation was valid

double dFrameTime = 0.0; // reference time for frames

double dTimeDiff = 0.0; // time difference between frames

VmbUint64_t nFramesMissing = 0; // number of missing frames

// Ensure that a frame callback is not interrupted by a VmbFrameRevoke during shutdown

AquireApiLock();

if( FrameInfos_Off != g_eFrameInfos )

{

if( FrameInfos_Show == g_eFrameInfos )

{

bShowFrameInfos = VmbBoolTrue;

}

if( VmbFrameFlagsFrameID & pFrame->receiveFlags )

{

if( g_bFrameIDValid )

{

if( pFrame->frameID != ( g_nFrameID + 1 ) )

{

// get difference between current frame and last received frame to calculate missing frames

nFramesMissing = pFrame->frameID - g_nFrameID - 1;

if( 1 == nFramesMissing )

{

printf("%s 1 missing frame detected\n", __FUNCTION__);

}

else

{

printf("%s error %llu missing frames detected\n",__FUNCTION__, nFramesMissing);

}

}

}

g_nFrameID = pFrame->frameID; // store current frame id to calculate missing frames in the next calls

g_bFrameIDValid = VmbBoolTrue;

dFrameTime = GetTime(); // get current time to calculate frames per second

if( ( g_bFrameTimeValid ) // only if the last time was valid

&& ( 0 == nFramesMissing ) ) // and the frame is not missing

{

dTimeDiff = dFrameTime - g_dFrameTime; // build time difference with last frames time

if( dTimeDiff > 0.0 )

{

dFPS = 1.0 / dTimeDiff;

bFPSValid = VmbBoolTrue;

}

else

{

bShowFrameInfos = VmbBoolTrue;

}

}

// store time for fps calculation in the next call

g_dFrameTime = dFrameTime;

g_bFrameTimeValid = VmbBoolTrue;

}

else

{

bShowFrameInfos = VmbBoolTrue;

g_bFrameIDValid = VmbBoolFalse;

g_bFrameTimeValid = VmbBoolFalse;

}

// test if the frame is complete

if( VmbFrameStatusComplete != pFrame->receiveStatus )

{

bShowFrameInfos = VmbBoolTrue;

}

}

if( bShowFrameInfos )

{

printf("Frame ID:");

if( VmbFrameFlagsFrameID & pFrame->receiveFlags )

{

printf( "%llu", pFrame->frameID );

}

else

{

printf( "?" );

}

printf( " Status:" );

switch( pFrame->receiveStatus )

{

case VmbFrameStatusComplete:

printf( "Complete" );

break;

case VmbFrameStatusIncomplete:

printf( "Incomplete" );

break;

case VmbFrameStatusTooSmall:

printf( "Too small" );

break;

case VmbFrameStatusInvalid:

printf( "Invalid" );

break;

default:

printf( "?" );

break;

}

printf( " Size:" );

if( VmbFrameFlagsDimension & pFrame->receiveFlags )

{

printf( "%ux%u", pFrame->width, pFrame->height );

}

else

{

printf( "?x?" );

}

printf( " Format:0x%08X", pFrame->pixelFormat );

printf( " FPS:" );

if( bFPSValid )

{

printf( "%.2f", dFPS );

}

else

{

printf( "?" );

}

printf( "\n" );

}

// goto image processing

ProcessFrame( pFrame);

fflush( stdout );

// requeue the frame so it can be filled again

VmbCaptureFrameQueue( cameraHandle, pFrame, &FrameCallback );

ReleaseApiLock();

}

any help ?

04-03-2017 08:39 PM

I am not well versed in C, so I had someone help me build an exe from this asynchronousgrab.C example to output the image, but it writes to the console and I didn't know how to pipe or reroute that to the LabVIEW front panel. So we wrote the output to a file and read it back using System Exec and the LabVIEW file functions, with a second file where "get_image" and "done" were written to synchronize when to read the data file and when to close. But opening and closing the files for reading and writing at both ends was far too slow: it took about 3 seconds to write one frame, and I need 30 frames/sec. I knew this was inefficient and not usable. Also, the Enter key was used to stop the acquisition in that C program, but when built as an exe it never responded to Enter, so I always had to kill it.

is there a way to convert this asynchronousgrab.C example to dll and use its functions in call library node to command it to start, stop and give me image, thats all i need ?

The other option I tried was giving the vimbaNET.dll supplied by Allied Vision to the LabVIEW Constructor Node, but it gives the generic "An error occurred trying to load the assembly" with no other description. Would you mind trying that once with the .NET DLL attached here?

last option i am trying is this python wrapper some one wrote from this link

https://github.com/morefigs/pymba and trying the examples from here

http://anki.xyz/installing-pymba-on-windows/

but i still have the question of how to get the output from python to LV , not knowing python and have issues installing the packages.

any help is greatly appreciated..

Thank you very much..

04-04-2017 02:08 AM - edited 04-04-2017 02:24 AM

@freemason wrote:

1.Instead you should retrieve the image size the camera can support

the max size is 1936*1216 = 2354176 happens to be displayed as payload size even in vimba viewer software provided by allied vision. thats why i chose this value.

Well, there are certain rules in software writing, one of them is: Don't ever put f***ing magic constants in your code if you can help it. Those magic constants are just that, magic and very much constant, and in a year from now you go and try to use this code with a different camera; maybe your current camera broke down and you had to get a (temporary) replacement until your unit has been replaced, and this new camera happens to be one with a different image sensor with more pixels!

2. and pass that to the Announce Frame.vi to be used to generate the IMAQ image and then from the return values of the IMAQ Get Image PixelPtr.vi, use the Line Width(Pixels), and then multiply this with the Pixel Size (Bytes), and then multiply this result with the Height of the image and pass this as buffer size.

The math you suggest comes out exactly to match transfer max size output of the image pixel pointer = 2412544 regardless of the border size input value, which is 58,368 more than 2354176, so i give this as input border size to frame announce now..

So, continuing with the camera replacement case above: you still create an image of 1936*1216 with IMAQ Create, retrieve its pointer with IMAQ Get PixelPtr.vi, and then tell your camera DLL, "Yeah, go ahead, I placed a pointer in that data structure which is big enough to store the payload you would like to have". The camera DLL does just that and boom!!! Memory corruption lies ahead. If you are lucky your program simply crashes. If you are not so lucky it corrupts some memory and causes your application to generate totally wrong measurement results, before the corruption eventually reaches some real memory pointers and finally, mercifully, causes a crash anyhow.

You need to calculate the size of the memory area whose pointer you pass to the DLL from the information you have and that is the information of the picture height and the return values from IMAQ Get PixelPtr.vi and then pass that to the DLL, not some number you got from the DLL itself that tells you how big of a memory area it would like to write to.

If this size eventually turns out to be incorrect, the worst that can happen (provided the DLL does what it is supposed to do and uses this size properly, instead of treating it as some nice decoration without any real value) is that only part of the image is placed in the memory area. That will then tell you that the image you created with IMAQ Create was probably too small, and you will have to adjust the width and height accordingly. That is also why you should wire these two values out of the Announce Frame.vi. That way you don't have to go searching deep down in your application for where to adjust these values, but can properly make them parameters of your image acquisition routine.

Any decent image acquisition library nowadays also gives you the ability to select a specific area of the image that you are interested in and then only will transfer that much of the image to your application memory buffers. In a future version of your application you may want to make use of that and then adjust the area but thanks to those magic constants deep down in your software library the image will look skewed since the IMAQ image still assumes a fixed image size of exactly 1936*1216 pixels.

Despite your claim that the border size is not relevant, the math suggests that it probably is. If I take the number you provide and divide it by 1216, I get a line width of 1984 pixels. So IMAQ seems to add a whopping 48 pixels to each line. That seems pretty large, and I can't say off the top of my head whether that is plausible or indicates some problem with the use of IMAQ Create. IMAQ certainly likes to add padding to the image buffer, definitely for the border, so that image algorithms have enough space to operate right up to the outermost image pixel without all kinds of special casing to avoid corrupting pixels from the previous and next line.

You will also have to find a way to tell your DLL that the image should actually be transferred with a line width of 1984 pixels (or more precisely, the result of Line Width * Pixel Size from the IMAQ Get PixelPtr.vi) instead of the 1936 it really uses, or your image will look skewed. This is also known as line padding in image processing.
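If the DLL turns out to have no stride setting, one possible fallback (an assumption on my part, not something the Vimba documentation is known to prescribe) is to let the driver fill a tightly packed buffer and then copy it row by row into the padded image buffer. A minimal C sketch of such a stride-aware copy, with a made-up function name:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Copies height rows of row_bytes each from a source with src_stride bytes
   per line into a destination with dst_stride bytes per line. Bytes in the
   destination padding are left untouched. */
void copy_rows(uint8_t *dst, size_t dst_stride,
               const uint8_t *src, size_t src_stride,
               size_t row_bytes, size_t height)
{
    assert(row_bytes <= dst_stride && row_bytes <= src_stride);
    for (size_t y = 0; y < height; ++y) {
        memcpy(dst + y * dst_stride, src + y * src_stride, row_bytes);
    }
}
```

With the numbers from this thread, the source stride would be 1936 bytes and the destination stride 1984 bytes for an 8-bit image, at the cost of one extra copy per frame.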

4.Also the order of elements in the pFrame cluster doesn't match with the C definition of that structure as shown in your diagram. Namely the pixelFormat should be before the height and width in that structure according to the C declaration shown, but it is after that

i changed the pixel format order, and do i give these values as input even though in the function prototype in .C they are commented as //----- Out ----- ?

i dont know the receive status, receive flags,ancillary size, i entered them 0 as per vimbaC.h, the rest of the values as per dimensions and offset but nothing happened. they output what ever my inputs were. i dont think the function is returning any thing as per its own calculations. so i tried some random numbers , gives same outputs.

I didn't read the manual, but just went from the information in the VI. Studying API documentation for image acquisition libraries is serious work that takes many hours, and I'm doing this in my own time, so be gentle with me here. It's quite possible that these are values that the DLL will eventually fill in.

5. Another problem you have is the handling of the 4 pointers for the context. This is a fixed size array and not a pointer to an array. So you have to put in 4 pointer sized integers into the cluster and not an array of pointer sized integers. But even if it was a pointer to pointers you couldn't use a LabVIEW array inside the cluster since a LabVIEW array is not a pointer to memory area but a pointer to a pointer to a memory area.

i am little confused about this, do i just place four separate U32s in the cluster instead of the array ? but the function is expecting "void* context[4];" No ? i thought this array is being used by the captureframequeue internally to do some operation in those locations. so i did insert these 4 as separate U32s once and then I32s again, both times i get the following error. i guess the array of pointers seems to be correct input, No ?

VimbaC-stdwinapi.lvlib:Error Converter (ErrCode or Status).vi<ERR>

VmbErrorStructSize

The given struct size is not valid for this version of the API

<b>Complete call chain:</b>

VimbaC-stdwinapi.lvlib:Error Converter (ErrCode or Status).vi

Vmb Frame Announce.vi

Grab Image.vi

That's a problem then, but the placement of a LabVIEW array inside the structure is definitely completely wrong! A LabVIEW array is NEVER ever directly compatible with a C array, no matter what. The fixed size array with 4 elements embedded in a structure is in fact equivalent to a cluster with 4 elements inside the outer cluster, but you can also leave out the inner cluster, as the cluster itself does not occupy any memory in the data layout of the variable. If the resulting size doesn't match what the library expects, there must be something else going wrong!
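One way to chase a VmbErrorStructSize is to work out how many bytes the C compiler would assign to the structure and compare that with the byte count of the flattened cluster. The sketch below mirrors the VmbFrame_t declaration quoted earlier in this thread using fixed-width types. It rests on assumptions that should be checked against VimbaC.h: the status, flags and pixel format fields are taken to be 32 bits wide, and natural alignment (64-bit integers on 8-byte boundaries) is assumed. The helper names are made up.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Mirror of the VmbFrame_t declaration quoted in the thread.
   Assumption: enum-like fields are 32 bits, default compiler packing. */
typedef struct {
    void    *buffer;
    uint32_t bufferSize;
    void    *context[4];
    int32_t  receiveStatus;
    uint32_t receiveFlags;
    uint32_t imageSize;
    uint32_t ancillarySize;
    uint32_t pixelFormat;
    uint32_t width;
    uint32_t height;
    uint32_t offsetX;
    uint32_t offsetY;
    uint64_t frameID;
    uint64_t timestamp;
} FrameMirror;

/* Size of the mirror struct as laid out by the compiler in use. */
size_t frame_mirror_size(void) { return sizeof(FrameMirror); }

static size_t align_up(size_t off, size_t a) { return (off + a - 1) / a * a; }

/* Expected struct size for a given pointer size under natural alignment. */
size_t expected_frame_size(size_t ptr_size)
{
    assert(ptr_size == 4 || ptr_size == 8);
    size_t off = 0;
    off = align_up(off, ptr_size) + ptr_size;      /* buffer */
    off = align_up(off, 4) + 4;                    /* bufferSize */
    off = align_up(off, ptr_size) + 4 * ptr_size;  /* context[4] */
    off = align_up(off, 4) + 9 * 4;                /* nine 32-bit fields */
    off = align_up(off, 8) + 2 * 8;                /* frameID, timestamp */
    return align_up(off, 8);                       /* overall alignment */
}
```

Under these assumptions the structure comes to 80 bytes with 4-byte pointers and 104 bytes with 8-byte pointers, so a cluster that flattens to a different size would be a likely cause of the error.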

7. But since you don't use the callback pointer which would tell you when the frame has been fully captured (and you can't use a callback pointer without writing an intermediate DLL that translates such a callback into a more LabVIEW friendly asynchronous event such as a User Event) you have to find some other means to poll the session for the completion of the frame capture by calling some function in the DLL until it returns "frame capture complete" status or an error condition.

yes there is a callback function that tells the frame has been acquired and then goes to capture the next frame in the asynchronousgrab.c example in the attached folder, but i am not sure how to implement this in labview as the API does not expose this function to LV call library drop down. and i am unsure of how to create an intermediate dll to do this.

You simply don't! LabVIEW cannot create function pointers to pass to a DLL. Period! And that is not so much because the LabVIEW developers wouldn't want to support this, but because the resulting configuration dialog would be several orders of magnitude more complex than the existing Call Library Node configuration, and seeing how most LabVIEW users already struggle with that configuration, it's pretty obvious that those LabVIEW developer hours can be spent much more productively on other things than implementing callback pointer support in LabVIEW. The way to deal with callback pointers when interfacing a DLL to LabVIEW is to write an intermediate DLL in C(++), implement the callback there, and inside the callback function translate to something more LabVIEW friendly such as a user event. And while callback programming is one of the more advanced topics of C programming and definitely requires a seasoned C programmer, trying to do it all in LabVIEW is really black magic in comparison.

As to your follow-up post! Image processing and image acquisition are two of the most demanding areas in terms of proper memory management and dealing with pointers. There are generally a myriad of ways to do things wrong and just about one correct way. Accordingly, writing code for this is a pretty demanding and frustrating experience even for seasoned C programmers. LabVIEW makes this all a lot easier as long as you can use nicely developed VI libraries, but you are diving into the nastiness of C programming whenever you start to interface to DLLs, and LabVIEW cannot take that away in any way. And trying to start with interfacing an image acquisition library is about the same as trying to build a cathedral right away without having built a few houses and other buildings first: a pretty sure recipe for disaster!
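The shape of such a translation layer might look like the following C sketch. It is deliberately self-contained: `PostEventFn` stands in for LabVIEW's event-posting call (in a real wrapper that role would be played by `PostLVUserEvent()` from LabVIEW's extcode.h), and `record_event` is only a test double. All type and function names are invented for the example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Stand-in for LabVIEW's event-posting call; hypothetical signature. */
typedef int (*PostEventFn)(uint32_t event_ref, void *data);

typedef struct {
    PostEventFn post;       /* how to notify LabVIEW */
    uint32_t    event_ref;  /* user event refnum handed over by the VI */
} EventBridge;

/* Payload sent to LabVIEW: small and fixed-size, no raw image pointer. */
typedef struct {
    uint64_t frame_id;
    int32_t  status;
} FrameEvent;

/* Body of what the wrapper would do inside the SDK frame callback.
   It only forwards a notification; the VI then fetches the image itself. */
int bridge_on_frame(EventBridge *b, uint64_t frame_id, int32_t status)
{
    assert(b != NULL && b->post != NULL);
    FrameEvent ev = { frame_id, status };
    return b->post(b->event_ref, &ev);
}

/* Test double that records the last event instead of calling LabVIEW. */
static FrameEvent g_last_event;

int record_event(uint32_t event_ref, void *data)
{
    (void)event_ref;
    g_last_event = *(FrameEvent *)data;
    return 0;
}
```

On the LabVIEW side, an Event Structure registered for the user event would wake up on each notification and then call a plain function in the wrapper to copy the image out.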

04-04-2017 03:54 AM - edited 04-04-2017 03:56 AM

@freemason wrote:

I am not well versed in C, so I had someone help me build an exe from this asynchronousgrab.C example to output the image, but it writes to the console and I didn't know how to pipe or reroute that to the LabVIEW front panel. So we wrote the output to a file and read it back using System Exec and the LabVIEW file functions, with a second file where "get_image" and "done" were written to synchronize when to read the data file and when to close. But opening and closing the files for reading and writing at both ends was far too slow: it took about 3 seconds to write one frame, and I need 30 frames/sec. I knew this was inefficient and not usable. Also, the Enter key was used to stop the acquisition in that C program, but when built as an exe it never responded to Enter, so I always had to kill it.

is there a way to convert this asynchronousgrab.C example to dll and use its functions in call library node to command it to start, stop and give me image, thats all i need ?

the other option i tried was giving the vimbaNET.dll supplied by allied to LV constructor node ,but it is giving the generic " an error occured trying to load the assembly" no other description. would you mind trying that once from the .net dll attached here...

last option i am trying is this python wrapper some one wrote from this link

https://github.com/morefigs/pymba and trying the examples from here

http://anki.xyz/installing-pymba-on-windows/

but i still have the question of how to get the output from python to LV , not knowing python and have issues installing the packages.

The .Net interface, while not my preferred choice, is most likely your best bet to get something working. The error you see might be caused by an incompatible .Net framework. The .Net world is split into two eras: everything before .Net 4.0, and everything from 4.0 onwards. Many applications and .Net components are written to work with only one of those eras. LabVIEW before 2013 or so loads by default with .Net 3.5 or earlier, and the latest version loads by default with .Net 4.0 or higher. If you need to call a .Net component that requires the other .Net world, you can add a manifest (an application configuration file) to the LabVIEW folder that tells LabVIEW to load with the other .Net version instead.

Read this article to see if it might be your problem.
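For orientation, such a file typically looks like the fragment below. This is a sketch from memory rather than a verified recipe: it assumes the file is named `LabVIEW.exe.config`, sits in the same folder as `LabVIEW.exe`, and that the goal is to make an older LabVIEW load .Net 4.0 assemblies. Check the exact contents against the article for your LabVIEW version, and restart LabVIEW after adding it.

```xml
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <!-- Allow older mixed-mode assemblies to load under the 4.0 runtime -->
  <startup useLegacyV2RuntimeActivationPolicy="true">
    <!-- Ask the host process to start with the .Net 4.0 runtime -->
    <supportedRuntime version="v4.0.30319"/>
  </startup>
</configuration>
```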

As to incorporating Python: I absolutely cannot recommend that. Python is great and can be interesting to use from LabVIEW for lots of things, but trying to circumvent trouble in interfacing to an image acquisition board is about the worst reason you can imagine for using it. You basically replace one problem with at least two new ones. The image acquisition interface still has to prove that it works with your Python library. While much Python software is pretty good, there is also a lot of crap out there that someone wrote, with or without much understanding of the underlying problem, to the point that it somehow started to work for their specific use case. Then they throw it out into the world with the words "Look what I have done!", and you get to debug it if you want to use it for your own work.

In addition, you create the extra problem of having to interface yet another piece of software (Python) to your LabVIEW program. Maybe the Python library is able to do the 30 images per second that you are shooting for, maybe it is not, but getting that much data from Python into LabVIEW in real time is going to be a pretty difficult challenge too.

04-04-2017 02:12 PM

Thanks rolfk

All your answers make sense.

I will try to get someone to build the intermediate DLL.

I really appreciate your help. I learned a lot.

04-04-2017 02:26 PM

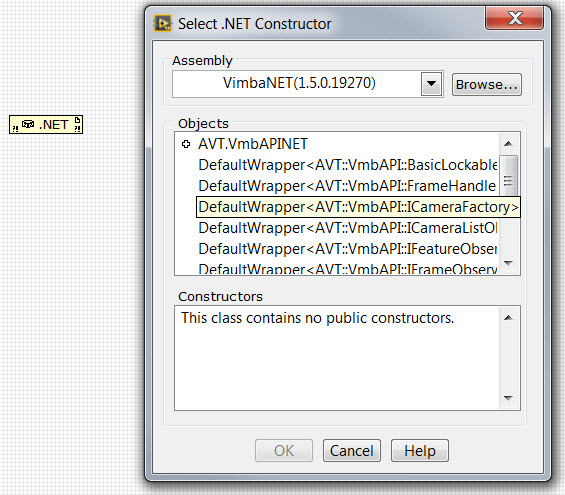

I forgot to mention that the .NET trial led to this: no public constructors. The list had only 10 objects, and none of them has any functions I can use to get camera info or start acquisition.

04-26-2017 12:21 PM

Hello freemason,

You have to click on the plus in the upper left corner and then use the Vimba() object. First call the method Startup(); after that you can use all the properties and methods of Vimba with further objects.

I worked on the same problem as you: getting images from an Allied Vision camera using the free SDK.

My problem is the stability of the Vimba .NET DLL: LV 2012 SP1 crashes very often. I will try installing LV 2014.

Did you have any success after your last post?

Best Regards