Combining Map and arrays?

05-13-2022 09:00 AM - edited 05-13-2022 09:03 AM

wiebe@CARYA wrote:

@VinnyAstro wrote: Two questions about this:

When is a copy created? And are my example and the statements below correct?

The actions on the array above (Delete From Array at index 0, then Build Array with the new value) are basically what I am doing for each of the 32 clusters I mentioned before.

That's 256*(16+8), a timestamp is 16 bytes! So, triple your estimate.

Ah crap, I forgot I was bundling a timestamp together with each value for my XY graph... but it doesn't have to be that way. I can just cluster one DBL with its array, build such a cluster for every data item, and send one common timestamp together with the entire cluster. For both the display and the log, the difference in time won't be an issue.
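The arithmetic behind "triple your estimate" can be checked with a few lines of Python. This is only a sketch: it assumes that 256 is the number of samples kept per history array and that the 32 clusters each hold one such array. The byte sizes (16 for a timestamp, 8 for a DBL) are the ones quoted above; the constant names are made up for illustration.

```python
# Sizes quoted in the thread: a LabVIEW timestamp is 16 bytes, a DBL is 8 bytes.
TIMESTAMP_BYTES = 16
DBL_BYTES = 8

# Assumptions for this sketch: 256 samples per history array, 32 such arrays.
SAMPLES = 256
CLUSTERS = 32

values_only = SAMPLES * DBL_BYTES                          # DBL values alone
with_timestamps = SAMPLES * (TIMESTAMP_BYTES + DBL_BYTES)  # timestamp + DBL per sample

ratio = with_timestamps / values_only
total_values_only = CLUSTERS * values_only
total_with_timestamps = CLUSTERS * with_timestamps

print(values_only, with_timestamps, ratio)
print(total_values_only, total_with_timestamps)
```

Sending one common timestamp with the whole cluster, as proposed above, brings the per-array size back to the values-only figure plus a single 16-byte timestamp.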

wiebe@CARYA wrote: Deleting the 1st element could need a copy (or at least a move). LabVIEW probably adds an "array subset object" (something like a stride\stride C++ object) to the original to avoid this. But when you keep adding elements, at some point the memory needs to be either moved or copied, as the memory allocated for the original size (+ x%) will not be enough anymore.

But as I'm deleting one element and then adding one, the size stays the same so globally no memory change, no?

wiebe@CARYA wrote: Putting an object on a queue doesn't require a copy, but if you continue to modify the data, there needs to be a copy. That's why it might be faster to enqueue the single samples, and add them to a (circular) buffer on the receiving end(s). You might get multiple copies, but you're not copying all the time. So you're trading memory for speed.

You'll get these kinds of trade-offs all the time. A circular buffer is much faster to write and a little slower to read, compared to a simple array as you used it. The simple array is slower to write, but you can read it instantly. Another trade-off is that a circular buffer is more complex.
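As a text-language analogy for "enqueue the single samples and buffer them on the receiving end", here is a minimal Python sketch. It is not LabVIEW code and says nothing about LabVIEW's actual memory behavior; `producer`, `consumer` and the `None` end marker are hypothetical names and conventions chosen for the example, and `collections.deque(maxlen=...)` stands in for the circular buffer.

```python
import queue
import threading
from collections import deque

def producer(q, samples):
    """Enqueue single samples; None marks the end of the stream."""
    for sample in samples:
        q.put(sample)
    q.put(None)

def consumer(q, history_len):
    """Rebuild the history locally from the single samples."""
    history = deque(maxlen=history_len)  # drops the oldest item when full
    while True:
        sample = q.get()
        if sample is None:
            break
        history.append(sample)
    return list(history)

q = queue.Queue()
worker = threading.Thread(target=producer, args=(q, range(10)))
worker.start()
latest = consumer(q, history_len=4)  # keeps only the 4 most recent samples
worker.join()
print(latest)
```

Each receiving loop holds its own history, so memory use grows with the number of consumers, but only one small sample crosses the queue per update.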

So you suggest queueing single data points and "clusterizing the arrays" twice or more, instead of clusterizing once and queueing twice, right?

Do you have an example of a circular buffer in LabVIEW? I thought this is what I was doing already (deleting the first element and adding one), but apparently not, and I couldn't find a recent enough thread on the subject.

05-13-2022 12:26 PM

@VinnyAstro wrote:

But as I'm deleting one element and then adding one, the size stays the same so globally no memory change, no?

If the memory is

address value

xxxxxxx0 a

xxxxxxx1 b

xxxxxxx2 c

xxxxxxx3 d

xxxxxxx4 e

xxxxxxx5 f

xxxxxxx6 g

xxxxxxx7 h

xxxxxxx8 i

xxxxxxx9 j

When you delete the first element and add one:

address value

xxxxxxx0 X

xxxxxxx1 b

xxxxxxx2 c

xxxxxxx3 d

xxxxxxx4 e

xxxxxxx5 f

xxxxxxx6 g

xxxxxxx7 h

xxxxxxx8 i

xxxxxxx9 j

xxxxxx10 K

You either need to move all elements (so xxxxxxx1 becomes xxxxxxx0), or you need to extend the memory to xxxxxx10.

LabVIEW will manage this for you, but it can be expensive.

For a circular buffer, this is what happens:

filled with 10 elements:

xxxxxxx0 a

xxxxxxx1 b

xxxxxxx2 c

xxxxxxx3 d

xxxxxxx4 e

xxxxxxx5 f

xxxxxxx6 g

xxxxxxx7 h

xxxxxxx8 i

xxxxxxx9 j

pointer to start: xxxxxxx0

Add 1 element:

xxxxxxx0 K < new element overwrites the 1st!

xxxxxxx1 b

xxxxxxx2 c

xxxxxxx3 d

xxxxxxx4 e

xxxxxxx5 f

xxxxxxx6 g

xxxxxxx7 h

xxxxxxx8 i

xxxxxxx9 j

pointer to start: xxxxxxx1

No memory allocations or movement! Just replacing one value, and the pointer.
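The diagram above can be mirrored in a few lines of Python. This is a sketch of the idea only, not the LabVIEW implementation: the class and attribute names are made up, and it assumes the buffer is already full, as in the example with elements a to j.

```python
class CircularBuffer:
    """Fixed-size buffer that overwrites its oldest element on add."""

    def __init__(self, values):
        self.data = list(values)  # allocated once, never resized
        self.start = 0            # index of the oldest element

    def add(self, value):
        # Overwrite the oldest element in place, then advance the pointer.
        self.data[self.start] = value
        self.start = (self.start + 1) % len(self.data)

buf = CircularBuffer("abcdefghij")
buf.add("K")
print(buf.data, buf.start)
```

After the add, the storage has the same length as before, position 0 holds K, and the start pointer has moved to position 1, exactly as in the listing above.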

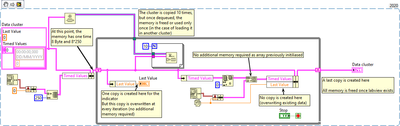

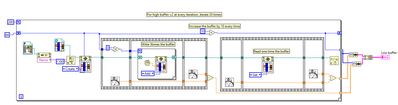

Here's an image I made for a colleague a while ago:

Note that the init, add and get can be functions in an FGV, VIs in a class, or actions in a DVR In Place Element Structure. This gives you, respectively, a global\singleton solution, a by-wire and by-value solution, or a by-wire and by-reference solution.

05-16-2022 03:29 AM - edited 05-16-2022 03:35 AM

Sweet!

I've created an FGV for now; hopefully I'll soon be able to practice with OOP.

The only issue I had with the circular buffer ... is that it's circular ...

A graph updated directly from the array will have a "Sweep chart" update mode effect, and I would prefer the "Strip chart" update mode effect. So here is what I did, but then I'm afraid I'm losing the point of using a circular buffer, since the bigger the buffer, the longer the get function takes.

05-16-2022 04:10 AM

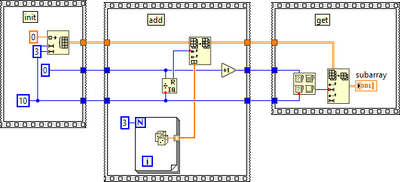

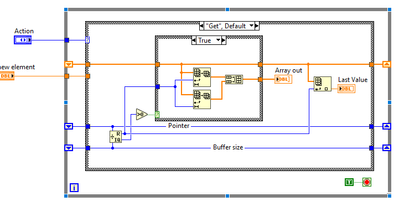

That image was a part of a conversation. The get part wasn't complete.

Sorry about that, the for loop is the way to do that.

Use the pointer and a Quotient & Remainder. If the Quotient is >0, you know the data has wrapped.

If not wrapped, get the first part of the array up to the pointer.

If wrapped, get the entire array, and rotate with the remainder.

This will give you the entire buffer in sequential order.
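In a text language, the Quotient & Remainder approach described above could look like the following Python sketch. It assumes the pointer counts the total number of elements ever written; `get_ordered` is a hypothetical name, and `collections.deque.rotate` plays the role of the rotate step.

```python
from collections import deque

def get_ordered(data, pointer):
    """Return the buffer contents from oldest to newest.

    data    -- the fixed-size storage of the circular buffer
    pointer -- total number of elements written so far (assumption)
    """
    quotient, remainder = divmod(pointer, len(data))
    if quotient == 0:
        # Not wrapped yet: only the first `pointer` slots hold data.
        return list(data[:pointer])
    # Wrapped: the oldest element sits at index `remainder`,
    # so rotate the whole array left by that amount.
    rotated = deque(data)
    rotated.rotate(-remainder)
    return list(rotated)

not_wrapped = get_ordered([10, 11, 12, 0, 0], pointer=3)
wrapped = get_ordered([5, 6, 2, 3, 4], pointer=7)
print(not_wrapped, wrapped)
```

In the second call the values 0 to 6 were written into a buffer of size 5, so 5 and 6 have overwritten the first two slots; the rotation restores chronological order.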

05-16-2022 05:00 AM

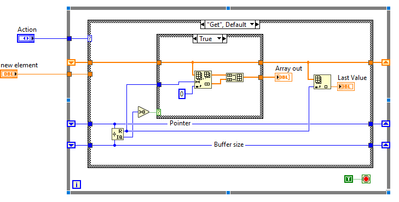

If I understood well:

05-16-2022 07:03 AM

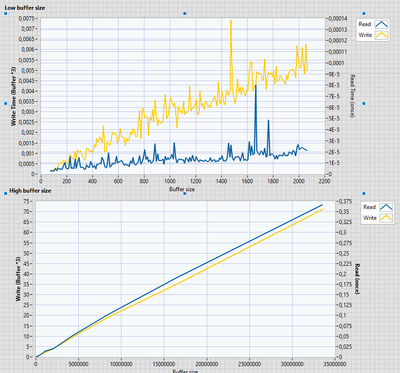

Just for the sake of it, I did some performance tests and graphed the time to write 3 times to the buffer and read 1 time against the buffer size, for large (up to 33 554 432 points) and small (up to 2100 data points) buffer sizes.

The results are somewhat predictable and rather linear.

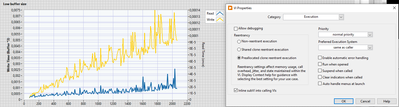

But for some reason, if I put my FGV in Time critical priority, it is (much) slower

vs

05-16-2022 11:00 AM

@VinnyAstro wrote:

If I understood well:

No, don't use a for loop. Split the array into the part before the pointer and the part after the pointer, then use Build Array to make them one array in the right order.
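As a rough Python equivalent of the split-and-build approach (a sketch, assuming a full buffer and a pointer that holds the index of the oldest element; `unwrap` is a made-up name):

```python
def unwrap(data, pointer):
    """Reorder a full circular buffer from oldest to newest.

    pointer -- index of the oldest element (assumption for this sketch)
    """
    before = data[:pointer]  # newest elements, written after the wrap
    after = data[pointer:]   # oldest elements, not yet overwritten
    return after + before    # two block operations, no per-element loop

ordered = unwrap([5, 6, 2, 3, 4], pointer=2)
print(ordered)
```

The work is done by two slices and one concatenation, which is the text-language counterpart of splitting the array and joining the halves with Build Array instead of indexing element by element in a loop.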

05-17-2022 03:57 AM

Ah yes ok makes sense.

I also realized I can use either Delete from Array or Array subset when the split is required, but I guess 1 Delete from array is better.

Also, for my Telemetry Data cluster: I decided to dynamically call the FGV for every telemetry that requires an array, store their reference in a Map, bundle this map with the other telemetry data (that doesn't require an array) and send away this cluster to other loops which will read out the FGV and the single data.

If I understand the mechanisms of references correctly, this should considerably reduce the copy sizes and also make the data manipulation easier thanks to the Map keys.
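The idea of passing a map of references instead of the arrays themselves can be illustrated with a Python analogy. This is only a sketch: Python object references are not LabVIEW VI references, and `HistoryBuffer`, the key names and the message layout are all hypothetical.

```python
class HistoryBuffer:
    """Stand-in for one dynamically called FGV instance holding an array."""

    def __init__(self, size):
        self.data = [0.0] * size
        self.count = 0

    def add(self, value):
        self.data[self.count % len(self.data)] = value
        self.count += 1

# Map from telemetry name to buffer reference, bundled with plain values.
buffers = {"voltage": HistoryBuffer(4), "current": HistoryBuffer(4)}
telemetry = {"buffers": buffers, "mode": "nominal"}

def reader(message):
    # The receiving loop looks the buffer up by key; no array is copied.
    return message["buffers"]["voltage"]

buffers["voltage"].add(3.3)
seen = reader(telemetry)
print(seen is buffers["voltage"], seen.data)
```

The message that travels between loops contains only the small map and the single values; the history arrays stay where they are and are reached through the key.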

05-17-2022 04:49 AM

@VinnyAstro wrote: If I understand the mechanisms of references correctly, this should considerably reduce the copy sizes and also make the data manipulation easier thanks to the Map keys.

Yes, references can prevent copies.

However, some (value, value signaling) property nodes will synchronize with the refresh rate of the screen.

So, what is faster depends a lot on the situation... Another reason to use the most pragmatic solution, and to optimize when needed.

05-17-2022 04:50 AM

@VinnyAstro wrote:

Ah yes ok makes sense.

I also realized I can use either Delete from Array or Array subset when the split is required, but I guess 1 Delete from array is better.

A delete is good. A rotate will also work. I don't expect much difference in performance, but you can always test.