Can I optimize writing a large data array to a text file?

09-28-2016 05:37 AM

Hi,

Here's my question:

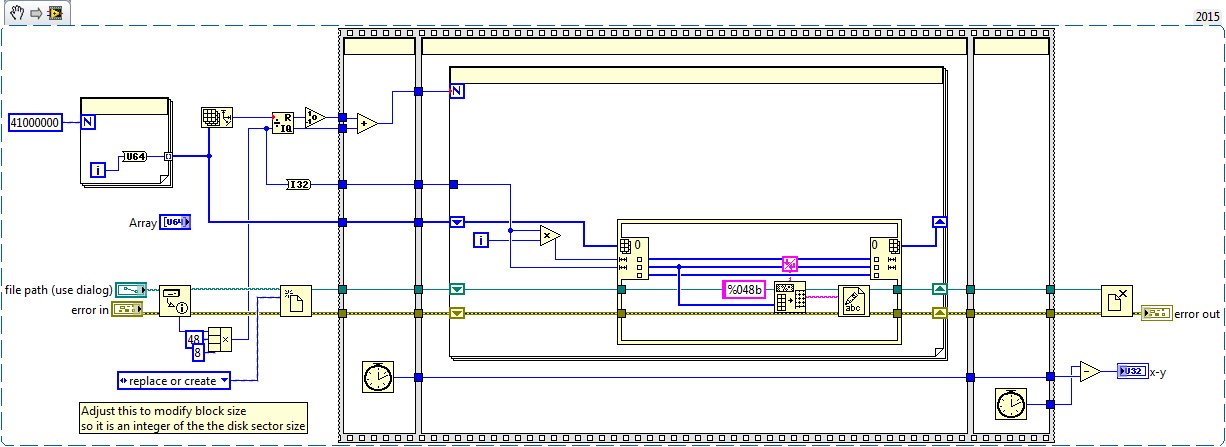

I have a large data array (large for me, at least): about 41M U64 elements.

However, I do not write this array directly to a file.

I need to convert each element to a specific format before writing it to the file:

For each element of the array:

- convert it to a 48-bit (48-character) binary string
- add an EOL character
- write it to the open file
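For reference, the per-element loop described above might look like this as a Python sketch (Python stands in for the LabVIEW diagram, which isn't shown; `write_naive` is a hypothetical name). It assumes every value fits in 48 bits and uses CR LF as the EOL, as on Windows. Note that Python's file object buffers internally, so unlike a per-element write in the VI, this does not necessarily issue one OS call per line.

```python
import os
import tempfile


def write_naive(values, path):
    """Baseline: format and write one element at a time, as in the original loop."""
    # newline="" stops Python from translating "\r\n" on Windows
    with open(path, "w", newline="") as f:
        for v in values:
            line = format(v, "048b")  # 48-character binary string (assumes v < 2**48)
            line = line + "\r\n"      # append the EOL as a separate step
            f.write(line)             # one write call per element


if __name__ == "__main__":
    out = os.path.join(tempfile.gettempdir(), "naive.txt")
    write_naive([1, 2, 3], out)
    print(os.path.getsize(out))  # 3 lines x 50 bytes = 150
```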

However, this operation takes a long time (about 5 min 10 s) on a decent PC.

That would be acceptable if I only had to do it once. But I don't: the operation may be repeated many times, so in the end it consumes too much time.

So my question is simple: is it possible to optimize my VI to save some time?

Thanks to all.

PS: I've attached the VI (LV 2015).

09-28-2016 07:15 AM

Let's divide this problem into two sub-problems: converting 41M U64 values into a string representation, and writing that representation to a file. Note that 41 million U64s is 41*8 = 328 MBytes, a "big array". Your output representation is a 48-character string plus an EOL (which on the PC is <CR><LF>, for a total of 48+2 = 50 bytes), so the output file is 205 MBytes.

I tackled the first part by generating a million random U64s and timing how long it took to convert them to 48-character strings and concatenate an EOL. The answer was 1.11 sec. I then did the same thing, but changed the format string on the Format Into String function to %048b\r\n, which tacks the EOL on directly, so I can eliminate the Concatenate String. This time it took 1.9 msec, a 500-fold improvement. Still, it's a "drop in the bucket" compared to the File I/O time.
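A rough Python analogue of this formatting experiment times a format-then-concatenate version against one where the EOL is part of the format string. It only mirrors the structure of the experiment: Python's relative costs differ from LabVIEW's, so don't expect the same 500-fold gap (or even the same winner). The function names are hypothetical.

```python
import random
import timeit


def fmt_then_concat(values):
    # Two steps per element: format the number, then concatenate the EOL
    return [format(v, "048b") + "\r\n" for v in values]


def fmt_with_eol(values):
    # One step per element: the EOL is part of the format string itself
    return ["{:048b}\r\n".format(v) for v in values]


if __name__ == "__main__":
    # 48-bit random values so every line is exactly 48 digits + CR LF
    values = [random.getrandbits(48) for _ in range(100_000)]
    for fn in (fmt_then_concat, fmt_with_eol):
        seconds = timeit.timeit(lambda: fn(values), number=3) / 3
        print(f"{fn.__name__}: {seconds * 1000:.1f} ms per 100k values")
```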

I suspect that File I/O can be sped up by writing larger buffers, maybe 100 lines at a time instead of one at a time. There will be a tradeoff between storage time and I/O time. I'll leave it to you to design an "experiment" similar to the one I described above to investigate the "formatting" timing.
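A minimal Python sketch of writing larger buffers, assuming 100 lines per write as suggested above. `write_batched` and `lines_per_write` are illustrative names; the right batch size would have to come from timing it on the target machine.

```python
import os
import tempfile


def write_batched(values, path, lines_per_write=100):
    """Write 48-digit binary lines, building and writing lines_per_write lines at a time."""
    if lines_per_write < 1:
        raise ValueError("lines_per_write must be >= 1")
    with open(path, "w", newline="") as f:
        for start in range(0, len(values), lines_per_write):
            batch = values[start:start + lines_per_write]
            # One string per batch, so one write call per batch instead of per line
            f.write("".join(f"{v:048b}\r\n" for v in batch))


if __name__ == "__main__":
    out = os.path.join(tempfile.gettempdir(), "batched.txt")
    write_batched(list(range(1000)), out, lines_per_write=100)
    print(os.path.getsize(out))
```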

Bob Schor

09-28-2016 07:54 AM

Hi,

Well, I'm not so sure about your computation of the file size...

I tried it:

I have an array of 45M U64s.

The text file created by my original VI is about 2 GB.

09-28-2016 08:16 AM

Slight correction there, Bob: 50 bytes times 41M is 2.05 GB; you dropped a zero.

Writing large blocks, though, will almost certainly help.
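The size arithmetic behind the correction, as a small Python check. The constants assume 48 binary digits plus CR LF per line and one byte per ASCII character.

```python
BYTES_PER_U64 = 8
BYTES_PER_LINE = 48 + 2  # 48 ASCII digits + CR LF, 1 byte per ASCII character


def sizes(n_values):
    """Return (array size in memory, text file size on disk) in bytes."""
    return n_values * BYTES_PER_U64, n_values * BYTES_PER_LINE


if __name__ == "__main__":
    for n in (41_000_000, 45_000_000):
        mem, disk = sizes(n)
        print(f"{n:,} values: array {mem / 1e6:.0f} MB, file {disk / 1e9:.2f} GB")
```

For 41M values this gives 328 MB in memory and 2.05 GB on disk; for the 45M test it gives 2.25 GB (about 2.1 GiB), in line with the roughly 2 GB file the original poster observed.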

Certified LabVIEW Developer.

09-28-2016 08:57 AM

Oops. Pesky decimal points! Yep, 2.05 GB, which agrees nicely with what the original poster sees.

BS

09-28-2016 08:57 AM - edited 09-28-2016 09:00 AM

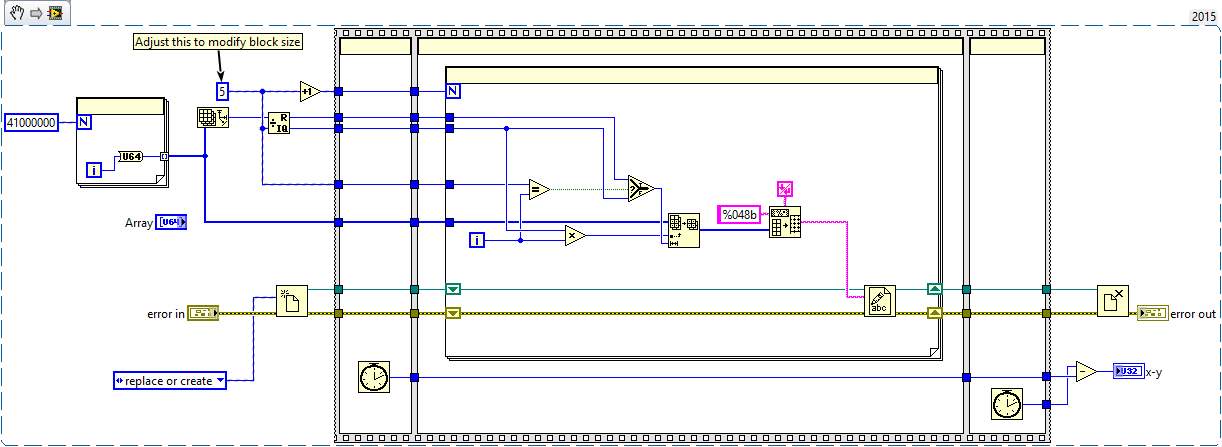

Actually, after thinking about it, I'd suggest a couple of steps:

A) Write more than one line to the file at a time, to make use of the file I/O buffer.

B) Use the Array to Spreadsheet String function to convert the data into multiline strings.

C) Break the array into smaller chunks and process one chunk at a time, to reduce the amount of data copying needed.

Here is a simple snippet I wrote that works decently fast (about 35 seconds in my one test with 41M values).
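Steps A to C can be sketched in Python as follows. This is not Jon's snippet (which is an image); it assumes `n_chunks` plays the role of the block size, and `to_spreadsheet_string` is a hypothetical stand-in for Array to Spreadsheet String with a `%048b` format.

```python
import os
import tempfile
from array import array


def to_spreadsheet_string(chunk):
    """Hypothetical stand-in for Array to Spreadsheet String with format %048b:
    one 48-digit binary line per element, each ending in CR LF."""
    return "".join(f"{v:048b}\r\n" for v in chunk)


def write_in_chunks(values, path, n_chunks=1000):
    """Split values into about n_chunks pieces (n_chunks >= 1); format and write one at a time."""
    n = len(values)
    chunk_len = max(1, -(-n // n_chunks))  # ceiling division, at least 1
    with open(path, "w", newline="") as f:
        for start in range(0, n, chunk_len):
            # Only one chunk's text exists in memory at any time
            f.write(to_spreadsheet_string(values[start:start + chunk_len]))


if __name__ == "__main__":
    data = array("Q", range(10_000))  # "Q" = unsigned 64-bit on common platforms
    out = os.path.join(tempfile.gettempdir(), "chunked.txt")
    write_in_chunks(data, out, n_chunks=7)
    print(os.path.getsize(out))
```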

Certified LabVIEW Developer.

09-28-2016 10:35 AM

Hi,

I tried your code. However, I don't clearly understand the impact of the block size: I used 5 and 10, for example, and got the same time (very close, in fact).

Still, I tried this modification in my application (which is still being debugged), and with 40.8M U64s it took 1 min 30 s to write the file instead of 5 min. That's great!

09-28-2016 10:55 AM

Well, after my Decimal Point Debacle, I decided to try to redeem myself with a speedy write. Jon's routine is faster than mine (on my machine, his took 52 seconds and mine 186), but mine is perhaps a bit simpler. Here it is, with comments:

- I'm generating 64-bit numbers from the bit representation of the Random Number generator. Because of the "rules" of Format Into String, I have to zero out the top 16 bits, so that's the extra code inside the generator. I'm not counting this in the timing, which starts right after this For Loop.

- I rearrange the array of 41 million values into a 2D array of 41 rows by a million columns. It might be significantly faster to instead "extract" 41 million-element sub-arrays, similar to Jon's code.

- I skip adding the EOL as I format the million-element string, then write it. Hmm, what if I refactored the array as 1 million rows of 41 columns? I'll time that, too.

- So changing the array as mentioned in the last comment makes it three seconds slower... oh, well.
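Bob's approach can be sketched in Python under some assumptions: `random.getrandbits` replaces the Random Number bit trick, and the helper names are hypothetical. The first print shows why the top 16 bits must be cleared: a field width of 48 is only a minimum, so a value with bit 63 set prints 64 digits.

```python
import os
import random
import tempfile

MASK_48 = (1 << 48) - 1  # keeps the low 48 bits, zeroing the top 16


def random_u48(n, seed=None):
    """Random 64-bit draws with the top 16 bits zeroed, so '048b' gives exactly 48 digits."""
    rng = random.Random(seed)
    return [rng.getrandbits(64) & MASK_48 for _ in range(n)]


def as_rows(values, n_rows):
    """Reshape a flat list into n_rows equal rows (like a 41 x 1,000,000 2D array)."""
    if n_rows < 1 or len(values) % n_rows:
        raise ValueError("len(values) must be a positive multiple of n_rows")
    width = len(values) // n_rows
    return [values[r * width:(r + 1) * width] for r in range(n_rows)]


def write_rows(values, path, n_rows):
    """Format each row as one multi-line string and write it with a single call."""
    with open(path, "w", newline="") as f:
        for row in as_rows(values, n_rows):
            f.write("".join(f"{v:048b}\r\n" for v in row))


if __name__ == "__main__":
    print(len(format(1 << 63, "048b")))  # 64: width 48 is only a minimum
    vals = random_u48(41_000, seed=0)
    write_rows(vals, os.path.join(tempfile.gettempdir(), "rows.txt"), n_rows=41)
```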

Bob Schor

09-28-2016 12:29 PM

@mhed wrote:

> Hi,
>
> I tried your code. However, I don't clearly understand the impact of the block size: I used 5 and 10, for example, and got the same time (very close, in fact).
>
> Still, I tried this modification in my application (which is still being debugged), and with 40.8M U64s it took 1 min 30 s to write the file instead of 5 min. That's great!

Excellent to hear it was faster.

The value of 5 was a guess; a larger number should give somewhat better results, I think. In a quick test I just did, 1000 reduced the time from 35 to 24 seconds. Basically, the idea here is to reduce the amount of memory that has to be allocated and copied by Array Subset.vi and Array to Spreadsheet String.vi, while still using the file I/O buffers fully.
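To see why a larger number could help, here is the per-chunk memory arithmetic. It assumes, as one reading of the post, that the block-size input is the number of chunks the 41M array is split into, with 8 bytes per value and 50 bytes of text per line; the function name is hypothetical. At 5 or 10 chunks every chunk's text is still hundreds of MB, which may be why those two settings behaved almost identically.

```python
def chunk_memory(n_values, n_chunks, bytes_per_value=8, bytes_per_line=50):
    """Approximate bytes per chunk: (array subset, formatted string)."""
    per_chunk = -(-n_values // n_chunks)  # elements per chunk, rounded up
    return per_chunk * bytes_per_value, per_chunk * bytes_per_line


if __name__ == "__main__":
    for n_chunks in (5, 10, 1000):
        subset, text = chunk_memory(41_000_000, n_chunks)
        print(f"{n_chunks:>5} chunks: subset {subset / 1e6:8.3f} MB, string {text / 1e6:8.3f} MB")
```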

Each time you call Array Subset and Array to Spreadsheet String, the system has to allocate enough memory to hold the values. In the case of Array Subset, it then has to copy the values from the original array to the new memory (the compiler might be smart enough to avoid this and just use pointers, since we are not changing the values, but I'm not sure). With Array to Spreadsheet String.vi, it has to allocate memory to hold the computed string values and then fill it. Note that this is significantly more space than the array block (50 bytes of text per 8-byte value), so converting everything at once could make it hard to find enough free memory without resorting to a page file or similar.
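Whether a subset copies is easy to see in Python, where slicing an `array.array` copies but slicing a `memoryview` does not. This is only an analogy for the copy-versus-pointer question above; it says nothing about what LabVIEW's compiler actually does. The function name is hypothetical.

```python
from array import array


def subset_view_vs_copy():
    """Compare a zero-copy memoryview slice with a copying array slice."""
    values = array("Q", range(10))
    view = memoryview(values)[2:5]  # shares values' buffer; nothing is copied
    copied = values[2:5]            # allocates a new array and copies 3 elements
    values[3] = 999                 # modify the original after slicing
    return view[1], copied[1]       # the view sees 999; the copy still holds 3


if __name__ == "__main__":
    print(subset_view_vs_copy())  # (999, 3)
```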

The File I/O is a bit more complex to explain, and it's been a long time since I last had to think about it, but in essence, when you use Write to File.vi, LabVIEW calls a Windows OS function and passes the string to it. The OS write function copies the data to a device buffer and then triggers the OS code that copies that data to the actual drive. The OS write function will also block any further calls until that data transfer is complete, which means the program stops until it times out or the transfer is done. The objective is to fill the buffer as much as possible with each data transfer, so that there are fewer blocked OS write calls, since each blocked call incurs a non-negligible time cost (a blocked call doesn't retry immediately after getting blocked; it waits a bit before trying again). Also factor in that each file write needs to update the file system information, so smaller writes add that delay as well. You can see this in action when you copy directories: a directory with one large file copies much faster than a directory with hundreds of small files, even if the large file takes up more space on the drive than the small files combined.
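To make the call-count side of this concrete, the Python sketch below writes the same bytes with unbuffered `os.write` calls of different block sizes and counts the calls. It does not model the blocking and retry behavior described above; timing each block size on a real drive is left as the experiment. `write_blocks` is a hypothetical helper.

```python
import os
import tempfile


def write_blocks(data, path, block_size):
    """Write data with unbuffered os.write calls of at most block_size bytes.

    Returns the number of write calls issued, to show how block size drives call count.
    """
    # O_BINARY exists only on Windows; it prevents CR LF translation there
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC | getattr(os, "O_BINARY", 0)
    fd = os.open(path, flags)
    calls = 0
    try:
        view = memoryview(data)
        offset = 0
        while offset < len(view):
            # os.write may write fewer bytes than asked for; advance by what it reports
            offset += os.write(fd, view[offset:offset + block_size])
            calls += 1
    finally:
        os.close(fd)
    return calls


if __name__ == "__main__":
    payload = (b"0" * 48 + b"\r\n") * 20_000  # 20,000 lines, 1,000,000 bytes
    out = os.path.join(tempfile.gettempdir(), "blocks.txt")
    for size in (50, 5_000, 1_000_000):
        print(size, write_blocks(payload, out, size))
```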

Certified LabVIEW Developer.

09-28-2016 05:36 PM

Try to write blocks in multiples of the disk sector size. Each ASCII character is 8 bits (1 byte). Try the following snippet; it seems to reduce the time by almost half on my computer.
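Since each line is 50 bytes, a block of whole lines only lines up with sector boundaries at certain sizes. The small Python helper below (hypothetical name) finds the smallest number of lines whose total size is a multiple of the sector size. 512 and 4096 bytes are common sector sizes but are assumptions here, and mcduff's snippet itself isn't reproduced.

```python
from math import gcd


def lines_per_aligned_block(line_bytes=50, sector_bytes=4096):
    """Smallest whole number of lines whose total size is a multiple of sector_bytes."""
    return sector_bytes // gcd(line_bytes, sector_bytes)


if __name__ == "__main__":
    for sector in (512, 4096):
        lines = lines_per_aligned_block(50, sector)
        print(f"{sector}-byte sectors: {lines} lines = {lines * 50} bytes per write")
```

With 50-byte lines this gives 256 lines (12,800 bytes) for 512-byte sectors and 2048 lines (102,400 bytes) for 4096-byte sectors; any multiple of those also stays aligned.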

Cheers,

mcduff