2ms scheduler on windows platform

03-23-2022 01:40 AM

Hi,

I have developed an application with Qt Creator on Ubuntu. It is used for MIL-STD-1553 communication with a DUT. The app sends and receives 32 words of data at a 2 ms interval. The laptop plus a Data Device Corporation (DDC BU-67103) USB device is configured as Bus Controller (BC), and the DUT is configured as Remote Terminal (RT).

I want to develop the same app on Windows using the LabVIEW support package provided by DDC. Is it possible to schedule a 32-word frame every 2 ms on Windows? If yes, are there any minimum system requirements for the laptop? Please suggest any additional programming tips to make it work as close to real time as possible.

My plan is to use a while loop with wait_until_next_ms configured to 2 ms. Within each 2 ms period I will update all the variables (32 words input and 32 words output). Please tell me whether this strategy is OK or whether there is a better way of doing it.

Laptop configuration: Acer TravelMate TMP-215-52 series, 10th-gen i5 processor, 16 GB RAM, 1 TB HDD (no SSD)

Regards

03-23-2022 04:13 AM

The Windows timer used has a resolution of 1 ms, so if the 2 ms period has to be very accurate I think you will have trouble. Have a look at "Accuracy of Software-Timed Applications in LabVIEW".
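One way to judge how much a software-timed 2 ms loop wanders on a particular laptop is to measure it. The sketch below is in Python rather than LabVIEW and is only loosely analogous to a while loop with a wait-until-next-multiple call: it sleeps until absolute deadlines spaced one period apart and records how late each wake-up was. The names `run_periodic` and `work` are hypothetical, and the numbers it prints depend entirely on the machine and OS load.

```python
import time


def run_periodic(period_s, iterations, work=lambda i: None):
    """Call work(i) once per period and return each wake-up's lateness in seconds."""
    lateness = []
    start = time.perf_counter()
    for i in range(iterations):
        # Absolute deadline: a late wake-up does not push later deadlines back.
        deadline = start + i * period_s
        remaining = deadline - time.perf_counter()
        if remaining > 0:
            time.sleep(remaining)  # the OS may wake us later than requested
        lateness.append(time.perf_counter() - deadline)
        work(i)  # placeholder for "update 32 words in, 32 words out"
    return lateness


if __name__ == "__main__":
    late = run_periodic(0.002, 500)
    mean_ms = 1e3 * sum(late) / len(late)
    worst_ms = 1e3 * max(late)
    print(f"mean lateness {mean_ms:.3f} ms, worst {worst_ms:.3f} ms")
```

The worst-case value over a long run matters more than the mean, since a single late frame is what a strict 2 ms schedule cannot tolerate.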

03-24-2022 10:39 PM

I don't know anything about the Driver you will be using, nor whether your application requires treating each data point as it comes in (meaning your processing loop has to run at 500 Hz, a strict 2 ms window) or whether you can, say, acquire 100 points at a time (so you get a "batch" every 0.2 seconds).

Microsoft Windows is not a Real-Time OS, so you can't expect accurate timing every 2 ms, especially if you are also doing disk I/O or other processing of the incoming data. But LabVIEW is also a Data Flow language, which means that if you loosen the timing requirements by taking 100 samples at a time, you can acquire and stream the data to disk in parallel loops (so-called Producer/Consumer Design Pattern) very reliably, letting your acquisition Driver (or DAQmx) provide the accurate hardware "clock" the system requires.

Bob Schor
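The producer/consumer idea described above can be sketched outside LabVIEW as two threads joined by a queue. This is a minimal Python illustration, not DDC or DAQmx code: `fake_read_batch` is a hypothetical stand-in for a driver call that returns a hardware-timed batch, and the batch size of 100 follows the example in the post.

```python
import queue
import threading

BATCH_SIZE = 100  # 100 samples at 2 ms each is one batch every 0.2 s


def fake_read_batch(batch_index):
    """Hypothetical stand-in for a driver call returning one batch of samples."""
    base = batch_index * BATCH_SIZE
    return [base + i for i in range(BATCH_SIZE)]


def producer(q, n_batches, read_batch):
    """Acquisition loop: only reads batches and hands them over."""
    for b in range(n_batches):
        q.put(read_batch(b))
    q.put(None)  # sentinel: tells the consumer to stop


def consumer(q, handle_batch):
    """Processing loop: logging or disk I/O happens here, off the acquisition path."""
    while True:
        batch = q.get()
        if batch is None:
            break
        handle_batch(batch)


def run(n_batches):
    q = queue.Queue()
    received = []
    t_cons = threading.Thread(target=consumer, args=(q, received.extend))
    t_prod = threading.Thread(target=producer, args=(q, n_batches, fake_read_batch))
    t_cons.start()
    t_prod.start()
    t_prod.join()
    t_cons.join()
    return received


if __name__ == "__main__":
    samples = run(3)
    print(len(samples), "samples received in order:", samples == sorted(samples))
```

The queue decouples the two loops, so a slow write in the consumer delays processing but does not stall acquisition as long as the queue can absorb the backlog.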

03-25-2022 05:15 AM - edited 03-25-2022 05:20 AM

Performance isn't an issue. You can send data at MHz rates if you want to.

Accuracy is an issue. But it's the same issue for the QT Ubuntu application.

The OS will cause jitter, and that will be hard to prevent. You avoid lag...

Even if you can time your application accurately, it's only accurate in relation to your computer's clock. Computer clocks can be very inaccurate (like ±10 s per day).
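To put the ±10 s per day figure in proportion: it is about 116 ppm, which is roughly 0.23 µs of error per 2 ms frame but about 0.42 s of accumulated offset per hour. A small Python sketch of that arithmetic follows; the function names are made up for illustration, and the 10 s per day value is the rough figure from the post, not a measured one.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def drift_fraction(drift_s_per_day):
    """Clock error as a fraction of elapsed time."""
    return drift_s_per_day / SECONDS_PER_DAY


def drift_over(interval_s, drift_s_per_day):
    """Accumulated clock error, in seconds, over interval_s of elapsed time."""
    return interval_s * drift_fraction(drift_s_per_day)


if __name__ == "__main__":
    d = 10.0  # assumed drift in seconds per day, from the post
    print(f"drift: {drift_fraction(d) * 1e6:.1f} ppm")
    print(f"per 2 ms frame: {drift_over(0.002, d) * 1e6:.3f} us")
    print(f"per hour: {drift_over(3600, d):.3f} s")
```

So the per-frame error from clock drift is far below OS jitter, while the long-term offset against another device's clock is what grows.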

You might look into timed loops (although I don't like them).

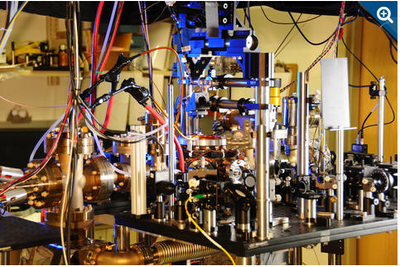

Of course there are better ways: an FPGA doing all the communication, externally clocked with a rubidium atomic clock and GPS-synchronized for absolute time. How expensive do you want it to be (you can also use a ytterbium lattice clock)?

Please let us know why you need 2 ms accuracy. Does it need to be accurate, or is it just a guideline? What is using this 2 ms data? Is that device accurate? Its clock will be different from your laptop's clock. Who's the boss? And so on...

03-25-2022 02:27 PM

wiebe@CARYA wrote:

Of course there are better ways. ...you can also use a ytterbium lattice clock

I had to look that up.

Electrical tape holding things together does not inspire great confidence.

Former Certified LabVIEW Developer (CLD)

03-28-2022 02:58 AM

Since this conversation is degenerating a bit I will tell you a little anecdote. Many years ago, around the time of Windows NT, there was a white paper suggesting that it could be used in real-time applications. So we thought "let's give it a go". One of the tests I did was to measure the latency between an interrupt and entry to the interrupt handler. This was run for many hours and the maximum latency was recorded. It was consistent enough for our needs, at a millisecond or so (it was a pretty slow CPU). EXCEPT ... at one particular time during the night it suddenly gave a reading close to 2 seconds! The time this occurred was fairly consistent, about 2 hours after setting the test running in the evening, so we thought "what happens at that time of night?" Was it the lights all being turned off? The cleaners dusting the keyboard?

After a few days I found the answer: it was the screensaver starting, which prevented anything else from running on the CPU no matter how high its priority!