Improving deployed executable startup/reboot/longevity reliability

Solved! 12-29-2023 11:24 PM

Hi fellow wireworkers,

I have had success running my deployed LV startup executable on the RPi Zero 2 W continuously for many days (at least 9, to be exact). I now want to solve two problems:

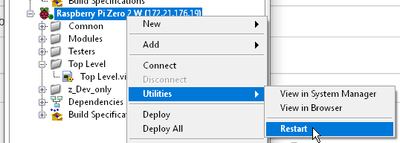

- Startup after a cold start or reboot of the RPi is inconsistent: about 30% of the time the executable fails to run, sometimes failing to launch 3-4 reboots in a row. I reboot either via the RPi GUI (shutdown/reboot), via a forced watchdog reboot, or sometimes by unplugging and replugging the USB cable to power-cycle (my app is not doing any SD card writes at the time). Restarting the LabVIEW service via the LV IDE \Utilities\Restart menu gives, I *think*, more reliable restarts (I still need to quantify how reliable, though).

- Automatically recovering from a lock up of the startup executable after many days of stable running.

On point 1, is that a common situation that other developers here see? Any thoughts on how I could improve the reliability? I need it to recover automatically, i.e. if a reboot fails to launch the startup EXE, I must keep auto-rebooting until it launches OK.

For point 2, I am pursuing two possible ideas:

a) Use the RPi internal watchdog. I have succeeded in installing and enabling the watchdog per many other RPi-centric articles, e.g. this. My next step is for my LV exe to disable the wd_keepalive daemon service (using the SSH trick, sudo commands, etc.) and then have the exe pat/tickle the watchdog every 14 seconds. OR

b) Write my own watchdog (a BASH script, or even a second LV exe running in parallel at startup, if possible) that just restarts the LabVIEW service if the main app doesn't tickle it. It would use the Linux command that achieves the same as the \Utilities\Restart menu I mentioned above:

sudo systemctl restart labview.service
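
Option b) could be sketched roughly as follows, in Python rather than BASH purely for illustration. It assumes the main app "tickles" the watchdog by rewriting a heartbeat file; the file path, the 30 s staleness limit, and the 60 s grace period are hypothetical choices, not anything LINX provides. Only the restart command comes from the post above.

```python
import os
import subprocess
import time

# Restart command quoted in the post above.
RESTART_CMD = ["sudo", "systemctl", "restart", "labview.service"]


def heartbeat_age(path, now=None):
    """Return seconds since `path` was last modified, or None if it is missing."""
    now = time.time() if now is None else now
    try:
        return now - os.path.getmtime(path)
    except OSError:
        return None


def is_stale(path, max_age_s, now=None):
    """A missing heartbeat file, or one older than max_age_s, counts as stale."""
    age = heartbeat_age(path, now)
    return age is None or age > max_age_s


def supervise(path, max_age_s=30.0, poll_s=5.0, grace_s=60.0,
              run=subprocess.run, sleep=time.sleep, max_polls=None):
    """Poll the heartbeat file and restart the service whenever it goes stale.

    Runs forever unless max_polls is given. Returns the number of restarts issued.
    """
    restarts = 0
    polls = 0
    while max_polls is None or polls < max_polls:
        polls += 1
        if is_stale(path, max_age_s):
            run(RESTART_CMD, check=False)
            restarts += 1
            sleep(grace_s)  # let the service come back up before judging it again
        else:
            sleep(poll_s)
    return restarts
```

A supervisor like this would be started at boot with something like `supervise("/srv/chroot/labview/usr/local/heartbeat.txt")` (a hypothetical file name), while the LabVIEW app rewrites that file every few seconds.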

Any thoughts on a) or b)?

Thanks

12-30-2023 04:50 AM

Hi Peter,

Is your application using the network interface?

On power-up, is it possible that your start-up application is running before the Raspberry Pi has started the network device and obtained an IP address?

There is an option in raspi-config to wait for network at boot. It may be worth enabling this.

Check that all the inputs to your main vi have default values assigned.

12-30-2023 05:16 AM

FWIW I have located and am attaching LabVIEW's failure log located at /srv/chroot/labview/var/local/natinst/log/LabVIEW_Failure_Log.root.txt

The failure description is always "LabVIEW caught fatal signal" and the 2 possible failure Reasons are:

####

#Date: Thu, Dec 28, 2023 08:33:45 AM

#Desc: LabVIEW caught fatal signal

21.0 - Received SIGSEGV

Reason: invalid permissions for mapped object

....

####

#Date: Fri, Dec 29, 2023 06:14:41 AM

#Desc: LabVIEW caught fatal signal

Reason: address not mapped to object
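
To quantify how often each failure reason occurs, the log could be tallied with a small script. This is a minimal sketch that assumes every entry starts with a `####` line and uses the `#Date:`, `#Desc:` and `Reason:` prefixes seen in the excerpt above; the real file contains more lines per entry (elided above), which this parser simply ignores.

```python
import re
from collections import Counter

# Matches lines such as "21.0 - Received SIGSEGV".
SIGNAL = re.compile(r"Received (SIG[A-Z0-9]+)")


def parse_failure_log(text):
    """Split the log into one dict per '####'-delimited entry."""
    entries = []
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line == "####":
            current = {}
            entries.append(current)
            continue
        if current is None or not line:
            continue  # skip blank lines and anything before the first entry
        if line.startswith("#Date:"):
            current["date"] = line[len("#Date:"):].strip()
        elif line.startswith("#Desc:"):
            current["desc"] = line[len("#Desc:"):].strip()
        elif line.startswith("Reason:"):
            current["reason"] = line[len("Reason:"):].strip()
        else:
            match = SIGNAL.search(line)
            if match:
                current["signal"] = match.group(1)
    return entries


def count_reasons(entries):
    """Tally entries by their Reason line ('unknown' if an entry has none)."""
    return Counter(entry.get("reason", "unknown") for entry in entries)
```

Reading the file at the path given above and passing its text to `parse_failure_log`, then `count_reasons`, gives a per-reason count that can be compared before and after a change.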

12-30-2023 05:29 AM - edited 12-30-2023 05:36 AM

Hi Andy, thanks for replying! I sent my other reply, with the log file attached, before I realised you had replied.

I'm assuming the intermittent failure to run after a restart that I see is not expected, or is at least unusual.

To answer your questions:

>Is your application using the network interface?

No, it isn't. The main things it does before the RPi has fully booted are:

- create and write to text files at /srv/chroot/labview/usr/local/*.txt

- set some GPIO lines

- poll the USB serial port for data

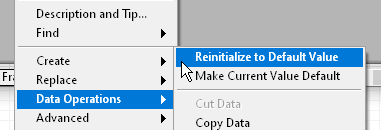

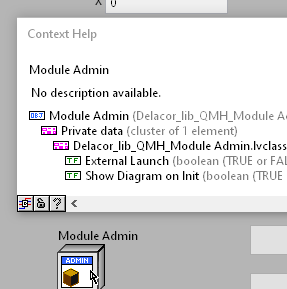

>Check that all the inputs to your main vi have default values assigned.

OK, that is a suggestion out of left field! The Main VI has only one input, called Module Admin (per below). Don't default values exist for all controls and indicators?

12-30-2023 05:54 AM

I would definitely try to wait for full startup before doing much serious work. The GPIO access in particular looks suspicious. Before the host OS is fully started, the device entries mapped into the chroot may actually point to non-existent device entries on the host. The file system could also be affected, but I would expect that to be a bit more robust and not just cause a fatal SIGSEGV if it is not yet fully available.

Since it works much of the time, the needed delay may be relatively short and could simply be hardwired. You could also try the newer VI that checks for network availability, which has been present since 2019 or so.
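
As an alternative to a hardwired delay, the idea of waiting until the device entries actually exist could look something like the sketch below. It is written in Python only to show the logic; in a LabVIEW app the same loop would be built from file-exists checks. Which device nodes matter is an assumption (for example `/dev/gpiomem` on the host), so the paths are left as a parameter.

```python
import os
import time


def wait_for_paths(paths, timeout_s=60.0, poll_s=0.5,
                   exists=os.path.exists, clock=time.monotonic, sleep=time.sleep):
    """Block until every path exists or the timeout expires.

    Returns the list of paths still missing (empty list means success).
    """
    deadline = clock() + timeout_s
    while True:
        missing = [p for p in paths if not exists(p)]
        if not missing or clock() >= deadline:
            return missing
        sleep(poll_s)
```

The caller would only start touching GPIO once the returned list is empty, and could log the missing paths otherwise, which also records how long the host really needs.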

12-30-2023 06:43 AM

Thank you for chiming in, Rolf!

From your reply it does seem that even a 30% restart failure rate is unexpected. I note the executable runs, and thus sets GPIO lines, at about the 20 s mark post-restart, about 5-10 seconds before the RPi's desktop appears on its display port. So I will begin by inserting a very large delay of, say, 30 to 60 seconds just to see if it solves the problem. If it does, I can work backwards from there to better time when I can give my exe the freedom it wants (e.g. using the network availability VI or by inspecting other status indicators of the O/S yet to be elucidated).
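
One candidate OS status indicator is systemd's own boot state, reported by `systemctl is-system-running`. The sketch below polls it until boot has finished. It assumes `systemctl` is reachable from wherever the check runs (that may not hold inside the LabVIEW chroot) and treats `degraded` (boot finished, but some unit failed) as ready, which is a judgment call.

```python
import subprocess
import time

# States in which systemd has finished booting.
READY_STATES = {"running", "degraded"}


def system_state(run=subprocess.run):
    """Return the state string printed by `systemctl is-system-running`."""
    result = run(["systemctl", "is-system-running"],
                 capture_output=True, text=True, check=False)
    return result.stdout.strip()


def wait_until_booted(timeout_s=120.0, poll_s=1.0, get_state=system_state,
                      clock=time.monotonic, sleep=time.sleep):
    """Poll the boot state; return the ready state reached, or None on timeout."""
    deadline = clock() + timeout_s
    while True:
        state = get_state()
        if state in READY_STATES:
            return state
        if clock() >= deadline:
            return None
        sleep(poll_s)
```

Logging the elapsed time until `wait_until_booted()` returns would give a measured replacement for the 30 to 60 second guess.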

I may revisit my watchdog question once I have solved the reboot issue given the latter must be reliable before I implement the former.

01-02-2024 07:17 AM

The error is a SEGMENTATION FAULT! That is what causes Linux to abort the LabVIEW service. I know that was already evidenced in my error logs above, but I'm only now learning more about the possible causes.

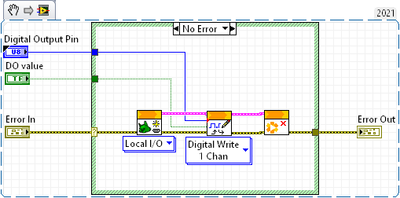

For the past couple of months in the dev environment I didn't realise why I randomly had to reset the connection (i.e. restart the LabVIEW service under the hood). Contrary to my reports above, this is not just happening at startup for me. By intentionally stressing the system I am now able to get SIGSEGV segmentation fault errors coincident with roughly every 10th or 20th toggling of some GPIO pins (and writing to a file), most likely caused by the GPIO R/W actions.

I am narrowing down the culprit, I hope, but it has been a learning experience to get there. The more experienced among you will realise how unusual it is for a native LabVIEW program to generate a segmentation fault. It is unlikely to be a bug of the programmer's unless they are calling an external library and using incorrect addressing (not my situation).

I am not in the office again for another 10-12 hours (to physically press the push buttons a random number of times to eventually trigger the fault), but I am looking forward to trying out this "fix", which someone else stumbled across for the RPi 3 some 7 years ago! Basically, disabling the Remote GPIO feature of the RPi was the fix. I hope this is the problem, otherwise I must dig deeper. I'll keep you posted.

01-04-2024 01:00 AM

I found the main culprit causing the BSOD-equivalent crashes during execution! (It was NOT solved by disabling the Remote GPIO setting in the RPi.) The culprit: I was calling the following code (Open, Write GPIO, Close) twice in succession without any delay between the calls.

Inserting a delay of at least 15-20 ms solved the "BSOD" equivalent I was getting, i.e. the segmentation fault that crashes the LabVIEW service.

Now you might be asking why I do an open and close for each of my GPIO writes (and I'm inclined to change it). It was because I was doing multiple asynchronous writes in parallel. I initially solved that problem by making the above an atomic operation, as I found I could not have multiple instances of Open Local.vi in my code. I could only have one instance, so I would have had to share the LINX resource reference globally amongst all my asynchronous modules, something I put aside at the time. I suspect/hope that if I were to do that I could then call Digital Write.vi at intervals much shorter than 10-15 ms; in fact, perhaps I should be able to call it with no delay without causing this RPi BSOD! I am still experiencing this a little at startup, per my original post, so I will soon go through my init routines and insert delays to confirm I can now solve both problems, at which point the need for a watchdog will drop to a very low priority.
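
The pattern described here (one shared resource, many asynchronous callers, and a minimum gap between successive accesses) can be illustrated outside LabVIEW. The Python sketch below is only an analogy for the block diagram: `write` stands in for the Open/Write GPIO/Close sequence, and the 20 ms default mirrors the delay that fixed the crash. In LabVIEW the equivalent would typically be a non-reentrant subVI or a semaphore around the write.

```python
import threading
import time


class SerializedWriter:
    """Funnel writes from many threads through one lock, at least min_gap_s apart."""

    def __init__(self, write, min_gap_s=0.02, clock=time.monotonic, sleep=time.sleep):
        self._write = write          # stand-in for the Open/Write GPIO/Close sequence
        self._min_gap_s = min_gap_s
        self._clock = clock
        self._sleep = sleep
        self._lock = threading.Lock()
        self._last_done = None       # time the previous write finished

    def __call__(self, *args):
        with self._lock:             # only one caller touches the resource at a time
            if self._last_done is not None:
                wait = self._min_gap_s - (self._clock() - self._last_done)
                if wait > 0:
                    self._sleep(wait)  # enforce the minimum spacing between writes
            try:
                return self._write(*args)
            finally:
                self._last_done = self._clock()
```

Every module would call the same `SerializedWriter` instance instead of opening the resource itself, so back-to-back writes can no longer happen regardless of how the callers are scheduled.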

BTW, as an aside, it takes only 3 seconds to restart the LabVIEW service and have the startup.rtexe running again, which is nice and fast. It takes much longer to restart the RPi (20-ish seconds before the startup.rtexe is running), so if I ever need a watchdog I will do it at two levels: one to restart the LabVIEW service if it or my app stops, and another to restart the RPi if the first method somehow fails.
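
The two-level idea amounts to a small escalation rule: try the cheap 3-second service restart a few times, then fall back to rebooting the RPi. A minimal sketch, in which the threshold of three failed service restarts is an arbitrary assumption and the caller is responsible for counting failures:

```python
import subprocess

RESTART_SERVICE = ["sudo", "systemctl", "restart", "labview.service"]  # from this thread
REBOOT_HOST = ["sudo", "systemctl", "reboot"]


def choose_action(failed_restarts, max_service_restarts=3):
    """Pick the recovery level from how many service restarts in a row did not help."""
    if failed_restarts < max_service_restarts:
        return "restart-service"
    return "reboot"


def recover(failed_restarts, run=subprocess.run, max_service_restarts=3):
    """Run the chosen recovery command and return which level was used."""
    action = choose_action(failed_restarts, max_service_restarts)
    command = RESTART_SERVICE if action == "restart-service" else REBOOT_HOST
    run(command, check=False)
    return action
```

The failure counter would need to be reset whenever the app is seen running normally again, otherwise a single bad day eventually forces a reboot.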

My next and hopefully last report back here will be to confirm I solved the startup BSOD that currently happens about 30% of the time and I suspect has the same root cause.

01-05-2024 01:49 AM

A mix of good and bad news. The good news is that some GPIO writes at startup (per my last reply) were causing the segmentation fault error, so I solved those.

The bad news is that the remaining startup error now seems to be outside of my control (I even deleted the startup.rtexe file and still get the error). When I perform a cold reboot (or possibly just a restart), about 1 in every 3 times Linux is unable to mount various directories, reporting:

- "....E: 10mount: mount: /run/schroot/mount/lv/dev/pts: not mount point or bad option....." or

- "...E: 10mount: mount: /run/schroot/mount/lv/proc: not mount point or bad option....." or

- "...E: 10mount: mount: /run/schroot/mount/lv/sys: not mount point or bad option....."

(complete error for first two copied below at [1],[2])

I've checked those DIRs and they certainly exist when I look.

Does anyone have any suggestions as to the cause, or my next debugging steps ?

[1]

pi@raspberrypi:~ $ sudo systemctl status labview.service

● labview.service - LabVIEW 2021 chroot run-time daemon

Loaded: loaded (/etc/systemd/system/labview.service; enabled; vendor preset: enabled)

Active: failed (Result: exit-code) since Fri 2024-01-05 17:49:34 AEDT; 6min ago

Process: 481 ExecStartPre=/usr/sbin/schroot-lv-start.sh (code=exited, status=1/FAILURE)

Process: 591 ExecStopPost=/usr/bin/schroot --end-session -c lv (code=exited, status=0/SUCCESS)

CPU: 848ms

Jan 05 17:49:32 raspberrypi systemd[1]: Starting LabVIEW 2021 chroot run-time daemon...

Jan 05 17:49:33 raspberrypi schroot-lv-start.sh[494]: E: 10mount: mount: /run/schroot/mount/lv/dev/pts: not mount point or bad option.

Jan 05 17:49:33 raspberrypi schroot-lv-start.sh[484]: E: lv: Chroot setup failed: stage=setup-start

Jan 05 17:49:33 raspberrypi systemd[1]: labview.service: Control process exited, code=exited, status=1/FAILURE

Jan 05 17:49:34 raspberrypi systemd[1]: labview.service: Failed with result 'exit-code'.

Jan 05 17:49:34 raspberrypi systemd[1]: Failed to start LabVIEW 2021 chroot run-time daemon.

pi@raspberrypi:~ $

[2]

pi@raspberrypi:~ $ sudo systemctl status labview.service

● labview.service - LabVIEW 2021 chroot run-time daemon

Loaded: loaded (/etc/systemd/system/labview.service; enabled; vendor preset: enabled)

Active: failed (Result: exit-code) since Fri 2024-01-05 18:28:21 AEDT; 1min 11s ago

Process: 474 ExecStartPre=/usr/sbin/schroot-lv-start.sh (code=exited, status=1/FAILURE)

Process: 568 ExecStopPost=/usr/bin/schroot --end-session -c lv (code=exited, status=0/SUCCESS)

CPU: 747ms

Jan 05 18:28:19 raspberrypi systemd[1]: Starting LabVIEW 2021 chroot run-time daemon...

Jan 05 18:28:20 raspberrypi schroot-lv-start.sh[493]: E: 10mount: mount: /run/schroot/mount/lv/proc: not mount point or bad option.

Jan 05 18:28:20 raspberrypi schroot-lv-start.sh[483]: E: lv: Chroot setup failed: stage=setup-start

Jan 05 18:28:20 raspberrypi systemd[1]: labview.service: Control process exited, code=exited, status=1/FAILURE

Jan 05 18:28:21 raspberrypi systemd[1]: labview.service: Failed with result 'exit-code'.

Jan 05 18:28:21 raspberrypi systemd[1]: Failed to start LabVIEW 2021 chroot run-time daemon.

pi@raspberrypi:~ $
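
As one possible debugging step, the failing mount targets could be tallied across many boots to see whether the failure always hits the same directory or moves around. The sketch below assumes the error line format shown in [1] and [2] and expects the text of the service's journal (for example the output of `journalctl -u labview.service`) as input; it diagnoses nothing by itself.

```python
import re
from collections import Counter

# Matches e.g. "E: 10mount: mount: /run/schroot/mount/lv/proc: not mount point or bad option."
MOUNT_ERROR = re.compile(
    r"E: 10mount: mount: (?P<target>\S+): not mount point or bad option"
)


def failing_mount_targets(journal_text):
    """Count how often each mount target appears in a schroot mount error."""
    return Counter(m.group("target") for m in MOUNT_ERROR.finditer(journal_text))
```

If the counts are spread evenly over `/dev/pts`, `/proc` and `/sys`, that would hint at a timing problem in the chroot setup as a whole rather than a problem with one particular directory.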

01-05-2024 07:14 AM
