GPU IMAQ DL Models

03-30-2020 04:18 PM

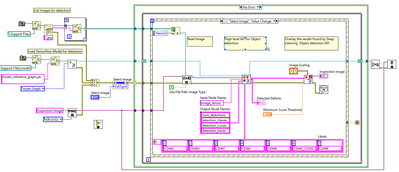

Currently I am working with this example, which uses the IMAQ DL Models block to detect objects with a neural network. It is working pretty well so far.

My problem is that it runs slowly: it can take 4 to 5 seconds to get results, so I want to use the GPU in my computer. However, I have not found a way to run the computation on the GPU. I have seen some examples in forums and elsewhere, but most of them are not very helpful.

I just need a direction to take, since I am really stuck here.

My GPU is:

Nvidia GeForce GTX 1070.

03-31-2020 05:11 AM

First of all, you should check whether the model can run on the GPU in Python. If it can't, there is no way LabVIEW can run it on the GPU either.

Also, you could try using the Python Node to run the model on the GPU, I guess. That way you can make sure the GPU is doing the math.

FYI, another thing that might help: if you're using an Intel CPU, you most likely have an Intel integrated GPU, and you can try converting your TensorFlow model to an OpenVINO model, which can take advantage of Intel's GPU. Sure, it is not as powerful as an Nvidia 1070, but compared to the CPU, even an Intel GPU has many more small processors that can handle data concurrently, which might help you get your results faster.
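For the Python-side check, something like the rough sketch below might be a starting point. It assumes the model is a TensorFlow model and that TensorFlow 2.1 or later is installed in the Python environment you use; the function names `list_gpus` and `describe` are made up for illustration and are not part of any NI or TensorFlow API.

```python
"""Hypothetical helper: report whether TensorFlow can see a GPU."""


def list_gpus():
    """Return the GPU device names TensorFlow sees, or None if TensorFlow is missing."""
    try:
        import tensorflow as tf  # assumption: TensorFlow 2.1 or later
    except ImportError:
        return None
    # list_physical_devices("GPU") returns an empty list when no usable GPU is found
    return [dev.name for dev in tf.config.list_physical_devices("GPU")]


def describe(gpus):
    """Turn the result of list_gpus() into a readable message."""
    if gpus is None:
        return "TensorFlow is not installed in this Python environment"
    if not gpus:
        return "TensorFlow is installed but sees no GPU; inference would run on the CPU"
    return "TensorFlow sees GPU(s): " + ", ".join(gpus)


if __name__ == "__main__":
    print(describe(list_gpus()))
```

If this reports no GPU, the usual suspects are a CPU-only TensorFlow build or a missing or mismatched CUDA/cuDNN installation, and that would need sorting out before anything on the LabVIEW side can help.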

03-31-2020 10:30 AM

I know there's a CUDA toolkit for LabVIEW. I've played with it a few times but never had a project for it. I think you could take advantage of it here, though it may require some refactoring.