Haptics for Tumour Detection in Surgery

Contact Information

University: University of Leeds

Team Member(s): James Chandler, Matt Dickson, Earle Jamieson, Thomas Mueller, Thomas Reid

Faculty Advisors: Dr. Peter Culmer, Dr. Rob Hewson

Email Address: mn07jhc@leeds.ac.uk

Project Information

Title: Haptics for Tumour Detection in Surgery

Description:

Our project is concerned with developing a haptic system that delivers force feedback to a user as they virtually prod and manipulate (palpate) a simulated liver with an integrated tumour. The potential application for a system of this nature is the remote palpation of human tissues during minimally invasive surgery (MIS), allowing the established benefits of these techniques to be retained with the added diagnostic tool of tissue palpation. We have developed a physical testing system that palpates silicone tissue models, recording the response forces across the surface using NI LabVIEW and NI CompactDAQ. These data, along with those from FEA models, are integrated into our custom haptic surgical system, developed within the LabVIEW environment.

Products:

NI Hardware Products - NI-USB-6008 DAQ, NI CompactDAQ-9178 with modules: NI9205, NI CRIO-9263, NI9403

LabVIEW Products - Connectivity Toolkit (Call Library function), 3D graphics toolkit, MathScript, NI-DAQmx, VRML file load

Additional Hardware - PHANToM Omni, Flexiforce sensors, Firgelli linear F12 actuators, MotorMind C motor controller with custom circuit board

The Challenge:

With their numerous advantages over open surgery, minimally invasive laparoscopic and robotic procedures have become increasingly common within hospitals. However, with current technologies the surgeon loses all or most of their sense of force and touch with the soft tissues of the body and therefore cannot perform palpation techniques used within open surgery to assess the health of tissues and detect any internal abnormalities. The field of haptics (the science of force and touch) gives an opportunity to bridge this gap and allow force and touch feedback during Minimally Invasive Surgery (MIS).

The Solution:

Our solution is the development of a system capable of providing force and touch feedback to the surgeon in real time, allowing in-vivo tumour detection. The areas of the project presented here are Physical Tissue Measurement, Finite Element Modelling and the Haptic Surgical System.

Physical Experimentation:

A physical testing system has been developed in order to generate response force surfaces of silicone models. Steel ball bearings of different sizes embedded at different depths are used to simulate tumours. A force-sensitive resistor (Flexiforce A201), integrated into the custom sensor probe shown in Figure 1, is used to measure response forces at discrete points across the tissue domain.

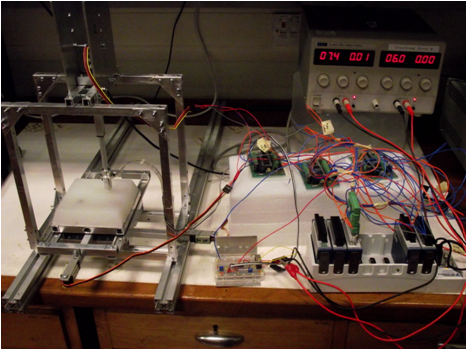

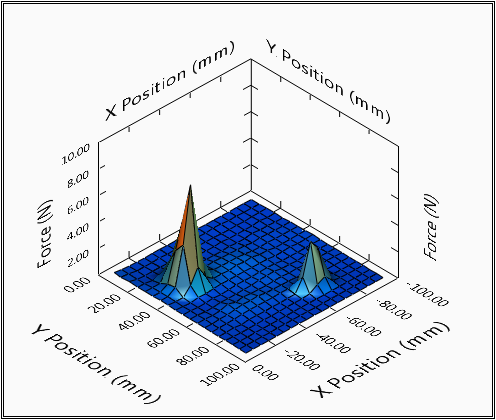

A LabVIEW program is used to control the movement of the probe relative to the silicone models along the x, y and z axes. The full system is shown in Figure 2. Data acquisition and control are carried out through a CompactDAQ and MotorMind C motor controllers. The LabVIEW simple PID function allowed the actuator control to be established and tuned quickly, so we could focus on obtaining our results. A parametric sweep is used to indent the tissue at pre-defined locations, as illustrated in the video below. At every indentation, force measurements are taken once the x, y and z target position has been reached. A typical result set is presented in Figure 3, where the recorded data have been processed within LabVIEW to generate a surface plot. Comparisons between an FEA model and the experimental data show close correlation and matching trends in the results obtained. The LabVIEW code uses a State Machine architecture, allowing programmatic control over the order of operations. The use of LabVIEW also allowed us to leave the depth of palpation, sweep resolution and other factors as variables, giving a highly adaptable and flexible solution.
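The sweep logic can be pictured with a short text-based sketch. The Python below is illustrative only and is not the LabVIEW code described above: the state names, the `run_sweep` function and the `fake_force` sensor stand-in are all hypothetical, and actuator motion is reduced to setting a variable. It shows a state machine that visits each grid point, indents to a configurable depth, records one force reading and then retracts.

```python
from enum import Enum, auto


class State(Enum):
    MOVE_XY = auto()   # travel to the next grid point
    INDENT = auto()    # lower the probe to the target depth
    MEASURE = auto()   # take one force reading at the target position
    RETRACT = auto()   # lift the probe clear of the surface
    DONE = auto()


def run_sweep(x_points, y_points, depth, read_force):
    """Visit every (x, y) grid point, indent by `depth`, record one force each."""
    targets = [(x, y) for y in y_points for x in x_points]
    results = []
    index = 0
    z = 0.0
    state = State.MOVE_XY
    while state is not State.DONE:
        if state is State.MOVE_XY:
            if index >= len(targets):
                state = State.DONE
            else:
                x, y = targets[index]
                state = State.INDENT
        elif state is State.INDENT:
            z = -depth  # assumption: the target depth is reached instantly
            state = State.MEASURE
        elif state is State.MEASURE:
            results.append((x, y, read_force(x, y, z)))
            state = State.RETRACT
        elif state is State.RETRACT:
            z = 0.0
            index += 1
            state = State.MOVE_XY
    return results


def fake_force(x, y, z):
    # Hypothetical sensor stand-in: the response is stiffer at grid point (2, 2).
    return abs(z) * (2.0 if (x, y) == (2, 2) else 1.0)


data = run_sweep(range(5), range(5), depth=3.0, read_force=fake_force)
```

Because the depth and grid points are ordinary arguments, the same loop covers coarse and fine sweeps without structural changes, which mirrors the flexibility described above.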

Figure 1 - Sensor Probe with Integrated Force Sensor (Flexiforce A201).

Figure 2 - Complete Physical Testing System Setup.

Physical Palpation System

Figure 3 - A Typical Response Surface Recorded

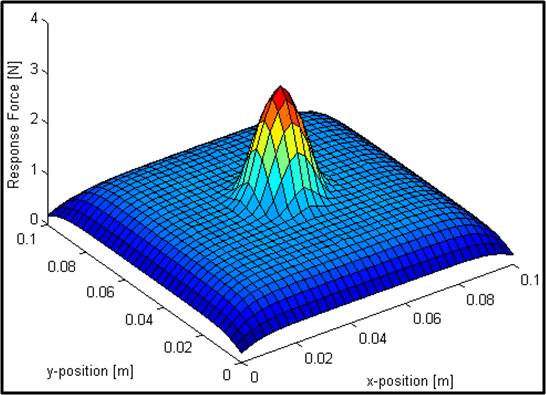

Finite Element Modelling:

To allow cross-validation of the results obtained from the physical experiments, liver tissue with embedded tumours was also modelled computationally. Just like the physical models, the computational models are palpated across the whole surface. Recording the applied force at each indentation coordinate allows Response Force Surfaces (RFSs) to be created, as seen in Figure 4. These are comparable to the RFSs obtained from the physical experiments, as seen in Figure 3.

Figure 4 - Response Force Surface from computational model showing tumour location
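As a rough illustration of how a Response Force Surface can be assembled and compared, the Python sketch below arranges per-indentation force samples into a grid, finds the peak (a simple proxy for tumour location) and computes a Pearson correlation between two surfaces. The function names and the sample values are hypothetical, and the correlation measure is an assumption; the comparison method actually used is not stated here.

```python
import math


def build_rfs(samples, nx, ny):
    """Arrange (ix, iy, force) samples into an ny-by-nx grid of forces."""
    grid = [[0.0] * nx for _ in range(ny)]
    for ix, iy, force in samples:
        grid[iy][ix] = force
    return grid


def peak_location(grid):
    """Return the (ix, iy) grid index of the largest recorded force."""
    iy, ix = max(
        ((iy, ix) for iy in range(len(grid)) for ix in range(len(grid[0]))),
        key=lambda p: grid[p[0]][p[1]],
    )
    return ix, iy


def pearson(grid_a, grid_b):
    """Pearson correlation between two surfaces sampled on the same grid."""
    a = [v for row in grid_a for v in row]
    b = [v for row in grid_b for v in row]
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    return cov / math.sqrt(var_a * var_b)


# Made-up sample values: a 3x3 sweep with a stiffer response at the centre.
samples = [(ix, iy, 1.0) for ix in range(3) for iy in range(3) if (ix, iy) != (1, 1)]
samples.append((1, 1, 2.5))
measured = build_rfs(samples, nx=3, ny=3)
modelled = [[2.0 * v for v in row] for row in measured]  # same shape, different scale
```

Pearson correlation ignores overall scale, so it compares the shape of two surfaces even when the absolute forces of the silicone model and the computational model differ.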

Haptic Surgical System:

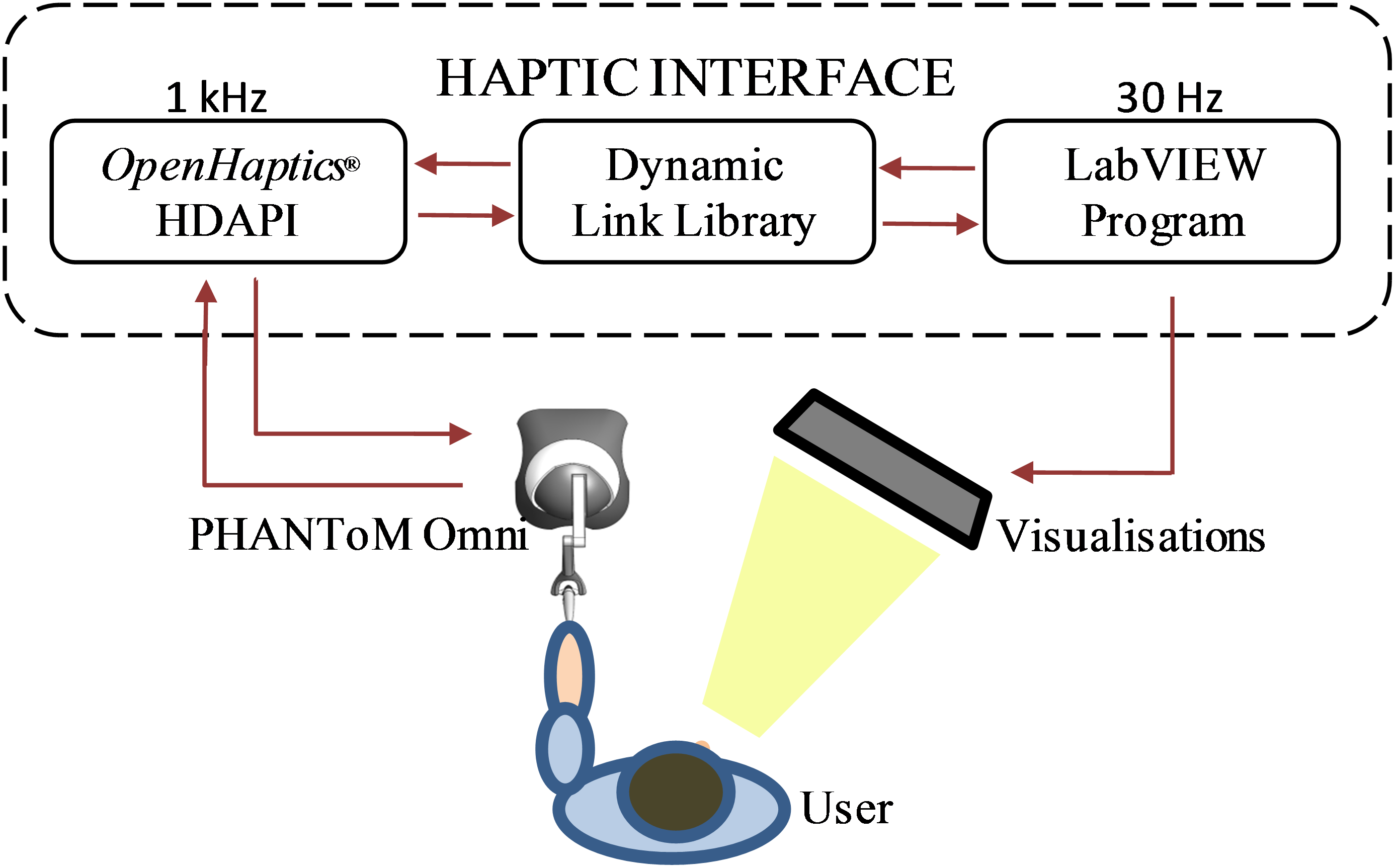

The surgical system was designed in order to allow a user (surgeon) to perform a MIS palpation task using haptic feedback delivered through a haptic device. To communicate with this device we developed a haptic interface within LabVIEW and then added custom forcing functions and visualisation to deliver a complete experience.

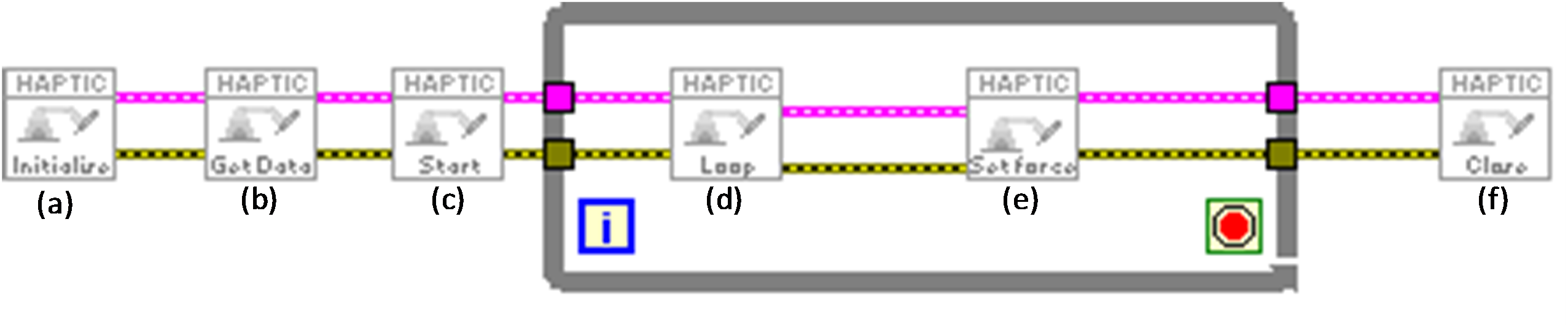

Haptic Interface - A LabVIEW program was developed to interface with the OpenHaptics Application Program Interface (API) of the 6 degree-of-freedom PHANToM Omni haptic device (SensAble Technologies) through a bespoke Dynamic Link Library (DLL). Asynchronous communication was required in order to efficiently generate high-fidelity haptic feedback and visualisations at 1 kHz and 30 Hz, respectively. The schematic of Figure 5 illustrates the implemented program architecture, data flow and loop rates. One of the benefits of the haptic interface is that it allows generic connection to a number of other SensAble Technologies haptic devices.

Figure 5 - Schematic of Proposed Communication Architecture.
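The decoupling of the fast haptic loop from the slower visual loop can be sketched in Python as two threads sharing a lock-protected "latest value" store. This is a simplified analogy rather than the LabVIEW/DLL implementation: the class and function names are hypothetical, loop pacing (roughly 1 ms and 33 ms per pass) is omitted so the example runs instantly, and the device position is faked.

```python
import threading


class SharedState:
    """Latest-value store shared between the fast and slow loops."""

    def __init__(self):
        self._lock = threading.Lock()
        self._position = (0.0, 0.0, 0.0)
        self._updates = 0

    def write(self, position):
        with self._lock:
            self._position = position
            self._updates += 1

    def snapshot(self):
        with self._lock:
            return self._position, self._updates


def haptic_loop(state, iterations):
    """Fast loop: a real system would pace each pass at about 1 ms."""
    for i in range(iterations):
        # Fake device read: the probe moves steadily down the z axis.
        state.write((0.0, 0.0, -0.001 * i))


def render_loop(state, frames, seen):
    """Slow loop: a real system would pace each pass at about 33 ms."""
    for _ in range(frames):
        seen.append(state.snapshot())  # always draws the most recent position


state = SharedState()
seen = []
fast = threading.Thread(target=haptic_loop, args=(state, 1000))
slow = threading.Thread(target=render_loop, args=(state, 30, seen))
fast.start()
slow.start()
fast.join()
slow.join()
```

The renderer never queues every haptic sample; it only reads the newest one, so a slow frame cannot stall the force loop.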

Call Library functions in LabVIEW are used to achieve two-way data communication between the high-level LabVIEW control program and the DLL. This makes it possible, for example, to obtain the three-dimensional position of the haptic device end-effector and to send forcing parameters to the OpenHaptics API for haptic feedback. The user is able to move the end-effector of the haptic device, and an output force is generated through the DLL. The position of the device end-effector and a simulated liver surface are displayed visually through LabVIEW to represent a typical scene during minimally invasive surgery, as illustrated in Figure 6. This presents a flexible and scalable platform to generate the representative "feel" of human liver with integrated tumours.

Figure 6 - Illustration of the visual feedback that is relayed to the user.

With the communication architecture realised between LabVIEW and the custom DLL, a set of "quick-drop" VIs was created, allowing connection to the PHANToM and quick development of haptic scenes without needing to adjust the low-level program. These are presented in Figure 7.

Figure 7 - PHANToM Communication VI set, (a) Initialise device, (b) Get static data, (c) Start the haptic loop, (d) Acquire dynamic data, i.e. position data, (e) Send forces to device, and (f) Stop haptic loop and disable device.

Forcing Functions - A Finite Element Analysis (FEA) model was developed to generate representative Response Force Profiles (RFPs) of human liver. The stiffness of the tissue is determined as the ratio between the force and the indentation depth (k = f/x), and a Gaussian function is used to generate forces at a frequency of 1 kHz as a function of probe indentation. This gave force feedback for livers with varied properties such as tumour size and depth. Additionally, the data recorded from the physical testing system could be implemented directly.
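One possible reading of this forcing function is sketched below in Python. It is an assumption-laden illustration, not the implemented LabVIEW code: the Gaussian is taken here as a spatial stiffness bump centred on the tumour, and every parameter name and value (`base_k`, `tumour_k`, `tumour_xy`, `sigma`) is hypothetical. The force follows from rearranging k = f/x to f = k·x, and the function would be evaluated once per haptic update.

```python
import math


def stiffness(x, y, base_k, tumour_k, tumour_xy, sigma):
    """Local stiffness: base tissue plus a Gaussian bump centred on the tumour."""
    dx = x - tumour_xy[0]
    dy = y - tumour_xy[1]
    return base_k + tumour_k * math.exp(-(dx * dx + dy * dy) / (2.0 * sigma ** 2))


def response_force(x, y, depth, **params):
    """Force from k = f/x rearranged to f = k * x; zero when out of contact."""
    if depth <= 0.0:
        return 0.0
    return stiffness(x, y, **params) * depth


# Hypothetical parameters (arbitrary units): a stiff inclusion at (10, 10).
params = dict(base_k=0.2, tumour_k=0.6, tumour_xy=(10.0, 10.0), sigma=4.0)
over_tumour = response_force(10.0, 10.0, 5.0, **params)
far_away = response_force(100.0, 100.0, 5.0, **params)
```

In this sketch a larger `tumour_k` or `sigma` stands in for a larger tumour, and a smaller `tumour_k` for a deeper one, which is one simple way to vary the "feel" between trials.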

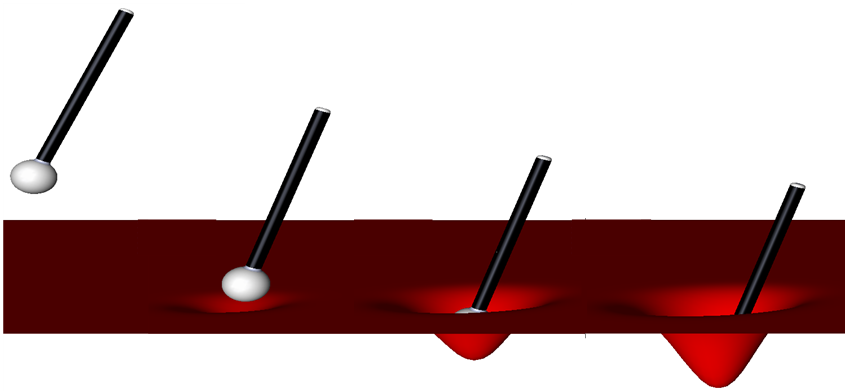

Visual Scene - Having established communication with the haptic device, attention turned to creating a visual scene that included a surface and an end-effector, allowing the user to probe around and 'feel' the properties of the virtual surface. LabVIEW's 3D toolbox was used to create a 3D scene mimicking the proposed real-world application, i.e. a liver with a robotic probe. In order to produce a deformable liver surface, the LabVIEW 'create height field' geometry function was used. A height array was programmatically updated depending on the position of the end-effector to deliver representative visual deformation of the surface. Figure 8 shows the deformation profile as a function of the depth to which the surface is indented.

Figure 8 - Indentation visuals during palpation.
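The height-array update can be illustrated with a small Python sketch. The Gaussian dimple used here is an assumed deformation profile chosen for simplicity, and the function name, grid size and `sigma` width are hypothetical; the profile actually used is the one shown in Figure 8.

```python
import math


def deform_height_field(nx, ny, spacing, probe_xy, depth, sigma):
    """Return an ny-by-nx height array with a Gaussian dimple under the probe."""
    pressed = max(depth, 0.0)  # no deformation when the probe is above the surface
    heights = []
    for iy in range(ny):
        row = []
        for ix in range(nx):
            dx = ix * spacing - probe_xy[0]
            dy = iy * spacing - probe_xy[1]
            falloff = math.exp(-(dx * dx + dy * dy) / (2.0 * sigma ** 2))
            row.append(-pressed * falloff)
        heights.append(row)
    return heights


# Probe pressed 2 units into the centre of an 11 x 11 grid.
surface = deform_height_field(11, 11, 1.0, (5.0, 5.0), depth=2.0, sigma=1.5)
```

Recomputing the whole array on each visual frame keeps the logic simple; for a grid of this size the cost is small compared with a 30 Hz frame budget.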

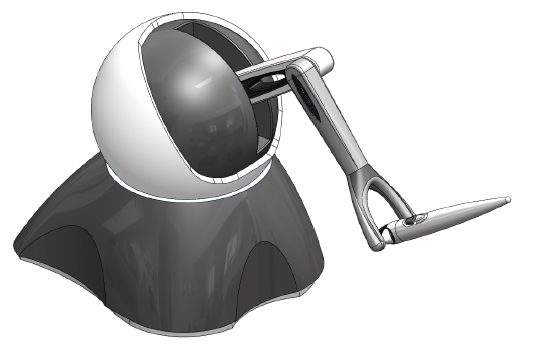

Kinematic analysis was undertaken for the PHANToM to establish the position of the end-effector relative to a ground frame of reference. This analysis aided in understanding the relation of the physical device to the virtual scene. The input parameters can also be adapted easily to adjust the model if the end-effector is modified. Finally, one of the most useful functions of the kinematic model is determining the working range of motion of the PHANToM. LabVIEW code was written to allow successive frames to be added, representing the different joints of the PHANToM. The LabVIEW MathScript function allowed the transformation matrices to be calculated and successively multiplied in an efficient way. Following on from this work, a PHANToM model (Figure 9) was imported into LabVIEW and the 3D toolbox was used to place the components in their correct relative positions, with joint rotation variables constrained as in the real device. This VI can be downloaded from this page and gives the user the ability to adjust the joints of a virtual PHANToM or play back a set of data recorded from one of our tests. To do this, the following files should be downloaded and saved to the same file location: PHANToM_Robot_Generic_File_Location.vi (MAIN VI), Build_Robot.vi, Video_Motion_Data, pen top.wrl, pen bottom.wrl, PHANToM wishbone.wrl, PHANToM arm 2.wrl, PHANToM arm 1.wrl, ball.wrl and PHANToM base.wrl. The video below demonstrates this code in action.

Figure 9 - CAD model of PHANToM Omni.

LabVIEW Kinematic Code Video
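The successive multiplication of transformation matrices can be shown with a minimal Python sketch. The three-joint chain, the joint axes and the link lengths below are assumptions made for illustration and are not the measured PHANToM Omni geometry; the helper names are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two 4x4 homogeneous transformation matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rot_z(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]


def rot_y(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]


def translate(x, y, z):
    return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]]


def end_effector(base_yaw, shoulder, elbow, l1, l2):
    """Chain the joint frames in order and return the tip position (x, y, z)."""
    frames = [
        rot_z(base_yaw),       # base rotation about the vertical axis
        rot_y(shoulder),       # first arm joint
        translate(l1, 0, 0),   # along the first link
        rot_y(elbow),          # second arm joint
        translate(l2, 0, 0),   # along the second link to the tip
    ]
    t = translate(0, 0, 0)     # identity: the ground frame
    for frame in frames:
        t = matmul(t, frame)
    return (t[0][3], t[1][3], t[2][3])


straight = end_effector(0.0, 0.0, 0.0, l1=1.0, l2=1.0)
turned = end_effector(math.pi / 2, 0.0, 0.0, l1=1.0, l2=1.0)
bent = end_effector(0.0, 0.0, math.pi / 2, l1=1.0, l2=1.0)
```

Sweeping each joint angle between its limits and collecting the resulting tip positions is one straightforward way to estimate the working range of motion from a model like this.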

Complete Haptic System - The completed haptic system allows the user to palpate in a surgical environment and feel the response force of the modelled tissue. Users are shown an introductory laparoscopic insertion and then tasked with locating tumours in a virtual surface. The following video demonstrates the system and some of the implemented features.

Haptic Surgical Palpation System Video

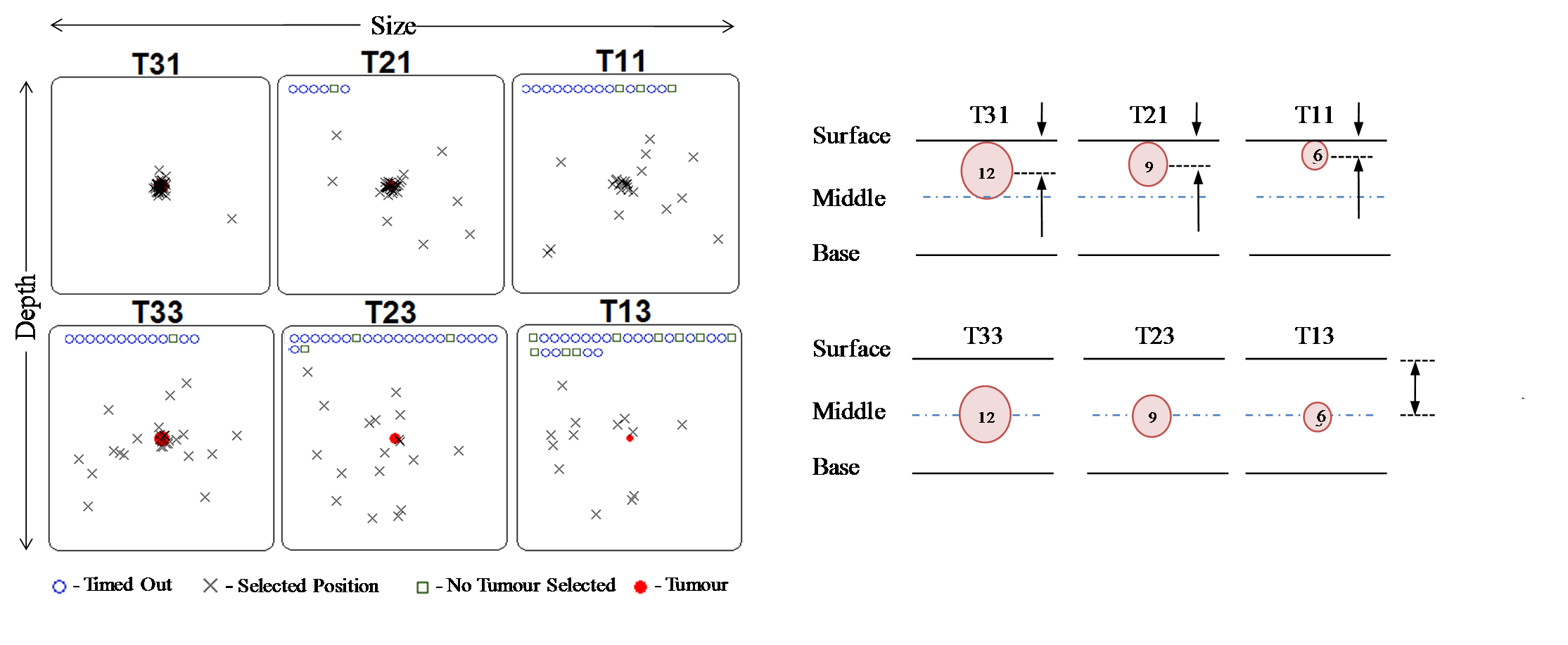

System Trials - The completed system was tested in a number of human trials. LabVIEW allowed us to easily develop an automated testing structure on top of our existing code without major adjustment. Users were presented with various rounds, each with a specific surface, e.g. tissue with large tumours at the surface or tissue with small tumours deep within it. We were able to randomise the order and position of the simulated tumours, which increased the statistical power of our conclusions. LabVIEW made it easy to format and save our data, which were then used in custom post-processing LabVIEW code. We tested the effect of tumour size and depth on the accuracy of users' tumour detection. Some of the processed data, straight from the post-processing code, is shown in Figure 10 (having made use of the 2D Drawing functions).

Figure 10 - Centralised selections made by participants using the system.
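The randomisation of trial order and tumour position can be sketched in a few lines of Python. This is a hedged illustration, not the LabVIEW testing structure itself: the `build_trials` function, the factor levels and the surface extent are hypothetical, and a uniform random placement is assumed.

```python
import random


def build_trials(sizes, depths, repeats, surface_extent, seed=None):
    """Return a shuffled list of trials covering every size/depth combination."""
    rng = random.Random(seed)  # a seeded generator makes a session reproducible
    trials = []
    for size in sizes:
        for depth in depths:
            for _ in range(repeats):
                position = (rng.uniform(0.0, surface_extent),
                            rng.uniform(0.0, surface_extent))
                trials.append({"size": size, "depth": depth, "position": position})
    rng.shuffle(trials)  # randomise presentation order across all conditions
    return trials


trials = build_trials(sizes=["small", "large"], depths=["shallow", "deep"],
                      repeats=3, surface_extent=100.0, seed=42)
```

Every size/depth combination appears the same number of times, so the design stays balanced while the order and positions differ between participants when different seeds are used.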

Benefits of using LabVIEW and NI products:

LabVIEW made it possible to achieve data acquisition, control, data processing, hardware interfacing and visualisation within a single environment. In addition, the ability to learn LabVIEW and develop applications quickly made it possible to develop these systems within our timeline of 6 months. The portability and ease of integrating code allowed us to develop VIs separately and then compile them without issue, allowing us to operate efficiently. LabVIEW handles the problematic and time-consuming low-level system requirements, leaving the engineer free to focus on the desired outcomes of the project as a whole.

Nominate Your Professor: (optional)

We would like to nominate our supervisor, Dr. Peter Culmer, for his support and enthusiastic approach to our project and specifically to LabVIEW software. Without his support and guidance we would not have achieved all that we did. Anyone who can make learning computer programming fun deserves a nomination from us!

Dr. Peter Culmer works within the field of Surgical Technology at the University of Leeds. His role includes teaching LabVIEW to Undergraduates and conducting and supervising research within his field. He has had extensive experience with LabVIEW and NI products and is brilliant at describing the programming concepts required to achieve highly flexible and robust applications. We would like to thank him for his support and nominate him as an outstanding educator, a title we believe he fully deserves.

Hey Jameschandler,

Thank you so much for your project submission into the NI LabVIEW Student Design Competition. It's great to see your enthusiasm for NI LabVIEW! Make sure you share your project URL (http://decibel.ni.com/content/docs/DOC-14870) with your peers so you can collect votes for your project and win. Collecting the most "likes" gives you the opportunity to win cash prizes for your project submission. I'm curious to know, what's your favorite part about using LabVIEW and how did you hear about the competition?

Good Luck, Kristine in Austin, TX.

impressive!

Good luck for the competition guys! You definitely deserve first place.