Why the TDMS file is larger than it should be

Solved! 07-08-2010 01:06 PM

For your first question regarding long-term logging and wanting to split the files:

Currently, there is no way to change the file path while the task is running. The only way to do this kind of thing would be to stop and start the task.

That being said, the TDMS files that are written by DAQmx are optimized for integrity. With several optimizations in place, we can protect pretty well against data loss, most notably:

1) One header is usually written to the file at the beginning. After that, we are basically just writing raw data. This keeps the complexity of the TDMS file pretty straightforward so that the chance of a corrupt file is greatly reduced.

2) We added special logic to the TDMS read for these files to make it as fault-tolerant as possible. If the reader notices that logging terminated early, it truncates the data to the last successful write operation. For example, if the system shuts down in the middle of logging a large file and leaves the file incomplete, TDMS read will return all of the data up to, but not including, the final segment that was left invalid.
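The truncation idea in point 2 can be illustrated with a deliberately simplified layout. This is only a sketch: the single fixed-size header and the fixed-size write blocks are assumptions made for illustration, not the actual TDMS on-disk format, and `readable_bytes` is a hypothetical helper.

```python
def readable_bytes(file_size: int, header_size: int, block_size: int) -> int:
    """Return how many data bytes belong to complete write blocks.

    Hypothetical layout: one header followed by fixed-size raw data blocks.
    A trailing partial block (from an interrupted write) is discarded.
    """
    if file_size < header_size:
        return 0  # even the header is incomplete, so nothing can be read
    data_bytes = file_size - header_size
    complete_blocks = data_bytes // block_size
    return complete_blocks * block_size


# A file that was cut off 300 bytes into its fourth 1000-byte block:
# only the three complete blocks are returned.
print(readable_bytes(file_size=512 + 3 * 1000 + 300, header_size=512, block_size=1000))
```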

I'm not saying that you are guaranteed that nothing can ever happen, but generally, the software has been designed pretty well to account for data integrity to significantly reduce any chance of data corruption. While this might not compel you to store everything in the same file, perhaps it might make it more acceptable to only change files once per day instead of every minute (thus making it more tolerable to stop/start the task once per day, in code of course).

For your second question regarding how the raw format is used/specified and later applied:

You do not need to define a data representation. "Under the hood" we write the raw format that the device returns. For most DAQmx devices, this would be 2 bytes per sample. For some devices like DSA, it could be 3 or 4 bytes per sample. We store the scaling information in the file header, detailing everything required to scale the raw data back to what you would see from DAQmx Read (double). Note that this even includes scaling information like thermocouple scaling, custom scales, etc. Finally, the TDMS readers (such as the MATLAB DLL, the TDMS File Reader, etc.) will apply all of the scaling information that we specified when writing the file and give you back a double value (making the underlying format completely transparent). They actually compile our DAQmx scaling code into their DLLs so that you are guaranteed to get the same result that you would have from DAQmx Read directly.
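To make the "raw data plus scaling information" idea concrete, here is a minimal sketch. It assumes 16-bit samples and a simple polynomial scale; the header dictionary and both function names are hypothetical and do not reflect the real TDMS layout or the DAQmx scaling code.

```python
import struct


def write_raw(samples_i16, coeffs):
    """Pack raw 16-bit samples and keep the scaling coefficients separately.

    The returned header is a stand-in for scaling information stored once
    in the file header; the payload is 2 bytes per sample.
    """
    payload = struct.pack(f"<{len(samples_i16)}h", *samples_i16)
    header = {"coeffs": list(coeffs), "count": len(samples_i16)}
    return header, payload


def read_scaled(header, payload):
    """Unpack the raw samples and apply the polynomial scale from the header."""
    raw = struct.unpack(f"<{header['count']}h", payload)
    coeffs = header["coeffs"]
    return [sum(c * (x ** k) for k, c in enumerate(coeffs)) for x in raw]


# Assumed +/-10 V range mapped onto the signed 16-bit code range.
header, payload = write_raw([0, 16384, -32768], coeffs=(0.0, 10 / 32768))
print(len(payload), "bytes on disk")
print(read_scaled(header, payload))
```

The reader only ever sees scaled floating-point values, while the stored payload stays at the device's native width.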

I hope I've answered your questions. Let me know if you have any follow-up questions.

Andy McRorie

NI R&D

07-09-2010 03:47 AM

This clarifies many things.

Which of those features are also supported by TDMS API?

Right now when I save data, every one-minute file is around 200 MB, and smaller files would be easier to maintain, distribute and handle than a single one-day file of 20 channels at 44.1 kS/s/ch. I am looking forward to seeing some improvements in future DAQmx versions.
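The file sizes mentioned here can be checked with a quick calculation. The sketch below assumes 4 bytes per raw sample (the upper figure given earlier in the thread for DSA devices) and ignores header overhead; `log_size_bytes` is a hypothetical helper.

```python
def log_size_bytes(channels: int, rate_hz: int, seconds: int, bytes_per_sample: int) -> int:
    """Raw payload size for a continuous multi-channel acquisition."""
    return channels * rate_hz * seconds * bytes_per_sample


# 20 channels at 44.1 kS/s/ch, assumed 4 bytes per sample
per_minute = log_size_bytes(20, 44_100, 60, 4)
per_day = log_size_bytes(20, 44_100, 24 * 3600, 4)

print(f"{per_minute / 1e6:.1f} MB per minute")  # roughly the 200 MB quoted above
print(f"{per_day / 1e9:.1f} GB per day")
```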

Thanks!

--

Lukasz

07-09-2010 01:16 PM

As I just mentioned in the other thread, you can access some of this functionality in the standard TDMS API by using the "Create Scaling Information" VI. When you describe scaling information in this way, the scaling information will automatically be applied when you go back to read the file (in whatever environment like Matlab, C, LabVIEW, etc).

When you say "some improvements", are you referring exclusively to the ability to break apart the data into multiple files, or is there something else that you are wanting?

I will take the suggestion for splitting files under consideration. Right now, what I'm thinking about is making it so that you could change the file path while the task is running (and we would in turn use that file path at the next file write internally). With this implementation, it would afford you the flexibility to use whatever criteria/conditions you want for when to split a file and what the file name should be. Do you have any feedback on this kind of approach or any additional suggestions?

Andy McRorie

NI R&D

07-12-2010 04:41 PM

Hey Lukasz,

Would it solve your problems if you could set the file path "on the fly" while the task is running?

That is, you could register for "Every N Event" and set the file path to a new file in the event structure, or based on some other calculation. The only "problem" with this approach is that we would take the file path into consideration on the next write to disk call. While we could guarantee (for example) that it would happen within one second, it wouldn't be possible to specify exactly how big the file should be.

The question is: would this be acceptable for your needs, or do you need a more exact solution?

Andy McRorie

NI R&D

07-14-2010 03:06 AM

Hi Andy,

When I mentioned "some improvements" I was thinking of two (or three) things:

1. The ability to break data into multiple files directly by DAQmx.

2. The possibility of applying lossy or lossless software compression directly during the data logging process, in the same way that DAQmx supports hardware compression for some DAQ devices. When I read the TDMS files I would not have to think about how to process the raw binary format; everything would be done without my intervention, just as with the scaling information. This feature might also be implemented in the TDMS API. What do you think about it? It would make life much easier, and files would be 25% smaller.

3. Another thing, related directly to the TDMS API because it is already implemented in the DAQmx logging process: would it be possible to log data with the TDMS API as raw binary data and then, when reading, apply the scales and display the data as DBL? This would help to save some disk space.

I think it would be a great idea to be able to change the path within the same task. It is extremely important, especially for long-term measurements, that no samples are lost, as is the case with the Producer/Consumer structure.

Thanks,

Lukasz Kocewiak

07-14-2010 03:51 AM

Hi Andy,

Regarding changing the file path "on the fly" while the task is running:

One of the assumptions of my measurement software is that it saves the same number of samples per minute. This helps me very much during off-line processing of the measurement data. I am not sure that such spontaneous file path changes would satisfy this criterion; it would generally depend on overall measurement system performance.

Another assumption is that all acquired samples are saved. To meet this requirement I use a FIFO queue. If I changed the path on demand, some samples would also be omitted because of delays.

Actually, in the future, probably next month, I will work on something similar. The task will be to save one-minute files triggered by a GPS time stamp. This approach will be used for GPS-synchronized long-term measurements. In this case the number of samples will differ between PXI chassis because of the random-walk pattern of the PXI-4472 TCXO; unfortunately the frequency accuracy of the 4472 is only +/-25 ppm. Triggering on the time stamp keeps every minute of logged data synchronized. Afterwards, during off-line processing, I will resample the data according to the time stamp.
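As a rough illustration of why the per-minute sample count differs between chassis, the following sketch turns the +/-25 ppm figure into a sample-count range. It assumes a 44.1 kS/s nominal rate (the rate mentioned earlier in the thread) and treats the accuracy as a constant frequency offset, which is a simplification of real oscillator behavior; `sample_count_bounds` is a hypothetical helper.

```python
def sample_count_bounds(rate_hz: float, seconds: float, accuracy_ppm: float):
    """Return (min, max) sample counts for a clock within +/-accuracy_ppm."""
    nominal = rate_hz * seconds
    delta = nominal * accuracy_ppm / 1_000_000
    return nominal - delta, nominal + delta


low, high = sample_count_bounds(44_100, 60, 25)
print(f"nominal: {44_100 * 60} samples per minute")
print(f"range:   {low:.2f} to {high:.2f}")
print(f"worst-case difference between two chassis: {high - low:.2f} samples")
```

Under these assumptions each one-minute file can be off by about 66 samples from nominal, so two chassis can disagree by roughly 132 samples per minute, which is why resampling against the time stamp is needed afterwards.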

Best regards,

Lukasz Kocewiak

07-14-2010 12:04 PM

Hey Lukasz,

Thanks for the feedback.

- I'm looking at figuring out how I should do this in DAQmx so that hopefully in a future version of DAQmx, I can get it in there.

- While I can appreciate the concern for saving disk space, this would slow down our performance by introducing an additional buffer. Additionally, this problem in your case would be solved by using a later DSA board that supports hardware compression. All that being said, I'll add it to our product suggestions for its benefit to disk space.

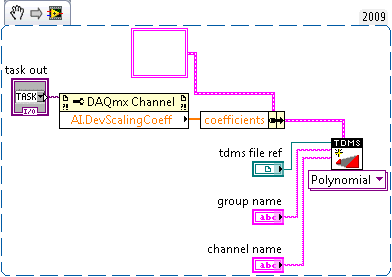

- This is currently available as I mentioned previously via the "Create Scaling Information" VI in LabVIEW 2009. Then, all you do is call DAQmx Read for unscaled data (U32/I32 in your case) and wire that into TDMS Write. Then, whenever you read the data back in any of the TDMS readers, it will automatically be scaled for you (thus requiring no post-processing).

Andy McRorie

NI R&D

07-14-2010 12:17 PM

Hey Lukasz,

For the first assumption, it sounds like you're needing every file to contain the same number of samples. I will take that into consideration in coming up with a solution.

For the second assumption, note that in no solution would samples ever be lost. When I was discussing the fact that the file path wouldn't take effect until the next time we write to disk, I didn't mean to imply that the samples would be lost in the meantime. It would simply continue writing to the same file and then switch to the new file when doing the next write.
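The behavior described here can be modeled with a small toy logger. This is only a sketch of the idea under discussion, not DAQmx code: the `ChunkLogger` class, its methods, and the in-memory dictionary standing in for files on disk are all hypothetical.

```python
class ChunkLogger:
    """Toy model: a path change takes effect at the next write, never mid-write."""

    def __init__(self, path):
        self.active_path = path
        self.pending_path = None
        self.files = {}  # path -> list of samples (stands in for files on disk)

    def set_path(self, path):
        # Only record the request; the current file is left untouched.
        self.pending_path = path

    def write(self, chunk):
        # Apply a pending path change just before this write begins.
        if self.pending_path is not None:
            self.active_path = self.pending_path
            self.pending_path = None
        self.files.setdefault(self.active_path, []).extend(chunk)


logger = ChunkLogger("minute_00.tdms")
logger.write([0, 1, 2, 3, 4])
logger.set_path("minute_01.tdms")  # requested at any time between writes
logger.write([5, 6, 7, 8, 9])      # the switch happens here, on a chunk boundary

for path, samples in logger.files.items():
    print(path, samples)
```

Every sample ends up in exactly one file, but the split point is decided by the write boundary, which is why the file size cannot be specified exactly with this approach.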

Additionally, I've brought this up on the DAQ Idea Exchange here: http://forums.ni.com/t5/Data-Acquisition-Idea-Exchange/Ability-to-split-between-multiple-TDMS-files-... If you have any ideas or additional feedback on this item, please feel free to post a comment on that idea.

Andy McRorie

NI R&D

09-01-2010 05:28 AM

Hi Andy,

I did not know about the 'TDMS Create Scaling Information VI'. I have already implemented it in my measurement software for harmonic measurements using the Producer/Consumer structure, and everything works fine. I also checked how it works with nilibddc.dll and found no problems. Thank you for the suggestion.

There are some things that slightly affect overall software performance. I use different custom scales specified in MAX, so I have to apply scales separately for every channel. This means that every minute I have to go through a for loop to apply the scales. The queue load is slightly higher while scales and properties are added to a TDMS file and a new file is created. Fortunately, for my setup (8 channels at 44.1 kS/s) performance is sufficient. Perhaps it could be more efficient if it were implemented in DAQmx.

I am also wondering whether lossy and lossless software compression could be implemented directly in DAQmx. This would save some more HDD space and simplify binary file handling. Of course it would decrease overall performance, but for some applications (low sampling rate, only a few channels, low DAQ resolution) it would make logging easier. In the case of lossless compression, the raw data would be processed later by the TDMS readers to obtain nicely scaled signals. What do you think about it?

Best regards,

Lukasz Kocewiak

09-01-2010 07:31 AM

We have definitely thought about compression while using TDMS. Please post a suggestion on the Idea Exchange.
