Which DAQ type is right for me? I need to read N continuous samples exactly every 1 second.

02-12-2008 01:28 AM - edited 02-12-2008 01:33 AM

Message Edited by reza926 on 02-12-2008 01:33 AM

02-12-2008 07:18 AM

"used NI DAQ-6210 M Series, but it did not work properly"

What behaviour or data did you observe that led you to this conclusion?

Could you please explain how you are trying to read data from your DAQ 6210?

That will help us answer your query.

02-12-2008 03:51 PM - edited 02-12-2008 03:52 PM

Hi,

You should be able to acquire N samples in a given amount of time. As the previous post says, we need a little more information to help you: What errors are you getting? What behavior are you seeing? Why do you say this is not the correct card?

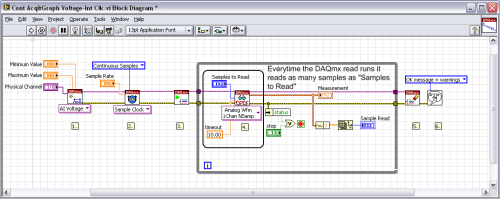

Basically, the way to approach this specification is to set the number of samples to read to your desired value. This example will help you accomplish it: “Cont Acq&Graph Voltage-Int Clk.vi”. Please also take a look at the screenshot. With more information I might be able to give you a better answer.

I hope it helps

Message Edited by Jaime F on 02-12-2008 03:52 PM

National Instruments

Product Expert

02-16-2008 12:22 AM

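    // Task setup: one RSE voltage channel, hardware-timed at 1000 S/s, continuous acquisition.
    DAQmxErrChk (DAQmxCreateAIVoltageChan(taskHandle,"Dev1/ai1","",DAQmx_Val_RSE,-2.0,2.0,DAQmx_Val_Volts,NULL));
    DAQmxErrChk (DAQmxCfgSampClkTiming(taskHandle,NULL,1000,DAQmx_Val_Rising,DAQmx_Val_ContSamps,1000));
    // Call EveryNCallback each time 1000 samples have been acquired into the buffer.
    DAQmxErrChk (DAQmxRegisterEveryNSamplesEvent(taskHandle,DAQmx_Val_Acquired_Into_Buffer,1000,0,EveryNCallback,this->GetSafeHwnd()));
    DAQmxErrChk (DAQmxStartTask(taskHandle));

    int32 CVICALLBACK EveryNCallback(TaskHandle taskHandle, int32 everyNsamplesEventType, uInt32 nSamples, void *callbackData)
    {
        // Read the samples available in the buffer, then log the OS time at which the read returned.
        DAQmxErrChk (DAQmxReadAnalogF64(taskHandle,-1,10.0,DAQmx_Val_GroupByChannel,EntireDataSet,1000,&read,NULL));
        time(&nowtime);
        outfile<<ctime(&nowtime);

    Error:
        // On failure, fetch the extended error description into errBuff.
        if( DAQmxFailed(error) )
            DAQmxGetExtendedErrorInfo(errBuff,2048);
        return 0;
    }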

    Thu Feb 14 17:27:13 2008
    Thu Feb 14 17:27:14 2008
    Thu Feb 14 17:27:14 2008
    Thu Feb 14 17:27:15 2008
    Thu Feb 14 17:27:16 2008
    Thu Feb 14 17:27:18 2008
    Thu Feb 14 17:27:19 2008
    Thu Feb 14 17:27:20 2008
    Thu Feb 14 17:27:21 2008
    Thu Feb 14 17:27:21 2008
    Thu Feb 14 17:27:22 2008
    Thu Feb 14 17:27:23 2008
    Thu Feb 14 17:27:25 2008

02-18-2008 11:39 AM

Hi,

You are coding in C++, but it seems you are using CVI examples. Even though CVI code should be compilable under C++, we do have specific examples for C++. As a reference, I would like to point you to “NI-DAQmx, NI-VISA, and NI-488.2 Visual C++ Example Locations”; one of the examples there is named “ContAcqVoltageSamples_IntClk.cpp”.

The two inputs you use to set up the timing of a task are “Samples to Read” and “Sampling Rate”. “Samples to Read” specifies the number of samples to read when you select N Samples, and specifies the buffer size when you select Continuous.
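As a rough illustration of how the sample rate and the per-event sample count interact, the sketch below (plain C++, no NI-DAQmx calls) computes how often an Every N Samples event would be expected to fire and how many samples a fixed-rate acquisition should deliver over a given duration. The helper names `event_period_seconds` and `expected_samples` are hypothetical and not part of any NI API.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: expected time between "Every N Samples" events,
// assuming a constant hardware sample rate.
double event_period_seconds(double sample_rate_hz, std::uint32_t samples_per_event) {
    assert(sample_rate_hz > 0.0);
    return samples_per_event / sample_rate_hz;
}

// Hypothetical helper: number of samples a fixed-rate acquisition
// should produce over the given duration (rounded to the nearest sample).
std::uint64_t expected_samples(double sample_rate_hz, double duration_seconds) {
    assert(sample_rate_hz > 0.0 && duration_seconds >= 0.0);
    return static_cast<std::uint64_t>(sample_rate_hz * duration_seconds + 0.5);
}
```

With the values from the code posted above (1000 S/s and 1000 samples per event), `event_period_seconds(1000.0, 1000)` gives 1.0 second, and `expected_samples(1000.0, 3600.0)` gives 3600000 samples for one hour.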

Since your code works fine for approximately the first 3 hours, that tells me your configuration is set up correctly to read 1000 samples per second. After taking a long look at your code, I have found your issue: you are using the system time stamp, “time(&nowtime)”. Irregular time stamps do not mean that your acquisition is not running at 1000 samples per second. This time depends on software and on your OS clock, and it is not an accurate representation of when your data was acquired by the card.
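One way to get time stamps that follow the hardware sample clock rather than the OS clock is to count samples: sample k was taken k / rate seconds after the first one. The following is a minimal sketch, assuming a constant sample rate and no lost samples; `SampleClock` is a hypothetical helper class, not an NI-DAQmx type.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: derives time offsets from the running sample count
// instead of from the OS clock.
class SampleClock {
public:
    explicit SampleClock(double sample_rate_hz) : rate_(sample_rate_hz) {
        assert(sample_rate_hz > 0.0);
    }

    // Returns the offset (in seconds since the first sample) of the first
    // sample in the block just read, then advances the running count.
    double advance(std::uint32_t samples_read) {
        const double block_start = static_cast<double>(total_) / rate_;
        total_ += samples_read;
        return block_start;
    }

    // Total number of samples accounted for so far.
    std::uint64_t total_samples() const { return total_; }

private:
    double rate_;
    std::uint64_t total_ = 0;
};
```

In a callback like the one posted earlier, the count returned in `read` by `DAQmxReadAnalogF64` could be passed to `advance`, and the returned offset added to a start time recorded once when the task is started. Comparing `total_samples()` with the expected count also gives a check that no data was missed.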

I hope it helps

National Instruments

Product Expert

02-19-2008 01:59 AM

Hi,

Thanks for your comments.

The OS time is all I have available. When I use other functions such as GetTickCount I see the same result; I think that function is also OS related.

So how can I write code to test whether the DAQ is working properly every second? (I'm coding in Visual Studio 6.)

My remaining questions are:

"As you said, the OS timer is not accurate, but why do we see accuracy for about the first 3 hours?" I want to know whether inaccuracy is possible when the ambient temperature or something similar changes.

"What can I do when I read samples with OS time? For example, in 1 hour (measured with system time) I need to read exactly 1000*3600 = 3,600,000 samples."

Thanks a lot.

02-19-2008 06:58 PM

Hi,

The time stamps look accurate for the first three hours because they depend entirely on your operating system, which might be running out of resources at that point. One test you can try is to load a lot of programs at the same time, stressing your system as much as possible; you should see the same behavior in less than three hours.

If you are not exceeding the recommended operating temperature, your acquisition should maintain its rate through the whole process.

I want to make the distinction here that your card is hardware timed, so it is reading the data at 1000 samples per second; the card has its own internal clock that determines when the samples are read. What you are timing is actually when your code returns from acquiring 1000 samples, not the rate at which the samples are being acquired. The sampling of the data is totally independent of the OS clock.
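The time stamps logged earlier in the thread are consistent with this. The sketch below (plain C++; `interval_stats` and `kLoggedSeconds` are hypothetical names) computes the gaps between consecutive log entries. Individual gaps vary because the callback returns a little early or late and `time()` only resolves whole seconds, but the average over the whole log is what reflects the underlying rate.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct IntervalStats {
    int min_gap;      // smallest gap between consecutive entries, in seconds
    int max_gap;      // largest gap between consecutive entries, in seconds
    double mean_gap;  // average gap over the whole log, in seconds
};

// Hypothetical helper: summarizes the spacing of whole-second time stamps.
IntervalStats interval_stats(const std::vector<int>& seconds) {
    assert(seconds.size() >= 2);
    int min_gap = seconds[1] - seconds[0];
    int max_gap = min_gap;
    for (std::size_t i = 2; i < seconds.size(); ++i) {
        const int gap = seconds[i] - seconds[i - 1];
        if (gap < min_gap) min_gap = gap;
        if (gap > max_gap) max_gap = gap;
    }
    const double mean_gap =
        static_cast<double>(seconds.back() - seconds.front()) /
        static_cast<double>(seconds.size() - 1);
    return IntervalStats{min_gap, max_gap, mean_gap};
}

// Seconds field of the 13 time stamps posted in the thread (all within 17:27).
const std::vector<int> kLoggedSeconds = {13, 14, 14, 15, 16, 18, 19,
                                         20, 21, 21, 22, 23, 25};
```

For the posted log, the gaps range from 0 to 2 seconds, yet the 13 entries span 12 seconds, so the mean gap is exactly 1 second per callback.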

I hope it helps

National Instruments

Product Expert