
Save high sampling rate data

08-31-2010 05:15 AM


Hello Jim,

Thanks for your input.

I cannot decide which solution would be best. Because my measurement setup is based on a PXI-1033 chassis connected to an HP 6930p laptop through an NI ExpressCard-8360 and MXI-Express, I have to live with many limitations.

The laptop does not support eSATA, offers only IEEE 1394a (400 Mbit/s), and has only one ExpressCard slot, so I cannot count on a RAID of external HDDs. I have a LaCie Big Quadra 4TB with all possible interfaces, but over USB 2.0 it gives me only 28 MB/s for both sequential and 512k writes in a RAID 0 configuration (see picture below).

Right now I am considering either replacing the laptop's HDD with a fast SSD, or replacing the optical drive with a second HDD and creating a RAID 0. I am not sure which solution would be better. I have found that OCZ Summit and Vertex SSDs perform quite impressively (according to http://www.anandtech.com/).

| Name | Sequential Write [MB/s] | Random Write [MB/s] |
|---|---|---|
| Vertex | 93.4 | 2.41 |
| Summit | 195.2 | 0.77 |

I also have a Fujitsu T1010 with the same limitations, so maybe I will try SSDs first. Also, would it make sense to put an HDD and an SSD together in one array?

Best regards,

Lukasz

09-01-2010 02:14 PM


Hello Lukko,

I have looked through several forums and technical articles about building a RAID from different types of hard drives. It is possible, but my impression is that it is hard to do and not advisable. I have added links to the forums and articles below.

Can I RAID with Different Hard Drives?
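One way to see why mixing drive types in a RAID 0 is usually not worth it: in an idealized striped array each member receives 1/N of the data, so a sequential write finishes only when the slowest member finishes, and the array behaves like N copies of its slowest drive. The sketch below is a simplified model (no controller overhead, purely sequential writes); the 60 MB/s laptop HDD figure is a hypothetical value for illustration, while 195.2 MB/s is the Summit figure from the table above.

```python
# Idealized RAID 0 sequential-write model: data is striped evenly, so each of
# the N members writes 1/N of it and the slowest member sets the finish time.
# Ignores controller overhead, caching and seek behaviour.


def raid0_seq_write_mb_s(member_rates_mb_s: list[float]) -> float:
    """Approximate array throughput: N times the slowest member's rate."""
    if not member_rates_mb_s:
        raise ValueError("need at least one drive")
    return len(member_rates_mb_s) * min(member_rates_mb_s)


HYPOTHETICAL_HDD_MB_S = 60.0   # illustrative laptop HDD, not measured here
SUMMIT_MB_S = 195.2            # OCZ Summit sequential write from the table

print("Summit alone:      ", raid0_seq_write_mb_s([SUMMIT_MB_S]))
print("HDD + Summit RAID 0:", raid0_seq_write_mb_s([HYPOTHETICAL_HDD_MB_S, SUMMIT_MB_S]))
```

Under this model, pairing a fast SSD with a slower HDD can give a lower sequential rate than the SSD alone, because the SSD spends part of each stripe waiting for the HDD.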

After reading your post, it seems that the limitation is streaming the data back to the computer and then writing it to a RAID. Do you have any open slots in your chassis? We sell cards and RAID drives that go into the chassis and would completely solve this problem. Here's the link about it.

Jim St

National Instruments

RF Product Support Engineer

09-02-2010 02:37 AM

Hi Jim,

Thank you for your fast reply.

Right now I have only two available slots in my PXI-1033. Your mention of HDD extensions for PXI systems gave me an idea. Something like the NI-8260 might be a solution, but it needs three slots, and I would rather buy another portable computer with an ExpressCard slot and an eSATA interface. I have run some tests with the LaCie Big Quadra connected to a Dell Adamo over eSATA, and it turned out to be completely sufficient for my application. Please take a look at the figure below.

For transient measurements I currently need to save 6 or 8 channels at 2.5 MS/s from a PXI-6133 (14-bit ADC), which means around 30 MB/s or 40 MB/s, so this solution should be enough. I have been wondering whether there are any PXI-based controllers to which I could connect the LaCie HDDs in RAID 0 over eSATA, configurable from LabVIEW. I have seen something like the EKF CompactPCI storage modules, briefly described here: Removable Hard-Drive Options for Deploying PXI Systems in Classified Areas. What do you think?
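The 30 MB/s and 40 MB/s figures follow from channels × sample rate × bytes per sample. A minimal sketch, assuming each 14-bit sample is logged as a 2-byte integer and 1 MB = 10^6 bytes, that also checks the requirement against the write rates quoted earlier in this thread:

```python
# Aggregate streaming rate for the PXI-6133 transient measurements.
# Assumption: each 14-bit sample is stored as a 2-byte integer when logged raw.
BYTES_PER_SAMPLE = 2

# Write rates quoted earlier in this thread (MB/s, sequential).
QUOTED_WRITE_MB_S = {
    "LaCie Big Quadra over USB 2.0": 28.0,
    "OCZ Vertex": 93.4,
    "OCZ Summit": 195.2,
}


def stream_rate_mb_s(channels: int, rate_sps: float,
                     bytes_per_sample: int = BYTES_PER_SAMPLE) -> float:
    """Aggregate disk throughput in MB/s (1 MB = 10**6 bytes)."""
    return channels * rate_sps * bytes_per_sample / 1e6


for ch in (6, 8):
    need = stream_rate_mb_s(ch, 2.5e6)
    ok = [name for name, rate in QUOTED_WRITE_MB_S.items() if rate >= need]
    print(f"{ch} ch @ 2.5 MS/s: {need:.1f} MB/s, sufficient: {ok}")
```

Note that this compares against peak sequential rates only; it leaves no headroom for moments when the disk briefly stops accepting data.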

Thanks!

--

Lukasz

09-02-2010 05:24 AM


Hello Andy,

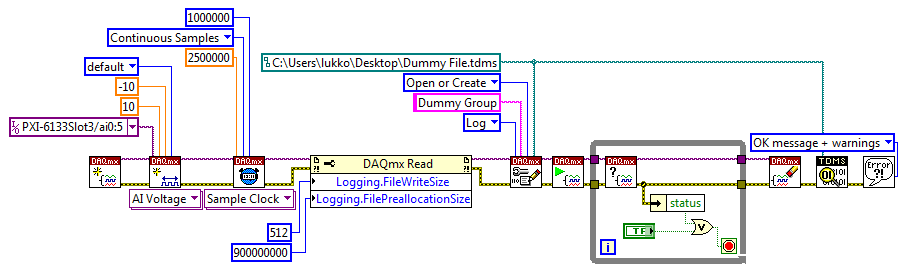

I have tried to play with the new DAQmx features but I cannot make it working. Right now it looks like in figure below:

But with the DAQmx Read property node it does not work, and I get Error -201309: Unable to write to disk. Ensure that the file is accessible. If the problem persists, try logging to a different file.

I have tried placing the property node in different places, as well as changing the operation that specifies how the TDMS file is opened.

Do you have any suggestions about what might be wrong?

Thanks!

--

Lukasz

09-02-2010 11:20 AM


Are you on Windows Vista or Windows 7? If so, make sure that you run LabVIEW as an administrator (right-click and select "Run as Administrator").

Andy McRorie

NI R&D

09-02-2010 04:45 PM

Hello Lukko,

After looking over your post: we do sell PXI cards that work with SATA RAID arrays, and I have provided links to them below. These are designed for our RAID arrays, but I believe you could use a converter to make them work for you. In the DeveloperZone article you linked, we recommend the ADEXC34R5-2E RAID5/JBOD eSATA ExpressCard adapter for the operation you are looking for. From my research, though, there isn't a PXI controller that provides this interface.

NI 8352

I would go with the card recommended in the DeveloperZone article, since it has already been researched.

Jim St

National Instruments

RF Product Support Engineer

09-06-2010 03:19 AM

Hello,

Jim:

Thank you for the suggestions. In that case I will first try replacing the drive in the PC. Right now I have three possibilities: an SSD, a RAID 0 using a second HDD in place of the optical drive, and a hybrid HDD. Since the hybrid HDD is the quickest to get, I will test it first. I have observed that in many cases the first few seconds of data acquisition are the most critical, and the SSD buffer in the hybrid HDD should hopefully solve this problem. I will report the results later.

Andy:

The problem with preallocation was indeed caused by administrator rights. Thanks! I have experimented a little with the Logging.FileWriteSize and Logging.PreallocationSize properties, and I have to admit that I obtained some improvements. My HDD has 512 B sectors, and a FileWriteSize of 256 samples turned out to be the best configuration for my hardware. Preallocation also seems to be working. I observed that when the number of acquired samples exceeds the preallocated file size (in total samples), the buffer is immediately overwritten.
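To relate a FileWriteSize in samples to the 512 B sector size, it helps to convert it to bytes per write. The sketch below assumes (not confirmed in this thread) that the write size is counted per channel and that raw 2-byte samples are logged for the 6-channel case; `write_block_bytes` and `is_sector_aligned` are hypothetical helpers, not DAQmx functions.

```python
# Bytes per disk write for a given Logging.FileWriteSize.
# Assumptions: write size counted in samples per channel, and raw 16-bit
# (2-byte) samples are what get logged. Helper names are hypothetical.
SECTOR_BYTES = 512      # sector size reported for this HDD
BYTES_PER_SAMPLE = 2
CHANNELS = 6


def write_block_bytes(file_write_size: int, channels: int = CHANNELS,
                      bytes_per_sample: int = BYTES_PER_SAMPLE) -> int:
    """Bytes handed to the disk per write under the assumptions above."""
    return file_write_size * channels * bytes_per_sample


def is_sector_aligned(n_bytes: int, sector: int = SECTOR_BYTES) -> bool:
    """True if the block covers a whole number of sectors."""
    return n_bytes % sector == 0


for size in (128, 256, 512, 1024, 2048):
    n = write_block_bytes(size)
    print(f"{size:5d} S -> {n:6d} B, {n / SECTOR_BYTES:g} sectors, "
          f"aligned={is_sector_aligned(n)}")
```

With six channels, 256 S corresponds to 3072 B (6 sectors). Every multiple of 128 S lands on a sector boundary here, so under these assumptions sector alignment alone would not single out 256 S as the best value.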

When I do not set Logging.FileWriteSize, the default value is 4294967295. What does that mean? Why is it so much bigger than my 256? Of course, acquisition with the default value cannot last long enough: the available samples per channel exceed the input buffer size and the buffer overruns. In general, it is difficult to see obvious regularities in either configuration, which might be because Windows is non-deterministic, but the new features in DAQmx 9.2 can really help.

John:

You once mentioned TDMS Direct Integration in NI-DAQmx Logging:

"Using this feature would bypass application memory and write to the disk directly from Kernel memory, which results in lower CPU usage and higher streaming performance (assuming the disk is physically capable of writing this much data)."

I would like to clarify this. Isn't the direct I/O (non-buffered) that Andy mentioned previously a request for direct data transfer between the disk and the user-supplied buffer (in LabVIEW) for reads and writes? That would bypass kernel buffering of data and reduce the CPU overhead associated with I/O by eliminating the copy between the kernel buffer and the user's buffer.

Thanks!

--

Lukasz Kocewiak

09-08-2010 02:38 PM


> Of course the problem with pre-allocation was because of administrator rights. Thanks!

Thanks for checking that out. Sorry the error message was so unhelpful. I will improve it so that it states explicitly what the problem is.

> I have played a little with the Logging.FileWriteSize and Logging.PreallocationSize properties and I have to admit that I have obtained some improvements.

Cool.

> When I do not set Logging.FileWriteSize, the default value is 4294967295.

That value is (uInt32)-1, which is the default value for this attribute when logging is not enabled. If "Logging.Mode" is off, this attribute doesn't mean much. If you turn logging on, the value returned should make more sense.
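Why (uInt32)-1 shows up as 4294967295: reinterpreting -1 as an unsigned 32-bit integer gives the all-ones bit pattern, which is the largest uInt32 value (2^32 - 1). A short sketch using Python's `ctypes`; `describe_file_write_size` is a hypothetical helper, not part of any NI API.

```python
import ctypes

# -1 reinterpreted as an unsigned 32-bit integer is the all-ones pattern,
# i.e. the largest uInt32 value: the 4294967295 reported for FileWriteSize.
UINT32_MAX = ctypes.c_uint32(-1).value
assert UINT32_MAX == 2**32 - 1 == 0xFFFFFFFF


def describe_file_write_size(value: int) -> str:
    """Hypothetical helper: treat the all-ones value as 'not configured'."""
    if value == UINT32_MAX:
        return "sentinel (uInt32)-1: logging not enabled"
    return f"{value} samples"


print(describe_file_write_size(4294967295))
print(describe_file_write_size(256))
```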

> Is not it that direct I/O (non-buffered) about which Andy mentions previously requests direct data transfer between the disk and the user-supplied buffer (in LabVIEW) for reads and writes? This bypasses the kernel buffering of data, and reduces the CPU overhead associated with I/O by eliminating the data copy between the kernel buffer and the user's buffer.

Your explanation is mostly correct, with one clarification. The user specifies the size of the DAQmx buffer but does not supply it; that buffer is a user-kernel shared, page-locked buffer allocated by the driver. Data is streamed directly from that user-kernel buffer to disk in both "Log" and "Log and Read" modes. In "Log and Read" mode, the data is additionally copied (and scaled) from that buffer into the user's application buffer in a DAQmx Read call. We bypass the user-mode buffering of data and reduce overhead (like CPU utilization and memory bandwidth) by eliminating the data copy between the kernel buffer and the user's buffer.
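To put a rough number on the copy that "Log and Read" adds over "Log", here is a byte-count model, not a measurement. It assumes raw samples are 2 bytes in the driver buffer and that DAQmx Read returns scaled float64 values (8 bytes), and it ignores the DMA into the driver buffer and the buffer-to-disk stream, which both modes share.

```python
# Rough model of the extra memory traffic "Log and Read" adds on top of "Log".
# Assumptions: raw samples are 2 bytes in the driver buffer and DAQmx Read
# returns scaled float64 values (8 bytes). DMA into the driver buffer and the
# buffer-to-disk stream are common to both modes and are not counted.
RAW_BYTES = 2
SCALED_BYTES = 8


def read_copy_bytes_per_s(channels: int, rate_sps: float, mode: str) -> float:
    """Bytes/s touched by the DAQmx Read copy (read raw + write scaled)."""
    samples_per_s = channels * rate_sps
    if mode == "log":
        return 0.0                      # data goes straight from buffer to disk
    if mode == "log_and_read":
        return samples_per_s * (RAW_BYTES + SCALED_BYTES)
    raise ValueError(f"unknown mode: {mode!r}")


for mode in ("log", "log_and_read"):
    mb = read_copy_bytes_per_s(6, 2.5e6, mode) / 1e6
    print(f"{mode:>12}: {mb:.0f} MB/s of extra copy traffic")
```

For 6 channels at 2.5 MS/s this model gives about 150 MB/s of additional memory traffic when the data is also read into the application, which is the overhead that pure "Log" mode avoids.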

Let me know if you have any further questions.

Andy McRorie

NI R&D

09-13-2010 02:39 AM


Hi,

I made a small comparison of how my measurement system performs as the file write size varies. Below you can see some results.

Fig. 1 Available samples per channel measured during high-speed data acquisition with sampling rate of 1MS/s/ch.

Fig. 2 Available samples per channel measured during high-speed data acquisition with sampling rate of 2.5MS/s/ch.

For this test the buffer size was set to 1 MS. The best performance was reached with a file write size of 2048 S in both cases. With 512 S the buffer was overwritten: the available samples per channel exceeded the input buffer size and the data acquisition failed. At 2.5 MS/s/ch, 128 S and 1024 S failed as well.
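A simple way to reason about these overflows: "available samples per channel" grows whenever the disk drains samples more slowly than they are acquired, and the acquisition fails once that backlog exceeds the buffer. The sketch below assumes constant rates (samples/s per channel) and an initially empty buffer; the 2.4 MS/s drain rate is a hypothetical value for illustration, while the 1 MS buffer and the acquisition rates come from the test above.

```python
import math

# Buffer-overflow arithmetic for a continuous acquisition that is logged to
# disk. Rates are in samples/s per channel; the buffer starts empty.


def seconds_to_overflow(buffer_samples: float, acq_rate: float,
                        drain_rate: float) -> float:
    """Time until the backlog exceeds the buffer at constant rates."""
    if drain_rate >= acq_rate:
        return math.inf                 # backlog never grows
    return buffer_samples / (acq_rate - drain_rate)


def max_stall_seconds(buffer_samples: float, acq_rate: float,
                      backlog: float = 0.0) -> float:
    """Longest complete disk stall the buffer can absorb."""
    return (buffer_samples - backlog) / acq_rate


BUFFER = 1e6                            # 1 MS buffer used in the test
print(seconds_to_overflow(BUFFER, 2.5e6, 2.4e6))   # hypothetical drain rate
print(max_stall_seconds(BUFFER, 1.0e6))            # 1 MS/s/ch case
print(max_stall_seconds(BUFFER, 2.5e6))            # 2.5 MS/s/ch case
```

Under these assumptions, a 1 MS buffer absorbs a complete disk stall of at most 1 s at 1 MS/s/ch but only 0.4 s at 2.5 MS/s/ch, which is one reason the higher rate is more sensitive to the file write size.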

At the same time I also measured CPU load and RAM usage. I observed that RAM usage is unaffected by data logging and behaves the same way in all cases. From CPU monitoring it is difficult to extract clear tendencies or correlations between CPU usage and buffer usage. At 2.5 MS/s/ch (6 channels), the lowest CPU usage was observed with the file write size set to 2048 S.

In almost all cases the CPU usage was around 35%. Occasionally it even reached 100%, but this may be due to the non-deterministic operating system. At 1 MS/s/ch, the lowest CPU usage was obtained with 1024 S. In general, CPU load does not seem to be directly related to buffer usage, at least for my measurement setup.

The figures above also show that for file write sizes below 512 S (the HDD sector size is 512 B), some trends in buffer usage are recognizable. This may be caused by overall I/O performance.

Looking forward to hearing your comments.

Best regards,

Lukasz Kocewiak

09-21-2010 03:06 PM


It's interesting that NI has stated that SSDs can occasionally go 'out to lunch', considering that SSDs are an option for their PXI-module internal 4-disk RAID. I have been experimenting with SSDs myself in a 12-disk RAID array: a 12-disk enclosure with 4 eSATA ports (in a 1-1-5-5 port multiplier configuration) tied to a RocketRAID 2314 PCIe controller. I would have to agree with NI's 'out to lunch' comment. I have noticed that my logging rate can suddenly crater, and the drive activity lights go dark for a second or more. I don't think SSDs are quite ready for RAID 0 applications, especially since I have learned that they cannot be restored to 'factory fresh' condition without removing each drive from the RAID. There is a 'TRIM' command that will 'clean up' an SSD when it is idle, but only Vista and 7 support it, and it is not supported in RAID 0. I would love to hear from NI how their SSD option for the 4-disk RAID 0 works out in the long term. After multiple fill-ups and deletions, can it still sustain 200 MB/s?

I would also be very interested in an NI article that did some 'real-world' benchmarking with TDMS streaming data from, say, 6 different cards (each with their own task). Hopefully they will produce such an article someday.