Machine Vision

Subpixel image shift

11-16-2011 06:19 PM

Bruce -

Thanks for taking the time to elaborate on your method.

At times I have 2000 images to align. Another approach I am considering this afternoon is the following (in this order):

1) Histogram-match all of the images to a reference image (see http://forums.ni.com/t5/Machine-Vision/Histogram-matching/m-p/192576#M5513)

2) Resample each image and the reference image by a factor of 4 using IMAQ Resample

3) Compute the difference between the IMAQ Centroid outputs of the resampled image and those of the resampled reference image

4) Shift the resampled image with IMAQ Shift by the difference computed in step 3

5) Downsample each image by a factor of 4 to return to the original image size
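A rough NumPy sketch of steps 3 to 5, for illustration only. It assumes IMAQ Centroid returns an intensity-weighted centroid, and instead of physically resampling, shifting, and downsampling, it models the net effect: an integer shift on a grid resampled by 4 can only realize multiples of 1/4 pixel. The function names are hypothetical.

```python
import numpy as np


def intensity_centroid(img):
    """Intensity-weighted centroid (x, y) of a 2D array, in pixels.
    Assumption: IMAQ Centroid behaves like this weighted centroid."""
    img = np.asarray(img, dtype=float)
    ys, xs = np.indices(img.shape)
    total = img.sum()
    return (xs * img).sum() / total, (ys * img).sum() / total


def quantize(value, upsample):
    """Round to the nearest 1/upsample pixel, which is all an integer
    shift on a grid resampled by `upsample` can represent."""
    return np.round(value * upsample) / upsample


def centroid_shift(img, ref, upsample=4):
    """Shift (dx, dy) to apply to `img` so its centroid lands on the
    centroid of `ref`, quantized to the upsampled grid resolution."""
    cx, cy = intensity_centroid(img)
    rx, ry = intensity_centroid(ref)
    return quantize(rx - cx, upsample), quantize(ry - cy, upsample)
```

With `upsample=4`, a true offset of 2.1 pixels comes back as 2.0, which is the 1/4-pixel floor of this route.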

In theory, this seems like a good approach to me. However, my images are already ~2000 x 2000 pixels, so resampling by a factor of 4 gives 8000 x 8000 pixels per image. This is why I would probably keep the resample factor at 4 rather than use a larger one.

In any case, I know this will be a time-consuming process, but time is not the issue. I hope to test the different approaches tomorrow and will report back when I have results.
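The memory cost is easy to put numbers on: a 4x resample along each axis multiplies the pixel count by 16. The pixel depths below are assumptions, since the post does not say which IMAQ image type is used.

```python
def resampled_bytes(width, height, factor, bytes_per_pixel):
    """Bytes for one image after resampling by `factor` along each axis."""
    return (width * factor) * (height * factor) * bytes_per_pixel


# Assumed pixel depths: 8-bit, 16-bit, and single-precision float images.
for name, bpp in [("U8", 1), ("U16", 2), ("SGL", 4)]:
    mb = resampled_bytes(2000, 2000, 4, bpp) / 1e6
    print(f"{name}: {mb:.0f} MB per 8000 x 8000 image")
```

This prints 64, 128, and 256 MB for the three types; a factor of 8 would multiply each of those by 4 again.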

Don

11-16-2011 08:43 PM

I don't think I would go the route of resampling x4. Like you said, it uses large amounts of memory. It also only has 1/4 pixel resolution when all is said and done. My shifting filter can do any fraction of a pixel, and it doesn't use any extra memory.

I'm not really sure how you are going to align the images. I would probably use some sort of convolution to see if the edges match up. You could do very fast convolution tests with narrow vertical and horizontal strips to figure out how much they need to move. Unless all the images are the same, I don't see how the centroid will help you. I suppose if you are trying to track a dot or something it might work. More information or some images might generate more useful help.
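One way to read the suggestion of fast tests on narrow strips: average a narrow strip into a 1D profile, cross-correlate it with the same strip from the reference image, and refine the correlation peak to a fraction of a pixel with a three-point parabola. This is a minimal NumPy sketch of that idea, not Bruce's code; the helper names and the parabolic refinement are assumptions. Passing `np.diff` of each profile instead would weight the match toward edges.

```python
import numpy as np


def strip_profile(img, start, stop, axis=0):
    """Average rows start:stop (axis=0, profile along x) or
    columns start:stop (axis=1, profile along y) into a 1D profile."""
    img = np.asarray(img, dtype=float)
    if axis == 0:
        return img[start:stop, :].mean(axis=0)
    return img[:, start:stop].mean(axis=1)


def strip_shift(profile, ref_profile):
    """Shift (pixels) to apply to `profile` so it lines up with
    `ref_profile`. Hypothetical helper: full cross-correlation plus a
    3-point parabolic fit around the integer peak (my choice)."""
    a = np.asarray(profile, dtype=float)
    b = np.asarray(ref_profile, dtype=float)
    corr = np.correlate(b, a, mode="full")
    k = int(np.argmax(corr))
    frac = 0.0
    if 0 < k < len(corr) - 1:
        y0, y1, y2 = corr[k - 1], corr[k], corr[k + 1]
        denom = y0 - 2.0 * y1 + y2
        if denom != 0:
            frac = 0.5 * (y0 - y2) / denom  # vertex of the parabola
    # Index k of the "full" correlation corresponds to lag k - (len(a) - 1).
    return (k - (len(a) - 1)) + frac
```

Running this on a few horizontal strips gives the X shift and on a few vertical strips the Y shift, without touching the full image.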

Bruce

Ammons Engineering

11-17-2011 08:08 AM

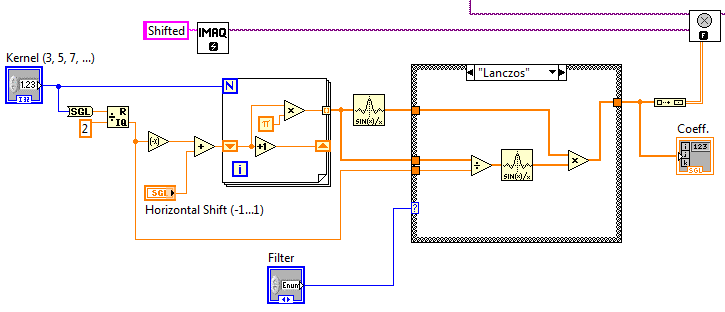

Agree with Bruce, resampling is just "workaround", but not very elegant way. You can use Lanczos or other windowed sinc function (for example, Hamming or Blackmann), then convolution with obtained coeffs, something like that:
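A NumPy sketch of the windowed-sinc approach, written for illustration and not a transcription of Andrey's VI. Assumptions: a Lanczos kernel with a = 3 taps on each side, edge replication at the borders, and taps normalized to sum to 1. The shift is separable, so a 2D shift is one 1D pass along x and one along y.

```python
import numpy as np


def lanczos_shift(img, shift, axis, a=3):
    """Shift `img` by `shift` pixels along `axis` (out[n] ~ img(n - shift)).
    Assumptions: Lanczos-a kernel, edge-replicated borders."""
    img = np.asarray(img, dtype=float)
    # Taps j with |j + shift| <= a; out[n] = sum_j w_j * img[n + j].
    j = np.arange(int(np.ceil(-a - shift)), int(np.floor(a - shift)) + 1)
    x = j + shift
    w = np.sinc(x) * np.sinc(x / a)  # np.sinc(x) is sin(pi x) / (pi x)
    w /= w.sum()  # normalize so flat regions stay flat
    pad = int(np.abs(j).max())
    pad_width = [(0, 0)] * img.ndim
    pad_width[axis] = (pad, pad)
    padded = np.pad(img, pad_width, mode="edge")
    n = img.shape[axis]
    out = np.zeros_like(img)
    for jj, ww in zip(j, w):
        out += ww * np.take(padded, np.arange(pad + jj, pad + jj + n), axis=axis)
    return out


def lanczos_shift_2d(img, dx, dy, a=3):
    """Separable 2D subpixel shift: along x (columns), then along y (rows)."""
    return lanczos_shift(lanczos_shift(img, dx, axis=1, a=a), dy, axis=0, a=a)
```

Replacing the `np.sinc(x / a)` window factor with a Hamming or Blackman window evaluated over the same |x| <= a support gives the other variants mentioned above. Unlike the resample route, the shift can be any fraction of a pixel and needs only a padded copy of the image.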

11-17-2011 08:53 AM

Hello Bruce & Andrey:

Here is some really good background on the project.

http://forums.ni.com/t5/Machine-Vision/Image-Registration-Alignment/td-p/1478312

For now, I am using a Python script supplied by a peer that does some curve fitting and uses a subpixel shift routine, with great results. Since I have some time this coming week before Thanksgiving, I was hoping to get back to this and obtain similar results in pure LabVIEW so I can integrate it into other existing LabVIEW code.

Sincerely,

Don

11-17-2011 03:23 PM

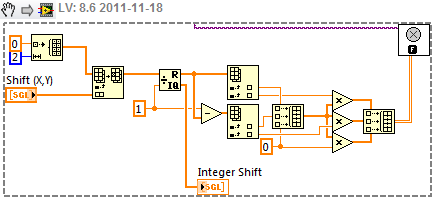

One more alternative which gives roughly equivalent results is to use a Bilinear Interpolation for the fractional shift:
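A NumPy sketch of a bilinear fractional shift, written under my own assumptions: samples that fall outside the image are clamped to the nearest edge pixel, and the convention is out[y, x] ≈ img(y - dy, x - dx). For a uniform shift every output pixel uses the same four weights, (1 - fx)(1 - fy), fx(1 - fy), (1 - fx)fy and fx·fy, so it amounts to a fixed 2x2 convolution.

```python
import numpy as np


def bilinear_shift(img, dx, dy):
    """Shift img by (dx, dy) pixels: out[y, x] ~ img(y - dy, x - dx).
    Assumption: out-of-range samples are clamped to the image border."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    ys, xs = np.indices((h, w), dtype=float)
    sx = np.clip(xs - dx, 0, w - 1)  # source coordinates
    sy = np.clip(ys - dy, 0, h - 1)
    x0 = np.floor(sx).astype(int)
    y0 = np.floor(sy).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    fx = sx - x0  # fractional parts become the interpolation weights
    fy = sy - y0
    top = (1 - fx) * img[y0, x0] + fx * img[y0, x1]
    bottom = (1 - fx) * img[y1, x0] + fx * img[y1, x1]
    return (1 - fy) * top + fy * bottom
```

The trade-off is a little more smoothing than a longer windowed-sinc kernel, largest at half-pixel shifts, where each output is a plain average of neighboring pixels.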

11-18-2011 08:02 AM

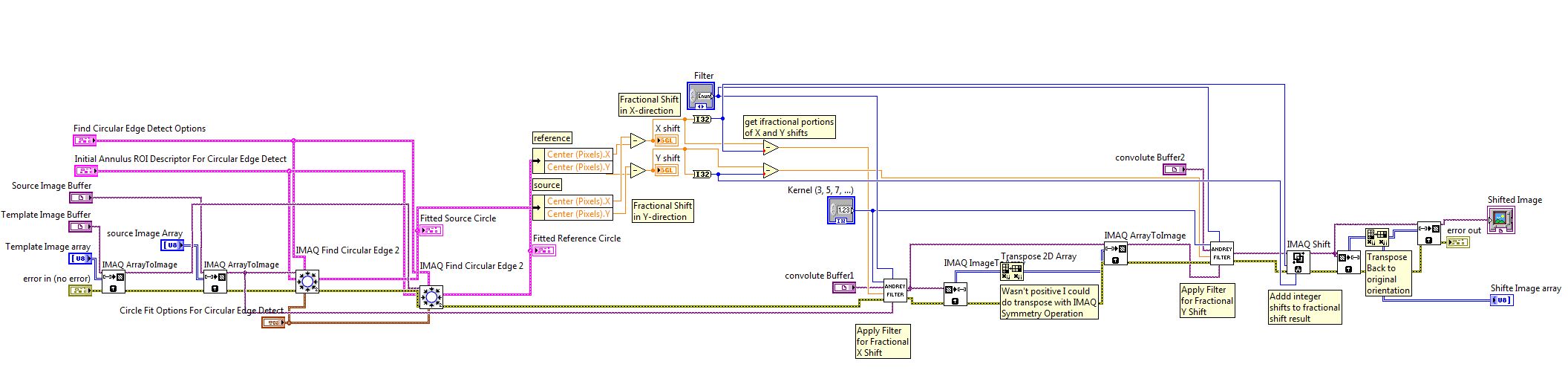

I plan to try the following strategy with each of the filters you proposed. I started with Andrey's and added some code to handle the border issue.

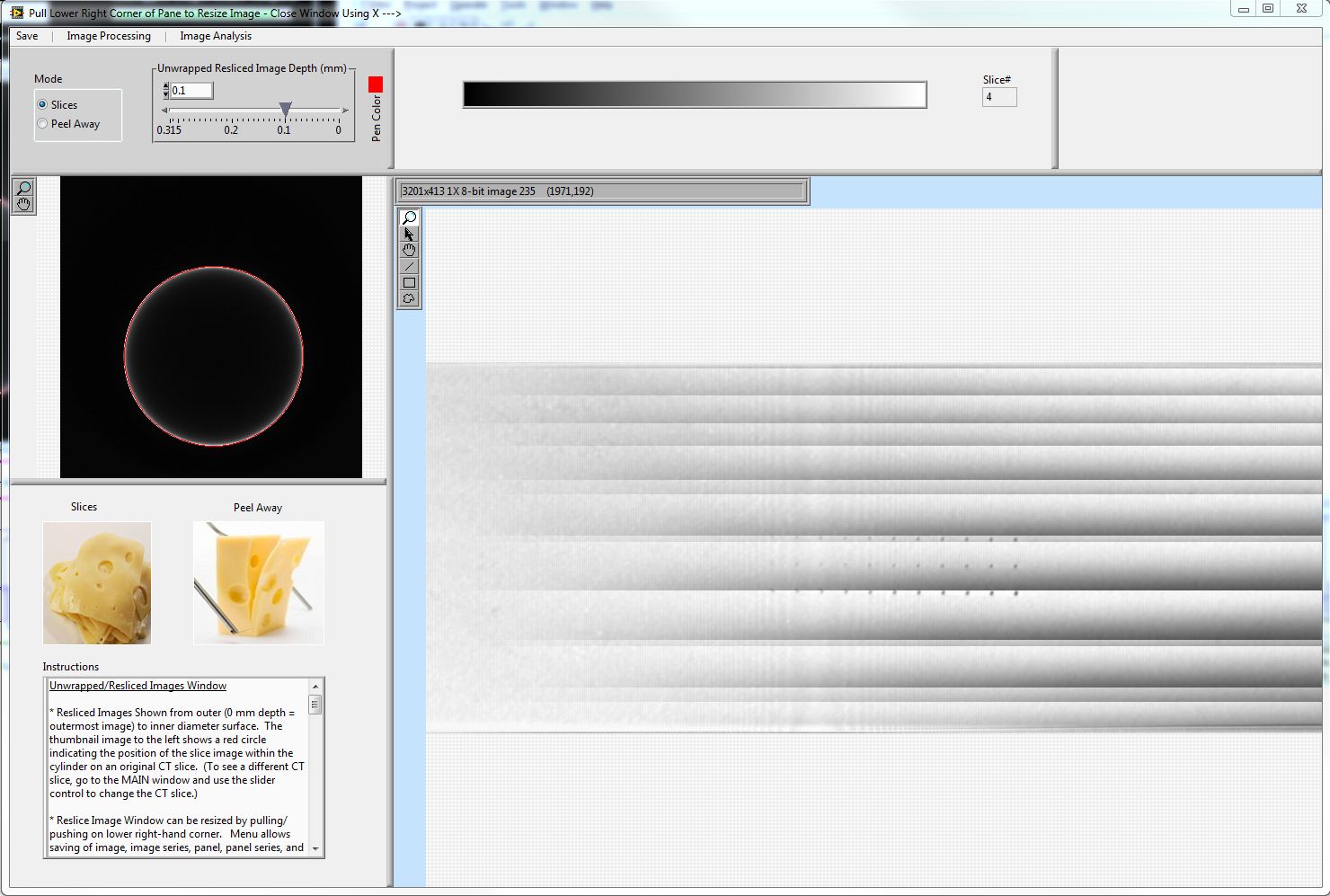

I first went back to my circular edge detection scheme, which provides subpixel resolution for the circle's Center (x,y).
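How IMAQ's circular edge detection computes its subpixel center is not described in this thread. A common way to get a subpixel center from many edge points is an algebraic least-squares (Kasa) circle fit, sketched here in NumPy as a swapped-in technique; it assumes the edge points have already been located.

```python
import numpy as np


def fit_circle(xs, ys):
    """Algebraic (Kasa) least-squares circle fit to edge points.
    Solves x^2 + y^2 + D*x + E*y + F = 0 for D, E, F.
    Returns (cx, cy, r). Not necessarily what IMAQ does internally."""
    xs = np.asarray(xs, dtype=float)
    ys = np.asarray(ys, dtype=float)
    A = np.column_stack([xs, ys, np.ones_like(xs)])
    b = -(xs ** 2 + ys ** 2)
    (D, E, F), *_ = np.linalg.lstsq(A, b, rcond=None)
    cx, cy = -D / 2.0, -E / 2.0
    r = np.sqrt(cx ** 2 + cy ** 2 - F)
    return cx, cy, r
```

Position noise on individual edge points averages down over many points, which is where the subpixel precision of the center comes from.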

I think Andrey's filter is working fine, but I am still not where I need to be: I still see discrete horizontal lines in the "unwrapped" image (I stack the circle images to form a cylinder and unwrap the cylinder to form a sheet) rather than the smooth image I get from ImageJ RigidBody Registration and the custom Python code.

I suspect the problem is with the edge detection itself rather than the subpixel registration. I will plug in Bruce's and Greg's subVIs next. I can also try modifying some of the parameters in Andrey's code, such as kernel size and filter type, but again, I now suspect the discontinuity lines may be a more fundamental issue related to the IMAQ algorithm.

I may experiment again with thresholding the circle, filling it, and taking the centroid, to see if Centroid gives better results.
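A NumPy sketch of the threshold-then-centroid alternative, using a synthetic disk as test data (`make_disk` is a hypothetical helper). Filling is omitted here; for a ring with gaps, `scipy.ndimage.binary_fill_holes` could be applied to the mask first. Unlike an intensity centroid, the binary centroid ignores grayscale values, so its subpixel precision comes only from averaging over many boundary pixels.

```python
import numpy as np


def make_disk(shape, cx, cy, r):
    """Hypothetical test image: 1.0 inside a disk centered at (cx, cy)."""
    ys, xs = np.indices(shape)
    return ((xs - cx) ** 2 + (ys - cy) ** 2 <= r ** 2).astype(float)


def threshold_centroid(img, threshold):
    """Centroid (x, y) of the binary mask img > threshold.
    Assumption: the object is already filled (no hole filling here)."""
    ys, xs = np.nonzero(np.asarray(img) > threshold)
    return xs.mean(), ys.mean()
```

For a disk centered at (50.5, 40.5) the lattice is symmetric about the center, so the binary centroid lands exactly on it.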

11-18-2011 08:39 AM

Don,

If your final goal is to unwrap the circle into a strip, I would start with that first. I would find the center of the circle in each image, then use IMAQ Unwrap to get a strip. I would include a few pixels of thickness to make sure you get the details you need. You could analyze the strip to see if you hit the center (check for sin and cos components) and iterate. You could also equalize each strip if needed. Then you could combine the strips into a 3D sheet several pixels thick. I'm not sure how you would convert that to a 2D sheet, but there must be some rule for that.
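A NumPy sketch of the unwrap step, for illustration only; IMAQ Unwrap is the tool actually used in this thread and this is not its implementation. It samples a band of radii a few pixels thick around an assumed center, one column per angle, with bilinear interpolation. It also illustrates the point that the unwrap already interpolates once.

```python
import numpy as np


def unwrap_annulus(img, cx, cy, r_min, r_max, n_radii, n_angles=360):
    """Resample an annulus centered at (cx, cy) into a strip with
    rows = radius and columns = angle, using bilinear interpolation.
    Assumption: samples outside the image are clamped to the border."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    radii = np.linspace(r_min, r_max, n_radii)
    angles = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    rr, aa = np.meshgrid(radii, angles, indexing="ij")
    sx = np.clip(cx + rr * np.cos(aa), 0, w - 1)  # x = cx + r cos(theta)
    sy = np.clip(cy + rr * np.sin(aa), 0, h - 1)  # y = cy + r sin(theta)
    x0 = np.minimum(np.floor(sx).astype(int), w - 2)
    y0 = np.minimum(np.floor(sy).astype(int), h - 2)
    fx, fy = sx - x0, sy - y0
    top = (1 - fx) * img[y0, x0] + fx * img[y0, x0 + 1]
    bottom = (1 - fx) * img[y0 + 1, x0] + fx * img[y0 + 1, x0 + 1]
    return (1 - fy) * top + fy * bottom, radii, angles
```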

I think doing subpixel manipulations on the original image is just adding blur to your final results, since you have to do more interpolations for the unwrap anyway.

Bruce

Ammons Engineering

11-18-2011 09:06 AM - edited 11-18-2011 09:08 AM

Hi Bruce -

I do use the Unwrap procedure to get my strips.

However, to date we have used either ImageJ RigidBody RegStack or the custom Python script I mentioned, with really good results, to align the image stack before unwrapping in LabVIEW/Vision. The Python script has a custom linear fitting routine to smooth out the oscillations, and now that I think about it, that may be the next step. When I look at the shifts between the reference and source images, they are at most about 7 pixels in either direction.

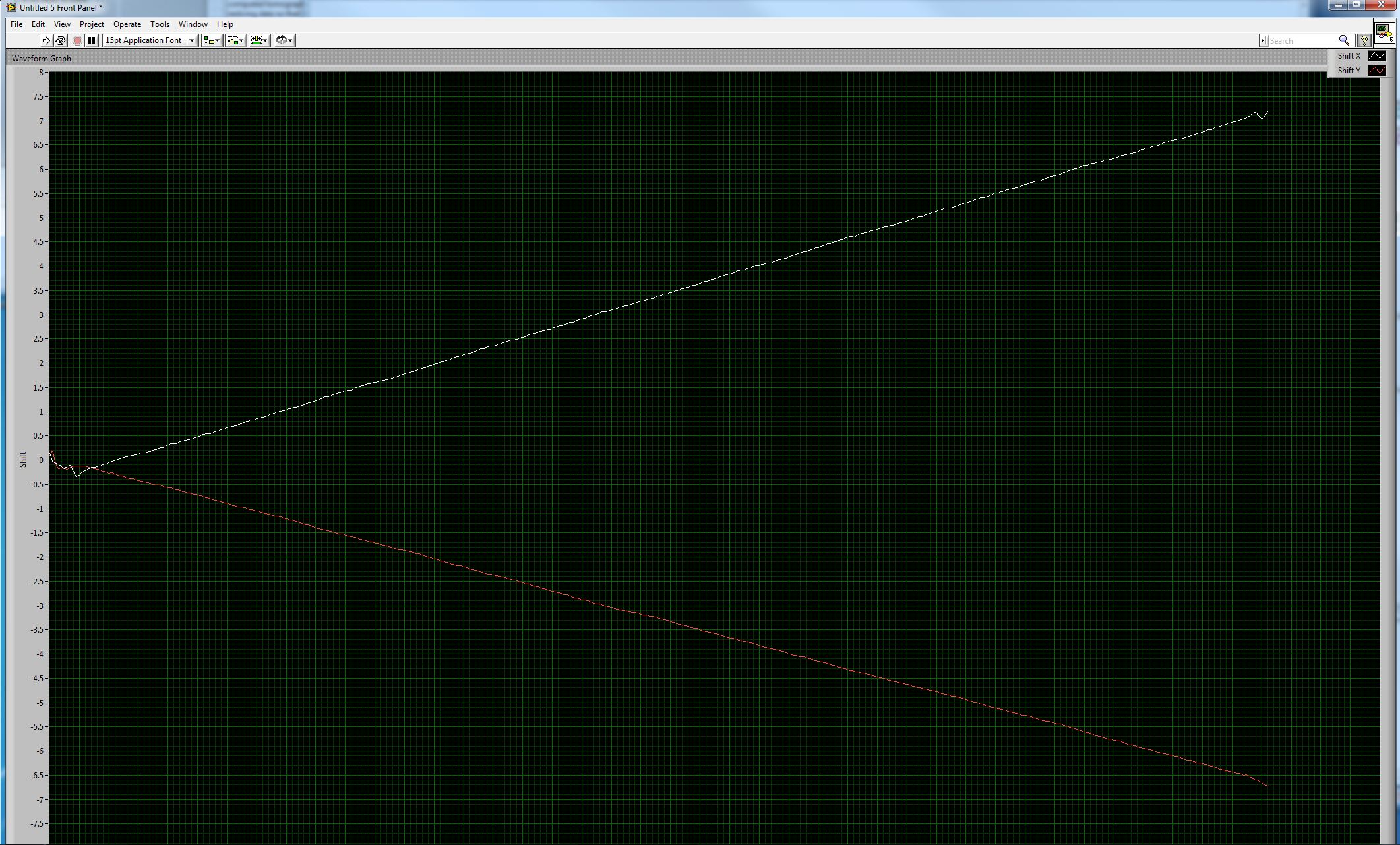

Looking at this plot of shifts, we can see the slight oscillations probably resulting in the lines on the unwrapped image:

I think that the subpixel resolution will help me get a better fit.

11-18-2011 01:56 PM - edited 11-18-2011 01:57 PM

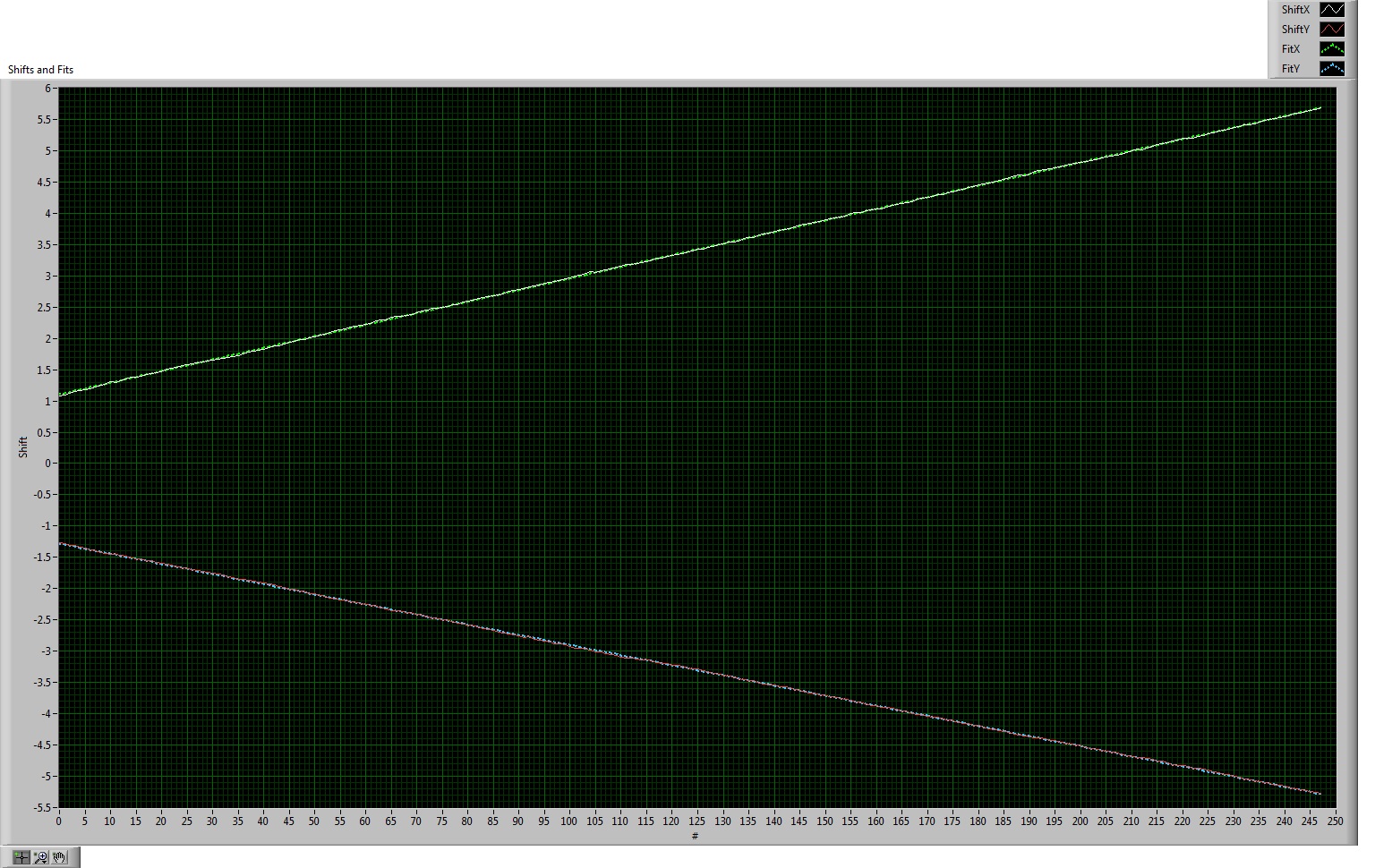

I applied a linear curve fit to the measured shifts from the circular edge detection routine (source minus reference center x,y position) to get a slope and intercept, then computed the shifts in another loop from Y = mx + b, hoping to get a smoother unwrapped image when applying the shifts. The reference image is an excellent-quality image from the center of the stack. I use the middle 60% of the image stack for the linear fit, in both the X and Y directions. As I mentioned earlier, the subpixel resolution of the circle center coordinates from the circular edge detection routine helps here because it should give a better fit. As it turns out, though, that subpixel resolution is so good that I am not sure I even need the curve-fitting step (the best linear fit and the measured shifts from the circular edge detection center outputs lie almost on top of each other).

However, I still run into the same problem with horizontal banding in the unwrapped image. I tried reversing the shift direction for both X and Y, and that made things worse. I tried applying each of the Ammons, Andrey, and GregS subpixel shifting routines on the back end for the actual shifting, after computing the shifts from the linear fit, but I still get the banding. Bruce, my procedure is basically what you described in your last post. I am only showing one unwrapped image as an example, but there are a number of them, separated by the voxel dimension of the CT scan. The edge detection routine appears to work really well, so I am not sure why this is not working. I have to stare at this a little longer. All of the help has been greatly appreciated.
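A NumPy sketch of the smoothing step as described: fit a line to the measured shifts over the middle 60% of the stack, then evaluate Y = mx + b for every slice, once for X and once for Y. The function name and the exact way the middle 60% is selected (trimming 20% from each end) are assumptions.

```python
import numpy as np


def smoothed_shifts(measured, fraction=0.6):
    """Fit measured per-slice shifts with a line using only the middle
    `fraction` of the stack, then evaluate the line for every slice.
    Assumption: equal trimming from both ends of the stack."""
    measured = np.asarray(measured, dtype=float)
    n = len(measured)
    idx = np.arange(n)
    start = int(round(n * (1 - fraction) / 2))
    stop = n - start
    m, b = np.polyfit(idx[start:stop], measured[start:stop], 1)
    return m * idx + b, (m, b)
```

Calling it once on the X shifts and once on the Y shifts gives the two lines; if the fitted and measured values nearly coincide, as observed here, the fit adds little.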

11-18-2011 03:11 PM

Don,

Any way you can post a small set of images that I could play with? I really don't understand where the banding is coming from. I suspect the interpolation during the unwrap is the issue. Is the image with banding a single layer of the 3D slab after unwrapping? If you look at a single strip, is there any sinusoidal variation?
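A sketch of the sinusoidal check. If the unwrap center is off from the true circle center by a small (ex, ey), the edge radius measured in each column of the strip varies as r0 - ex·cos θ - ey·sin θ, with angles measured as x = cx + r·cos θ, y = cy + r·sin θ. Fitting a constant plus cos and sin terms therefore gives the center correction directly. It assumes one edge radius per column has already been extracted from the strip, which is not shown; the function name is hypothetical.

```python
import numpy as np


def center_correction(angles, edge_radii):
    """Least-squares fit r(theta) = r0 + p*cos(theta) + q*sin(theta).
    For a small centering error, the true center is approximately
    (cx + p, cy + q), where (cx, cy) is the center used for the unwrap.
    Assumption: edge radii were measured per column beforehand."""
    angles = np.asarray(angles, dtype=float)
    A = np.column_stack([np.ones_like(angles), np.cos(angles), np.sin(angles)])
    (r0, p, q), *_ = np.linalg.lstsq(A, np.asarray(edge_radii, dtype=float), rcond=None)
    return r0, p, q
```

A nonzero p or q means the center missed; applying the correction and unwrapping again is the iteration Bruce suggested.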

Bruce

Ammons Engineering