Coordinate transformation

10-16-2018 05:33 AM

Hi,

We have a dispensing robot with a camera mounted on the robot arm. The camera is rotated relative to the robot coordinate system.

We would like to transform pixel coordinates to world coordinates.

Everything is in 2D: all points that we dispense on (and look at with vision) have the same Z value (height).

The goal is to use 3 reference points for which we know the real-world coordinates, the pixel positions, AND the robot positions when the camera is located above each reference point.

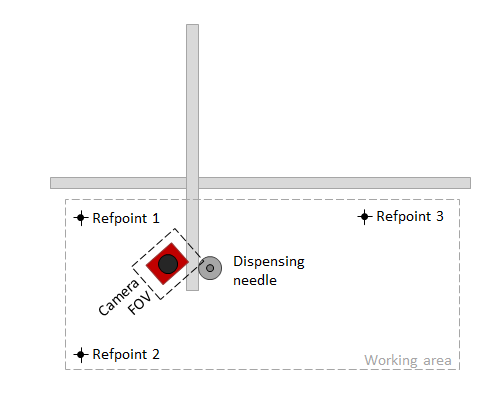

This is our setup:

- We have 3 fixed reference points. Let's call them P1, P2, and P3.

They are determined by moving the robot so that the tip of the dispensing needle is touching these reference points exactly, and writing down the encoder values of the robot.

- These reference points are at the edges of the working area of the robot, so we determined 3 robot positions (P4, P5, P6), where the camera is above the reference points (so that the reference point can be located with the camera).

- So we get a table:

| Robot position | Pixel location of the reference point | Real-world reference point |
|---|---|---|
| P4 (x,y) | A(x,y) | P1 |
| P5 (x,y) | B(x,y) | P2 |
| P6 (x,y) | C(x,y) | P3 |

So we are looking for the math: if the robot is at position U(x,y) and the camera measures a point at pixel position V(x,y), what is the real-world position of that point?
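A sketch of the relationship being asked for, assuming the camera does not rotate relative to the robot axes, all points share one Z height, lens distortion is negligible, and robot coordinates are needle-tip coordinates (as they are when the reference points are taught by touching them with the needle). The symbols W, K and k are introduced here for illustration only:

$$
\begin{pmatrix} W_x \\ W_y \end{pmatrix}
=
\begin{pmatrix} U_x \\ U_y \end{pmatrix}
+
\begin{pmatrix} K_{11} & K_{12} \\ K_{21} & K_{22} \end{pmatrix}
\begin{pmatrix} V_x \\ V_y \end{pmatrix}
+
\begin{pmatrix} k_x \\ k_y \end{pmatrix}
$$

W is the real-world position of the point, U the robot position and V the pixel position. The 2×2 matrix K carries the pixel-to-mm scale and the camera rotation, and k is the fixed camera-to-needle offset. That is three unknowns per axis, so at least three non-collinear observations are needed to determine them.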

I hope this makes some sense...

Thomas.

10-16-2018 08:02 PM

It sounds like the camera is attached to the end of the robot arm. The relationship between the camera and the needle is fixed, if I understand correctly. That means you are defining the same point in the camera FOV three times. This will not tell you anything about calibration for the FOV.

You will need multiple points in a single FOV to define the calibration of the camera. This would assume that the plane that the camera sees is fixed, and doesn't change as the robot moves. If the robot rotates the camera relative to the XY plane (any axis), this will not work.

Can you explain your robot and the camera better? A sketch or photo would help. I might be able to suggest how to calibrate it if I can see how everything goes together.

Bruce

Ammons Engineering

10-17-2018 02:54 AM

Hi Bruce,

Thank you for your response. You are right: the camera and the dispensing needle are mounted on the robot arm (XY). See the sketch below:

To calculate the relationship between the real-world coordinates and the camera coordinates, I thought of 2 methods:

- We can use 3 reference points that are close to each other, within the FOV of the camera. If we know the real-world coordinates of these reference points, we can measure their pixel coordinates and calculate the pixel-to-mm ratio (resolution) and the angle between the camera and real-world coordinate systems. I think this is what you suggested.

- However, the FOV of the camera is very small compared to the working area of the robot, so we would be calculating the coordinate transformation from measurements within the FOV only. Therefore, I would like to use 3 reference points covering the complete working area.

By using the known real-world coordinates of these reference points (P1..P3), the 3 robot positions at which the images of the reference points were taken (P4..P6), and the measured pixel positions of the reference points in the camera FOV, I would expect that the coordinate transformation can be calculated.
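The first method (three reference points inside a single FOV) can be sketched in Python as below. This is an illustration, not code from the thread: it models the pixel-to-world mapping as one complex multiplication plus an offset, w = a·p + b, so |a| is the mm-per-pixel resolution and arg(a) is the camera angle. It assumes square pixels, negligible lens distortion, and pixel and world axes with the same handedness (if the image y axis points down and the world y axis points up, negate the pixel y values first). The function name `scale_and_angle` is hypothetical.

```python
import numpy as np


def scale_and_angle(pixel_pts, world_pts):
    """Fit world = a * pixel + b, treating 2D points as complex numbers.

    A complex 'a' encodes a uniform scale (|a|, mm per pixel) and a rotation
    (arg(a), camera angle); 'b' is the translation in world units.
    Assumes square pixels, negligible distortion and axes of equal handedness.
    """
    p = np.array([complex(x, y) for x, y in pixel_pts])
    w = np.array([complex(x, y) for x, y in world_pts])
    design = np.column_stack([p, np.ones_like(p)])
    # Least squares over all points: 2 complex unknowns (a, b).
    (a, b), *_ = np.linalg.lstsq(design, w, rcond=None)
    return float(abs(a)), float(np.degrees(np.angle(a))), complex(b)


if __name__ == "__main__":
    # Synthetic data only: 0.05 mm/pixel, camera rotated 30 degrees, offset (10, 20) mm.
    a_true = 0.05 * np.exp(1j * np.radians(30.0))
    pixels = [(100.0, 80.0), (500.0, 90.0), (300.0, 400.0)]
    world = [(z.real, z.imag)
             for z in (a_true * complex(x, y) + complex(10.0, 20.0) for x, y in pixels)]
    print(scale_and_angle(pixels, world))
```

With three or more points this is a least-squares fit, so small errors in the measured dot centres are averaged rather than passed straight through.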

Thomas.

10-17-2018 11:37 PM

You are not going to be able to get it to work that way, if I understand you correctly. You need to map the pixel coordinates relative to the robot coordinates.

So the camera is attached at the end of the robot, and nothing rotates, correct? So you need to determine the relationship between a point in the camera FOV relative to the robot position, correct?

The simplest way is to start with the robot at a known position, probably around the center of motion, with the needle touching the center of a dot. Move the robot a known amount in the X and/or Y directions to get the dot in the FOV of the camera. Record the pixel position of the center of the dot, and the robot offset from the original position. Move the robot to two other positions, recording the pixel position and robot offset from original position. The three points must be in a rough triangle (not a straight line). You could do several additional points, but it is unlikely to improve your results. The spacing should be a good fraction of the image size.
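The "rough triangle" requirement can be checked before running the fit. A minimal sketch, assuming exactly three dot positions in pixels; the 10% minimum area is an arbitrary, hypothetical threshold standing in for "a good fraction of the image size", not a number from the thread.

```python
def triangle_area_px(p1, p2, p3):
    """Area in pixels^2 of the triangle through three dot centres (0 if collinear)."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    # Half the absolute 2D cross product of the two edge vectors from p1.
    return 0.5 * abs((x2 - x1) * (y3 - y1) - (x3 - x1) * (y2 - y1))


def points_well_spread(p1, p2, p3, image_w, image_h, min_fraction=0.1):
    """Hypothetical acceptance test: the triangle must cover at least
    min_fraction of the image area (the threshold is an assumption)."""
    return triangle_area_px(p1, p2, p3) >= min_fraction * image_w * image_h


if __name__ == "__main__":
    print(points_well_spread((50, 50), (600, 60), (300, 430), 640, 480))  # True
    print(points_well_spread((50, 50), (300, 50), (600, 50), 640, 480))   # False: collinear
```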

The robot offset (Rx, Ry) of a point in the camera FOV can be written as a function of the pixel coordinates (Px, Py) of the point. Rx = A*Px + B*Py + C, and Ry = D*Px + E*Py + F. You can use linear regression to determine the coefficients for these functions from the data you have collected. You will do a separate regression for the X and Y offsets.
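A minimal Python/NumPy sketch of the two regressions described above (later in the thread Thomas did this with "general linear fit"; this is an equivalent least-squares formulation, not his code). Robot offsets are taken as robot position minus the position where the needle touched the dot, and the function name is hypothetical.

```python
import numpy as np


def fit_offset_model(pixel_pts, robot_offsets):
    """Least-squares fit of Rx = A*Px + B*Py + C and Ry = D*Px + E*Py + F.

    pixel_pts:     N x 2 dot centres in pixels (N >= 3, not collinear)
    robot_offsets: N x 2 robot offsets from the needle-on-dot position
    Returns ((A, B, C), (D, E, F)).
    """
    P = np.asarray(pixel_pts, dtype=float)
    R = np.asarray(robot_offsets, dtype=float)
    # One design matrix [Px, Py, 1] shared by both regressions.
    design = np.column_stack([P[:, 0], P[:, 1], np.ones(len(P))])
    coeffs_x, *_ = np.linalg.lstsq(design, R[:, 0], rcond=None)  # A, B, C
    coeffs_y, *_ = np.linalg.lstsq(design, R[:, 1], rcond=None)  # D, E, F
    return tuple(coeffs_x), tuple(coeffs_y)
```

With exactly three points the fit is exact; extra points only help if the dot-centre measurements are noisy.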

After this, you can locate a point in the image and use the equations to figure out the position of the point relative to the robot's current position. This relative position could be used to move the point directly under the needle, as well.
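Applying the fitted coefficients, as a sketch: with the sign convention assumed in the procedure above (offset = robot position minus the needle-on-dot position), a feature seen at pixel (Px, Py) while the robot is at (Ux, Uy) lies at (Ux - Rx, Uy - Ry) in robot coordinates, so moving the robot there puts the needle on it. This assumes the same Z height and no camera rotation between calibration and use; the function names are hypothetical.

```python
def pixel_to_offset(coeffs_x, coeffs_y, px, py):
    """Evaluate Rx = A*Px + B*Py + C and Ry = D*Px + E*Py + F."""
    a, b, c = coeffs_x
    d, e, f = coeffs_y
    return a * px + b * py + c, d * px + e * py + f


def needle_target(robot_xy, coeffs_x, coeffs_y, px, py):
    """Robot position that puts the needle on a feature seen at pixel (px, py)
    while the robot is at robot_xy. Assumes offsets were recorded as
    robot position minus needle-on-dot position during calibration."""
    rx, ry = pixel_to_offset(coeffs_x, coeffs_y, px, py)
    return robot_xy[0] - rx, robot_xy[1] - ry


if __name__ == "__main__":
    # Illustrative coefficients only (not from the thread).
    cx, cy = (0.02, 0.0, 10.0), (0.0, 0.02, -5.0)
    print(needle_target((100.0, 50.0), cx, cy, 100.0, 200.0))  # (88.0, 51.0)
```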

I hope this helps.

Bruce

Ammons Engineering

10-18-2018 01:41 AM

Hi Bruce,

You are absolutely right: I only need one physical reference point, and I need to take 3 pictures of it: @P, @P+(x,0), @P+(0,y).

That way I can calculate the relationship between the needle position and the camera position.
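One way to turn the three pictures into numbers, as a sketch (not Thomas's actual implementation): the dot's pixel displacements between shots, paired with the known robot moves (dx, 0) and (0, dy), fix the 2×2 part of Bruce's model (A, B, D, E). The constants C and F are not observable from differences alone; the second function assumes one extra measurement, the robot position when the needle touches the same dot. Function names are hypothetical.

```python
import numpy as np


def linear_part(p0, p1, p2, dx, dy):
    """2x2 matrix M = [[A, B], [D, E]] with robot_move = M @ pixel_move.

    p0, p1, p2: dot centre (pixels) with the robot at P, P+(dx,0), P+(0,dy).
    """
    p0, p1, p2 = (np.asarray(p, dtype=float) for p in (p0, p1, p2))
    pixel_moves = np.column_stack([p1 - p0, p2 - p0])  # one move per column
    robot_moves = np.array([[dx, 0.0], [0.0, dy]])      # matching robot moves
    # M @ pixel_moves = robot_moves  =>  M = robot_moves @ inv(pixel_moves)
    return robot_moves @ np.linalg.inv(pixel_moves)


def constant_part(M, p0, robot_at_p, robot_needle_on_dot):
    """(C, F), assuming the robot position with the needle on the dot is known."""
    offset = np.asarray(robot_at_p, dtype=float) - np.asarray(robot_needle_on_dot, dtype=float)
    return offset - M @ np.asarray(p0, dtype=float)
```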

Thanks for the help!

10-18-2018 05:26 AM

Just implemented the linear regression using "general linear fit" to solve for the 2 unknowns in X and the 2 unknowns in Y, and it works perfectly!

03-27-2019 06:36 PM - edited 03-27-2019 06:50 PM

Bruce,

I have a similar problem to Thomas's, but I have more questions about calibrating this setup. (I need to calibrate the camera so that I can measure lengths in mm from what it observes.) I am a bit confused about how to calibrate the camera and what affects the calibration afterwards.

The camera is mounted at the end of the robotic arm. The robotic arm is positioned at height Z above the plane that the camera views (its FOV).

Assuming that the wrist of the arm is not rotated and the rotation is always constant, how can I calibrate the camera using a calibration grid with dots?

In NI Vision Assistant I could use the camera model grid, but what happens if the arm changes its height Z above the plane and gets closer? Will this affect the calibration so that it is no longer accurate? Is rotation the only thing that could affect the calibration?

During calibration, should the grid be tilted at different angles relative to the camera to minimize the error?

In your answer to Thomas, you also mentioned that:

@BruceAmmons wrote:

The robot offset (Rx, Ry) of a point in the camera FOV can be written as a function of the pixel coordinates (Px, Py) of the point. Rx = A*Px + B*Py + C, and Ry = D*Px + E*Py + F. You can use linear regression to determine the coefficients for these functions from the data you have collected. You will do a separate regression for the X and Y offsets.

Do Rx and Ry correspond to the distance covered, or to the Cartesian coordinates of the robot needle position? If we have the robot's Cartesian coordinates for the new points, do we still need to move the robot to three points?

I have attached a graph to make my idea clearer.

Thanks in advance.

03-27-2019 09:49 PM

I think there are several examples of calibration using the dot grid, so I won't say much about that.

It sounds like you need to separate calibration (scaling) and location/rotation.

The problem is that the calibration changes when the distance changes. If the camera is always perpendicular to the FOV, and the height is known, and the camera has a low distortion lens, you should be able to calibrate at several heights and figure out the height to scale conversion. It should be fairly linear.
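The height-to-scale conversion can be sketched as a straight-line fit. Under a pinhole model with the camera perpendicular to the plane, mm per pixel is proportional to the distance from the lens's projection centre, so it is linear in Z, with the intercept absorbing the unknown offset between the Z reference and that centre. The input values would come from calibrating at several heights; the function names are hypothetical.

```python
import numpy as np


def fit_scale_vs_height(heights_mm, mm_per_pixel):
    """Straight-line fit mm_per_pixel = slope * Z + intercept."""
    slope, intercept = np.polyfit(heights_mm, mm_per_pixel, 1)
    return float(slope), float(intercept)


def scale_at_height(z_mm, slope, intercept):
    """Interpolated mm per pixel at height z_mm (stay within the calibrated range)."""
    return slope * z_mm + intercept
```

Large residuals from this fit would be a sign that lens distortion or tilt is not negligible after all.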

Rotation shouldn't affect the scaling if the FOV is perpendicular to the camera. It will just affect the XY orientation and the relationship between pixel and global coordinates.
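To illustrate why rotation about the optical axis changes orientation but not scale: a pixel displacement is mapped to global XY by a rotation followed by a uniform scale, and the rotation leaves the length unchanged. A sketch assuming square pixels, a camera perpendicular to the plane, and a known angle theta; the function name is hypothetical.

```python
import math


def pixel_to_global(dpx, dpy, mm_per_pixel, theta_rad):
    """Map a pixel displacement to a global XY displacement in mm.

    theta_rad: camera rotation about its optical axis relative to the global axes.
    """
    c, s = math.cos(theta_rad), math.sin(theta_rad)
    return (mm_per_pixel * (c * dpx - s * dpy),
            mm_per_pixel * (s * dpx + c * dpy))


if __name__ == "__main__":
    for deg in (0, 30, 90):
        gx, gy = pixel_to_global(300.0, 400.0, 0.05, math.radians(deg))
        # Direction changes with the angle; length stays 500 px * 0.05 mm/px = 25 mm.
        print(deg, round(gx, 3), round(gy, 3), round(math.hypot(gx, gy), 6))
```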

Bruce

Ammons Engineering

03-28-2019 03:21 AM

Bruce thanks for your answer.

If the height Z above the plane needs to change, would a telecentric lens solve the problem with only one calibration?

Thanks in advance

03-28-2019 07:22 AM - edited 03-28-2019 07:23 AM

Thomas,

If I understand correctly, the needle touches the dot only the first time, and we grab a picture. Then we record the pixel position of the dot in that image. Afterwards, we move the needle, for example in the positive x direction (+x,0), making sure that the dot is still in the FOV but without touching it, and grab a second picture? Then we again record the pixel position of the centre of the dot, and repeat this for the third movement?

To summarise: the centre of this dot should always be in the FOV, the dot does not move while the needle moves, and the needle moves to positions relative to that centre?

Thanks in advance