displaying a signal in a waveform chart in "slow-motion"

03-26-2018 03:09 PM

Hi,

In my application I acquire data at 500 kHz, reading 100K samples at a time. I display the signal on a waveform chart, but it scrolls across the screen too fast. I was wondering whether it is possible to set the scale of the waveform chart to something like 20 cm/sec, so that each screen shows 20 seconds of the acquired data. Ideally the user could even choose (in the main screen of this application) the number of seconds he would like to see on the screen.

Thanks in advance,

Naama 🙂

03-26-2018 03:36 PM

You would have to set the chart's history length so that it can hold 20 seconds' worth of data. At 500 kHz, that is 10M samples. Obviously you do not have 10M pixels on your screen, so your data will be decimated somehow. You could take control of this in your code: for example, average every 5,000 samples, which reduces 10M samples to 2k samples, fits nicely on your screen, and does not take up such a large amount of memory. By changing the number of samples averaged, you could control how many "seconds" worth of data are shown at a time; a graph may be better than a chart for this.
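The arithmetic in this reply can be sketched in a few lines of Python (LabVIEW itself is graphical, so this is only a text stand-in). The function name `plan_display` and the 2,000-point default are assumptions made for illustration; they are not part of LabVIEW or of the original post.

```python
def plan_display(sample_rate_hz, seconds_on_screen, max_points=2000):
    """Return (total_samples, block_size, displayed_points) for one screen.

    block_size is how many raw samples get averaged into one plotted point.
    """
    total_samples = round(sample_rate_hz * seconds_on_screen)
    # Ceiling division, so the reduced trace never exceeds max_points.
    block_size = max(1, -(-total_samples // max_points))
    displayed_points = total_samples // block_size
    return total_samples, block_size, displayed_points


# 20 seconds at 500 kHz, reduced to at most 2,000 plotted points.
print(plan_display(500_000, 20))  # (10000000, 5000, 2000)
```

Wiring `seconds_on_screen` to a front-panel control would give the user the choice asked for in the original question: a larger value simply yields a larger averaging block.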

03-27-2018 07:39 AM

Hi,

Thank you for your response.

This is not what I mean... I don't want to change my number of samples (or the fs). I want to show more data on one screen, for example 20 seconds (what I really want is to give the user an option in the GUI to choose how much time is shown on one screen).

I'm guessing that right now I see about one second on every screen (since it moves so fast), so I would like to show 20 seconds on one screen.

Thanks in advance,

Naama 🙂

03-27-2018 07:54 AM

I was acquiring behavioral data at 1 kHz and wanted to see 30 seconds of data scroll (slowly) across the screen. As the data were being streamed to disk, I sent a copy to a "plotting" routine that decimated the data by a factor of 50 (initially by replacing every 50 points with the average of those points, but later, at the request of the Experimenters who were interested in the "noise" component of the data, by keeping the first point and discarding the other 49). This lowered the display rate from 1 kHz to 1000/50 = 20 Hz, and meant that 30 seconds of data required a 600-pixel Chart, an easy fit for a PC Display.
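As a rough text illustration of that plotting routine, here is a minimal Python sketch. It assumes the data arrive in chunks and that the chart behaves like a fixed-length buffer that drops its oldest points; the class name `ChartDecimator` is hypothetical, and the second variant described above (keep the first point of every 50) is the one shown.

```python
from collections import deque


class ChartDecimator:
    """Keep every `factor`-th sample of a stream in a fixed-length history."""

    def __init__(self, factor, history_points):
        self.factor = factor
        # A deque with maxlen drops its oldest entries, like a chart history.
        self.history = deque(maxlen=history_points)
        self._count = 0  # samples seen so far, across all chunks

    def push(self, chunk):
        """Feed one chunk of raw samples; chunk size need not match factor."""
        for sample in chunk:
            if self._count % self.factor == 0:
                self.history.append(sample)  # first point of each group
            self._count += 1


# 1 kHz data decimated by 50 gives 20 points per second,
# so 600 history points cover 30 seconds.
chart = ChartDecimator(factor=50, history_points=600)
for start in range(0, 40_000, 1000):  # 40 s of data, delivered in 1 s chunks
    chart.push(range(start, start + 1000))
```

After 40 seconds of input the history holds only the most recent 30 seconds, which is the slow scrolling effect described in the post.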

Bob Schor

03-27-2018 08:31 AM

Hi Bob!!

So how can I do what you suggest?

I tried to read up a bit on this subject and tried to use a Property Node (see attached pic), but it shows me the same chart.

Thanks in advance,

Naama

03-27-2018 10:46 AM

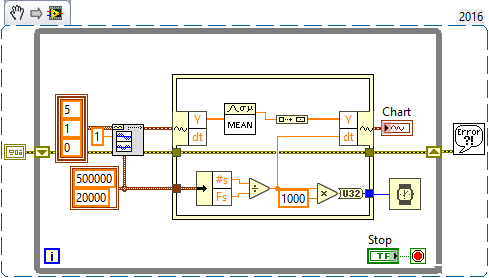

As I understand it, you are sampling a signal at 500 kHz, and are gathering 20K samples at a time. Thus you get data at 500 kHz/20K = 25 Hz, a reasonable rate to update a display. Here's a bit of code that generates a Noisy Sinusoid (using the Waveform Generating Functions) of 5 Hz, amplitude 1, with RMS Noise also with an amplitude of 1 (for an S/N ratio of 1, pretty low). Instead of plotting all the data, I plot just the Mean. I'm using an In-Place Element structure to unbundle and rebundle the Waveform, replacing the Y array with a 1-element Array of the Mean of the 20K points, and changing dt to reflect the down-sampling rate. I also slow down the While Loop so it runs at 25 Hz. You'll notice that what you get is a sampled Sinusoid with no evidence of the (averaged-out) Noise.
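For readers without LabVIEW at hand, the unbundle, replace and rebundle step can be approximated in Python. The `Waveform` dataclass below only mimics the t0/dt/Y fields of a LabVIEW waveform, and `collapse_to_mean` is a hypothetical name; this is a sketch of the idea, not a translation of the attached VI.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass
class Waveform:
    t0: float  # start time of the block, in seconds
    dt: float  # spacing between samples, in seconds
    y: list    # sample values


def collapse_to_mean(wf):
    """Replace a block with one point: its mean, spaced by the block length."""
    return Waveform(t0=wf.t0, dt=wf.dt * len(wf.y), y=[fmean(wf.y)])


# One 20K-sample block acquired at 500 kHz (constant data, for illustration).
block = Waveform(t0=0.0, dt=1 / 500_000, y=[1.0] * 20_000)
reduced = collapse_to_mean(block)
print(reduced.dt)  # about 0.04 s per point, i.e. 25 points per second
```

Appending one such point per loop iteration to a chart is what makes the display advance at 25 Hz instead of 500 kHz.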

I'm attaching it as a Snippet from LabVIEW 2016, and also as a VI saved in LabVIEW 2015 (which is what I think you are using).

Bob Schor

03-28-2018 08:44 AM

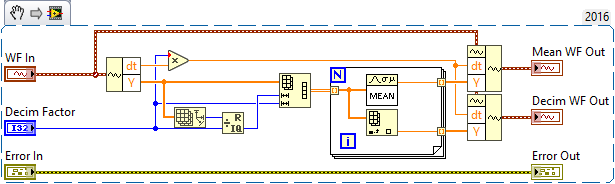

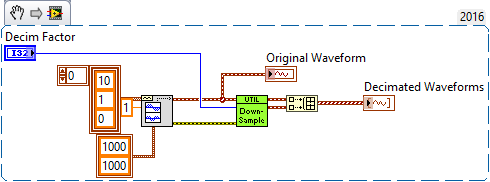

I'm attaching a second Down-Sampling Demo that I think does a more useful job of illustrating the point. This is the "Driver", which generates a 10 Hz Sinusoid with amplitude 1 + Gaussian Noise with Amplitude 1, 1000 points sampled at 1 kHz. It feeds this into a DownSample Utility with a Decimation Factor (e.g. 20 means "combine 20 points into a new point, either by taking the Mean of the 20 points or simply choosing the first one"), returning two Down-Sampled Waveforms, and plots everything.

Note that the Meaned Waveform has less "noise" (the Mean acts as a Low-Pass Filter), while the Decimated version "preserves the Noise".
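The two down-sampling modes can be compared in plain Python as well. The function names `downsample_mean` and `downsample_first` are assumptions made for this sketch, and trailing samples that do not fill a whole group are dropped, which may differ from what the attached VIs do.

```python
import math
import random


def downsample_mean(y, factor):
    """Replace each full group of `factor` points with the group's mean."""
    usable = len(y) - len(y) % factor  # ignore an incomplete last group
    return [sum(y[i:i + factor]) / factor for i in range(0, usable, factor)]


def downsample_first(y, factor):
    """Keep only the first point of each full group of `factor` points."""
    usable = len(y) - len(y) % factor
    return y[0:usable:factor]


# Driver: 10 Hz sinusoid, amplitude 1, plus Gaussian noise, 1000 points at 1 kHz.
random.seed(0)  # fixed seed so repeated runs give the same data
fs = 1000
signal = [math.sin(2 * math.pi * 10 * n / fs) + random.gauss(0, 1)
          for n in range(1000)]

meaned = downsample_mean(signal, 20)      # smoother: averaging reduces noise
decimated = downsample_first(signal, 20)  # keeps the raw noise level
print(len(meaned), len(decimated))        # 50 50
```

Plotting `meaned` and `decimated` side by side should reproduce the qualitative difference described above: both have 50 points, but only the averaged trace looks like a clean sinusoid.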

The process of the Down-Sample Utility is straightforward:

The attached VIs are in LabVIEW 2015 (though the Snippets are LabVIEW 2016).

Bob Schor