XML Timestamp Interpretation?

11-25-2006 04:00 PM

<Name>Time</Name>

<Cluster>

<Name></Name>

<NumElts>4</NumElts>

<I32>

<Name></Name>

<Val>0</Val>

</I32>

<I32>

<Name></Name>

<Val>180387840</Val>

</I32>

<I32>

<Name></Name>

<Val>-1047981475</Val>

</I32>

<I32>

<Name></Name>

<Val>0</Val>

</I32>

</Cluster>

11-26-2006 09:01 AM

You are correct that an LV timestamp is a DBL of the number of seconds since t0, but whoever wrote the XML primitives seems to have made a confusing choice.

If you open the LV Data representation white paper (should be available in Help>>Search the LabVIEW Bookshelf), you will see that the actual format of a timestamp is indeed four 32-bit integers, with two of those integers as the seconds and the other two as the fractional part.

What it doesn't say is that those numbers should apparently be U32 (I think that only in LV 8 they started allowing negative times).

If you typecast these 4 I32s to U32 then you should have the third and fourth element as your number of seconds since 1904 (or just the third for now, since I don't think the fourth will be relevant for 30 years or so). The XML primitive saves it as an I32 which does make it confusing.
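For anyone following along outside LabVIEW, here is a minimal Python sketch of that reinterpretation, using the third value from the XML in the first post. The names `i32_to_u32` and `LV_EPOCH` are made up for illustration, and the sketch assumes the 1904 epoch is counted in UTC.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the LabVIEW epoch (1904-01-01 00:00:00) is taken as UTC.
LV_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)

def i32_to_u32(value: int) -> int:
    """Reinterpret a signed 32-bit value as unsigned (same bit pattern)."""
    return value & 0xFFFFFFFF

seconds = i32_to_u32(-1047981475)  # third <Val> in the XML above
stamp = LV_EPOCH + timedelta(seconds=seconds)

print(seconds)  # 3246985821
print(stamp)    # 2006-11-21 20:30:21+00:00
```

The result lands a few days before the original post, which suggests the unsigned reading is the right one.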

___________________

Try to take over the world!

11-26-2006 06:39 PM

I have figured "most" of it out, at least for practical purposes. I'll leave the actual consideration of the numerical conversions up to those who are more familiar with working in bytes for calculations. The XML snippet above was generated by the LV7.0 Express built-in "Flatten to XML" VI. I have inferred the following about the above, and for ease of notation, we will consider each of the above integer values as W, X, Y, and Z, in that order from top to bottom.

The Flatten to XML has taken what are two 64-bit integers and artificially cut them into four 32-bit integers. W and X are derived from the fractional component of the number of seconds from 1/1/1904. Y and Z are derived from the integer number of seconds from 1/1/1904. Z is never accessed under normal circumstances since the timestamp constant in this version of LabVIEW maxes out in 2038, and is thus always 0.

If each integer is converted to its 32 bit representation, we can then denote the 32 digit bit representation as Wb and Xb, etc... It is important to recognise that the sign of the Integer representation will become a 1 or 0 in the bit representation. The representations are then concatenated as follows (order of operation is important):

Xb & Wb = Ab , The Fractional component of seconds in 64 bit representation (not directly equal to a fractional component though, we still need to do some more)

Zb & Yb = Bb, The Integer component of seconds (as above for Ab).

We then must take both components and independently convert each to base 10 integers, represented by Ad and Bd

Ab ---> Ad

Bb --->Bd

Dealing with the integer seconds component (now as a base 10 integer), the number of whole seconds since 1/1/1904 00:00:00 is given by

Bd - 28800 = #of integer seconds from base time.

I don't know where that offset comes from, but it represents about 8 hours before allowed times, even though LV timestamps don't handle that.

The fractional component (as a base 10 decimal) is now given approximately (at least 10 digits of precision) by

Ad * 5.421010862*10^-20 = Fractional Seconds

I expect that the conversion factor has something to do with the base unit of a byte, but I sure can't figure it out. Anyway, this is how it works.
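The steps above can be sketched in Python roughly as follows. This is only an illustration of the procedure as described in this post: the function name `decode` and the constant names are hypothetical, and the 28800 offset and the scale factor are copied from the post rather than derived.

```python
MASK32 = 0xFFFFFFFF

# Values quoted in the post (not derived here).
POSTED_FACTOR = 5.421010862e-20  # scale applied to the 64-bit fraction
POSTED_OFFSET_S = 28800          # offset the poster observed, in seconds

def decode(w: int, x: int, y: int, z: int):
    """Follow the post: Xb & Wb -> Ad, Zb & Yb -> Bd, then scale and offset."""
    ad = ((x & MASK32) << 32) | (w & MASK32)  # fractional part, 64 bits
    bd = ((z & MASK32) << 32) | (y & MASK32)  # whole seconds, 64 bits
    whole_seconds = bd - POSTED_OFFSET_S
    fractional_seconds = ad * POSTED_FACTOR
    return whole_seconds, fractional_seconds

whole, frac = decode(0, 180387840, -1047981475, 0)
print(whole)  # 3246957021
print(frac)   # roughly 0.042
```

Masking with `0xFFFFFFFF` is what turns the sign of each I32 into the top bit of its 32-bit pattern before the words are concatenated.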

They've probably fixed/made sense of this in newer versions of LV, but I ain't got that so tough luck for me.

Cheers,

D

11-27-2006 02:33 AM - edited 11-27-2006 02:33 AM

I think the eight hours come from your local timezone! Check also the DST settings!

And your conversion factor is just 2^(-64) or the amount of time for 1 bit, so for better accuracy you might use this number!
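A quick check of both points in Python, as a sketch. The constant names are hypothetical; the two numbers being checked are the ones quoted earlier in the thread.

```python
import math

POSTED_FACTOR = 5.421010862e-20  # factor quoted earlier in the thread
EXACT_FACTOR = 2.0 ** -64        # value of one bit of the 64-bit fraction

# The posted factor agrees with 2^-64 to about ten significant digits.
factors_agree = math.isclose(POSTED_FACTOR, EXACT_FACTOR, rel_tol=1e-9)
print(factors_agree)  # True

# The 28800 second offset is exactly eight hours, as a UTC-8 zone would give.
offset_hours = 28800 / 3600
print(offset_hours)  # 8.0
```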

Ton

Message Edited by TonP on 11-27-2006 09:34 AM

11-27-2006 09:18 AM - edited 11-27-2006 09:18 AM

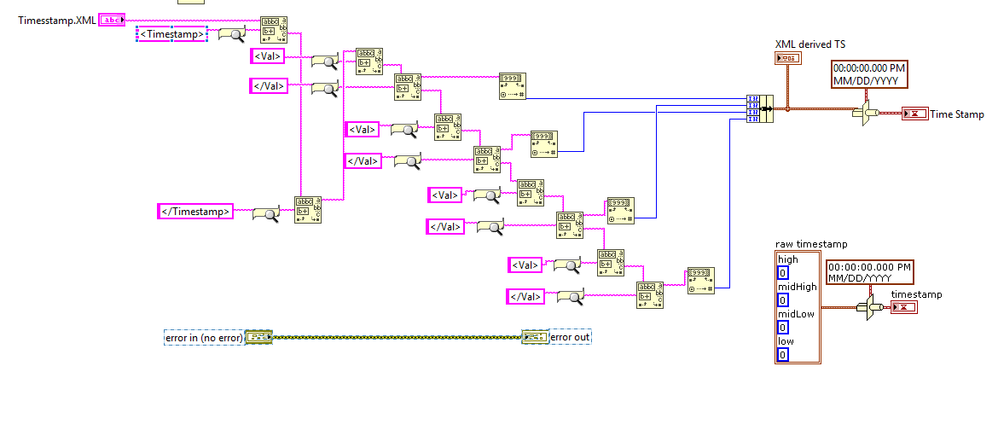

The easiest way to convert the four 32 bit values to a timestamp is to cluster them in high to low order, then do a type cast to the timestamp (see graphic). Make sure you use the same format as the numbers were saved (I32 in this case).
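Outside LabVIEW, the same idea can be imitated with Python's `struct` module. This is a sketch under two assumptions that are not stated in the post: that the type cast treats the data as big-endian, and that the timestamp is laid out as a signed 64-bit seconds count followed by an unsigned 64-bit fraction. The function name `cast_to_timestamp` is hypothetical.

```python
import struct

def cast_to_timestamp(high_to_low):
    """Pack four I32 values (highest word first) and reread them as i64 + u64."""
    raw = struct.pack(">4i", *high_to_low)  # 16 bytes, big-endian
    return struct.unpack(">qQ", raw)        # (seconds, raw 64-bit fraction)

# XML order in the first post is low to high: W, X, Y, Z.
w, x, y, z = 0, 180387840, -1047981475, 0
seconds, fraction_raw = cast_to_timestamp((z, y, x, w))

print(seconds)               # 3246985821
print(fraction_raw / 2**64)  # roughly 0.042
```

Packing the values as signed and rereading the bytes avoids any manual sign handling, which is the point of keeping the I32 format the numbers were saved in.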

Note that converting to a double will lose over half your precision (128 bits down to 52). This is why the timestamp datatype was invented in the first place. If you don't need better than about millisecond resolution, you are probably OK.
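To put a rough number on that loss, here is a small Python sketch using the seconds count from this thread. It assumes Python 3.9 or later for `math.ulp`; the variable names are illustrative only.

```python
import math
from fractions import Fraction

seconds = 3246985821  # whole seconds since 1904 from the XML in this thread

# Gap between neighbouring doubles near this value, in seconds.
spacing = math.ulp(float(seconds))
print(spacing)  # 4.76837158203125e-07

# The smallest tick of the 64-bit fraction disappears in a double.
exact = Fraction(seconds) + Fraction(1, 2**64)
as_double = float(exact)
print(as_double == float(seconds))  # True
```

For dates in this range a double resolves a bit under a microsecond, which is well inside the millisecond figure mentioned above.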

Message Edited by DFGray on 11-27-2006 09:20 AM

04-30-2019 09:29 PM

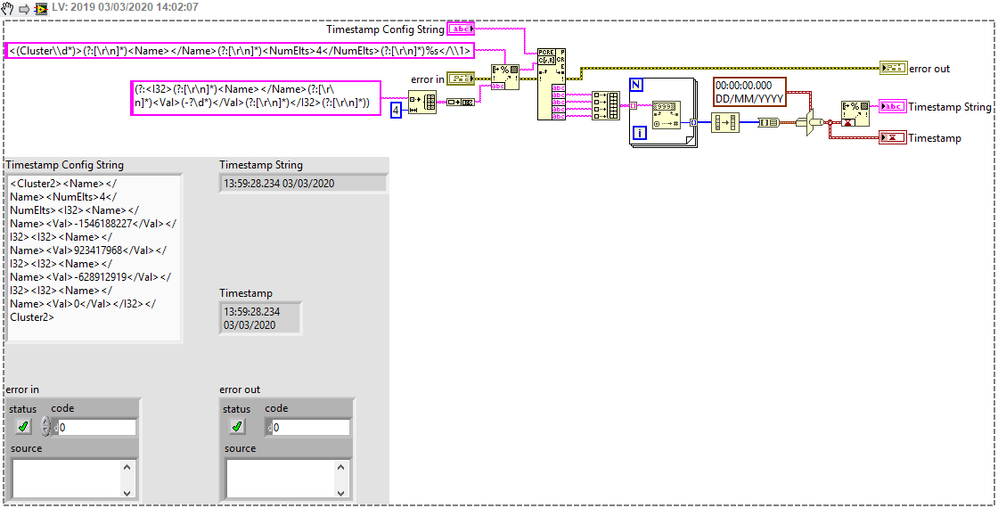

Here is a VI that extracts the 'Timestamp' section of XML and outputs a LabVIEW timestamp...

LabVIEW 2018... if you have an earlier version of LabVIEW, here is a screenshot of the code diagram...

03-02-2020 11:05 PM - edited 03-02-2020 11:07 PM

Here's my RegEx based solution (in a similar spirit to Jack Hamilton's, above).

I'm using a match for "Cluster\d" because I'm using this with a library I'm writing in which I number cluster pairs to allow matching starts and ends of nested clusters with greedy regexps.

Line end constants have already been removed when I reach this VI, so I've placed optional non-submatching groups for the line ends produced by Flatten to XML (this is the (?:[\r\n]*) that repeatedly appears).

I added a * (rather than +) to allow it to work with the standard "<Cluster>...</Cluster>" tags (the backref for \1 is why I needed to make the first pattern match, and use subgroups 2-5 for the timestamp).

Of course, the Format to String at the end can be modified to allow whatever output time-string you like.

The attached VI is backsaved to 2015 (although I don't know if the PCRE library version changes between LabVIEW versions, and this wasn't tested in 2015).
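For readers without LabVIEW, a pattern in the same spirit can be sketched with Python's `re` module. This is not the pattern from the attached VI: `ITEM`, `PATTERN`, and `extract_words` are hypothetical names, and the sketch assumes only CR/LF characters appear between tags, as described above.

```python
import re

EOL = r"(?:[\r\n]*)"  # optional line ends, non-capturing

# One <I32> element; the only capture group is the <Val> content.
ITEM = (r"<I32>" + EOL + r"<Name>[^<]*</Name>" + EOL
        + r"<Val>(-?\d+)</Val>" + EOL + r"</I32>" + EOL)

# Group 1 is the optional cluster number; \1 forces the closing tag to match it.
PATTERN = re.compile(
    r"<Cluster(\d*)>" + EOL + r"<Name>[^<]*</Name>" + EOL
    + r"<NumElts>4</NumElts>" + EOL + ITEM * 4 + r"</Cluster\1>"
)

def extract_words(xml: str):
    """Return the four I32 values (groups 2-5) in file order, or None."""
    match = PATTERN.search(xml)
    if match is None:
        return None
    return [int(text) for text in match.groups()[1:]]

def sample(tag: str) -> str:
    """Build test XML shaped like the snippet in the first post."""
    vals = (0, 180387840, -1047981475, 0)
    body = "".join(
        f"<I32>\r\n<Name></Name>\r\n<Val>{v}</Val>\r\n</I32>\r\n" for v in vals
    )
    return (f"<{tag}>\r\n<Name></Name>\r\n<NumElts>4</NumElts>\r\n"
            f"{body}</{tag}>")

print(extract_words(sample("Cluster")))   # [0, 180387840, -1047981475, 0]
print(extract_words(sample("Cluster3")))  # [0, 180387840, -1047981475, 0]
```

Because group 1 can match an empty string, the same pattern handles both the plain `<Cluster>` tags and the numbered variant.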