Weird behaviour with malleable and standard VIs in parallel nested for loop

Solved! 10-16-2019 04:17 AM - edited 10-16-2019 04:26 AM

Hello everybody,

I have a problem with the uploaded VI. It converts numbers to strings with a fixed number of significant figures. In the real project, it reads 720 arrays of 86 elements each, which is why the benchmark is set up this way.

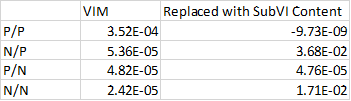
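For readers without LabVIEW at hand, the kind of conversion described above can be sketched in text form. The Python function below is a hypothetical stand-in, not the contents of the uploaded VI: the name `format_sig`, the default of 4 figures, and the fixed-point (non-scientific) output are all assumptions made for illustration.

```python
from math import floor, log10


def format_sig(value: float, sig: int = 4) -> str:
    """Format value with exactly `sig` significant figures (fixed-point notation).

    Hypothetical helper for illustration; not the logic of the original VI.
    """
    if value == 0:
        # Zero has no leading digit, so just pad with sig - 1 decimals.
        return f"{0.0:.{sig - 1}f}"
    # Position of the most significant digit (0 for 1..9, 1 for 10..99, ...).
    exponent = floor(log10(abs(value)))
    rounded = round(value, sig - 1 - exponent)
    # Rounding can carry into the next decade (9.9996 -> 10.0), so recompute.
    exponent = floor(log10(abs(rounded)))
    decimals = max(sig - 1 - exponent, 0)
    return f"{rounded:.{decimals}f}"


if __name__ == "__main__":
    # 86 elements, matching the size of one array in the post.
    row = [0.5 + 1.25 * i for i in range(86)]
    print([format_sig(x, 4) for x in row][:5])
```

The work per element is tiny, which matters for the parallelization question: there is very little computation to spread across cores.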

The problem is that I want to run it in parallel to make it faster, but when I try, I get very weird results. There are two for loops (excluding the benchmarking one [10k], which is only there for averaging): the inner one [86], which is inside the VIM, and the outer one [720]. I have tried parallelizing one of them, the other, both, and neither. I then repeated the process after converting the VIM into a standard VI, and again after replacing the subVI with its contents. Here are the results in seconds (outer/inner; P for a parallelized loop, N for a standard one):

The VIM seems to run slower with every kind of parallelization, whereas with the replaced contents the version with no parallelization at all runs slowly. Paradoxically, N/P runs even slower, but P/N is fast, and P/P also ran really fast but returned a negative timing?!

Does this make sense to you? Download the VI and play with it a bit if you want, and check whether you get the same results. I can't understand why N/N is the fastest variant in the VIM version. I also don't understand the P/P and N/P rows of the replaced-contents column.

Best regards and thanks for your time,

EMCCi

Edit: The parallelization configuration was always set to 12, the maximum number of cores on my CPU (6 with hyperthreading).

10-16-2019 07:45 AM

A couple of issues I am seeing:

1. Make sure debugging is turned off on your VI.

2. The inner loop in your VI is always going to return the same value (its input is not auto-indexed), so it may be eliminated as dead code.

When I parallelized the inner loop, it took twice as long (60 µs parallelized vs 30 µs normal). What we are seeing here is the overhead of parallelizing loops (thread generation and combining the results). So it looks like your algorithm is simple enough that you are not going to benefit from parallelization.
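The overhead argument can be illustrated outside LabVIEW with a rough sketch. The Python code below is only an analogy under stated assumptions: `tiny_task`, `run_sequential`, `run_parallel`, and `time_call` are hypothetical names, and Python threads behave differently from LabVIEW's parallel for loops, so the absolute numbers mean nothing. It only shows that when each iteration does very little work, the cost of dispatching and collecting tasks can outweigh the work itself.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def tiny_task(x: float) -> str:
    """Stand-in for a very cheap per-element conversion (hypothetical)."""
    return f"{x:.3g}"


def run_sequential(values):
    # Plain loop: no scheduling cost at all.
    return [tiny_task(v) for v in values]


def run_parallel(values, workers=4):
    # One task per element: submitting tasks and gathering results
    # has a fixed cost that does not shrink with the task size.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(tiny_task, values))


def time_call(func, *args, repeats=5):
    """Return the best wall-clock time in seconds over `repeats` runs."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        best = min(best, time.perf_counter() - start)
    return best


if __name__ == "__main__":
    values = [i * 0.37 for i in range(86)]  # 86 elements, as in the inner loop
    print("sequential:", time_call(run_sequential, values))
    print("parallel:  ", time_call(run_parallel, values))
```

Both variants return the same list; only the time spent getting there differs.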

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

10-16-2019 10:53 AM

You are 100% right. My timing measurements were wrong because dead code was eliminated at compile time. I wasn't familiar with this feature and would never have guessed it, so thank you so much.
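A benchmark is less exposed to dead code elimination when every iteration receives a distinct input and every result feeds into something that is read after the timed region. The Python sketch below shows only that structure; `convert` and `benchmark` are hypothetical names, and CPython itself does not remove such calls, so this is an illustration of the layout rather than a reproduction of the LabVIEW compiler's behavior.

```python
import time


def convert(x: float) -> str:
    """Stand-in for the number-to-string conversion (hypothetical)."""
    return f"{x:.4g}"


def benchmark(data, repeats=10):
    """Return (mean seconds per pass over data, checksum of the results)."""
    checksum = 0
    start = time.perf_counter()
    for _ in range(repeats):
        for row in data:
            for x in row:
                # Each element is a different input, and the result is
                # consumed, so the work cannot be treated as unused.
                checksum += len(convert(x))
    elapsed = time.perf_counter() - start
    return elapsed / repeats, checksum


if __name__ == "__main__":
    # 720 arrays of 86 elements, as in the post.
    data = [[r + c * 0.01 for c in range(86)] for r in range(720)]
    mean_seconds, checksum = benchmark(data)
    print(f"{mean_seconds * 1e3:.2f} ms per pass, checksum {checksum}")
```

Reporting the checksum next to the timing makes it easy to confirm that the conversions really ran.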

For the actual setup (720x86), I'm getting timings of approx. 50/60 ms. How could I reduce that?

10-16-2019 11:20 AM

@EMCCi wrote:

You are 100% right. My timing measurements were wrong because dead code was eliminated at compile time. I wasn't familiar with this feature and would never have guessed it, so thank you so much.

Altenbach had a presentation a few years ago that covers this along with several other things to consider with performance measuring.

Unofficial Forum Rules and Guidelines

Get going with G! - LabVIEW Wiki.

17 Part Blog on Automotive CAN bus. - Hooovahh - LabVIEW Overlord

10-16-2019 11:50 AM - edited 10-16-2019 11:51 AM

@EMCCi wrote:

For the actual setup (720x86), I'm getting timings of approx. 50/60 ms. How could I reduce that?

With this code, I am getting closer to 22 ms. A faster computer? Optimizations made in LabVIEW 2019? Debugging seems to matter very little.
