Timestamp to number - precision

05-22-2019 08:10 AM - last edited on 10-21-2025 10:42 AM by Content Cleaner

Hi,

Could you explain to me the precision of a timestamp and of the related conversion to a number?

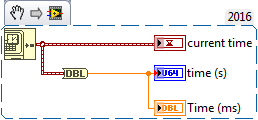

Here is my test VI:

LabVIEW Help states "Use the To Double Precision Float function to convert the timestamp value to a lower precision, floating-point number."

https://www.ni.com/docs/en-US/bundle/labview-api-ref/page/functions/get-date-time-in-seconds.html

Here is a tutorial explaining that a timestamp is actually a "128-bit fixed-point number with a 64-bit radix".

https://www.ni.com/en/support/documentation/supplemental/08/labview-timestamp-overview.html
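To make the "128-bit fixed-point number with a 64-bit radix" description concrete, here is a minimal Python sketch. It assumes the layout is a signed 64-bit count of whole seconds plus an unsigned 64-bit fraction of a second; the function names and the example value are hypothetical, not part of any LabVIEW API.

```python
from fractions import Fraction

# Assumed layout: whole seconds since the epoch (signed 64-bit) plus a
# fraction of a second expressed in units of 2**-64 s (unsigned 64-bit).
FRACTION_BITS = 64

def timestamp_to_fraction(seconds: int, fraction: int) -> Fraction:
    """Exact value of a (seconds, fraction) pair, in seconds."""
    return seconds + Fraction(fraction, 2 ** FRACTION_BITS)

def timestamp_to_double(seconds: int, fraction: int) -> float:
    """Lossy conversion, analogous to converting the timestamp to a DBL."""
    return float(timestamp_to_fraction(seconds, fraction))

# Hypothetical example: an instant in 2019 (seconds since 1904) plus half a second.
seconds = 3_641_357_400
fraction = 2 ** 63  # exactly 0.5 s

print(timestamp_to_double(seconds, fraction))
# Smallest step the fixed-point format itself can represent, in seconds.
print(float(Fraction(1, 2 ** FRACTION_BITS)))
```

The format's own step size (2**-64 s) says nothing about how often the operating system actually updates the clock; it only bounds what the datatype can store.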

Looking at the converted number, the double appears to give nanosecond precision.

The U64 shows the time in seconds (as expected).

I'm on a regular PC, so I was expecting a precision down to maybe 1 ms, not 1 ns. Where are those nanoseconds coming from? How do I know the precision of a timestamp and of the converted double on a given system?

Regards

Christoph

05-22-2019 11:56 AM

There's a distinction to be made here between the accuracy of your timestamp and the precision. LabVIEW provides a certain precision for your converted timestamp, but whether or not your computer is capable of generating a timestamp that accurate is another thing, entirely; you'll have to use your own judgement here. Just because LabVIEW can convert a timestamp into seconds at that precision doesn't mean it's that accurate. But maybe, given the hardware/software combination, it can be that accurate, too.

(Mid-Level minion.)

My support system ensures that I don't look totally incompetent.

Proud to say that I've progressed beyond knowing just enough to be dangerous. I now know enough to know that I have no clue about anything at all.

Humble author of the CLAD Nugget.

05-24-2019 08:57 AM

I believe the precision comes (indirectly) from the crystal clock that drives the processor chip, which I seem to remember (from the last time I looked at my PC's specs) is in the GHz range, so a tick is about a nanosecond. The accuracy depends on how you set the System Clock and whether or not it synchronizes with a good Time Standard.

Bob Schor

05-24-2019 10:58 AM

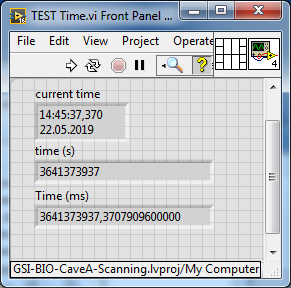

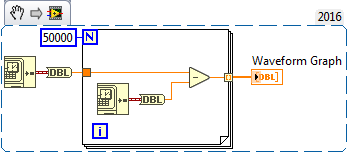

Do an experiment: use a FOR loop to collect a huge number of timestamps in a row and convert them to DBL. Say, 10 million.

Now look at the values. On my PC at least, you don't see a smooth increase in values. There's a ton of identical values, followed by a sudden jump of around 1 ms, followed by another ton of identical values.
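The same experiment can be sketched outside LabVIEW. The Python version below is only an analogy: it reads the operating system clock through `time.time()` rather than through Get Date/Time In Seconds, and the helper names are made up for illustration. The step sizes it prints depend entirely on the OS and hardware.

```python
import time

def nonzero_steps(samples):
    """Return the differences between consecutive samples that are not zero."""
    return [b - a for a, b in zip(samples, samples[1:]) if b != a]

def collect(n):
    """Read the system clock n times in a tight loop."""
    return [time.time() for _ in range(n)]

if __name__ == "__main__":
    samples = collect(1_000_000)
    steps = nonzero_steps(samples)
    # Readings that did not change from the previous one.
    print(f"{len(samples) - 1 - len(steps)} repeated readings")
    if steps:
        # A step can be negative if the system clock is adjusted mid-run.
        print(f"smallest step: {min(steps):.9f} s, largest: {max(steps):.9f} s")
```

Many repeated readings separated by comparatively large steps would indicate a coarse update interval, which is the pattern described above.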

I think you'll find that it is on a 1 ms clock. The extra digits after the decimal point are just the closest value the DBL can give: it needs 32 bits just to keep track of the value before the decimal point, so it has fewer bits left than it normally would to keep the binary fraction close to a good decimal approximation.
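The bit-budget argument can be checked numerically. This small Python sketch (the timestamp value is a hypothetical one for 2019, expressed in seconds since 1904) asks for the spacing between adjacent doubles at that magnitude:

```python
import math

# Hypothetical value: seconds since 1904-01-01 for a date in 2019.
t = 3_641_357_400.0

# Spacing between t and the next larger double (requires Python 3.9+).
step = math.ulp(t)

print(step)                # spacing in seconds
print(step == 2.0 ** -21)  # compare with 2**-21 s, roughly 477 ns
```

Because the value lies between 2**31 and 2**32, the 52 fraction bits of a double leave a spacing of 2**-21 s, about 0.48 µs. Digits displayed below that level come from the binary-to-decimal conversion, not from the clock.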

05-24-2019 03:43 PM

Oh, boy! Kyle is saying what I often say in these Forums, "Do the Experiment!", which, I'm ashamed to admit, I didn't do! When I did, I found that Kyle is correct in saying that calling the Get Date and Time in Seconds function repeatedly gives values that appear to differ from each other in "approximate milliseconds" (since it's a floating point number, the subtraction might not be "exact").

This seems to indicate that (at some level) LabVIEW takes the TimeStamp from the Millisecond Clock. But ... Do a second Experiment. Recall that the Time Stamp is the number of seconds since the Epoch (Midnight, 01 Jan 1904 UTC). If this is true, then I would assume that if one looked at the fractional part of the TimeStamp (by, say, converting it to a Dbl), one should get something like a "fraction of a second in more-or-less milliseconds". However, if you do this several times (once!, which may be "part of the problem" or "part of the solution"), you do not get round milliseconds, suggesting that (when making a single measurement) the clock being used is not "ticking" at 1 kHz.

I once wrote a set of functions to pull the TimeStamp apart. It is a really strange quantity, and I don't remember my conclusions from that Experiment except to say that when used for single measurements, it does seem to be based on a rapidly-ticking clock.

Bob Schor

05-26-2019 11:20 AM

The resolution on Windows is actually exactly 100 ns. But note that resolution does not mean accuracy. The system time clock is NOT updated exactly every 100 ns by incrementing the counter value, nor is the increment always 1. It is quite normal for the counter value to stay the same for many µs and then suddenly jump ahead by the number of 100 ns intervals that have elapsed.

And since Windows is not a real-time system, there is absolutely no guarantee that the interrupt that makes the system time tick gets called every 1 µs, or even every ms. It usually gets to run at sub-ms intervals, but nothing guarantees that it gets executed sooner than even 1 s after the previous execution.

05-27-2019 01:17 AM

Thank you all for the information.

Initially I did not really think about precision vs accuracy.

I guess the “precision” is just the result of the datatype (timestamp or double) and it is reasonable to round this value to milliseconds.

@Bob_Schor wrote:

This seems to indicate that (at some level) LabVIEW takes the TimeStamp from the Millisecond Clock. But ... Do a second Experiment. Recall that the Time Stamp is the number of seconds since the Epoch (Midnight, 01 Jan 1904 UTC). If this is true, then I would assume that if one looked at the fractional part of the TimeStamp (by, say, converting it to a Dbl), one should get something like a "fraction of a second in more-or-less milliseconds". However, if you do this several times (once!, which may be "part of the problem" or "part of the solution"), you do not get round milliseconds, suggesting that (when making a single measurement) the clock being used is not "ticking" at 1 kHz.

Thanks. But instead of guessing I'd rather know; this should be written down somewhere. What about real-time systems? At some point you might want to know the actual precision of the system clock.

I'm aware that, on a non-real-time Windows system, timing is not deterministic. But still, time itself has to have a known precision. That seems to be 1 millisecond.

Here are also two pictures visualizing the effect (as @Kyle97330 suggested).

The subtraction should have been the other way around, but you get the message...

Thanks Christoph

05-27-2019 01:42 AM

@ChristophHS wrote:

Thanks. But instead of guessing I'd rather know, this should be written somewhere. What about Realtime Systems? At some Point you might want to know the actual precision of the System clock.

The reason this is not documented in the LabVIEW documentation is that it is not implemented by LabVIEW. LabVIEW uses whatever system service exists to read the time. It does go to some length to use a high-resolution timebase, but it has no control over how this is implemented at the OS level. And the actual resolution of the timer increment can and will change depending on a number of factors.

Traditionally Windows had a resolution of 16 ms, but LabVIEW enabled a high-resolution mode by calling the multimedia API, which changed that from 16 ms to 1 ms. This multimedia API call could fail on some Windows systems, depending on the available hardware resources, in which case the timer kept the standard resolution of 16 ms. Windows NT changed the default resolution to 10 ms, and the multimedia API was not available there, so it stayed at 10 ms. There is, however, always the possibility of installing some third-party service that improves the resolution in a more or less transparent way, similar to the multimedia timer service, at the cost of hardware performance. The latest Windows versions seem to use a default timer resolution of 1 ms but could go lower.

On other systems like Mac or especially Linux things are even more complicated as there do exist many ways to change the timer resolution on those systems in true system programmer mentality.
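Some runtimes outside LabVIEW expose what the operating system reports about its clocks. Purely as an illustration, and not as a statement about what LabVIEW uses internally, Python's standard library offers `time.get_clock_info`, which returns the underlying implementation and the resolution the platform claims:

```python
import time

# Query what the runtime reports for a few standard clocks.
for name in ("time", "monotonic", "perf_counter"):
    info = time.get_clock_info(name)
    print(f"{name:13s} implementation={info.implementation} "
          f"resolution={info.resolution} s adjustable={info.adjustable}")
```

The reported resolution is a nominal figure supplied by the platform, so it is best treated as a hint and cross-checked by measuring the actual step sizes on the target machine.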

How would you document these "facts" in the LabVIEW documentation? Whatever you write down, there is a very real chance that it will not apply to your situation, given a unique combination of available hardware and installed software.

05-27-2019 01:52 AM

@rolfk wrote:

How would you document these "facts" in the LabVIEW documentation? Whatever you write down, there is a very real chance that it will not apply to your situation, given a unique combination of available hardware and installed software.

Maybe a tutorial or application note?

Even assuming that 1 ms is the accuracy of a timestamp is not correct, as the help for the "Get Date/Time In Seconds Function" also states:

"Timer resolution is system dependent and might be less accurate than one millisecond, depending on your platform. When you perform timing, use the Tick Count (ms) function to improve resolution." But it does not explain any details.

Overall, this topic leaves room for confusion and errors 🙂

05-27-2019 12:00 PM

I'm just going to say that if you need to take such a deep dive into a subject, you should do the research that enables you to understand the subject matter. In this case, if you're delving into the sub-millisecond world of timestamps, and timestamps in general, you should prepare yourself by reading up on the related material and asking questions.

Which is what your original post was all about, of course.

But you have to accept that there is a limit on what information LabVIEW help provides, and after that, you have to find things out by yourself. I think the cutoff point is quite reasonable, myself.
