Task/Channel Reconfiguration in State Machine

09-14-2019 02:37 PM - edited 09-14-2019 02:37 PM

Say I have a cDAQ chassis with one analog output card and one digital output card, and I want to use these cards in a LabVIEW state machine where different states do different things. For example, one state might let the user issue manual analog and digital output commands on a subset of channels, while another generates waveforms that are output to all channels on both cards. The only way I've been able to do this is to place the create task, configure task, timing, write, start, stop, and clear functions inside each specific state, so each state fully creates, executes, and clears the task before going back to the "waiting/idle" state. If I instead create a task containing all the channels outside the state machine and then just start/stop it inside the states, I get an error about resources being reserved. Is there a way to create global tasks outside the primary state machine that contain all the channels I'm interested in, and then "reconfigure" the task (perhaps this isn't the correct terminology) in each state, so I can just configure, start, write, and stop without also having to create, set timing, and clear the task as I'm doing now?

09-14-2019 03:49 PM

This is your third post in three days, where a number of us have given you extensive suggestions (including "Use a State Machine") without any feedback from you (such as a Kudo, a Solution, or even "Thanks for the suggestion, what do you think of this State Machine?").

Now you've apparently "heard" our message, and want more help, again without providing specifics (such as attaching your VI). I'll wait for more information from you.

Bob Schor

09-15-2019 03:16 AM - last edited on 05-14-2025 03:08 PM by Content Cleaner

Firstly, everything Bob_Schor said ^^.

Beyond that (and as he highlights, more information will allow us to give you better information) you need to look at what's being reserved and how you're trying to reuse it.

You can use the DAQmx Control Task VI to adjust the state of various tasks, but if you're running into conflicts, it's probably easier and more productive to fix the initial setup than be constantly rearranging things via the Control Task VI.

Please upload a VI that demonstrates the problem you're describing (reserved resources being unavailable) in the simplest form that is still useful to you. If you don't want to share your entire project/setup, that's fine, but make sure the example represents your system well enough that you can translate our answers back to your actual system.

09-15-2019 08:59 PM

Makes complete sense. The VI I'm working on is attached. This has evolved significantly over the past week and I definitely feel like I'm getting closer (in part due to the help I've been receiving here - thank you again!).

The attached isn't quite finished yet but I'd love any feedback folks may have on the approach (or any super obvious rookie mistakes I'm still making).

A few high-level questions:

- What would be the best way to display analog input data while this VI is running? I was thinking of a producer-consumer queue setup, but where would I put the producer code so that it's always running? (I have 6-8 channels of data that I'd like to always display in a plot or two.) The only note here is that the sample rate for these inputs is somewhere between 1 kHz and 10 kHz, and I certainly don't need to display the data that fast.

- I'm not getting the behavior that I really want in the "standard waveform" section. What I'm looking for is:

  - When the state machine is idle, the user can specify "standard" waveform inputs and send these to the analog output channels (e.g. a sine wave with an amplitude of 5 at a frequency of 10 Hz).

  - I'd like the user to be able to specify whether they want to run this profile once, for some period of time (repeating if necessary for, say, 20 seconds), or indefinitely until they click some sort of stop button (perhaps the stop button appears if they opt to run indefinitely?).

  - For the indefinite case, I'd like the user to be able to update the waveform inputs on the fly: they can start running the standard waveform and change the frequency, phase, amplitude, etc. of either channel until they click "stop".

- How would I go about adding data logging so that the user has the option to log data when they execute the user input profile (after they upload the spreadsheet and hit "run user profile") OR when they decide to start collecting data while running standard waveforms in the "indefinite" mode described in the bullets above?

Any thoughts/help would be very much appreciated - thanks again guys!

09-15-2019 11:54 PM - last edited on 05-14-2025 03:09 PM by Content Cleaner

Looks like you're making nice progress!

Regarding your questions:

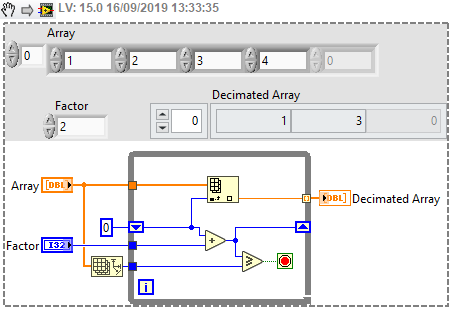

1. Producer/Consumer sounds good for this situation. Take care to correctly handle the ends of your loops (Bob_Schor might advocate Channel Wires rather than Queues for this purpose!) Ensure that you're sending blocks of data (not 1 point at a time) and when you display on a graph, you can choose to decimate the array of points if the numbers are far too large. Unfortunately "Decimate 1D Array" doesn't really do this in the way that you'd want, so consider a subVI with something like this:

Depending on your decimation factor, Quotient and Remainder, Index Array or Reshape Array followed by Indexing a row/column might be faster. I tested it in the past but forgot the results!
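Since the thread itself is LabVIEW (graphical), here is a rough text-based sketch of the same decimation idea in Python. It is only an illustration under assumptions: the function names and the 1000-sample block are made up, and it simply keeps every N-th sample of one channel, either by stepping through the array or by the "reshape into rows, then take one column" route mentioned above.

```python
def decimate_for_display(samples, factor):
    """Keep every `factor`-th sample of one channel, starting with the first."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    return samples[::factor]


def decimate_by_reshape(samples, factor):
    """Same result via 'reshape into rows of `factor`, then take the first column'."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    rows = [samples[i:i + factor] for i in range(0, len(samples), factor)]
    return [row[0] for row in rows]


# Hypothetical stand-in for one 1000-sample block read from a single AI channel.
block = list(range(1000))
shown = decimate_for_display(block, 50)   # 20 points go to the graph
```

Note that plain "every N-th sample" decimation does no filtering, so short spikes between the kept samples will not show up on the plot. That is usually fine for a live display, but it is a reason to log the undecimated data.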

2. It seems like you want the "standard waveform" section to behave like the "User Profile" section in some ways... Perhaps you can simplify the logging by ensuring that they run through the same case. You could do this by loading data into the Shift Register at the bottom based on the Function Generator output using a "Load Standard Profile" button, then using the same (now double used) "Run (loaded/generated) Profile" which runs whatever is visible in the "Loaded Profile" display.

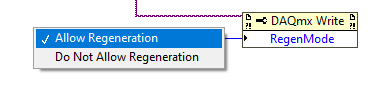

To run indefinitely, you'd need to be able to either loop (assuming you know you generated an integer number of periods of waveform) or continue to generate. The first can probably be accomplished by switching to Continuous Samples with "Regeneration":

This is a DAQmx Write property node - you'd need to execute it for both DO and AO if you wanted both to repeat. It's also not available on all hardware, and can have limited buffer space. I haven't checked if your hardware will support your typical waveform lengths... sorry!
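The "integer number of periods" condition for looping a buffer can be checked with a little arithmetic. The Python sketch below is hardware-free and hypothetical (none of these names come from DAQmx): it picks the shortest buffer length that holds a whole number of periods for a given sample rate and frequency, so that a regenerated buffer wraps around without a jump.

```python
from fractions import Fraction
import math


def samples_for_whole_periods(sample_rate_hz, frequency_hz, min_samples=1):
    """Return (n_samples, n_periods) for the shortest buffer of at least
    `min_samples` samples that contains a whole number of periods.
    Pass integers (or Fractions) so the ratio stays exact."""
    ratio = Fraction(sample_rate_hz) / Fraction(frequency_hz)  # samples per period
    base_samples = ratio.numerator     # smallest count that spans whole periods
    base_periods = ratio.denominator   # number of periods in that count
    repeats = max(1, math.ceil(min_samples / base_samples))
    return base_samples * repeats, base_periods * repeats


def sine_buffer(amplitude, sample_rate_hz, frequency_hz, min_samples=1):
    """Build one loopable sine buffer using the length computed above."""
    n, _ = samples_for_whole_periods(sample_rate_hz, frequency_hz, min_samples)
    return [amplitude * math.sin(2 * math.pi * frequency_hz * i / sample_rate_hz)
            for i in range(n)]


buf = sine_buffer(5.0, 1000, 10)   # 5 V amplitude, 1 kHz update rate, 10 Hz sine
```

For frequencies that do not divide the sample rate evenly (for example 3 Hz at 1 kHz) the loopable buffer gets longer, which is where the limited buffer space mentioned above could start to matter.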

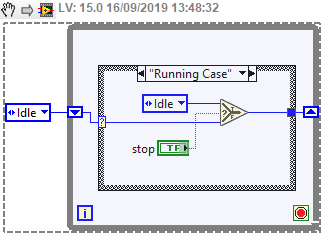

The second is a bit trickier because you'll need to keep calling Write.vi as needed to add more samples to the buffer, and generate them continuously. A separate state with "repeatedly buffering" or something might work for you here. You'll need to pass the task references into that state to be able to repeatedly call Write, along with information about the waveform to be generated. You can exit the case with something like the following:

You could hide or show this button by manipulating the Visibility setting under the Property Node for that control.

To be able to adjust on the fly, you'd need to check those controls in this case (or whatever solution you choose for repeating). Note that if you want to change continuously (i.e. no jumps in value) you need to separately program that, or be careful to only change at locations in time where a discontinuity won't occur. Bob_Schor has a nice post in another thread that discusses a solution to this problem. (I also attempted to provide a solution there, but his is better and has more explanation).
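One common way to avoid jumps when the frequency changes between writes is to carry the phase from one block to the next instead of restarting at zero. The Python sketch below illustrates that idea only; it is not the solution from the other thread, and the class and method names are hypothetical. Each call would correspond to generating the samples for one more Write.

```python
import math


class PhaseContinuousSine:
    """Generates sine blocks whose phase carries over from block to block."""

    def __init__(self, sample_rate_hz):
        self.sample_rate_hz = sample_rate_hz
        self.phase = 0.0  # radians, remembered between calls

    def next_block(self, n_samples, amplitude, frequency_hz):
        """Return the next block; the frequency may differ from the last call."""
        step = 2.0 * math.pi * frequency_hz / self.sample_rate_hz
        block = []
        for _ in range(n_samples):
            block.append(amplitude * math.sin(self.phase))
            self.phase = (self.phase + step) % (2.0 * math.pi)
        return block


gen = PhaseContinuousSine(sample_rate_hz=1000)
first = gen.next_block(100, amplitude=5.0, frequency_hz=10)
second = gen.next_block(100, amplitude=5.0, frequency_hz=25)  # user changed frequency
```

This only keeps frequency changes smooth. An amplitude or explicit phase change still produces a step unless it is applied near a zero crossing or ramped over several samples, as noted above.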

Recording data is something you'd often do in a separate consumer loop. You probably do want all of the data (no decimation) in this case, so you'd need to make sure you send a complete set and then write to disk using your preferred format. TDMS is nice for NI-based systems but can be a little fiddly if your colleagues all use MATLAB ( 😞 😞 ) Raw binary is fiddly in any case, but presumably has the "best performance" - but this isn't always necessary and the complication is often considerable. ASCII/text is simple but takes lots of space at high data rates. A comparison (guess what NI favours...) is available here: Comparing Common File I/O and Data Storage Approaches. If you go down this route and view the linked Writing TDM and TDMS Files in LabVIEW, do yourself a favour and skip over section 2 in preference of section 3 😉
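To make the "separate consumer loop" idea concrete, here is a minimal text-language sketch using Python threads and queues in place of LabVIEW loops and Queues/Channel Wires. Everything in it is an assumption for illustration: `fake_read` stands in for a DAQmx Read, the logger appends to a list where a real one would write TDMS, binary, or text, and a sentinel object shuts the consumer loops down cleanly.

```python
import queue
import threading

STOP = object()  # sentinel telling a consumer loop to finish


def producer(read_block, n_blocks, queues):
    """Read blocks of samples and hand each block to every consumer queue."""
    for _ in range(n_blocks):
        block = read_block()
        for q in queues:
            q.put(block)
    for q in queues:
        q.put(STOP)  # end every consumer loop


def logging_consumer(q, sink):
    """Keep every sample (no decimation)."""
    while True:
        block = q.get()
        if block is STOP:
            break
        sink.extend(block)


def display_consumer(q, sink, factor):
    """Keep only every `factor`-th sample of each block for plotting."""
    while True:
        block = q.get()
        if block is STOP:
            break
        sink.append(block[::factor])


def run_demo(n_blocks=5, block_size=100, factor=10):
    counter = iter(range(n_blocks * block_size))

    def fake_read():  # hypothetical stand-in for reading one block from the AI task
        return [next(counter) for _ in range(block_size)]

    log_q, plot_q = queue.Queue(), queue.Queue()
    logged, plotted = [], []
    threads = [
        threading.Thread(target=logging_consumer, args=(log_q, logged)),
        threading.Thread(target=display_consumer, args=(plot_q, plotted, factor)),
    ]
    for t in threads:
        t.start()
    producer(fake_read, n_blocks, [log_q, plot_q])
    for t in threads:
        t.join()
    return logged, plotted
```

The design point is that the acquisition loop only reads and enqueues, so a slow disk write or graph update cannot stall it, and the logger sees the complete data set while the display gets the reduced one.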

09-16-2019 10:54 AM

This seems to be the most-recently-active of the seemingly related threads (see also here and here) so I'll respond in this one to help consolidate. I'm only gonna focus on hardware and sync stuff, you're getting great help on other aspects of architecture and code structure.

Earlier Sync Questions:

It appears your 9202 AI module is a Delta-Sigma device. As I said before, that's gonna make sync a little trickier. Your AI task will need to play the role of master and be the last one to be started. The AO and DO tasks should use the AI sample clock signal as their own sample clock.

Delta-Sigma modules induce a delay between the time a value is present at the terminal block and the time the A/D conversion is finished. I *think* (but you'll need to check your manual to be sure) that the AI sample clock signal doesn't assert until after the delay from the digital filter. If so, the rest of sync is reasonably straightforward. If not, you'll need a scheme to compensate for the unavoidable digital filter delay involved with a Delta-Sigma device.

Later Sync issues:

Some of your more recent questions suggest an expanded scope to your overall plans for the app. It's no longer quite so clear how important it is for AI to be sync'ed to AO and DO. It *does* seem clear that AO and DO need to be sync'ed to one another.

To sync AO and DO, you can continue to have the DO task use the AO sample clock signal (but be sure the DO task starts *before* the AO task, as already mentioned by @cbutcher). If you don't need AI samples sync'ed to AO & DO, you're free to start and stop AI as you please.

If you do need all 3 tasks sync'ed, you'd need to stop AI if it's already running, configure AO to use the AI task's sample clock, start dependent tasks DO and AO, then start AI.
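The ordering in that last step can be written out as a short sequence. The Python sketch below uses a hypothetical `FakeTask` class that only records calls; it is not the DAQmx API, and the chassis name in the clock terminal is an assumption. It also assumes AO and DO are already stopped, and it routes the AI sample clock to both of them, following the earlier advice in this post.

```python
class FakeTask:
    """Hypothetical stand-in for a DAQmx task; it only records what was called."""

    def __init__(self, name, log):
        self.name = name
        self.log = log
        self.running = False

    def use_sample_clock(self, terminal):
        self.log.append(f"{self.name}.clock={terminal}")

    def start(self):
        self.running = True
        self.log.append(f"{self.name}.start")

    def stop(self):
        self.running = False
        self.log.append(f"{self.name}.stop")


def restart_synced(ai, ao, do, ai_clock_terminal):
    """Stop the master, point the dependents at its clock, start master last."""
    if ai.running:
        ai.stop()
    for dependent in (ao, do):
        dependent.use_sample_clock(ai_clock_terminal)
    do.start()   # dependents are armed first and wait for clock edges
    ao.start()
    ai.start()   # master starts last, so no clock edges are missed


log = []
ai, ao, do = (FakeTask(name, log) for name in ("AI", "AO", "DO"))
ai.start()  # AI was already running for the live display
restart_synced(ai, ao, do, "/cDAQ1/ai/SampleClock")  # assumed chassis name
```

Starting the master last matters because the dependent tasks only advance on the master's clock edges; any edges produced before they are armed are simply lost.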

One advantage of sync'ing via sample clock (instead of the commonly recommended start trigger) is that sample timing across different devices won't drift relative to one another over long periods of time.

That begs the question, how long is "long"? Well, a lot of devices have clock accuracies spec'ed at ~50 parts per million. In practice, you should expect devices to vary from one another by at least 10-20 ppm.

The idea of parts per million scales very straightforwardly. If devices deviate by 10 ppm, then they'll be skewed by 10 sample intervals for every 1 million samples. Or by 1 sample interval for every 100k samples. Your code shows indications of sampling at either 1 or 10 kHz. At 10 kHz on independent devices whose timebases vary by 10 ppm, you'd be skewed by 1 sample for every 10 seconds of data collection.
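The ppm arithmetic above is easy to turn into a small calculator. This Python sketch just restates it; the function names are made up, and the 10 ppm figure is the example value from the post, not a spec for any particular module.

```python
def skew_in_samples(ppm_difference, sample_rate_hz, duration_s):
    """Samples of relative drift between two independent timebases."""
    return ppm_difference * 1e-6 * sample_rate_hz * duration_s


def seconds_per_sample_of_skew(ppm_difference, sample_rate_hz):
    """How long it takes for the two devices to drift apart by one sample."""
    return 1.0 / (ppm_difference * 1e-6 * sample_rate_hz)


drift_10k = skew_in_samples(10, 10_000, 10)        # 10 ppm, 10 kHz, 10 s of data
time_1k = seconds_per_sample_of_skew(10, 1_000)    # 10 ppm at 1 kHz
```

With the post's numbers, 10 ppm at 10 kHz works out to about one sample of skew per 10 seconds, and at 1 kHz the same mismatch takes about 100 seconds to reach one sample.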

-Kevin P

09-16-2019 12:19 PM

Thanks guys - this is extremely helpful!

The 9202 being a Delta-Sigma device is great feedback - I suspected it was, but the NI documents I found that list the Delta-Sigma devices didn't include it. It sounds like the action for me is to configure the code to have everything synced off the AI clock (as opposed to the AO clock I was using) and start the AI task last. This is great - thank you!

As far as displaying the analog input data and periodically pulling it into the state machine (like grabbing the mirror position feedback when manual analog commands are issued or logging multiple seconds of data automatically when the user uploads/runs a spreadsheet defined waveform) I'm still a bit confused on where to place the code and/or how to "structure" the code. I clearly still don't have a great grasp on how multiple while loops interact with one another (and the data flow in general...). I think I grasp the general idea but I'm struggling to "see" how I would do this. Would I configure the AI task outside the main state machine while loop and have a second parallel while loop running with the read and then use notifiers to send the data into the main state machine loop? Or would the read loop be somewhere inside the main state machine while loop and/or inside one of the cases? I apologize if this is a dumb question...I'm drinking from a firehose! Thanks again for all the help!

09-16-2019 05:14 PM

Again, my focus has been on the DAQmx & sync stuff, and your initial posts about sync made me suppose you'd want sample-for-sample correlation among DO, AO, and AI. In other words, you'd want to know exactly which DO and AO samples were being generated when a particular AI sample was acquired.

So that's one key question - is it important to sync AI with DO/AO? Or is it ok if AI is independent of DO/AO, with no particular time correlation?

(Note: unlike apps using SAR type A/D converters, you can't simply loop back your DO/AO into 2 of your AI channels to get "close-enough" correlation. The inherent digital filter delay of Delta Sigma converters would throw that off and you'd need to shift your DO/AO data to realign it.)

Tight hardware-level sync will be harder to implement across the various modes of operation you want to support and you may get stuck with some undesired constraints -- such as needing to restart all the tasks when switching modes. If you can live with letting AI be independent from DO/AO, some parts of your app become more straightforward.

-Kevin P

09-16-2019 08:25 PM

Thanks Kevin. Let me think about this a bit more and get back to you - it's important that they are synchronized but not to the extent where I need exact sample-for-sample correlation.

Perhaps someone else can help me with the data logging? I can't figure out for the life of me how to incorporate displaying/logging analog input data while the state machine is running without duplicating entire portions of code (create/configure task, start, read/write, stop, clear) in each separate event case.

Thanks!

09-16-2019 08:41 PM

@needhelp2378 wrote:

Perhaps someone else can help me with the data logging? I can't figure out for the life of me how to incorporate displaying/logging analog input data while the state machine is running without duplicating entire portions of code (create/configure task, start, read/write, stop, clear) in each separate event case.

I can take a look at it again. Is "Master Controller (v0).vi" from a few posts up the most recent version?

Generally there is some way to avoid code duplication. In some cases, that may just be a subVI (which is still duplication, but less egregious).