Synchronizing Analog Input and Delayed Digital Output (LabVIEW 2014)

01-15-2018 03:27 PM - edited 01-15-2018 03:28 PM

I'm using LabVIEW 2014 with a USB-6363. Since I suspect that my attempt at a solution is much more complex than it should be, I'll separate what I want to do from how I tried to do it.

Objective

Given a set of U8 binary numbers and a certain clock, I'd like to, for each U8 binary number,

- begin recording analog input

- after a small initial delay, write out the binary number to a port on my device

- after a preset number of clock pulses, end analog input collection and append the data to an existing file.

I would like to repeat the above three steps for each U8 number in an array, so I end up with a file with a different series of data for each number in the array.
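As a rough, hardware-free illustration of the three steps above (not the poster's VI and not a DAQmx program), the per-number cycle can be sketched in plain Python. It assumes the digital output is clocked by the same sample clock as the analog input, so the initial delay becomes a run of leading zero samples in the output pattern. The names `build_do_pattern`, `fake_acquire`, and `run_cycles` are hypothetical, as is the CSV layout, and `fake_acquire` is only a stand-in for a real analog read.

```python
import csv
import os
import tempfile


def build_do_pattern(value, delay_samples, samples_per_cycle):
    """Digital output samples for one cycle: zeros during the delay, then the U8 value."""
    if not 0 <= value <= 0xFF:
        raise ValueError("value must fit in a U8")
    if not 0 <= delay_samples <= samples_per_cycle:
        raise ValueError("delay must fit inside the cycle")
    return [0] * delay_samples + [value] * (samples_per_cycle - delay_samples)


def fake_acquire(do_pattern):
    """Hypothetical stand-in for the hardware read: echoes the DO pattern as floats."""
    return [float(sample) for sample in do_pattern]


def run_cycles(values, delay_samples, samples_per_cycle, path, acquire=fake_acquire):
    """Run one cycle per U8 value and append one record (row) per value to the file."""
    for value in values:
        pattern = build_do_pattern(value, delay_samples, samples_per_cycle)
        record = acquire(pattern)  # fixed number of samples ends the cycle
        with open(path, "a", newline="") as f:  # append mode keeps earlier records
            csv.writer(f).writerow([value] + record)
```

Calling `run_cycles([1, 255], 1, 3, path)` would append two rows, one per number, each starting with the U8 value followed by that cycle's samples. On real hardware the `acquire` argument would be replaced by whatever performs the finite, clocked read.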

Implementation

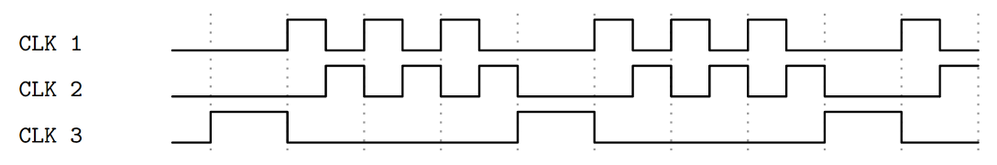

I'm new to LabVIEW, and I spent a fair amount of time trying to figure out the best way to do this. I ended up trying to control each measurement "cycle" of reading, writing, and saving with one clock and handling the measurements with another (but triggering off of the cycle clock). I was going for something like this,

In my implementation, I didn't know how to change CLK 3's low time so that it encompassed a variable number of preset clock pulses in step 3, so I just made it long enough to encompass any number I might choose (1 second). That is likely my first of many mistakes.

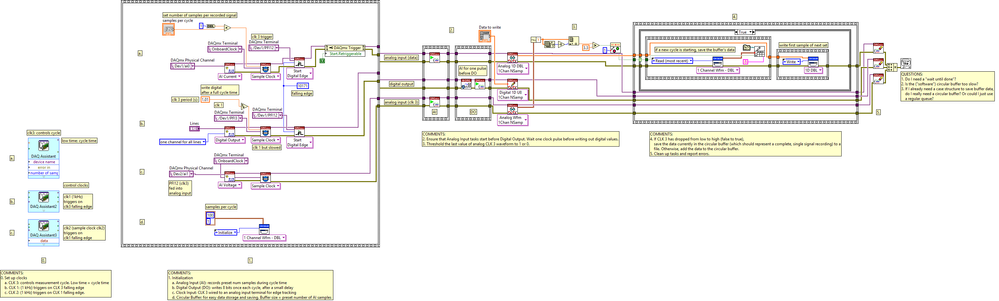

I ended up with the following VI (which I've also attached):

which uses three DAQmx tasks, three DAQmx Assistant clocks, and a circular buffer. The circular buffer is sized to accommodate exactly one recorded signal's worth of samples, and it saves whenever one of the DAQmx tasks (CLK 3's terminal fed into an analog input on my device) detects a falling edge.
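For readers who want the buffering idea in text form, here is a minimal Python sketch of a circular buffer that holds exactly one record and is saved when a monitored gate signal shows a falling edge. It is not a translation of the attached VI. The class name `EdgeTriggeredRecorder`, the 2.5 V threshold, and the choice to include the sample that arrives with the falling edge are all assumptions made for illustration.

```python
from collections import deque


class EdgeTriggeredRecorder:
    """Keeps the most recent samples and saves them on a falling edge of a gate signal."""

    def __init__(self, samples_per_record, threshold=2.5):
        # deque with maxlen behaves as a circular buffer: old samples drop off the front
        self.buffer = deque(maxlen=samples_per_record)
        self.threshold = threshold  # assumed logic threshold in volts
        self.prev_high = False
        self.records = []  # saved records, one list per detected falling edge

    def push(self, sample, gate_level):
        """Add one sample; save a copy of the buffer if the gate just went high -> low."""
        self.buffer.append(sample)
        high = gate_level > self.threshold
        if self.prev_high and not high:
            self.records.append(list(self.buffer))
        self.prev_high = high
```

Feeding samples 1 through 6 with gate levels 5, 5, 5, 5, 0, 0 into a recorder of size 3 saves a single record, `[3, 4, 5]`, at the moment the gate drops.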

I know this is likely far too complicated for what I'm trying to do, and probably contains many mistakes. However, I figured I should ask for advice about better, simpler, or more effective methods of solving my problem before attempting to further complicate and debug my own. This is far from my first attempt, and each time I feel as if the best method of doing it completely escapes me.

Please let me know if there's anything I can clarify.

Tags: 6363, synchronization

01-16-2018 12:52 PM - edited 01-16-2018 12:53 PM

There's a little too much for me to fully engage on all the details right now. Let me break it down into grandpa-on-a-porch-rocking-chair advice, starting with good news and bad news:

Good news: this is a valiant and impressive effort for someone new to LabVIEW.

Bad news: it still isn't close enough for me to help solve fully & quickly.

Next grandpa advice: let's take the scenic route.

I'm going to assume that you'd *like* to learn some good practices for programming data acq programs in LabVIEW. And when you have multiple tasks with closely interrelated timing and sequencing, you're probably gonna end up putting all the tasks into the same VI, just as you've already done.

HOWEVER, I think you'll get more out of the scenic route where you make a separate VI for each of the tasks. First get each individual task working as close as you can to the way you want it. Only when you've worked out all the kinks in the individual tasks should you then try to gather them all back together into a single VI.

You're gonna learn better lessons on the scenic route. It's too easy to draw wrong conclusions from lots of shotgunning efforts to tweak and tweak and tweak on a single diagram full of complex interactions. It'll also be easier to get help here because the scope of your code and questions will be more manageable. Bite-sized pieces.

-Kevin P

01-16-2018 06:02 PM

I appreciate you taking the time to reply! You are correct that I do wish to learn to use LabVIEW effectively; though I'm just starting with it, I anticipate it being a tool I'll use frequently in the future—it's an incredibly versatile piece of software! I will gladly take the scenic route.

I'll try to get each of these tasks running separately with the correct clock structure (if the described clock structure is indeed the best way to handle the timing). I'll post again (as a new question) when I hit my first bite-sized roadblock.

Thanks again!