Minimum time between conversion - NI 9205

Solved! 03-11-2022 01:19 PM

Hi all,

I use one NI 9205 to acquire data from 20 different channels (NI CompactRIO 9114, LabVIEW FPGA).

I need a sampling frequency of 12.5 kHz or higher.

As far as I understand, with the default settings (minimum time between conversions = 8 µs), the maximum sampling frequency in my case is:

fs = 1 / (20*8e-6) = 6.25 kHz.

To increase the sampling frequency, I plan to set the minimum time between conversions to 4 µs, which gives:

fs,max = 1 / (20*4e-6) = 12.5 kHz
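The two figures above can be reproduced with a short Python sketch. It assumes the module converts one channel at a time, so the per-channel rate is bounded by the channel count times the minimum time between conversions, and it ignores any extra loop overhead on the FPGA. The function name is hypothetical and not part of any NI API.

```python
def max_rate_per_channel_hz(n_channels: int, min_conversion_time_s: float) -> float:
    """Upper bound on the per-channel sample rate of a multiplexed module.

    Assumes channels are converted one after another, each conversion
    taking at least min_conversion_time_s seconds.
    """
    if n_channels <= 0 or min_conversion_time_s <= 0:
        raise ValueError("channel count and conversion time must be positive")
    return 1.0 / (n_channels * min_conversion_time_s)


# 20 channels at the default 8 us and at the reduced 4 us setting.
for t_us in (8, 4):
    rate_hz = max_rate_per_channel_hz(20, t_us * 1e-6)
    print(f"{t_us} us between conversions: {rate_hz / 1e3:.2f} kHz per channel")
```

With 20 channels this gives 6.25 kHz at 8 µs and 12.5 kHz at 4 µs, matching the hand calculation.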

The page linked below states:

https://zone.ni.com/reference/en-XX/help/373197L-01/criodevicehelp/conversion_timing/

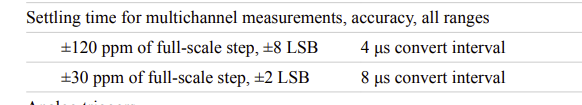

"The default minimum time between conversions for the NI 9205/9206 is 8 µs. The accuracy specifications in the NI 9205 and NI 9206 hardware documentation on ni.com/manuals are based on this default value. If you set the minimum time between conversions to at least 8 µs, the accuracy of the module is not affected. If you set the minimum time between conversions to less than 8 µs, the accuracy of the module degrades if you sample data from multiple channels."

My question is: how much accuracy will I lose?

In my case, the readings on the channels should have a maximum error of 16.5 mV.

Does setting the minimum time between conversions to 4 µs produce more than 16.5 mV of error?

I understand that the error and accuracy depend on temperature, calibration, noise, etc.

I just want a rough idea of the amount of error.

Please let me know if you need more information.

Thanks a lot,

Sina

03-12-2022 12:05 PM

Your question is answered in the instrument datasheet.

The accuracy will be three times worse than with the 8 µs conversion interval.

Soliton Technologies

New to the forum? Please read community guidelines and how to ask smart questions

Only two ways to appreciate someone who spent their free time to reply/answer your question - give them Kudos or mark their reply as the answer/solution

03-14-2022 05:13 AM

Hi Santhosh

Thank you very much for your answer.

I hadn't noticed that the datasheet mentions the accuracy.

Now I have two more questions:

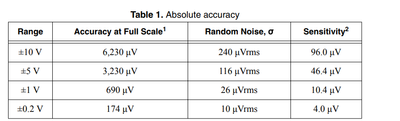

1- How should I calculate the LSB?

For example, if I work with the ±10 V range, is it correct to say that:

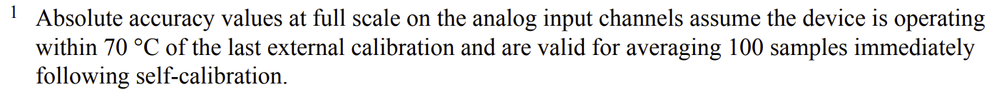

LSB = Full Scale/(2^n-1), where n = number of bits (n = 16 for the NI 9205) --> LSB = 10/(2^16-1) = 0.153 mV?

Then the accuracy for the 8 µs interval would be ±2*0.153 = ±0.306 mV

and the accuracy for the 4 µs interval would be ±8*0.153 = ±1.224 mV?
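A small Python sketch of the LSB arithmetic above, for checking the numbers. It uses the formula exactly as written in the post, with the 2 LSB and 8 LSB multipliers quoted there. As a labeled assumption, it also evaluates the same formula with the full 20 V span of the ±10 V range, which is another common way to define full scale for a bipolar input; the datasheet should be checked for which convention it uses. All names are hypothetical.

```python
def lsb_volts(full_scale_v: float, n_bits: int) -> float:
    """LSB size using the formula from the post: full scale / (2^n - 1)."""
    return full_scale_v / (2 ** n_bits - 1)


N_BITS = 16  # resolution stated in the post for the NI 9205

# Convention used in the post: full scale taken as 10 V.
lsb_post = lsb_volts(10.0, N_BITS)
# Assumption for comparison: full scale taken as the whole -10 V..+10 V span.
lsb_span = lsb_volts(20.0, N_BITS)

for label, lsb in (("FS = 10 V", lsb_post), ("FS = 20 V span", lsb_span)):
    print(
        f"{label}: LSB = {lsb * 1e3:.4f} mV, "
        f"2 LSB (8 us) = {2 * lsb * 1e3:.4f} mV, "
        f"8 LSB (4 us) = {8 * lsb * 1e3:.4f} mV"
    )
```

Without intermediate rounding, 8 LSB at FS = 10 V comes out near 1.221 mV; the 1.224 mV in the post results from rounding the LSB to 0.153 mV first.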

2- In the datasheet, what is the difference between the accuracy mentioned here:

and the absolute accuracy?

Which one should be considered when we want to estimate the maximum error?

Thanks again,

Sina

03-14-2022 08:17 AM

If you are measuring just one sample, I would add the conversion error on top of the absolute accuracy: ±(6.23 mV + 1.224 mV).

Per the specifications, the published accuracy numbers are based on a 100-sample average.
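As a rough check against the 16.5 mV requirement from the original question, the sum suggested above can be written as a small Python sketch. It assumes a simple linear (worst-case) addition of the two terms quoted in this thread and does not model any additional single-sample noise, temperature drift, or calibration age. The names are hypothetical.

```python
ABS_ACCURACY_MV = 6.23       # absolute accuracy quoted in the reply
CONVERSION_ERROR_MV = 1.224  # 8 LSB term at 4 us, as computed in the thread
REQUIREMENT_MV = 16.5        # maximum error allowed by the application


def worst_case_error_mv(*terms_mv: float) -> float:
    """Conservative budget: add the magnitudes of all error terms."""
    return sum(abs(term) for term in terms_mv)


total_mv = worst_case_error_mv(ABS_ACCURACY_MV, CONVERSION_ERROR_MV)
margin_mv = REQUIREMENT_MV - total_mv

print(f"Estimated worst-case error: +/-{total_mv:.3f} mV")
print(f"Margin to requirement: {margin_mv:.3f} mV")
print("within budget" if total_mv <= REQUIREMENT_MV else "exceeds budget")
```

Under these assumptions the estimate is about ±7.45 mV, which leaves margin below 16.5 mV.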

03-14-2022 08:52 AM

Thanks a lot Santhosh!

Best regards,

Sina