Mean PtByPt calculations

05-18-2016 09:15 AM

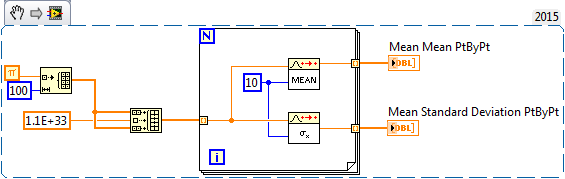

We recently discovered a difference between using 'Mean PtByPt.vi' and using the mean output of 'Standard Deviation PtByPt.vi'. If the dataset contains a value that is large compared to the rest of the set (a spike), Standard Deviation PtByPt returns an erroneous mean value after the spike. This doesn't happen in 'Mean PtByPt.vi', since it uses a function to detect large changes in the data and switches to another algorithm in that case. I believe this has something to do with the way DBLs are handled; it looks as if we cannot get the resolution back when we go from high to low values. Either that, or some remnant of the large value is left over even after it has moved out of the mean window.

Is this a bug in Standard Deviation PtByPt, or is there a rational explanation of why it doesn't use the same technique as Mean PtByPt?

Also, could someone explain why this happens? Mean PtByPt switches to another algorithm if the relative difference between values is greater than 10^5. Where does this value come from?

CLA

https://www.prevas.se/expertis/test--regulatoriska-krav.html

05-18-2016 10:02 AM - edited 05-18-2016 10:03 AM

Can you tell us more about the erroneous mean value you see?

I dropped your snippet into LV 2015 and ran it. The two graphs appear identical to me.

If there is a difference, please point it out.

05-18-2016 10:03 AM - edited 05-18-2016 10:03 AM

You need to understand what each one of these is giving you, because they are not the same.

The mean from Mean gives you the average value of the incoming data. 4, 5, 4, 7, 8, 10: mean = 6.3

The mean from Stdev gives you an average of the change in the values of the incoming data. 4, 5, 4, 7, 8, 10: mean stdev = 2.422

The change from 4 to 5 is 1. The change from 5 to 4 is 1. The change from 4 to 7 is 3. Do that and take the average; it does not equal the mean value.
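For reference, the two figures quoted above can be checked in a few lines. This is only a sketch in Python (chosen for illustration, since the VIs themselves are graphical); it suggests that 2.422 matches the sample standard deviation of the six values rather than an average of the point-to-point changes.

```python
import statistics

data = [4, 5, 4, 7, 8, 10]

mean = statistics.mean(data)          # arithmetic mean of the values
sample_std = statistics.stdev(data)   # sample standard deviation (n - 1 divisor)
pop_std = statistics.pstdev(data)     # population standard deviation (n divisor)

# Average of the absolute point-to-point changes, for comparison
steps = [abs(b - a) for a, b in zip(data, data[1:])]
mean_step = statistics.mean(steps)

print(round(mean, 1), round(sample_std, 3), round(pop_std, 3), mean_step)
```

The mean of the data and the standard deviation are different statistics, but the question in this thread concerns the separate mean output of the standard deviation VI, which is documented as the mean of the data.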

GHSP

05-18-2016 10:16 AM

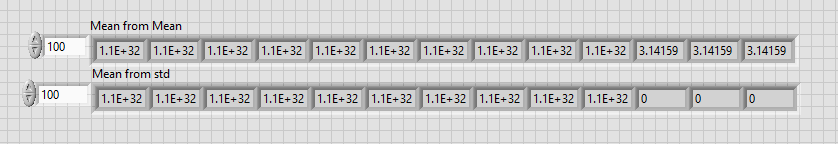

No, they are not identical. After the peak, the mean calculation in StdDev.vi outputs zero, not pi. You do not see it at this scale.

It happens because of DBL precision: it is ~15 digits, and if a difference falls below that, the calculations in that VI fail.

Yes, there is a difference in how these functions handle the DBL precision limitation. Mean.vi does not modify the sum at all if the relative change is smaller than 10^-5, whereas the mean calculation in Standard Deviation fails completely. I would agree it is a bug.
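The precision limit can be illustrated outside LabVIEW. The sketch below uses Python (3.9 or later for `math.ulp`), whose `float` is the same IEEE 754 double as DBL. The spike magnitude of 1e33 is an assumption taken from the figures quoted elsewhere in this thread.

```python
import math
import sys

spike = 1e33       # assumed spike magnitude
small = math.pi    # the "normal" signal value

digits = sys.float_info.dig   # decimal digits a double always preserves
gap = math.ulp(spike)         # spacing between adjacent doubles near the spike

# pi is far smaller than the gap, so adding it to the spike changes nothing
absorbed = (spike + small) == spike

# Subtracting the spike again therefore yields 0.0, not pi
recovered = (spike + small) - spike

print(digits, gap, absorbed, recovered)
```

Near 1e33 adjacent doubles are roughly 1.4e17 apart, so a value like pi cannot be represented alongside the spike in a single running sum.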

05-18-2016 10:22 AM - last edited on 07-11-2024 03:22 PM by Content Cleaner

Hi M_Peeker,

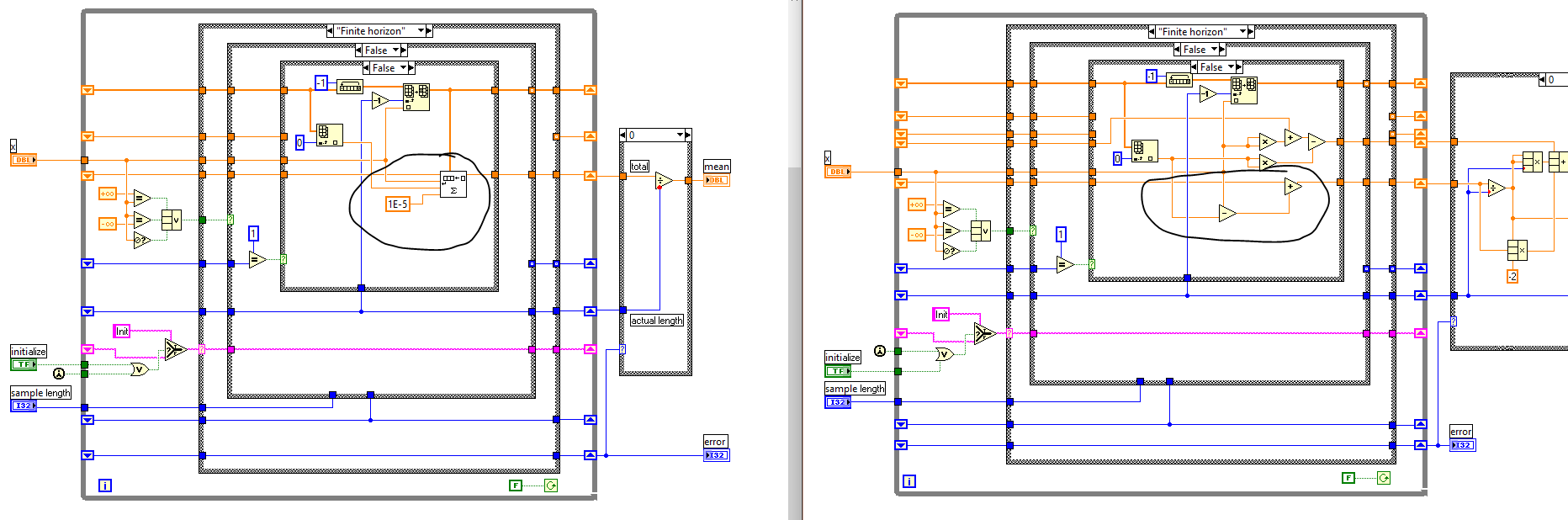

When you take a look inside, the two VIs don't work the same way.

In the Standard Deviation PtByPt VI, at iteration 101 (i = 100) it stores the value in a shift register; call it X. Another shift register stores an array with the previous ten elements; call it Y. Then at iteration 110, when it enters the finite horizon state, it does the following calculation:

Pi - Y[0] + X, and divides the result by the length to calculate the mean value.

In numbers it does 3.14 - 1.1e33 + 1e33, and it returns 0 instead of pi, before the division.

Try it in your LabVIEW; it will do the same. This is because a double-precision number only has about 15 significant digits.

So 3.14 - 1.1e33 gives -1.1e33.

Here is a nice document about doubles.

Mean PtByPt only divides the "Total" by the actual length.

Anyway, you're right that you should use Mean PtByPt.

Regards

05-18-2016 10:33 AM - edited 05-18-2016 10:47 AM

I too can confirm this behavior. Here is the output from the two functions in the OP's VI. Note that after the spike, the Mean PtByPt function goes back to pi, but the Std PtByPt function somehow goes to zero.

The difference is that Mean PtByPt uses a special function that detects a large change in the input value. If there is a large change in the input value, the function redoes the summation over the whole rolling window instead of simply subtracting the oldest element and adding the new one. See the picture below: the left is Mean PtByPt and the right is Std PtByPt. Note the circled part: Mean PtByPt uses a special function to update the summation, while Std PtByPt does not. I really don't see any reason why Std PtByPt does not use that function. My guess is that this was a bug in both functions, but when the NI people fixed it they only did so in the Mean PtByPt function and forgot that the Std function needed the fix as well 🙂

Edited to show where the VIs differ.
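The guarded update described above might look roughly like the following Python sketch. It is not NI's implementation: the class name `RollingMean`, the `ratio_limit` parameter, and the exact detection rule (ratio of the magnitudes of the incoming and outgoing samples) are assumptions made for illustration, with the default threshold echoing the 10^5 figure mentioned in this thread.

```python
from collections import deque
import math


class RollingMean:
    """Rolling mean over the last `length` samples (illustrative sketch only)."""

    def __init__(self, length, ratio_limit=1e5):
        self.window = deque(maxlen=length)
        self.total = 0.0
        self.ratio_limit = ratio_limit  # assumed threshold for a "large change"

    def update(self, x):
        full = len(self.window) == self.window.maxlen
        oldest = self.window[0] if full else 0.0
        self.window.append(x)  # a full deque drops its oldest sample here
        lo, hi = sorted((abs(x), abs(oldest)))
        if hi > 0.0 and (lo == 0.0 or hi / lo > self.ratio_limit):
            # Large change: rebuild the sum from the stored samples
            self.total = math.fsum(self.window)
        else:
            # Ordinary case: cheap incremental update
            self.total += x - oldest
        return self.total / len(self.window)


rm = RollingMean(10)
signal = [math.pi] * 20 + [1e33] + [math.pi] * 30
out = [rm.update(v) for v in signal]
print(out[19], out[20], out[-1])
```

With this guard the sum is rebuilt both when the spike enters the window and when it leaves, so the output returns to pi once the spike has passed.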

05-18-2016 10:40 AM

The original poster should have given that level of detail in their original message. They presented a VI, but it did not demonstrate the problem because the graphs do not show it. A clear description of what the difference was ("Hey, it's pi here and zero there") is needed, with indicators that show it.

I don't agree that it is a bug, because it is ridiculous to try to do math on numbers that have such a huge difference in magnitude. It's like all the posts we get where people complain of a bug because 0.3 is not equal to 0.3.

05-18-2016 10:46 AM

Hi Ravens Fan,

I don't agree. For both functions, when you look at the detailed help, you find the same description for both mean outputs:

"mean is the mean, or average, of the values in the set of input data points specified by sample length. "

But the algorithms inside aren't the same, and in some cases they return different values.

Regards.

05-18-2016 10:57 AM - last edited on 07-11-2024 03:22 PM by Content Cleaner

NI did a bug fix in LV2009 on the Mean PtByPt function. See ID 138025 in the LabVIEW 2009 bug fixes. I think they tried to fix this in Mean PtByPt and actually forgot about the Std PtByPt function. The cause of the problem is indeed as sabri.jatlaoui mentioned, but what I don't understand is that after the rolling sum goes to 0, it just stays at 0 forever, even when the spike has long passed.

05-18-2016 11:09 AM

AuZn wrote:

"what I don't understand is that after the rolling sum goes to 0, it just stays at 0 forever, even when the spike has long passed."

Because the mean calculation in StdDev PtByPt does not sum the rolling array of old values. It keeps a sum and modifies it by the difference between the new value and the oldest value. Due to DBL precision, the small difference is lost and the sum is modified by the wrong value.
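A small simulation makes the "stays at 0 forever" behavior concrete. This is a Python sketch of the incremental scheme described above, not NI's code; the function name `naive_rolling_means`, the window length of 10, and the 1e33 spike are assumptions for illustration.

```python
import math


def naive_rolling_means(samples, length):
    """Rolling mean that only ever adjusts a stored sum (illustrative sketch)."""
    window = []
    total = 0.0
    means = []
    for x in samples:
        window.append(x)
        if len(window) > length:
            oldest = window.pop(0)
            # The difference is rounded first: pi - 1e33 becomes exactly -1e33
            total += x - oldest
        else:
            total += x
        means.append(total / len(window))
    return means


signal = [math.pi] * 20 + [1e33] + [math.pi] * 30
means = naive_rolling_means(signal, 10)
print(means[19], means[20], means[30], means[-1])
```

When the spike enters, the stored sum becomes exactly 1e33 and the nine remaining pi values are lost. When the spike leaves, the update adds exactly -1e33, which brings the sum to 0. From then on every update adds pi - pi = 0, so nothing ever restores the sum; only a full re-summation of the window would.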