
Jitter issues in LinuxRT when changing timed loop period

02-04-2016 01:30 PM


I ran into an issue with timed loop jitter in my RT application running on a cRIO-9035 (Linux). After monitoring execution time in many different places, I have come to the conclusion that the problem is not the code within the loop but the loop itself.

In the loop I am consuming a DMA FIFO from the FPGA, and I always request the same number of elements from it. My program is pretty well synced with my FPGA, but to account for minor jitter I run the timed loop faster for one iteration if AvailableSamples is >= my desired number of samples after the FIFO read.
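The catch-up rule described above can be modeled in a few lines. LabVIEW is graphical, so this is only a text-based Python sketch of the decision logic, not the actual VI; the 5 ms nominal period, the 1 ms catch-up period, the 9000-element read size, and the `next_period_ms` name are all assumptions for illustration.

```python
# Hypothetical model of the "run faster for one iteration" catch-up rule.
# All constants are assumed values, not taken from the real application.

NOMINAL_PERIOD_MS = 5      # assumed normal timed-loop period
CATCH_UP_PERIOD_MS = 1     # assumed shortened period for one catch-up iteration
SAMPLES_PER_READ = 9000    # assumed fixed number of elements requested per read


def next_period_ms(available_after_read: int) -> int:
    """Choose the period for the next iteration from the FIFO backlog.

    If at least one more full read is already waiting after this read,
    the next iteration runs sooner to drain the backlog; otherwise the
    loop keeps its nominal period.
    """
    if available_after_read >= SAMPLES_PER_READ:
        return CATCH_UP_PERIOD_MS
    return NOMINAL_PERIOD_MS


for backlog in (0, 4500, 9000, 12000):
    print(backlog, next_period_ms(backlog))
```

Note that this rule changes the loop period at run time, which is exactly the behavior the rest of this thread suspects of causing the late iterations.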

Anyhow, my issue is that when I change the period, my loop hangs up. Normally the code within the loop takes ~1.5-2.5 ms to complete, but when I change the period, the iteration takes 30+ ms. Is it known that changing the period like this is bad practice, or does anyone have any insight into why this might happen?

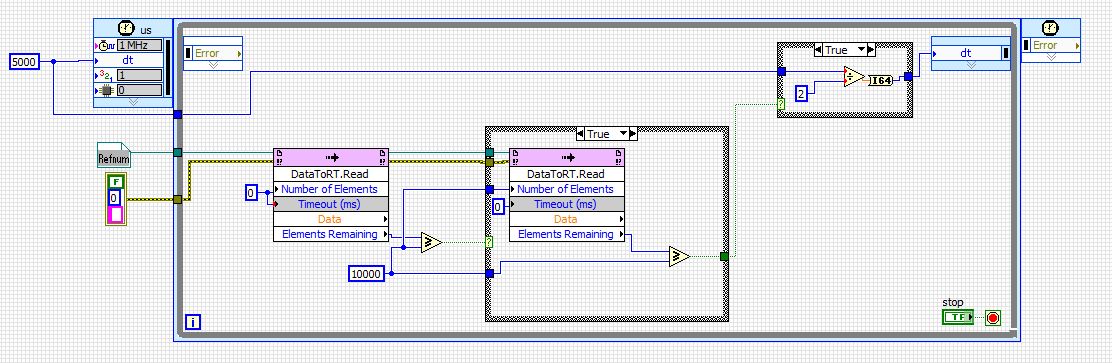

(The posted screenshot is just an example of my issue, not my actual VI)

For now my solution is to run an inner loop that executes once (normal mode) or twice (when buffer is getting kind of full) while leaving the timed loop period unchanged.

I would love some comments on this.

Thanks,

Corey

Corey Rotunno

02-04-2016 02:17 PM


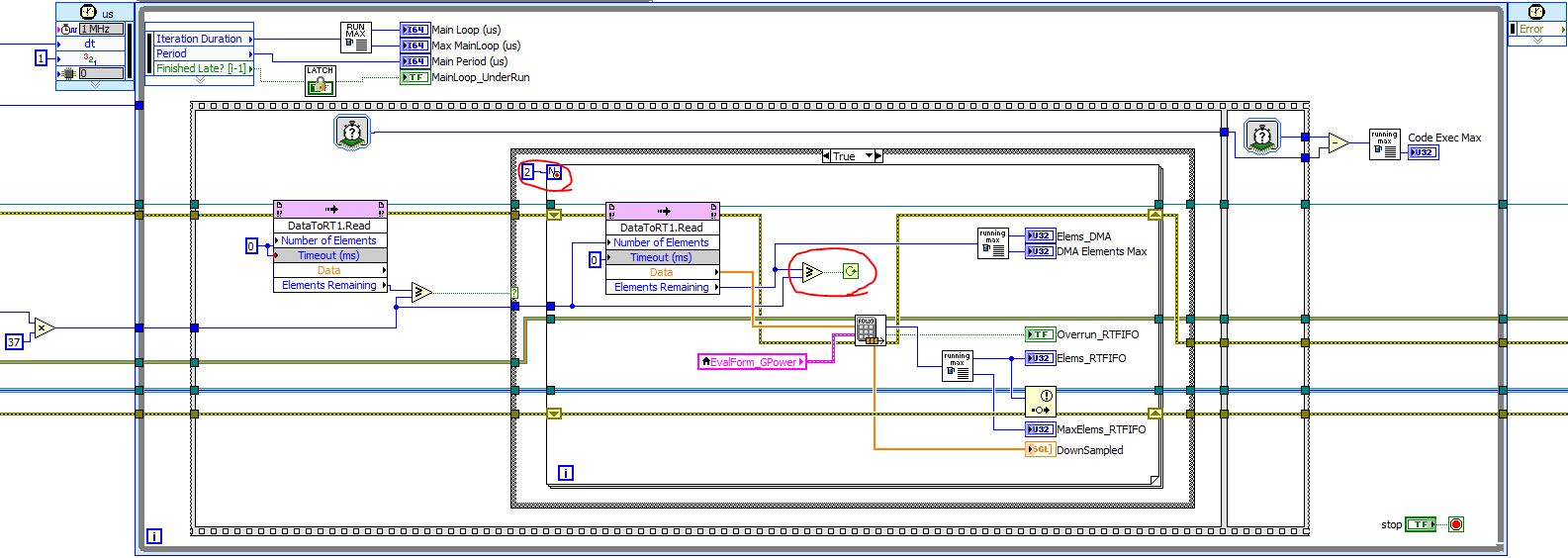

As a follow-up, my "solution" didn't work. I am no longer changing the period of my timed loop, but I am still getting some infrequent but fairly major jitter. I timed the execution of all the code within the timed loop, and the maximum I saw was <2 ms; however, the "Iteration Duration" output of the timed loop's left data node reports late iterations lasting anywhere from 10 ms to 30 ms. CPU utilization on my cRIO is ~40% and RAM usage is <30%, with no spikes in either.
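One way to separate loop scheduling delay from code execution time is to log a timestamp at the start of each iteration and compare consecutive gaps against the period. The Python sketch below assumes hypothetical millisecond timestamps and a hypothetical `analyze_iterations` helper; it follows the same idea as the "Iteration Duration" value without claiming to reproduce that node's exact semantics.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class JitterReport:
    durations_ms: List[float]   # gap between consecutive iteration starts
    late_iterations: List[int]  # indices of iterations that overran the period
    max_duration_ms: float


def analyze_iterations(start_times_ms: List[float], period_ms: float) -> JitterReport:
    """Flag iterations whose start-to-start duration exceeds the loop period."""
    # Duration of iteration i is the time from its start to the next start.
    durations = [b - a for a, b in zip(start_times_ms, start_times_ms[1:])]
    late = [i for i, d in enumerate(durations) if d > period_ms]
    return JitterReport(durations, late, max(durations, default=0.0))


report = analyze_iterations([0, 5, 10, 40, 45], period_ms=5)
print(report.late_iterations, report.max_duration_ms)
```

With these sample timestamps only the third iteration (10 ms to 40 ms) is flagged. If the code inside that iteration was separately timed at under 2 ms, the remaining gap points to something outside the loop body rather than the loop's own code.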

My buffers are large enough to absorb this, but since I am new to the RT world, I am hoping for some insight into the problem.

Thanks,

Corey

Corey Rotunno

02-05-2016 11:13 AM


Corey,

This may be the result of reading only when the number of elements is greater than 10000. Your code could execute in under 2 ms most of the time because you don’t read anything during those iterations, while the occasional iterations where you do read all 10000 elements take much longer.
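This hypothesis can be illustrated with a small simulation: if data arrives at a steady rate but the loop only reads once the backlog reaches a threshold, most iterations do no FIFO work and a few do all of it. The Python sketch below is hypothetical; the arrival rate of 2500 elements per iteration is an assumption, and the 10000-element threshold matches the figure mentioned above.

```python
from typing import List


def simulate_threshold_reads(arrivals_per_iteration: int, threshold: int,
                             iterations: int) -> List[int]:
    """Return the number of elements read on each iteration when the loop
    reads exactly `threshold` elements, and only once that many are waiting."""
    backlog = 0
    reads = []
    for _ in range(iterations):
        backlog += arrivals_per_iteration  # data produced by the FPGA during one period
        if backlog >= threshold:
            backlog -= threshold           # one large, slow read
            reads.append(threshold)
        else:
            reads.append(0)                # idle iteration: nothing read, fast
    return reads


print(simulate_threshold_reads(2500, 10000, 8))
```

Here three cheap iterations are followed by one expensive one, so a measured "typical" execution time can hide the cost of the iterations that actually read.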

Have you tried waiting for fewer elements or even reading fewer elements every iteration? You could also just increase your timed loop period to accommodate the time it takes to read all 10000 elements.

Also, do you have any other high priority loops running that would prevent this loop from executing on time?

02-05-2016 11:57 AM


Wilbur,

So, in my application I was reading 9000 elements per iteration every 5 ms (36 channels @ 50 kS/s). There are essentially no iterations where I don't read that many values; the 2 ms figure is for an iteration that actually reads. I am having pretty good luck right now reading more samples per iteration at a lower loop rate. I am currently running the pictured loop at 100 Hz, so I now read 18000 elements per iteration. My jitter issues are much less pronounced now, although I still get an occasional iteration that exceeds my 10 ms loop period; most iterations take 2.5-3 ms. On a related note, I also split my DMA FIFO data across two DMA channels that I recombine on the RT system. This seems to help a little, but the lower loop rate had a much larger effect.
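The read sizes quoted above follow directly from the channel count, per-channel rate, and loop period. A minimal Python check, assuming 50 kS/s is the per-channel rate (which is what makes the 9000 and 18000 figures consistent); `samples_per_read` is a hypothetical helper name:

```python
def samples_per_read(channels: int, rate_per_channel_hz: int, period_ms: int) -> int:
    """Elements (summed over all channels) that accumulate during one loop period.

    Integer arithmetic avoids floating-point rounding: S/s * ms / 1000 = samples.
    """
    total = channels * rate_per_channel_hz * period_ms
    if total % 1000:
        raise ValueError("period does not hold a whole number of samples")
    return total // 1000


print(samples_per_read(36, 50_000, 5))   # 5 ms period (200 Hz loop) -> 9000
print(samples_per_read(36, 50_000, 10))  # 10 ms period (100 Hz loop) -> 18000
```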

I do have one other high-priority timed loop, assigned to core 1; the loop in question is assigned to core 0 (I am running on a dual-core Atom cRIO).

Corey Rotunno

02-05-2016 01:55 PM


> I am actually having pretty good luck right now reading more samples per iteration, at a lower loop rate.

That makes sense, given that each FIFO read carries some fixed processor overhead. Reading fewer elements per read means the loop has to execute more often to keep up with the incoming data. If you read too few elements at a time, the time spent on the FIFO read overhead (and any other functions in the loop) will exceed the loop period.
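A simple cost model makes this trade-off concrete: each iteration pays a fixed overhead plus a per-element cost, while the loop period shrinks in proportion to the read size. The 1 ms overhead and 0.1 µs per-element figures below are hypothetical placeholders, not measured cRIO numbers, and `loop_load` is an illustrative name; the 1.8 MS/s aggregate rate is 36 channels at 50 kS/s.

```python
def loop_load(elements_per_read: int, aggregate_rate_sps: float,
              overhead_s: float, per_element_s: float) -> float:
    """Fraction of the loop period spent working; above 1.0 the loop cannot keep up."""
    period_s = elements_per_read / aggregate_rate_sps     # time for that many elements to arrive
    work_s = overhead_s + elements_per_read * per_element_s
    return work_s / period_s


RATE = 1_800_000      # 36 channels x 50 kS/s
OVERHEAD = 1e-3       # assumed fixed cost per iteration (hypothetical)
PER_ELEMENT = 1e-7    # assumed cost per element (hypothetical)

for n in (900, 9000, 18000):
    print(n, f"{loop_load(n, RATE, OVERHEAD, PER_ELEMENT):.2f}")
```

Under these assumptions the load falls from about 2.18 (cannot keep up) at 900 elements to 0.38 at 9000 and 0.28 at 18000, because the fixed overhead is spread over more elements per read.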

02-05-2016 02:07 PM


Yes, that is what I read in the documentation provided by NI. As I said previously, it helped a lot but did not eliminate the late loop iterations completely.

Since then I have made one more modification that seems to help. There was one other high-priority loop in my RT application, running on a different core. That loop also had logic that shortened its period for one iteration to help catch up on a buffer when needed. I have removed that logic and haven't had any late iterations of my main loop since, although I have only let it run for 20 minutes... we will see how it does running overnight.

To stop shortening the loop period for one iteration, I left the period alone and instead run a conditional For Loop that normally executes once but can execute twice if needed.
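The fixed-period approach can be sketched as follows: the timed loop period never changes, and an inner loop performs one chunk-sized read normally, or a second read when another whole chunk is already waiting. This Python model uses a hypothetical `run_fixed_period` helper, a simulated backlog in place of the real DMA FIFO, and an assumed 9000-element chunk.

```python
from typing import List, Tuple


def run_fixed_period(arrivals: List[int], chunk: int,
                     max_reads: int = 2) -> Tuple[List[int], int]:
    """Simulate a fixed-period loop whose inner loop reads 1..max_reads chunks.

    arrivals[i] is how many elements reach the FIFO before iteration i.
    Returns the number of reads per iteration and the leftover backlog.
    A real FIFO read with a timeout would block until data arrives; in this
    model an iteration with less than one chunk waiting simply reads nothing.
    """
    backlog = 0
    reads_per_iteration = []
    for arrived in arrivals:
        backlog += arrived
        reads = 0
        # Inner loop: normally one read, a second only if a full chunk remains.
        while reads < max_reads and backlog >= chunk:
            backlog -= chunk
            reads += 1
        reads_per_iteration.append(reads)
    return reads_per_iteration, backlog


print(run_fixed_period([9000, 18000, 9000, 27000, 9000], chunk=9000))
```

The design choice is that catching up costs extra work inside one iteration instead of a change to the loop's timing, so the scheduler always sees the same period.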

Am I onto something? Does changing the period of a timed loop at run time cause performance issues in other parts of the application, or is it just a coincidence? If anyone has any insight into this, please feel free to chime in.

Corey Rotunno