Is LabVIEW a Time Machine?

Solved! 04-10-2022 03:00 PM

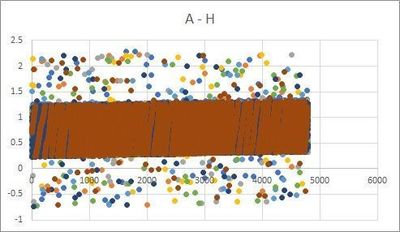

Hello Friends: LabVIEW is NOT a time machine 😃. However, when 'Get Date/Time In Seconds' is called sequentially, the returned time can actually go backwards. This is NOT a program flow error. I could attach code, but it is a LARGE project.

- Is 'High Resolution Relative Seconds VI' any better than 'Get Date/Time In Seconds'? I can forego the timestamp and just record elapsed time, perhaps in hours, as a run lasts at least 3 days.

- Using an about 1 YO, Mfg. D**l SSD Workstation PC of moderate quality with large HD for data. W10 Pro and LabVIEW 2020 64 bit. Cortana and other 'news' junk disabled. Software timing runs from the canned crystal oscillator (CCO) on the mother board and that may not be of high quality in this PC... Yeah, you see the issue...

- Used to be a way, about 18 years ago, to change the LabVIEW timing from standard Windows 3.x 36 ns to 9 ns in the LabVIEW.ini file with an added key. Does this method still exist for W10 and LabVIEW 2020? I recall I had to add that key... Might not resolve if motherboard CCO is not too 'wonky'.

- If NOT a time machine, then 'Get Date/Time In Seconds' is futzing up as shown below. Negative delta t is not possible. Seems to be the CCO, do you agree?

- Any suggestions appreciated Friends. We don't have any NI cards with a good NI timing source on this PC.

Negative delta t between subsequent reads is NOT real. In 76,672 readings overnight 0.347% of timestamps go back in time... That is bad.
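One way to put a number on this is to count how many consecutive timestamp pairs have a negative difference. The following is only a minimal Python sketch of that arithmetic, not the LabVIEW code from the project; the function name `backward_step_stats` and the sample values are made up for illustration.

```python
def backward_step_stats(timestamps):
    """Return (count, fraction) of consecutive pairs where time goes backwards."""
    # Difference between each reading and the one before it.
    deltas = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    backward = sum(1 for d in deltas if d < 0)
    fraction = backward / len(deltas) if deltas else 0.0
    return backward, fraction


# Hypothetical sample: the fourth reading is earlier than the third.
sample = [0.000, 0.010, 0.020, 0.015, 0.030]
count, fraction = backward_step_stats(sample)
print(count, f"{fraction:.1%}")
```

For scale, 0.347% of 76,672 readings works out to roughly 266 backward steps.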

Warm Regards and Thank You,

Saturn233207

AKA Lloyd Ploense

- Tags:

- labview.ini

- Timing

04-10-2022 03:26 PM

Could you please post a simplified VI to replicate the issue?

Soliton Technologies

04-10-2022 03:35 PM - edited 04-10-2022 03:37 PM

So what does the time axis represent? Milliseconds? The help for Get Date/Time In Seconds says "Timer resolution is system dependent and might be less accurate than one millisecond, depending on your platform. When you perform timing, use the Tick Count (ms) function to improve resolution." But even then, you may see jitter. If you want accuracy better than 1 ms, you may have to go with a stratum 0 time source.
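The same distinction between an adjustable wall clock and a tick-style counter exists outside LabVIEW. As a rough analogy only (this is Python's standard `time` module, not the LabVIEW functions, and the helper name `clock_summary` is made up), the sketch below asks the platform what it reports about each clock:

```python
import time


def clock_summary():
    """Collect what the platform reports about three standard Python clocks."""
    summary = {}
    for name in ("time", "monotonic", "perf_counter"):
        info = time.get_clock_info(name)
        summary[name] = {
            "resolution": info.resolution,   # seconds, as reported by the OS
            "monotonic": info.monotonic,     # True if it can never go backwards
            "adjustable": info.adjustable,   # True if the system can set it
        }
    return summary


for name, props in clock_summary().items():
    print(f"{name:13s} resolution={props['resolution']:.3e} s  "
          f"monotonic={props['monotonic']}  adjustable={props['adjustable']}")
```

The wall clock (`time`) is reported as adjustable, which is the property that allows a later reading to be smaller than an earlier one; the reported resolution values depend on the machine.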

(Mid-Level minion.)

My support system ensures that I don't look totally incompetent.

Proud to say that I've progressed beyond knowing just enough to be dangerous. I now know enough to know that I have no clue about anything at all.

Humble author of the CLAD Nugget.

04-10-2022 03:45 PM - edited 04-10-2022 03:45 PM

@Saturn233207 wrote:

[...] Software timing runs from the canned crystal oscillator (CCO) on the mother board [...]

Are you sure about that? Time is still processed by the CPU (if it is able to run Win10, anyway). You seem to be measuring time differences close to the CPU tick rate. I would not be surprised if a scheduler sometimes decided to reorder your time requests. They may even go to different CPU cores.

04-10-2022 03:59 PM

Well, as Fabiola has taught us, LabVIEW is not a "Time Machine", as she advocates that we should "Get Yourself a Time Machine" (by which, of course, she means that we should use some form of Version Control Software to manage our LabVIEW work).

LabVIEW, running on a PC running Windows, has (I believe) two main Timing "engines" -- the one that drives "Get Time in Seconds" and the Wait and Tick Count functions, which have a resolution on the order of a millisecond (usually), and the "High Resolution Timer", typically used for attempting to time shorter intervals (usually for benchmarking), and probably uses ticks of the CPU clock. This latter timer does not, as far as I know, give you "clock time" or date.

So why are you recording "negative times"? The most "reasonable" answer that I can imagine is that you are measuring the time twice, and the "second" measured time is less than the "first", suggesting time went backwards.

I don't believe this is happening -- what I'm guessing is going on is that your LabVIEW code records two Time Stamps in two different places, but in some instances, the "second" one runs before the "first", and thus the time difference is negative. How can this happen? The "Third Law of Data Flow" -- two operations ("Get Time in Seconds" and "Get Time in Seconds") that do not have a Data Path connecting them operate essentially "in parallel", so you can't tell which comes first.
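The ordering point can be illustrated in a text language with two threads, which is only a loose analogy for two unconnected nodes on a block diagram. In this sketch (all names are hypothetical), nothing orders the two reads in `parallel_delta`, so the sign of "second minus first" is not guaranteed; in `sequenced_delta` the second read cannot start before the first has finished.

```python
import threading
import time


def read_clock(results, key):
    # Each worker records a reading from a monotonic counter.
    results[key] = time.perf_counter()


def parallel_delta():
    """Two reads with no dependency between them: either may run first."""
    results = {}
    first = threading.Thread(target=read_clock, args=(results, "first"))
    second = threading.Thread(target=read_clock, args=(results, "second"))
    first.start()
    second.start()
    first.join()
    second.join()
    return results["second"] - results["first"]  # sign is not guaranteed


def sequenced_delta():
    """The second read depends on the first having completed."""
    first = time.perf_counter()
    second = time.perf_counter()
    return second - first  # never negative for a monotonic counter


print("parallel :", parallel_delta())
print("sequenced:", sequenced_delta())
```

In LabVIEW the equivalent of the sequenced version is a data wire (or the error cluster) running from the first time read to the second.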

If you can't spot where your code might be "fooling you", post it and let us puzzle it out. Be sure to post it as a VI (rather than a "picture") so we can try to run it ourselves.

Bob Schor

04-10-2022 10:53 PM

Not sure if LabVIEW uses the Windows RTC for the Get Date/Time VI, but if it does, Windows has a (terrible) control loop that runs on that time and makes the time on the PC jump when a correction is made. Of course the correction is usually in ms, so you don't see it jump unless you are comparing it to a much more stable time source. So maybe you are reading the time before and after Windows does a time correction, which makes it look like you are going back in time.

Have a pleasant day and be sure to learn Python for success and prosperity.

04-11-2022 03:09 AM - edited 04-11-2022 03:11 AM

@Jay14159265 wrote:

Not sure if LabVIEW uses the Windows RTC for the Get Date/Time VI, but if it does, Windows has a (terrible) control loop that runs on that time and makes the time on the PC jump when a correction is made. Of course the correction is usually in ms, so you don't see it jump unless you are comparing it to a much more stable time source. So maybe you are reading the time before and after Windows does a time correction, which makes it look like you are going back in time.

It depends what you consider terrible. Basically, timekeeping in Windows is based on a timer value that is initialized from the battery-backed RTC on bootup. Once Windows has booted, it no longer uses the RTC but its own internal timer value. This timer value is typically incremented by a timer tick interrupt, which does indeed run from the crystal-controlled system clock. That crystal-controlled system clock is fairly accurate, but not by a far stretch comparable to an Iridium or similar atomic clock source, as it needs to be fairly cheap and easy to manufacture.

So Windows also allows time synchronization over the network. This can be a Windows domain server or an internet time server if available. In the past this was indeed pretty nasty: when Windows discovered a discrepancy between its own time and the time server, it simply adjusted the internal clock in a single step or a very few big steps. That has changed to a more adaptive scheme in recent versions, so the time should not jump around in steps anymore, but of course that only goes so far if your Windows system for some reason got badly out of sync with the internet time.

Basically, if the difference between the received time sample and the local time is within a certain (registry-controlled) limit, the Time Service adjusts its internal clock rate so that the local time slowly converges towards the desired time reference. If the difference is too big, the local time is adjusted at once, since trying to converge to that value would take way too long to be useful. As far as I'm aware, that is not very different from what other operating systems, including Linux, do. The RTC is also regularly updated to the current Time Service value once that value has been determined to be officially synchronized to a trusted external time source.
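The slew-versus-step decision described above can be sketched as a toy model. This is not how the Windows Time Service is implemented; the function name `discipline`, the 0.5 s limit and the 500 ppm slew rate are all invented for illustration.

```python
def discipline(local, reference, max_slew_offset=0.5, slew_ppm=500.0, interval=1.0):
    """Advance a toy local clock by one interval of real time.

    Small offsets are removed gradually by running the clock slightly fast
    or slow (slew); large offsets are removed at once (step).
    Returns (new_local, new_reference, action). All values are in seconds.
    """
    offset = reference - local
    new_reference = reference + interval
    if abs(offset) > max_slew_offset:
        # Too far off: jump straight to the reference. If the local clock
        # was ahead, this jump goes BACKWARDS.
        return new_reference, new_reference, "step"
    # Slew: limit the correction per interval and never overshoot.
    max_correction = interval * slew_ppm * 1e-6
    correction = max(-max_correction, min(max_correction, offset))
    return local + interval + correction, new_reference, "slew"


# Local clock 0.3 s ahead: slewed, still advances by almost a full second.
print(discipline(100.3, 100.0))
# Local clock 5 s ahead: stepped, the new local time is earlier than the old one.
print(discipline(105.0, 100.0))
```

In this model only the step branch can make a later reading smaller than an earlier one; while slewing, the clock always moves forward, just at a slightly different rate.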

So there are basically three clock sources in Windows. The first is the Time Service, which is initialized to the RTC time on bootup and kept running from the timer tick in between synchronization moments with an authoritative time server.

The second is a timer tick that is the base for the LabVIEW ms tick counter. It is implemented with a hardware timer in the chipset, but nowadays is often actually updated from the third timer source: the high resolution timer that is built into all modern x86 CPU architectures and is derived from the clock speed of the CPU itself.

04-11-2022 10:32 AM - edited 04-11-2022 10:32 AM

@rolfk wrote: It depends what you consider terrible.

I guess I would qualify that as a non monotonic clock : )

04-11-2022 02:12 PM - edited 04-11-2022 02:14 PM

Sorry, your post does not really contain much usable information. For example, your graph has no units. Are we supposed to guess what they are?

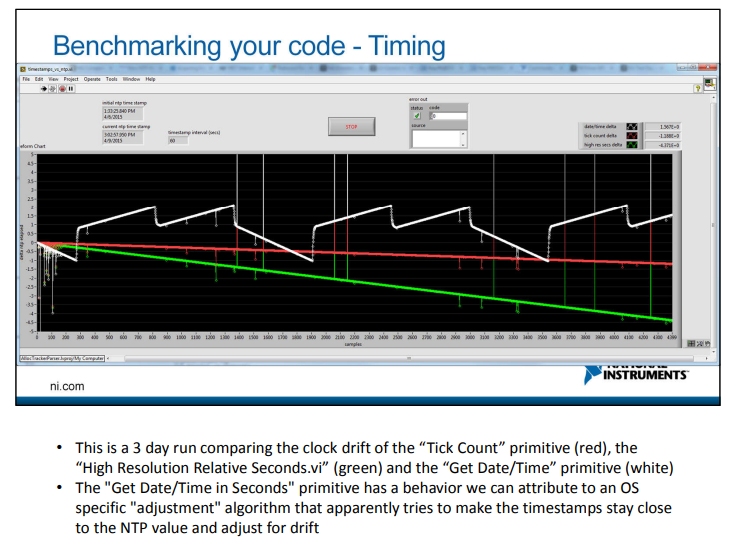

High Resolution Relative Seconds is better. A Windows machine will occasionally resynchronize to the atomic clock, so a timestamp is less accurate for measuring intervals, because the system clock tends to speed up and slow down to adapt to the precise source.
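For a multi-day run where only elapsed time matters, the idea is to take one reading of a relative, monotonic counter at the start and report differences from it. Here is a minimal Python sketch of that pattern, using `time.perf_counter` as a stand-in for a high resolution relative timer; the `ElapsedTimer` class is hypothetical and not a LabVIEW API.

```python
import time


class ElapsedTimer:
    """Report time elapsed since creation, using a relative monotonic counter."""

    def __init__(self, clock=time.perf_counter):
        # The clock is injectable so the timer can be tested with fake readings.
        self._clock = clock
        self._start = clock()

    def seconds(self):
        return self._clock() - self._start

    def hours(self):
        return self.seconds() / 3600.0


timer = ElapsedTimer()
time.sleep(0.01)
print(f"elapsed: {timer.seconds():.4f} s = {timer.hours():.8f} h")
```

Because the counter is not tied to the system date and time, clock synchronization does not affect the reported elapsed values. A single wall-clock timestamp recorded once at the start is enough to recover approximate absolute times later.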

There was an NI Week presentation (?) (or other discussion?) quite a few years ago comparing the various time sources. Can't find it at the moment.

04-11-2022 02:29 PM

@altenbach wrote:

There was a NI week presentation (?) (Or other discussion?) quite a few years ago comparing the various time sources. can't find it at the moment.

Found it! See slide #8 of Ed's part of the talk. (i.e. part I of TS9524 - Code Optimization and Benchmarking )

(I still suspect a coding error even if you don't believe it :D)