How long does a tick last for the Tick Count (ms) VI in LabVIEW?

10-03-2013 05:50 PM

Is the tick time dependent on the processor frequency? If the Tick Count (ms) VI in LabVIEW generates a tick every millisecond, then an algorithm may take less than one millisecond, right? So how can I compare a software implementation (on a processor running at 3.5 GHz) and a hardware implementation (on an FPGA running at 40 MHz) under the same conditions?

10-03-2013 06:49 PM

@vitrion wrote:

Is the tick time dependent on the processor frequency? If the Tick Count (ms) VI in LabVIEW generates a tick every millisecond, then an algorithm may take less than one millisecond, right? So how can I compare a software implementation (on a processor running at 3.5 GHz) and a hardware implementation (on an FPGA running at 40 MHz) under the same conditions?

I don't think that is right. One tick is one increment of the operating system clock.

So what happens is that LabVIEW requests the value of the next tick of the operating system clock. The operating system advances the clock one tick and services the request. LabVIEW translates the answer into milliseconds.

I'd never thought much about how the Tick Count (ms) works - until you started asking all these questions. Thanks for making me search around and increase my knowledge! 🙂

10-04-2013 09:03 AM

The Tick Count works very differently on a desktop OS and on an FPGA. On a desktop OS, the tick count has a resolution of one millisecond. It is very easy to run a FOR loop repeatedly and get the same value back from the Tick Count primitive, since the FOR loop runs far faster than 1 kHz. However, it is also very easy to get jumps of more than a millisecond, since desktop OSes are multitasking. Typically, this occurs when the LabVIEW process is suspended to allow another process (You have mail!) to run. At that point, you get a jump roughly equal to the OS time slice (the 55 ms mentioned earlier for Win95, user-settable on NT-based systems).

On the FPGA, the Tick Count is configurable for milliseconds, microseconds, or actual clock ticks. The resolution in ticks depends on how fast you are running the FPGA. There are no multitasking or time-slice issues on an FPGA, so it behaves very predictably.
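To put the FPGA tick resolution in numbers, here is a small Python sketch of the arithmetic (Python is used only as an illustration; it is not LabVIEW code). It assumes the 40 MHz clock quoted in the original question, and the name `ticks_to_seconds` is hypothetical.

```python
def ticks_to_seconds(ticks: int, clock_hz: float) -> float:
    """Convert a raw tick count to seconds for a given clock rate."""
    return ticks / clock_hz


# Assumption: 40 MHz, the FPGA clock rate quoted in the question.
FPGA_CLOCK_HZ = 40e6

# Duration of a single FPGA tick, in nanoseconds.
tick_period_ns = 1e9 / FPGA_CLOCK_HZ

# Number of FPGA ticks that fit in one desktop millisecond tick.
ticks_per_ms = FPGA_CLOCK_HZ / 1e3

print(f"one FPGA tick = {tick_period_ns} ns")
print(f"FPGA ticks per millisecond = {ticks_per_ms}")
```

At that clock rate one tick lasts 25 ns, so a single millisecond on the desktop side spans 40,000 FPGA ticks. Converting both measurements to seconds before comparing them keeps the units consistent.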

If you want higher resolution on desktop systems, you can use <vi.lib>\Utility\High Resolution Relative Seconds.vi. You will still be subject to the vagaries of multitasking time slicing, but as long as you stay within the same time slice, you can get relatively decent data. Run the measurement multiple times and take the lowest value. It will be the one that did not get interrupted.
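The "run multiple times and take the lowest value" idea can be sketched in Python as follows. This is an analog, not the LabVIEW VI: it uses `time.perf_counter_ns()` as the high-resolution timer, and `best_elapsed_ns` and `workload` are hypothetical names chosen for illustration.

```python
import time


def best_elapsed_ns(func, repeats=20):
    """Time func() several times and return the smallest elapsed time in ns.

    The minimum is the run least disturbed by other processes.
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    best = None
    for _ in range(repeats):
        start = time.perf_counter_ns()
        func()
        elapsed = time.perf_counter_ns() - start
        if best is None or elapsed < best:
            best = elapsed
    return best


def workload():
    """Placeholder algorithm under test (an assumption for this sketch)."""
    return sum(i * i for i in range(10_000))


if __name__ == "__main__":
    print(f"best of 20 runs: {best_elapsed_ns(workload)} ns")
```

Taking the minimum rather than the mean discards runs that were stretched by a context switch, which is the point of the advice above.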

10-04-2013 10:31 AM

The OS is playing tricks here.

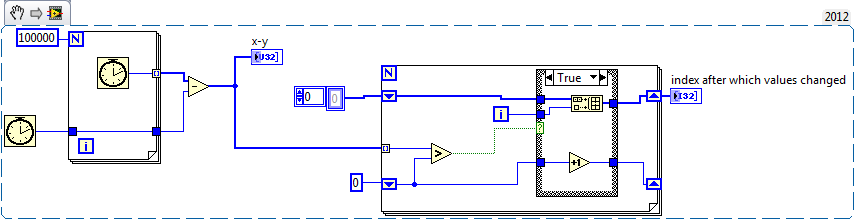

I just did a simple experiment.

I tried to measure the time slices with the Tick Count function (inspired by DF's idea that a FOR loop will run faster than 1 kHz).

The second FOR loop just checks when the time slices occurred.

Run the VI as many times as you want: the time slices increase linearly (1, 2, 3, ...), so the Tick Count resolution is 1 ms.

But the iteration at which each one occurs changes on every run. Is that OS dependent?

Using LV 2012 on Win7.
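For readers without the VI, a rough Python analog of this experiment might look like the sketch below. It is not a translation of the block diagram in the image: `tick_count_ms` stands in for the Tick Count (ms) primitive using `time.monotonic_ns()`, whose actual resolution depends on the platform, and `find_tick_changes` is a hypothetical helper name.

```python
import time


def tick_count_ms():
    """Stand-in for a millisecond tick counter (an assumption of this sketch)."""
    return time.monotonic_ns() // 1_000_000


def find_tick_changes(read_tick, iterations):
    """Poll read_tick() in a tight loop and record each change.

    Returns a list of (iteration_index, jump_in_ticks) pairs.
    """
    changes = []
    previous = read_tick()
    for i in range(iterations):
        current = read_tick()
        if current != previous:
            changes.append((i, current - previous))
            previous = current
    return changes


if __name__ == "__main__":
    for iteration, jump in find_tick_changes(tick_count_ms, 1_000_000):
        print(f"iteration {iteration}: tick advanced by {jump} ms")
```

On a lightly loaded machine one would expect mostly small jumps, with the iteration index differing from run to run because the loop is not synchronized to the clock; larger jumps would suggest the process was suspended.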
