Flatten Unflatten vs TypeCast - Speed Test

02-16-2018 11:43 AM

Are these average times or shortest times? What's the variability?

The time is the total time for 10000 loops, each converting 1000 random "generic"s into "Specific"s, divided by the total number of conversions (10,000,000). So yes, that's an average.

The variability is quite small; for example, the 227 figure ranges from 226 to 228 over 5 trials.
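The averaging described above can be sketched in Python as a rough analogue of the LabVIEW benchmark (the thread's VIs are graphical, so this is not the attached code). It assumes a hypothetical `convert` function standing in for the generic-to-specific conversion, times a fixed number of loops over a batch of random inputs, divides by the total number of conversions, and repeats for several trials so the spread can be reported alongside the mean.

```python
import random
import time

def convert(code: int) -> int:
    """Hypothetical stand-in for the generic-to-specific conversion."""
    return code & 0x7F

def time_per_conversion(loops: int, batch: int, trials: int) -> tuple[float, float, float]:
    """Return (mean, min, max) seconds per conversion across the trials."""
    # Random inputs mirror the thread's randomizer; in LabVIEW they keep the
    # optimizer from treating the whole computation as a constant.
    inputs = [random.randrange(256) for _ in range(batch)]
    per_trial = []
    for _ in range(trials):
        start = time.perf_counter()
        for _ in range(loops):
            for code in inputs:
                convert(code)
        elapsed = time.perf_counter() - start
        # Total time divided by the number of conversions gives the per-conversion average.
        per_trial.append(elapsed / (loops * batch))
    return sum(per_trial) / trials, min(per_trial), max(per_trial)

if __name__ == "__main__":
    mean, lo, hi = time_per_conversion(loops=100, batch=1000, trials=5)
    print(f"mean {mean * 1e9:.1f} ns, range {lo * 1e9:.1f}-{hi * 1e9:.1f} ns")
```

Reporting the min and max across trials is what lets a figure like "226-228 in 5 trials" be stated next to the average.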

The VIs are attached above, if you care to play yourself.

Once they are inlined, the priority is irrelevant (... if you are talking about "subroutine priority". Or are you using it in the generic term for a subVI?)

I habitually make such things INLINE SUBROUTINE, without regard to whether SUBROUTINE priority is actually needed. In any case, it applies equally to both versions and doesn't bear on the question.

Hard to tell....

Indeed.

Culverson Software - Elegant software that is a pleasure to use.

Culverson.com

Blog for (mostly LabVIEW) programmers: Tips And Tricks

02-16-2018 12:03 PM

Ah thanks. I somehow missed the attachment. 😉

02-16-2018 12:37 PM

How does Variant to data compare?

I never thought of trying that but it can't be in the same league, speed-wise.

I did some work last year taking variants apart, and I know that if you encode an enum into a variant, the variant contains a copy of all the value strings from the enum.

I actually can manufacture a variant by manipulating those strings.

I would wager that the variant to data function would complain about the enum mismatch, and waste a whole lot of time doing it.
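The wager above can be illustrated with a toy Python model. Everything here is hypothetical (`EnumType`, `Variant`, `to_variant`, `variant_to_data` are illustrative names, not LabVIEW internals), and it does not describe what LabVIEW's Variant to Data actually does. It only shows why a conversion that carries the full type descriptor, including every enum value string, has to compare those strings before it can hand back the value, whereas a type cast just reinterprets bytes.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class EnumType:
    """Hypothetical model of an enum type descriptor: value names plus storage size."""
    labels: tuple[str, ...]
    size_bits: int = 8

@dataclass(frozen=True)
class Variant:
    """Hypothetical model: the variant carries the whole type descriptor next to the value."""
    type_desc: EnumType
    value: int

def to_variant(value: int, enum_type: EnumType) -> Variant:
    return Variant(enum_type, value)

def variant_to_data(v: Variant, target: EnumType) -> int:
    # Comparing descriptors walks every label string, so the cost grows with
    # the number of enum items, unlike a plain byte reinterpretation.
    if v.type_desc != target:
        raise TypeError("enum type mismatch")
    return v.value
```

In this model, converting a "generic" variant into a "Specific" enum with more items fails the descriptor comparison, which is the mismatch the post expects Variant to Data to spend time on.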

02-16-2018 03:00 PM - edited 02-16-2018 03:03 PM

Just some other random observations:

If you remove the error wire in the "flatten" version, you gain about 10% in speed.

(no big deal, still interesting ;))

Do you typically only do single scalar conversions? It seems doing the same directly on arrays is significantly faster (10-20x) for the same amount of work, again with the typecast slightly slower (20us vs 15us).

There is probably a size threshold: for short enough arrays, N scalar conversions might still win. Not tested.
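The scalar-versus-array distinction can be sketched in Python with the standard `struct` module: one call per element versus one call for the whole buffer. This is only an analogue of the LabVIEW case (the speedups quoted above were measured in LabVIEW, not here), and the function names are hypothetical.

```python
import struct
import timeit

def convert_scalars(raw: bytes) -> list[int]:
    """One conversion call per element (the scalar pattern)."""
    return [struct.unpack(">b", raw[i:i + 1])[0] for i in range(len(raw))]

def convert_array(raw: bytes) -> list[int]:
    """One conversion call for the whole buffer (the array pattern)."""
    # 'b' is a signed 8-bit item, so byte order does not matter here.
    return list(struct.unpack(f">{len(raw)}b", raw))

if __name__ == "__main__":
    raw = bytes(range(256)) * 4
    for fn in (convert_scalars, convert_array):
        t = timeit.timeit(lambda: fn(raw), number=1000)
        print(f"{fn.__name__}: {t / 1000 * 1e6:.1f} us per 1024-element buffer")
```

Both functions return the same values; the difference is how much per-call overhead is paid, which is also why a crossover point for very short arrays is plausible.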

Note: One thing I changed: I replaced the "tickers" with high-resolution relative seconds, which gives results in plain units (use SI notation for the time format, e.g. %.4ps). Now you get measurable results with N=1 on the outer loop (or with the outer loop removed), and debugging can be disabled. (With N>1 and debugging disabled, the loop gets folded and you get falsely fast readings, of course.)
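Reporting elapsed seconds with an SI prefix can be sketched in Python with `time.perf_counter` and a small formatter. The `format_si` helper is hypothetical and only loosely imitates LabVIEW's `%p` format code; in particular, treating `digits` as digits after the decimal point is an assumption, and LabVIEW's exact rounding rules are not reproduced.

```python
import math
import time

_PREFIXES = {-12: "p", -9: "n", -6: "u", -3: "m", 0: "", 3: "k"}

def format_si(value: float, digits: int = 4) -> str:
    """Format a value with an SI prefix (assumption: `digits` = digits after the point)."""
    if value == 0:
        return f"{0:.{digits}f}"
    # Pick the engineering exponent (a multiple of 3) for the value's magnitude.
    exp3 = 3 * math.floor(math.log10(abs(value)) / 3)
    exp3 = max(min(exp3, 3), -12)  # clamp to the prefixes defined above
    return f"{value / 10 ** exp3:.{digits}f}{_PREFIXES[exp3]}"

if __name__ == "__main__":
    start = time.perf_counter()  # high-resolution relative seconds
    sum(range(1000))
    print(f"{format_si(time.perf_counter() - start)}s")
```

For example, a per-conversion time of 2.27e-7 s formats as `227.0000n`, which reads directly as nanoseconds.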

02-16-2018 03:10 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Do you typically only do single scalar conversions? It seems doing the same directly on arrays is significantly faster

Yes. The only purpose of the arrays here is to provide enough of a workload to time correctly. The purpose of the randomizer is to keep the LV optimizer from calling the whole thing a constant.

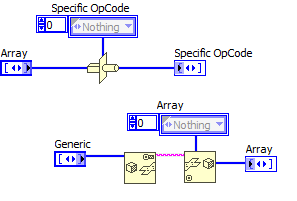

My purpose was to investigate the feasibility of a general-purpose TCP connection manager that defines a series of "standard" opcodes (connect, disconnect, etc.), with each instance having its own specific opcodes outside that range.

So each call to XMIT or RECEIVE would involve a translation from generic to specific or vice versa, and I wanted to see the CPU burden of doing that.

The Convert to I8 method is the best, and it is even faster than I indicated once you account for the loop autoindexing overhead.
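The generic/specific opcode scheme can be sketched in Python with `IntEnum`, translating through the underlying integer in the spirit of the Convert to I8 approach. The opcode names and values are hypothetical; the sketch assumes the standard opcodes occupy the low numbers and each instance continues numbering after them. One difference to note: Python raises `ValueError` for an out-of-range value, whereas LabVIEW coerces numbers into enums.

```python
from enum import IntEnum

class Generic(IntEnum):
    """Hypothetical 'standard' opcodes shared by every connection."""
    CONNECT = 0
    DISCONNECT = 1

class Specific(IntEnum):
    """Hypothetical instance opcodes; the standard ones keep their numeric values."""
    CONNECT = 0
    DISCONNECT = 1
    START_TEST = 2
    STOP_TEST = 3

def to_specific(op: Generic) -> Specific:
    # Translate via the underlying integer, the analogue of Convert to I8.
    return Specific(int(op))

def to_generic(op: Specific) -> Generic:
    # Raises ValueError for instance opcodes outside the standard range.
    return Generic(int(op))
```

On XMIT, a generic opcode would pass through `to_specific`; on RECEIVE, a specific opcode in the standard range would pass through `to_generic`.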

The purpose of this particular QUESTION was to better understand the rules in general as to why a seemingly simple operation (TypeCast) is slower than a seemingly more complicated one (Flatten + UnFlatten).

I still don't know the answer to that.

02-16-2018 04:47 PM

@CoastalMaineBird wrote:

The purpose of this particular QUESTION was to better understand the rules in general as to why a seemingly simple operation (TypeCast) is slower than a seemingly more complicated one (Flatten + UnFlatten).

I still don't know the answer to that.

It seems unlikely you'll get one definitive answer without someone from the NI compiler team looking into it and explaining exactly what's going on. It also wouldn't be surprising if this changed from version to version. One likely factor is that Flatten/Unflatten behaves differently from Type Cast when the types don't match. For example, if your output enum were 16 bits with the same 8-bit input, Unflatten would generate an error (because the input string wouldn't be long enough), whereas Type Cast would put the 8 input bits into the upper 8 bits of the output and then coerce the result to the closest value in the enum. Even though the types are known at compile time, it's possible that the error-checking code doesn't get optimized out in one case (or gets optimized to a different degree), resulting in the timing difference.
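The 8-bit-into-16-bit example can be modeled in Python with `struct`. This is a hypothetical analogue of the described behavior, not LabVIEW's implementation: the three-item enum is invented, and the coercion step is modeled as clamping to the last item, which equals "closest value" only for a contiguous 0..N-1 enum.

```python
import struct

LABELS = ("Idle", "Run", "Stop")  # hypothetical 16-bit enum with values 0..2

def unflatten_u16(data: bytes) -> int:
    """Unflatten-style: the input must contain a complete 16-bit value."""
    if len(data) < 2:
        raise ValueError("input string too short for a 16-bit value")
    return struct.unpack(">H", data[:2])[0]

def typecast_u16(data: bytes) -> int:
    """Type Cast-style: zero-pad a short input at the end (big-endian),
    so a single input byte lands in the upper 8 bits."""
    padded = data[:2].ljust(2, b"\x00")
    return struct.unpack(">H", padded)[0]

def coerce_to_enum(value: int) -> int:
    """Clamp to the last valid item (the closest value for a contiguous 0..N-1 enum)."""
    return min(value, len(LABELS) - 1)
```

With the single input byte `0x01`, the Unflatten-style path raises an error, while the Type Cast-style path yields `0x0100` (256), which then coerces to the last enum item.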

02-18-2018 05:22 PM - edited 02-18-2018 05:24 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

A LabVIEW Typecast is a lot more than a C typecast. In C it is basically a reinterpretation of the memory buffer, but in LabVIEW there is always a copy, a check that the types on both sides match in size, and, if necessary, padding with 0 bytes (as when converting an int8 into an int16 or int32) or truncation (as when typecasting an odd number of bytes into an array of int16, for instance). In addition, it always converts to big-endian byte order, while Flatten/Unflatten lets you choose among 3 different byte-order modes. Typecast was created in LabVIEW 2.0 and likely hasn't been revisited except to add support for new datatypes, while the Flatten/Unflatten functions got a complete rework in LabVIEW 8 to support the different byte-order modes and to remove the type descriptor array.

So it is likely that some optimization for simple datatypes was added at that time, which Typecast doesn't benefit from.

All the things above about the Typecast may seem irrelevant here, since you typecast a single-byte value into another single-byte value, so size mismatch, byte order and so on do not come into play. But I'm pretty sure the checks for them are still in there, because the underlying function is written generically and executes all those checks and the copy regardless of the datatypes on both sides.
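The generic Typecast behavior described in this post can be modeled in Python with `struct`: a copy, truncation to whole elements, and a fixed big-endian byte order, contrasted with an Unflatten-style read where the byte order is a parameter. This is a hypothetical sketch of the described semantics, not LabVIEW's code; the function names and the `BYTE_ORDERS` table are illustrative, and the Unflatten-style function assumes the data holds only whole elements with no length prefix.

```python
import struct

def typecast_to_i16_array(data: bytes) -> list[int]:
    """Type Cast-style: always big-endian; a trailing odd byte is dropped."""
    usable = len(data) - len(data) % 2  # truncate to whole 16-bit elements
    # Slicing copies the buffer, mirroring the copy the post says always happens.
    return list(struct.unpack(f">{usable // 2}h", data[:usable]))

# Flatten/Unflatten-style: the caller picks one of three byte orders.
BYTE_ORDERS = {"big-endian": ">", "native": "=", "little-endian": "<"}

def unflatten_i16_array(data: bytes, order: str = "big-endian") -> list[int]:
    """Assumes `data` holds only whole 16-bit elements (no length prefix)."""
    return list(struct.unpack(f"{BYTE_ORDERS[order]}{len(data) // 2}h", data))
```

Note that `typecast_to_i16_array` runs its truncation arithmetic and copy even for inputs that need neither, which is the kind of always-executed generic work the post suspects.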
