Export Unicode (UTF-8) text to clipboard

12-01-2018 06:04 AM - edited 12-01-2018 06:06 AM

Is it possible to programmatically write multibyte (Unicode) text to the Windows clipboard? The standard "Write to Clipboard" method expects ASCII text, so as soon as there is a zero byte in the string it is interpreted as the terminating character. A Unicode-encoded ASCII string therefore ends up as only its first character in the clipboard (since a zero follows every standard ASCII character when encoded as UTF-8). Is there a way to "properly" write multibyte strings containing zeros to the clipboard? In the Windows clipboard API the clipboard format can be set to Unicode (instead of standard text), which eliminates the zero-termination problem, but how can I use these Windows API clipboard functions in LabVIEW? I know LabVIEW's GUI uses them, because you can correctly copy Unicode text displayed in string indicators with Ctrl+C, but there is no programmatic access to it.

12-01-2018 01:39 PM - edited 12-01-2018 01:46 PM

Drop a VI Server Reference, left-click it and select "This Application".

Right-click the reference and select CREATE --> METHOD FOR APPLICATION CLASS --> CLIPBOARD --> WRITE TO CLIPBOARD.

0xDEAD

EDIT: Something strange is going on: when you paste in LabVIEW it's fine, but in other apps it's still truncated at the 0x00.

Not sure if this is a LabVIEW issue or not.

12-02-2018 03:43 AM - edited 12-02-2018 03:47 AM

@Novgorod wrote:

[...] so a unicode-encoded ASCII string would only contain the first character in the clipboard (since a zero follows every standard ASCII character when encoded as UTF-8). [...]

I think the problem is this statement: (since a zero follows every standard ASCII character when encoded as UTF-8). From doing some reading about UTF-8, ASCII characters are represented as a single byte each, so treating 0x00 as a terminator is valid.
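A quick way to check this is to look at the raw bytes. The Python sketch below (Python is used only for illustration; the thread itself is about LabVIEW) encodes the same ASCII string both ways: UTF-8 gives one byte per character and no zeros, while UTF-16LE, the encoding Windows uses for Unicode text, puts a 0x00 after every ASCII character.

```python
# Compare how the same ASCII text is laid out in UTF-8 and in UTF-16LE.
text = "Hello"

utf8_bytes = text.encode("utf-8")       # one byte per ASCII character
utf16_bytes = text.encode("utf-16-le")  # two bytes per character, high byte 0x00 for ASCII

print(utf8_bytes.hex(" "))   # 48 65 6c 6c 6f
print(utf16_bytes.hex(" "))  # 48 00 65 00 6c 00 6c 00 6f 00

# Only the UTF-16LE form contains embedded zero bytes.
print(b"\x00" in utf8_bytes)   # False
print(b"\x00" in utf16_bytes)  # True
```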

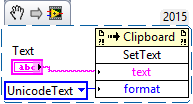

If you want to set the clipboard using the Windows API, you can drop a .NET INVOKE NODE.

Select System.Windows.Forms -> Clipboard -> SetText(String text, TextDataFormat format).

This will have the string input and an Enum which can select TEXT/UNICODETEXT/RTF/HTML/CSV.

You will need to set the VI that contains this Invoke Node to run in the user interface thread (VI Properties/Execution), otherwise you will get a thread exception error, because the .NET clipboard can only be accessed from a single-threaded apartment (STA) thread.

Though in your case, I'm not sure this is going to help, for the reason I stated at the top.

0xDEAD

12-02-2018 09:09 AM - edited 12-02-2018 09:20 AM

I think the problem is actually a little different from what you describe. UTF-8 encoded text does not contain embedded 0 bytes (other than for the character U+0000 itself); that is one of the main reasons UTF-8 exists. It is meant to encode Unicode strings as an 8-bit encoding without any NULL bytes in them.
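To back up the statement about embedded zeros, the following brute-force check (a Python sketch, not LabVIEW code) encodes every Unicode scalar value as UTF-8 and collects those whose bytes include 0x00. Only U+0000 does, because every byte of a multibyte UTF-8 sequence has its high bit set.

```python
def utf8_has_nul(code_point: int) -> bool:
    """Return True if the UTF-8 encoding of this code point contains a 0x00 byte."""
    return b"\x00" in chr(code_point).encode("utf-8")


# Scan all Unicode scalar values; lone surrogates (U+D800..U+DFFF) are skipped
# because they cannot be encoded as UTF-8 on their own.
offenders = [
    cp
    for cp in range(0x110000)
    if not 0xD800 <= cp <= 0xDFFF and utf8_has_nul(cp)
]

print([hex(cp) for cp in offenders])  # ['0x0']: only U+0000 itself
```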

What you are most likely doing is flattening a UTF-16LE string (the Windows standard Unicode encoding) into an 8-bit byte stream and then trying to post that to the clipboard. That must fail, because LabVIEW's clipboard function tells the clipboard that it added an ANSI-encoded byte string (in the default codepage Windows is configured for). LabVIEW does not use NULL bytes internally to mark the end of a string; it stores the length in the string handle itself, so it has no problem with the embedded NULL bytes in the flattened string and simply copies the full string to the clipboard. When you paste it back into LabVIEW, the entire buffer is copied into a LabVIEW string and everything is exactly as it was: a flattened UTF-16LE string, so unflattening it to a 16-bit array and interpreting that as UTF-16 works fine.
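The round trip inside LabVIEW works because the string length is stored explicitly rather than implied by a terminator. The sketch below models that idea in Python with a 32-bit length prefix. `to_length_prefixed` and `from_length_prefixed` are hypothetical illustrative names, not LabVIEW functions, and the layout is an assumption rather than LabVIEW's exact in-memory format.

```python
import struct


def to_length_prefixed(data: bytes) -> bytes:
    """Hypothetical counted string: a 32-bit little-endian length, then the raw bytes."""
    return struct.pack("<i", len(data)) + data


def from_length_prefixed(buf: bytes) -> bytes:
    """Read the length first, then exactly that many bytes; NUL bytes are just data."""
    (length,) = struct.unpack_from("<i", buf, 0)
    return buf[4:4 + length]


text = "Grüße"
flattened = text.encode("utf-16-le")    # UTF-16LE flattened to a byte stream
stored = to_length_prefixed(flattened)  # what a length-counted string keeps
restored = from_length_prefixed(stored)

print(restored == flattened)         # True: the embedded 0x00 bytes survive
print(restored.decode("utf-16-le"))  # Grüße
```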

Other applications, however, see that the clipboard format is CF_TEXT and will almost certainly use a standard C string copy routine to copy the string from the clipboard into their own buffer, which of course stops at the first NULL byte. So even though the Windows handle in the clipboard records the real length of the data buffer, most applications do not copy that buffer in full, because the C runtime functions they use stop at the first NULL byte.
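The truncation other applications show can be reproduced with Python's `ctypes`: `create_string_buffer` holds the complete flattened UTF-16LE data, but reading it back through `.value` uses C string semantics and stops at the first NUL byte, which is roughly what a `strcpy()`/`strlen()`-based reader sees.

```python
import ctypes

flattened = "Hello".encode("utf-16-le")  # b"H\x00e\x00l\x00l\x00o\x00"

# create_string_buffer copies all 10 bytes and appends one terminating NUL.
buf = ctypes.create_string_buffer(flattened)
print(len(buf.raw))  # 11: the full data plus the terminator is really there

# .value reads the char array as a C string, i.e. up to the first NUL byte.
print(buf.value)     # b'H'
```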

So what can you do in LabVIEW to remedy this?

1) Do not flatten the UTF-16 string to copy it to the clipboard. That way you are lying to the clipboard about the actual data in it, and other applications are bound to run into problems. It would be better to CONVERT it to a UTF-8 encoded string (which is something entirely different from flattening it to an 8-bit byte stream). But that could still be troublesome as soon as the string contains any non-ASCII characters, since the clipboard format is set to CF_TEXT, which tells other applications to interpret the data as ANSI 8-bit text in the currently configured Windows codepage rather than as UTF-8.
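The remaining risk with option 1 is the mismatch between what the bytes are (UTF-8) and what CF_TEXT announces (the ANSI codepage). This Python sketch assumes a system whose ANSI codepage is Windows-1252 and decodes the UTF-8 bytes the way such a reader would: there are no NUL bytes any more, but every non-ASCII character comes out garbled, even one such as ü that does exist in Windows-1252.

```python
text = "Grüße"

# Option 1: convert (not flatten) the text to UTF-8.
utf8_bytes = text.encode("utf-8")
print(b"\x00" in utf8_bytes)  # False: C-string readers now see the whole string

# CF_TEXT tells readers "this is ANSI"; assume the codepage is Windows-1252.
as_seen_by_ansi_reader = utf8_bytes.decode("cp1252")
print(as_seen_by_ansi_reader)  # GrÃ¼ÃŸe (mojibake for each non-ASCII character)

# A reader that knows the bytes are UTF-8 would get the original back.
print(utf8_bytes.decode("utf-8"))  # Grüße
```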

2) LabVIEW's clipboard function provides no way to post anything other than a plain ANSI string to the clipboard, nor does it allow you to specify a different format.

3) The Windows clipboard API allows you to specify the CF_UNICODETEXT format, but then the data must be in UTF-16LE format and nothing else. And when you access it through .Net, you run into the problem that LabVIEW's .Net interface automatically converts the LabVIEW ANSI-encoded string to a .Net String (which is always UTF-16LE encoded). If you flatten your UTF-16 string to a LabVIEW string and then pass it to the .Net method, you get a UTF-16 encoded version of the flattened UTF-16 string, which is of course wrong too, since it is double encoded.
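The double encoding can be modelled in a few lines. This Python sketch assumes that the LabVIEW-to-.Net conversion maps each byte of the LabVIEW string to one character using the ANSI codepage (Windows-1252 here); that is a simplified model for illustration, not a description of LabVIEW internals. The result is a string twice as long as the original, with the NUL bytes turned into real NUL characters.

```python
text = "Hi"
flattened = text.encode("utf-16-le")  # b"H\x00i\x00"

# Assumed model of the LabVIEW -> .Net conversion: every byte of the LabVIEW
# string becomes one character of the new (UTF-16) .Net string.
double_encoded = flattened.decode("cp1252")

print(len(text), len(double_encoded))  # 2 4
print(repr(double_encoded))            # 'H\x00i\x00'

# What CF_UNICODETEXT actually expects: the UTF-16LE text itself, NUL-terminated.
expected_payload = text.encode("utf-16-le") + b"\x00\x00"
```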

The only method that has some chance of working would be the .Net SetDataObject() method, but constructing the right String object from the 16-bit array form of a LabVIEW UTF-16 string is going to be a bit of a challenge too.

4) The most "simple" way would be to call the Win32 API SetClipboardData() directly. That way you have full control over what is passed to the clipboard and which format is announced to other applications. The problem is that this API requires a Windows data handle (similar in idea to a LabVIEW data handle, but not compatible with it). So you first have to create such a handle, copy the data into it, and then pass it to Windows through SetClipboardData(). That's why I put "simple" in quotes above.
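For reference, option 4 looks roughly like this when written against the Win32 API from Python via `ctypes` (an illustration of the call sequence, not LabVIEW code; in LabVIEW the same functions would be called through Call Library Function Nodes). The sequence is: allocate a movable block with `GlobalAlloc(GMEM_MOVEABLE, ...)`, lock it, copy in NUL-terminated UTF-16LE text, unlock it, then `OpenClipboard`, `EmptyClipboard`, `SetClipboardData(CF_UNICODETEXT, ...)` and `CloseClipboard`. The helper names and the optional `hwnd` parameter are my own; the function only runs on Windows and raises `OSError` elsewhere.

```python
import ctypes
import sys
from typing import Optional

CF_UNICODETEXT = 13     # clipboard format for NUL-terminated UTF-16LE text
GMEM_MOVEABLE = 0x0002  # SetClipboardData expects a movable global memory block


def unicode_clipboard_payload(text: str) -> bytes:
    """UTF-16LE bytes plus a two-byte NUL terminator, as CF_UNICODETEXT expects."""
    return text.encode("utf-16-le") + b"\x00\x00"


def set_clipboard_unicode(text: str, hwnd: Optional[int] = None) -> None:
    """Put text on the Windows clipboard as CF_UNICODETEXT (Windows only).

    Microsoft's OpenClipboard documentation notes that with a NULL owner,
    EmptyClipboard sets the owner to NULL, which can make SetClipboardData
    fail, so pass a real window handle as hwnd if you have one.
    """
    if sys.platform != "win32":
        raise OSError("the Win32 clipboard API is only available on Windows")

    from ctypes import wintypes
    user32 = ctypes.WinDLL("user32", use_last_error=True)
    kernel32 = ctypes.WinDLL("kernel32", use_last_error=True)

    # Declare types so 64-bit handles and pointers are not truncated to int.
    kernel32.GlobalAlloc.argtypes = [wintypes.UINT, ctypes.c_size_t]
    kernel32.GlobalAlloc.restype = wintypes.HGLOBAL
    kernel32.GlobalLock.argtypes = [wintypes.HGLOBAL]
    kernel32.GlobalLock.restype = wintypes.LPVOID
    kernel32.GlobalUnlock.argtypes = [wintypes.HGLOBAL]
    kernel32.GlobalFree.argtypes = [wintypes.HGLOBAL]
    kernel32.GlobalFree.restype = wintypes.HGLOBAL
    user32.OpenClipboard.argtypes = [wintypes.HWND]
    user32.SetClipboardData.argtypes = [wintypes.UINT, wintypes.HANDLE]
    user32.SetClipboardData.restype = wintypes.HANDLE

    payload = unicode_clipboard_payload(text)

    # 1) Create the Windows data handle and copy the UTF-16LE text into it.
    handle = kernel32.GlobalAlloc(GMEM_MOVEABLE, len(payload))
    if not handle:
        raise ctypes.WinError(ctypes.get_last_error())
    ptr = kernel32.GlobalLock(handle)
    if not ptr:
        kernel32.GlobalFree(handle)
        raise ctypes.WinError(ctypes.get_last_error())
    ctypes.memmove(ptr, payload, len(payload))
    kernel32.GlobalUnlock(handle)

    # 2) Hand the handle to the clipboard, announcing it as CF_UNICODETEXT.
    if not user32.OpenClipboard(hwnd):
        kernel32.GlobalFree(handle)
        raise ctypes.WinError(ctypes.get_last_error())
    try:
        user32.EmptyClipboard()
        if not user32.SetClipboardData(CF_UNICODETEXT, handle):
            # On failure we still own the memory and must free it ourselves.
            kernel32.GlobalFree(handle)
            raise ctypes.WinError(ctypes.get_last_error())
        # On success the system owns the handle; it must not be freed here.
    finally:
        user32.CloseClipboard()
```

The byte layout is the part that matters for the original question: unlike CF_TEXT, a CF_UNICODETEXT reader expects two bytes per UTF-16 code unit, so the 0x00 high bytes of ASCII characters are data and only the final two-byte NUL ends the text.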

12-03-2018 09:53 AM

It would help if you explained how you got your Unicode string in the first place and how you think you created a UTF-8 encoded string from it. Depending on the answers, I could probably show you a way to do what you really want to do.