Error when converting binary string

06-27-2019 05:07 AM

Hi there,

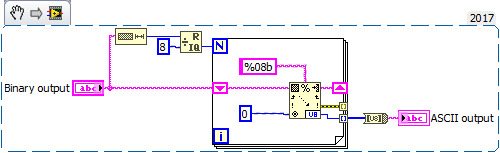

In the attached screenshot, the VI works normally with a manually entered binary string and converts it to ASCII text without any issue. However, when I use the myDAQ to receive data from a transmitter, I get the error shown. How can I fix this so that the ASCII text is output correctly?

Regards,

Christopher

06-27-2019 05:11 AM - edited 06-27-2019 05:13 AM

Hi lad,

it really would help to make the display style indicator visible in ALL string controls, indicators and constants!

(Or to attach your VI with some useful data set to default…)

The error message is quite clear: what have you tried to solve the issue so far?

(I guess there is a wild mix of "binary string" and "ASCII string" in your VI. Display style really matters for strings…)
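To make the "binary string" vs. "ASCII string" distinction concrete, here is a small Python sketch (an illustration only, not LabVIEW code and not derived from the VI in this thread). A manually typed "01111110" is eight ASCII characters with byte values 0x30/0x31, whereas a string built from 0/1 numbers holds bytes 0x00/0x01; in hex display the two are clearly different. The hypothetical helper `to_bit_list` accepts either form and raises an error on anything else, which mirrors the kind of failure a conversion step can hit when it receives the form it does not expect.

```python
from __future__ import annotations


def to_bit_list(data: bytes) -> list[int]:
    """Normalize a bit stream to a list of 0/1 integers.

    Each byte may be ASCII text ('0' = 0x30, '1' = 0x31) or a raw
    binary value (0x00 or 0x01). Any other byte raises ValueError.
    """
    bits = []
    for pos, byte in enumerate(data):
        if byte in (0x30, 0x31):      # ASCII characters '0' / '1'
            bits.append(byte - 0x30)
        elif byte in (0x00, 0x01):    # raw binary values 0 / 1
            bits.append(byte)
        else:
            raise ValueError(f"unexpected byte 0x{byte:02X} at position {pos}")
    return bits


ascii_form = b"01111110"                    # '~' typed in as text
raw_form = bytes([0, 1, 1, 1, 1, 1, 1, 0])  # same bits stored as byte values

print(ascii_form.hex(" "))  # 30 31 31 31 31 31 31 30
print(raw_form.hex(" "))    # 00 01 01 01 01 01 01 00
print(to_bit_list(ascii_form) == to_bit_list(raw_form))  # True
```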

06-27-2019 05:34 AM

Hi there,

I've built a system that acts as a receiver connected to a myDAQ transmitter. It receives a repeating binary string of 0s and 1s, for example:

`011111101100011000010110010011101001011011001110000001000111111011000110000101100100111010010110110011100000010001111110`

The start byte, which is always detected first, is `~`, whose binary representation is 01111110. After it, a Match Pattern function reads the data that follows the start byte, and that part works fine. However, once the start byte has been found, the conversion does not work. I have attached an image of the VI.
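The decoding step described above can be sketched in Python (an illustration independent of the LabVIEW VI; `decode_after_start` and its `lsb_first` parameter are hypothetical names). It finds the first `01111110` start byte and turns every following complete 8-bit group into a character. One thing worth checking against the VI: the example string only decodes to the word "chris" mentioned later in the thread if each byte is read least-significant bit first. For instance, `11000110` reversed is `01100011` (0x63, `c`), while reading it as written gives 0xC6, which is not an ASCII character. A converter that assumes the other bit order would produce garbage even when the start byte is found correctly.

```python
START = "01111110"  # '~' (0x7E); the pattern reads the same in either bit order


def decode_after_start(bits: str, lsb_first: bool = True) -> str:
    """Decode a string of '0'/'1' characters into text.

    Decoding begins right after the first START byte. Each following
    complete 8-bit group becomes one character; a trailing partial
    group is ignored.
    """
    begin = bits.find(START)
    if begin < 0:
        raise ValueError("start byte 01111110 not found")
    payload = bits[begin + len(START):]
    chars = []
    for i in range(0, len(payload) - 7, 8):
        group = payload[i:i + 8]
        if lsb_first:
            group = group[::-1]  # first bit received is the least significant
        chars.append(chr(int(group, 2)))
    return "".join(chars)


# The example bit string from the post, grouped into bytes for readability.
example = (
    "01111110 11000110 00010110 01001110 10010110 11001110 00000100 01111110 "
    "11000110 00010110 01001110 10010110 11001110 00000100 01111110"
).replace(" ", "")

print(repr(decode_after_start(example)))                          # 'chris ~chris ~'
print(hex(ord(decode_after_start(example, lsb_first=False)[0])))  # 0xc6
```

The `00000100` byte decodes to a space (0x20), so under this reading each message between two start bytes is "chris " with a trailing space.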

06-27-2019 05:39 AM

06-27-2019 05:41 AM

Oh okay, sorry. I think I've narrowed the problem down to a small section of the VI, but I have no idea what is wrong with it. It's attached. Every time I run it I get an error, even though it should be receiving the input it expects.

06-27-2019 05:44 AM

06-27-2019 05:46 AM

It is attached.

06-27-2019 05:53 AM - edited 06-27-2019 05:56 AM

06-27-2019 06:13 AM

Thank you very much. However, when I put this into my program, which reads 1000 samples of whatever word is transmitted (in this case, "chris"), it doesn't read the word properly. The simplified VI you created works perfectly for a manually entered string of the correct length, but with 1000 samples from my transmitter I just get garbage. The attached screenshot shows the output I receive.
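One possible explanation (not confirmed anywhere in the thread) is that the 1000 raw samples are not one sample per bit, and that the acquisition window starts and ends in the middle of a transmission. If so, the samples first need to be reduced to one bit per bit period, and only complete messages between two start bytes should be decoded. The Python sketch below shows that idea on simulated data. The voltage threshold, `samples_per_bit`, the LSB-first byte order (inferred from the example bit string earlier in the thread), the 0 V / 5 V levels, and the helper names `samples_to_bits` and `extract_messages` are all assumptions, not the poster's actual setup.

```python
from __future__ import annotations

START = "01111110"  # '~' (0x7E), assumed to act as start/separator byte


def samples_to_bits(samples: list[float], samples_per_bit: int,
                    threshold: float = 2.5) -> str:
    """Reduce oversampled voltages to one '0'/'1' character per bit period.

    Assumes a known, constant integer number of samples per bit and no
    clock drift. The bit grid is aligned to the first level change, and
    the sample nearest the middle of each bit period is used.
    """
    levels = [1 if v > threshold else 0 for v in samples]
    edge = next((i for i in range(1, len(levels))
                 if levels[i] != levels[i - 1]), None)
    if edge is None:
        return ""  # no transitions, so the bit timing cannot be recovered
    first = edge % samples_per_bit  # earliest full bit boundary in the window
    middle = samples_per_bit // 2
    return "".join(str(levels[i])
                   for i in range(first + middle, len(levels), samples_per_bit))


def extract_messages(bits: str) -> list[str]:
    """Return only the messages that are enclosed by two START bytes."""
    begin = bits.find(START)
    if begin < 0:
        return []
    messages, current = [], []
    pos = begin + len(START)
    while pos + 8 <= len(bits):
        byte = int(bits[pos:pos + 8][::-1], 2)  # assumed LSB-first byte order
        pos += 8
        if byte == 0x7E:  # next '~': the message in progress is complete
            messages.append("".join(current))
            current = []
        else:
            current.append(chr(byte))
    return messages  # a trailing message with no closing '~' is dropped


# Simulated capture (hypothetical values): 4 samples per bit, the window
# starts 3 samples into a frame and is 1000 samples long.
def lsb_first(ch: str) -> str:
    return format(ord(ch), "08b")[::-1]


frame = START + "".join(lsb_first(c) for c in "chris ")
signal = [5.0 if b == "1" else 0.0 for b in frame * 6 for _ in range(4)]
window = signal[3:1003]

print(extract_messages(samples_to_bits(window, samples_per_bit=4)))
# ['chris ', 'chris ', 'chris ']
```

Under these assumptions a complete message needs a start byte, the data bytes and the next start byte all inside the window. With a 56-bit frame ("~" plus "chris "), that can take about 120 bit periods in the worst case, so the number of samples per bit decides whether 1000 samples are enough.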

06-27-2019 08:03 AM - edited 06-27-2019 08:05 AM

Hi lad,

next time, please make the "display style" indicator visible for ALL string controls, indicators and constants!

(Right-click the string -> Visible Items -> Display Style. For each of them!)

Where does that "Binary output" come from?