Does LabVIEW timeout and wait function time accuracy depend on computer configuration?

04-11-2021 10:09 PM

One of the modules I wrote in my own program does this:

1. First, it waits on a notification with the timeout set to 450 ms. Normally no notification arrives, so the wait ends by timing out after 450 ms.

2. After the wait ends, it performs some operations that take less than 1 ms.

3. It measures the total time of steps 1 and 2 with a time counter and adds 2 ms.

4. The software design requires the whole process to take 498 ms, so the total from step 3 is subtracted from 498 and the Wait (ms) function is used to wait for the remainder.
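The four steps above can be sketched in text form as follows. This is only a rough Python analogue, not the LabVIEW block diagram from the post: `threading.Event` stands in for the notifier, `time.sleep` for the Wait (ms) function, and the names `remaining_wait_ms` and `run_cycle` are made up for illustration. The post does not say what the extra 2 ms covers, so it is treated here as a fixed margin.

```python
import threading
import time

TARGET_MS = 498.0   # required duration of the whole cycle (from the post)
TIMEOUT_MS = 450.0  # notification timeout (from the post)
MARGIN_MS = 2.0     # fixed allowance added in step 3 (purpose not stated in the post)


def remaining_wait_ms(elapsed_ms, target_ms=TARGET_MS, margin_ms=MARGIN_MS):
    """Step 4: time still to wait so the cycle reaches target_ms (never negative)."""
    return max(0.0, target_ms - (elapsed_ms + margin_ms))


def run_cycle(notification, timeout_ms=TIMEOUT_MS, target_ms=TARGET_MS):
    """Run one cycle; return (notification wait, total cycle time) in ms."""
    start = time.perf_counter()
    notification.wait(timeout_ms / 1000.0)  # step 1: normally ends by timeout
    waited_ms = (time.perf_counter() - start) * 1000.0
    # step 2: the short (< 1 ms) operations would go here
    elapsed_ms = (time.perf_counter() - start) * 1000.0  # step 3
    time.sleep(remaining_wait_ms(elapsed_ms, target_ms) / 1000.0)  # step 4
    total_ms = (time.perf_counter() - start) * 1000.0
    return waited_ms, total_ms
```

Calling `run_cycle(threading.Event())` runs one cycle with the values from the post. Any overshoot of the final wait goes straight into the total, because nothing after step 4 can compensate for it.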

To test the timing accuracy, I wrapped both the whole module and the notification wait in a sequence structure, calculated each duration as the difference between two time counter readings, and displayed the results on waveform charts. It turns out that the timing is a bit off. The notification timeout is set to 450 ms, but the actual wait can overshoot by more than 10 ms, and the wait function also shows an error of about 10 ms. The results also differ between the two computers.

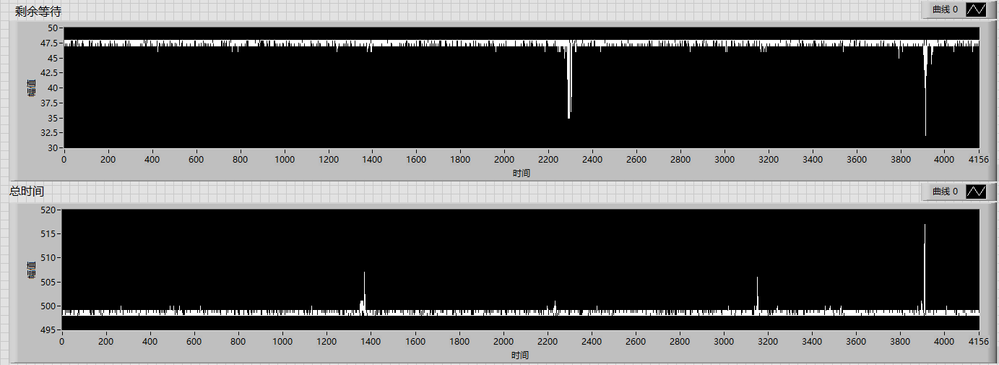

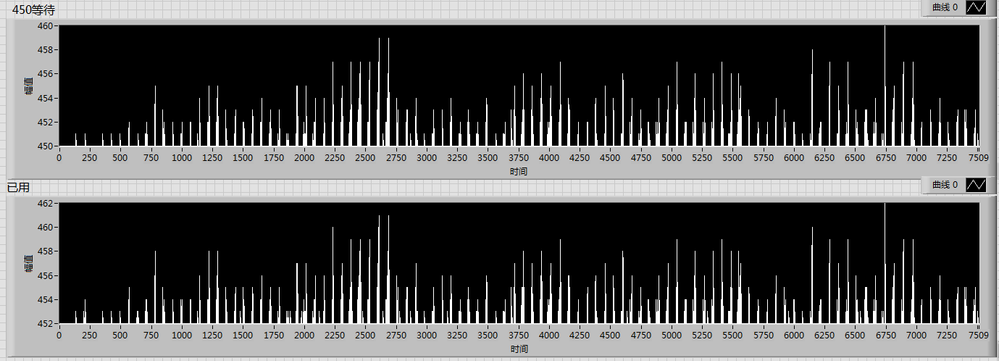

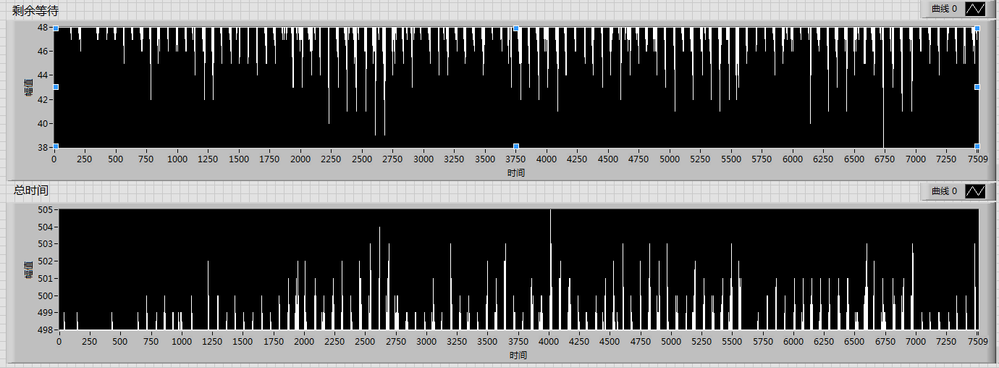

The first waveform chart shows the time spent waiting for the notification: the timeout is set to 450 ms, but the highest value is 466 ms. The third waveform chart is the duration of the final wait function, which is dynamically adjusted according to the second chart so that the whole process takes 498 ms. The fourth waveform chart shows the duration of the whole process. In theory, the total should be 498 ms after the wait function is adjusted dynamically, but the final error seems a little too large. In addition, this is the same program running on another computer, and the results are different again.
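The measurement described in this post (timestamp before, timestamp after, take the difference) can be reproduced outside LabVIEW to see how much a software wait overshoots on a given machine. The sketch below is a hypothetical Python equivalent, not the poster's code: `measure_overshoot_ms` and `summarize` are illustrative names, and `time.sleep` stands in for the LabVIEW wait.

```python
import time


def measure_overshoot_ms(requested_ms, repeats=20):
    """Return how much longer than requested each sleep actually took, in ms."""
    overshoots = []
    for _ in range(repeats):
        start = time.perf_counter()
        time.sleep(requested_ms / 1000.0)
        actual_ms = (time.perf_counter() - start) * 1000.0
        overshoots.append(actual_ms - requested_ms)
    return overshoots


def summarize(values):
    """Minimum, mean and maximum of a list of numbers."""
    return min(values), sum(values) / len(values), max(values)
```

For example, `summarize(measure_overshoot_ms(450))` gives the spread for a 450 ms wait. The numbers depend on the operating system, its load and the machine, so two computers should be expected to give different results.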

04-11-2021 11:38 PM - edited 04-12-2021 10:14 AM

Your description is way too ambiguous to really tell what you are doing. Can you boil it down to a small example that still shows all points and attach it here? Thanks!

Are you running this under Windows?

04-12-2021 07:53 AM

This has all the looks of the well-known-around-here limitations of software timing under Windows. Search for such terms and you'll probably find hundreds of prior threads with complaints and disappointment about timing limits and inconsistencies under Windows.

The solutions boil down to:

1. Leave Windows and program under RT or perhaps FPGA. This can require a significant investment in additional learning curve and/or hardware.

2. Accept it and figure out how to live with it. This is very viable for many, though not *all* apps. Thus far it isn't clear why you want timing perfection for your app, nor whether there's a viable workaround in the absence of such perfection.
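One common way of "living with it", when only the long-run period matters and individual cycles may be a few milliseconds late, is to schedule each cycle against an absolute deadline instead of chaining relative waits. This is an illustration under that assumption, not something proposed in this thread; the names `deadlines` and `run_periodic` are hypothetical. It keeps a late wake-up from shifting every later cycle, but it does not remove the jitter of each individual wake-up.

```python
import time


def deadlines(start_s, period_s, count):
    """Absolute deadlines start + k * period for k = 1..count."""
    return [start_s + k * period_s for k in range(1, count + 1)]


def run_periodic(task, period_s, count):
    """Call task() once per period; return each call's lateness in seconds."""
    start = time.perf_counter()
    lateness = []
    for deadline in deadlines(start, period_s, count):
        delay = deadline - time.perf_counter()
        if delay > 0:
            time.sleep(delay)  # may still wake up late; the OS decides
        lateness.append(time.perf_counter() - deadline)
        task()
    return lateness
```

With `run_periodic(task, 0.498, 100)`, a cycle that starts 10 ms late shortens the following wait rather than delaying all remaining cycles.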

-Kevin P

04-12-2021 05:21 PM

This sounds to me like you're running into limitations imposed by the operating system. I would switch to a real-time system or live with that "offness" in accuracy.

You will find it nigh impossible to achieve sub-millisecond precision under Windows.
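When comparing machines, it can help to check what the timing clocks themselves report before blaming the wait functions. The sketch below uses Python's standard `time.get_clock_info` and is an aside, not part of the original program; `clock_report` is a hypothetical helper name. Note that the reported clock resolution only describes how finely time can be read. It says nothing about how punctually the scheduler wakes a waiting thread, which is the limit discussed here.

```python
import time


def clock_report(names=("perf_counter", "monotonic", "time")):
    """Map each clock name to (implementation, resolution in seconds)."""
    report = {}
    for name in names:
        info = time.get_clock_info(name)
        report[name] = (info.implementation, info.resolution)
    return report
```

Printing `clock_report()` on each computer shows whether the two machines even expose the same clock implementation and resolution.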

Alternatively, if your target is RT and you're experiencing those effects, then that sounds like it would be another problem.

04-14-2021 02:30 AM

@MrNNNICE wrote:

One of the modules I wrote in my own program does this:

1. First, it waits on a notification with the timeout set to 450 ms. Normally no notification arrives, so the wait ends by timing out after 450 ms.

2. After the wait ends, it performs some operations that take less than 1 ms.

3. It measures the total time of steps 1 and 2 with a time counter and adds 2 ms.

4. The software design requires the whole process to take 498 ms, so the total from step 3 is subtracted from 498 and the Wait (ms) function is used to wait for the remainder.

If this is on Windows, forget it! Anything shorter than 10 ms accuracy under Windows is simply a wet dream, even if you run it on the same computer. If you add multiple computers to the mix it is not even a fairy tale. Even 100ms is not doable under Windows if you need hard real-time operation. If it is acceptable to sometimes miss the mark you can usually get away with it, but nothing more.

If you need such timing accuracy, you need to do the time-critical part either on a real-time system (sub-ms accuracy is doable if you are very careful and know what you are doing) or fully in hardware (where you can go down to sub-µs accuracy). The hardware can be either a plug-in card in your PC that handles the entire time-critical part of your system, such as a DAQ card, or a dedicated FPGA device if you need customizable functionality.