- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Custom subarrays

09-24-2021 11:26 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Hi,

I need to do windowing on big arrays (4-D with millions of elements) which is very expensive.

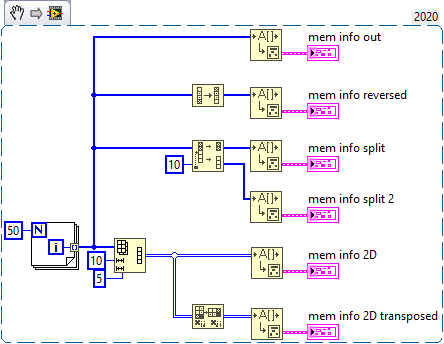

When we do simple operations on 1D or 2D arrays such as reverse or transpose, LabVIEW doesn't copy any data, instead it creates a structure with the information needed to read or reconstruct the array.

Rolf Kalbermatter said that this information is stored in the wire Type description on lavag.

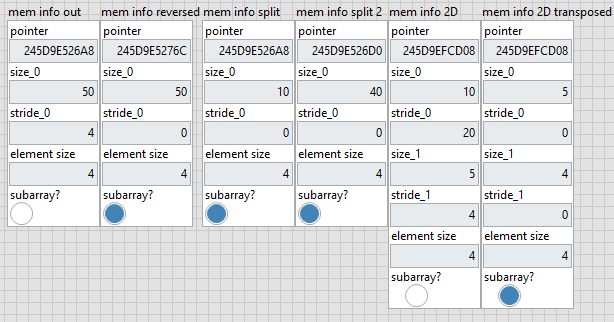

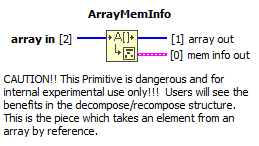

We can read this information with the vi ArrayMemInfo.

It gives the pointer to the first element, the size and stride of each dimension and the element size. The stride is the number of bytes to skip to get the next element in a given dimension.

Modifying this information would allow me to do efficient windowing and much more.

From the answer I got on lava, it is theoretically possible but there is no documention on it.

Do you have any idea on how to change them ?

09-24-2021 12:01 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

I'll have a look at the lava thread later to see what you definition of "windowing" is. 😄

As the help on these functions shows, they are dangerous, so thread lightly. For example inserting an "always copy" function after the reverse operation will change the result (i.e. it is no longer a "subarray").

Can you explain a little but more what happens with the output of the windowing? What's the further processing where these structures are needed? What's the deeper purpose of all this?

Maybe there is a much simpler solution.

09-25-2021 10:25 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Have you investigated Data Value Reference routines? I think they may be intended for something like the situation you describe.

Bob Schor

09-25-2021 10:53 AM - edited 09-25-2021 11:13 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

OK, I looked at your Lava post and your main problem is the highly unreasonable decision to generate that gigantic 4D output that has so much redundant information (Asymptotically for large inputs, each element occurs 9 times !!!!)! Typically that output is not very useful and you could probably place the needed processing right inside the innermost loop.

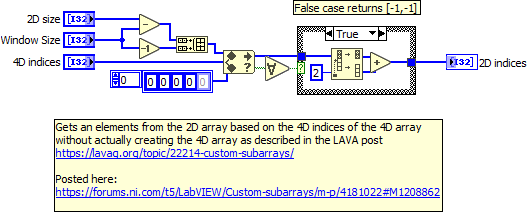

Alternatively, any particular "slice" can be extracted on demand from the original array by doing some simple integer math on indices. No need to copy the original array. Basically for any given set of 4D indices, you can calculate a 2D index pointing into the original data from first principles. That won't be any slower than what a subarray transform would do. All you need to create is a inlined subVI that takes four I32 inputs and generates two I32 outputs. (That's exactly the same as what LabVIEW does with subarrays, e.g. swap 2D indices instead of transposing the data, calculate the 1D index from the end (based on I and N) instead of reversing the array, etc.)

We really need significantly more information to offer advice to do whatever you need to do in the context of the entire program. "Millions of elements" is typically peanuts for a modern computer. Your use of the term "expensive" makes me believe that your problems are actually elsewhere.

09-25-2021 01:11 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

@altenbach wrote:

Basically for any given set of 4D indices, you can calculate a 2D index pointing into the original data from first principles..

Here's a quick draft how that could look like:

09-27-2021 03:55 AM - edited 09-27-2021 03:58 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

I realize I did not give you enough information.

I am implementing convolution layers of neural networks.

These layers multiply each window with a filter and sums the result.

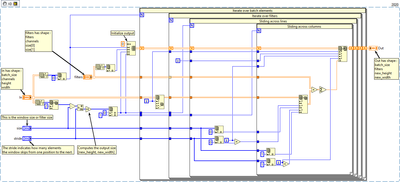

Here is a naive implementation of a convolution.

This is really slow. One way to make it faster is to move the multiplication and summation out of the loop and do it later.

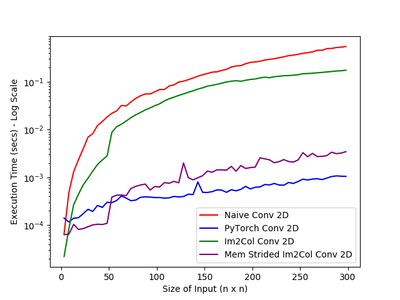

Instead, you flatten each window into a 1D array and get a big matrix with "batch_size*new_height*new_width" lines and "channels" columns.

Then you flatten the filters into a matrix of "channels" lines and "filters" columns and you do a matrix multiplication.

BLAS (Basic Linear Algebra Subprograms) is so well optimized that (as altenbach pointed out) having so much redundant information is worth it.

The function that flattens the windows is called im2col (or im2row in my case) and makes convolutions around 4 times faster.

Now there is still room for improvement. As altenbach said,

@altenbach a écrit :

Basically for any given set of 4D indices, you can calculate a 2D index pointing into the original data from first principles.

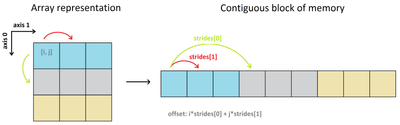

So this is how it is done in Numpy, PyTorch and other librairies. Arrays (or Tensors) also have a shape and strides like in LabVIEW and there is a function to change them to create a "view" of the same array without any data being copied.

By changing the shape and strides, you only change how the data is viewed as the array is in one contiguous block of memory.

The adress of the element at index i,j is pointer + i*strides[0] + j*strides[1].

Obviously there is still a copy of data when you call BLAS but I thought that given the new shape and strides, LabVIEW would be faster than my im2row function.

This is why my original question was about creating subarrays.

You can find more details here.

To illustrate the gain in time, here is a plot from the linked article.

Thank you for your answers.

09-27-2021 04:10 AM - edited 09-27-2021 04:10 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

@Bob_Schor a écrit :

Have you investigated Data Value Reference routines? I think they may be intended for something like the situation you describe.

Bob Schor

I did but I don't think I can use them here.

09-27-2021 10:04 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

@altenbach wrote:

@altenbach wrote:

Basically for any given set of 4D indices, you can calculate a 2D index pointing into the original data from first principles..

Here's a quick draft how that could look like:

Of course you can equally well create a subVI that returns any given 3x3 window (or any other size), given two indices and a direction. That's exactly what subarrays do internally do (doing simple integer math instead of creating a new data structure).

I have not looked at your links in detail yet, but I am sure that you never need to create these humungous redundant data structures at all, ever! Getting any subarray from the original 2D array structure on demand is only a small cost compared to the convolution operations you'll apply to it.

09-27-2021 10:19 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

@hugoch wrote:

This is really slow.

Yes, it is slow because it shuffles a lot of data around, not because of the operations.

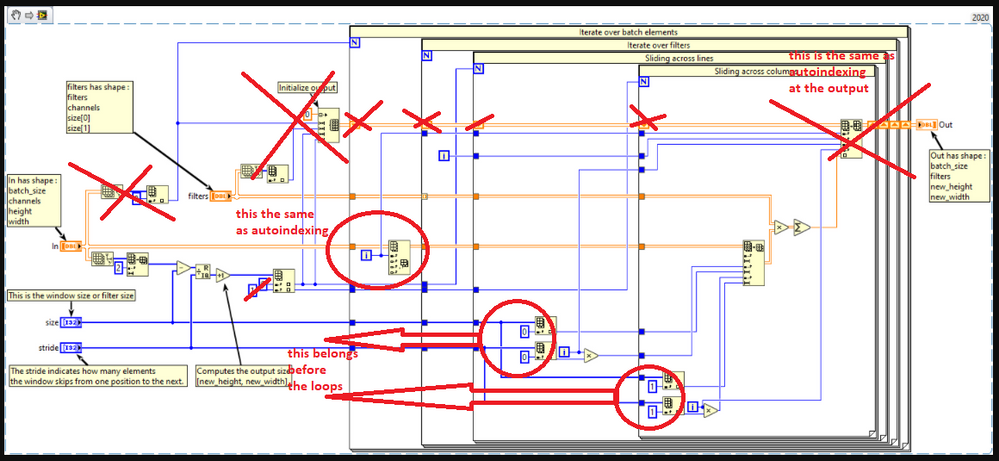

While the code can be cleaned up significantly (see e.g. the picture below, but that won't really make a difference in speed but much easier to read, maintain, and debug).

Shouldn't the filter array size depend on the blue inputs?

09-28-2021 02:41 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

@altenbach a écrit :

I have not looked at your links in detail yet, but I am sure that you never need to create these humungous redundant data structures at all, ever! Getting any subarray from the original 2D array structure on demand is only a small cost compared to the convolution operations you'll apply to it.

I really need to create the redundant data because BLAS is faster than making the operation in the inner loop and because the convolution is done on all the input data. As far as I know, this is not something I can escape and is a key part in making convolutions faster....

Yes, the blue input size is the same as the last 2 dimension size of filters. Each window has the shape as the filters.