Converting from a 16 bit integer to a 12 bit integer

06-27-2018 10:17 AM

Hello,

I need to convert a 16 bit integer from a slider on the front panel into a 12 bit integer. The DAC I will be writing this data to requires a 12 bit voltage value followed by 4 trailing zeros (i.e. a 16 bit word). Is this possible?

Thanks!

06-27-2018 10:29 AM

Since you want trailing zeros, just use Logical Shift to shift the value left by 4 places.
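
For readers outside LabVIEW, here is a minimal Python sketch of what a left shift by 4 places does to a 12-bit value. The function name `to_dac_word` is hypothetical, and the mask to 16 bits only mimics a U16 wire, where bits shifted past bit 15 are discarded.

```python
def to_dac_word(value12: int) -> int:
    """Place a 12-bit value in the upper 12 bits of a 16-bit word (4 trailing zeros)."""
    # Shift left by 4 places; the low 4 bits become zeros.
    # Masking with 0xFFFF keeps the result within 16 bits, like a U16.
    return (value12 << 4) & 0xFFFF

word = to_dac_word(0xABC)
print(hex(word))             # 0xabc0
print(format(word, "016b"))  # 1010101111000000
```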

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

06-27-2018 11:13 AM

LabVIEW does not have 12 bit integers, so apparently you want 16 bits, with only 12 bits significant and aligned the way you need. Tim already solved that for you.

Now we also need to adapt the slider control. First, you need to change the representation correctly (probably U16). Next, you need to set the "Data Entry..." properties correctly: coerce to a valid range appropriate for 12 bits. Make sure to read the documentation regarding byte order (LabVIEW flattens and type casts data as big endian, but your instrument might expect a different order).
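
Coercing the slider's range amounts to clamping the value. A minimal Python sketch of the same rule, assuming an unsigned 12-bit DAC code (0 to 4095); the helper name `coerce_12bit` is hypothetical.

```python
MAX_12BIT = (1 << 12) - 1  # 4095, the largest unsigned 12-bit value

def coerce_12bit(value: int) -> int:
    """Clamp a slider value into the valid unsigned 12-bit range 0..4095."""
    return max(0, min(value, MAX_12BIT))

print(coerce_12bit(5000))  # 4095
print(coerce_12bit(-3))    # 0
print(coerce_12bit(2048))  # 2048
```

Without the coercion, an out-of-range value such as 5000 would lose its top bit in the 4-place shift within a 16-bit word and reach the DAC as 904.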

06-27-2018 11:21 AM

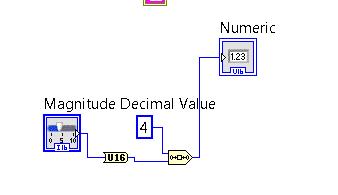

Thank you crossrulz and altenbach. I have implemented both of those things and I am getting the output I wanted. However, it only appears the way I want when I wire it to an indicator set to display the U16 as a 16 digit binary number with leading zeros. If I don't wire the output of Logical Shift to that indicator, the data isn't transferred in the 16 digit binary form with leading zeros. Is there a way around this? I've inserted a picture of my code as well.

06-27-2018 11:42 AM

@lmcohen2 wrote:

However, it is only appearing as I want it to when I use an indicator and manipulate it to display U16 as a binary 16 digit number with leading zeros.

The data is always 16 bits. It is just a question of whether or not you are displaying the data in a useful manner.

@lmcohen2 wrote:

If I don't wire the output from logical shift to the indicator, the data isn't transferred in U16 binary 16 digit with leading zeros form. Is there a way to get around this?

How are you verifying the data? This sentence makes no sense to me. And your image does not show where your data is supposed to be going. Again, your integer is 16 bits, period. It is just a matter of how you have the data being displayed.
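
To illustrate that the display format and the data are separate things, here is a short Python sketch that prints one 16-bit value three ways. The value 0xABC0 is an arbitrary example, not taken from the thread.

```python
word = 0xABC0  # the same 16-bit value throughout

# Different displays of one value; the stored bits never change.
print(word)                  # 43968
print(format(word, "016b"))  # 1010101111000000
print(format(word, "#06x"))  # 0xabc0
```

Parsing any of these displays back gives the identical number, which is the point: the trailing zeros are in the data whether or not an indicator shows them.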

Also, just change your slider to a U16 data type instead of using an I16 and then converting the data type.

06-27-2018 11:43 AM

06-27-2018 11:49 AM

I am just confused, since the data needs to be sent to a microcontroller in exactly the form the indicator displays it (16 bits with trailing zeros). If it doesn't have the trailing zeros, the microcontroller will not understand the message. Am I just overthinking this?

06-27-2018 12:21 PM - edited 06-27-2018 12:24 PM

How are you communicating with the controller, and in what form does it expect the data (U16? Flattened binary string? Formatted string consisting of the characters 0 and 1? Etc.)? Do you have a driver subVI?
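
The candidate forms differ in what actually goes over the wire. A minimal Python sketch, using a hypothetical example value, showing that a flattened binary string of a U16 is 2 raw bytes, while a formatted string of 0 and 1 characters is 16 bytes of ASCII text.

```python
import struct

word = 0xABC0  # arbitrary example value

# 1. The number itself (what a U16 wire carries).
as_number = word

# 2. Flattened binary: two raw bytes, most significant byte first (big endian).
flattened = struct.pack(">H", word)  # b'\xab\xc0'

# 3. Formatted text: sixteen ASCII characters '0' and '1'.
formatted = format(word, "016b").encode("ascii")

print(len(flattened), len(formatted))  # 2 16
```

A device that expects two raw bytes would misread the 16-character text form, and vice versa, which is why the expected form matters more than how an indicator displays the value.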

06-27-2018 12:54 PM

I am using an Aardvark adapter to communicate over SPI. There is an Aardvark subVI for SPI writes, and it requires the data as an array of unsigned bytes (U8). So I am concerned that the data I am trying to write will be misinterpreted and result in errors.

06-27-2018 01:05 PM

So you need to cast your U16 to an array of two U8s. Simple as that! (Again, be careful with byte order.)

What does the documentation say exactly?
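
A minimal Python sketch of that cast, assuming the DAC expects the most significant byte first (common for SPI DACs, but an assumption here; check the datasheet) and that the list order is the order the bytes go out on the bus. The helper name `u16_to_bytes` is hypothetical. In LabVIEW, Type Cast to a U8 array or Split Number would give the same high and low bytes.

```python
def u16_to_bytes(word: int, big_endian: bool = True):
    """Split a 16-bit word into two unsigned bytes (U8 values)."""
    high = (word >> 8) & 0xFF  # most significant byte
    low = word & 0xFF          # least significant byte
    return [high, low] if big_endian else [low, high]

word = (0xABC << 4) & 0xFFFF  # 12-bit code 0xABC with 4 trailing zeros
print([hex(b) for b in u16_to_bytes(word)])                    # ['0xab', '0xc0']
print([hex(b) for b in u16_to_bytes(word, big_endian=False)])  # ['0xc0', '0xab']
```

If the device turns out to want least significant byte first, only the byte order flips; the 4 trailing zeros stay in the low nibble of the low byte either way.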