Call Library Node Questions: proper way to provide buffer to DLL and why output string is NOT equal to provided buffer size?

03-03-2023 03:25 PM

I have some questions that while they have not prevented me from making operational software, I can't be sure that there isn't some sort of issue (possibly with memory) that may end up becoming a problem in some unforeseen corner case or after a sufficiently long uptime. I want to know the correct way of doing things.

I cannot provide actual documents or VIs for a variety of reasons, but I believe my description and images should be enough to get informed answers from you all.

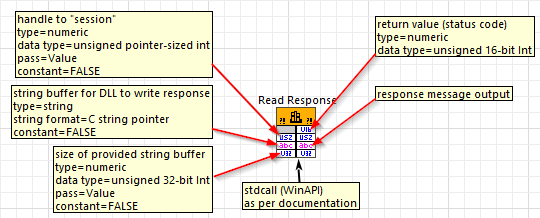

So, I have this DLL that I use to communicate with a machine. The manufacturer provided me with documentation; I'd say it is on the good side, not perfect, but I have seen far, very far, worse than this. Basically I send string commands, and when the machine has a response ready it alerts me, and then I must call a "Read Response" method of the DLL to retrieve the response string. Response lengths are quite variable, depending largely on which of my requests it is responding to. The Read Response method call (stdcall WinAPI, according to the documentation) takes a string buffer (I assume this is "giving" a certain memory space to the DLL), an integer designating the size of the buffer (not sure why this is necessary), and a handle for the connection, which all the other methods take as well.

Like I said earlier, the response messages vary in length. If a particular message is too large for the buffer that I provide, I basically get the first part of the message up to the size of my buffer. I then need to make subsequent calls to retrieve more of the message. In general, I can be 99.999% sure I have everything and do NOT need to make another call to the method, when the received message size is LESS than the size of the buffer I provided. The 0.001% corner case is if the message size is perfectly divisible by the size of my buffer BUT I can always call the Read Response method one extra time, receiving a NO DATA status return code, and thus know for sure I got everything.
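The retrieval pattern described above can be sketched in Python with `ctypes`. This is only an illustration under stated assumptions: `FakeDevice`, `read_response`, and the `OK` / `NO_DATA` codes are hypothetical stand-ins for the vendor DLL, which is not shown in this thread. The stand-in is assumed to write at most `size - 1` characters plus a terminating zero byte per call.

```python
import ctypes

OK = 0        # hypothetical status code: data was written to the buffer
NO_DATA = 1   # hypothetical status code: nothing left to read


class FakeDevice:
    """Hypothetical stand-in for the vendor DLL; holds one pending response."""

    def __init__(self, response: bytes):
        self._pending = response

    def read_response(self, buf, size: int) -> int:
        """Copy up to size-1 bytes plus a zero terminator into buf."""
        if not self._pending:
            return NO_DATA
        chunk = self._pending[: size - 1]
        self._pending = self._pending[len(chunk):]
        ctypes.memmove(buf, chunk + b"\x00", len(chunk) + 1)
        return OK


def read_all(device, size: int = 16) -> bytes:
    """Call read_response until it reports NO_DATA and join the chunks."""
    if size < 2:
        raise ValueError("buffer needs room for one character and the terminator")
    buf = ctypes.create_string_buffer(size)  # caller-owned buffer, reused per call
    parts = []
    while device.read_response(buf, size) == OK:
        parts.append(buf.value)  # bytes up to the first zero byte
    return b"".join(parts)
```

Looping until the NO DATA status, instead of stopping at the first short chunk, costs one extra call but also covers the case where the message length is an exact multiple of the chunk size.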

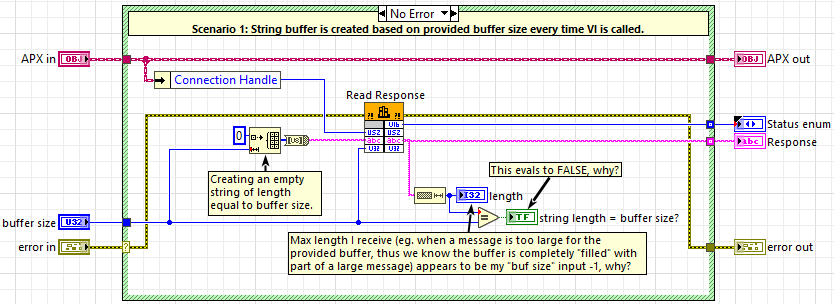

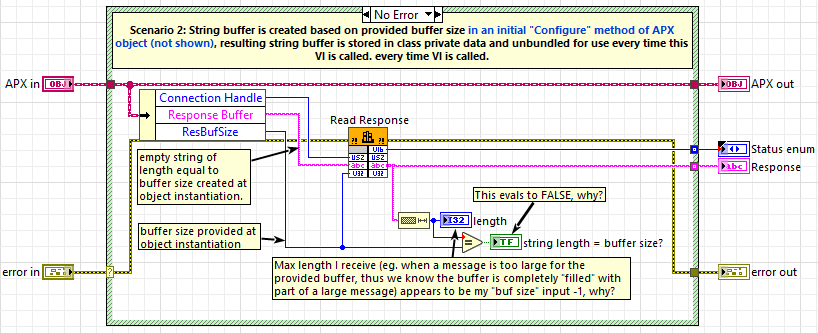

While playing around with this I noticed something odd. Even if there is a response message longer than my buffer (so that the first part completely maxes out my buffer string) when I evaluate the output string length EQUAL to provided buffer length, it is FALSE (NOT equal). It appears the max output string is my buffer length -1. I don't know why. My best guess is it has something to do with C string termination using up that last byte and "counts" against the buffer size inside the DLL and is simply not something LabVIEW retains when the string comes out of the Call Library Node. Does that sound like a plausible explanation?

Second Question:

So far I've been taking an integer (buffer size) from my front panel terminal, initializing a new U8 array of that size, converting the U8 array to a string, and providing that to the Call Library Node on the buffer terminal. In practice, I never really change the buffer size during a session and just use the same size all the time, so it's not terribly important to have this terminal on my VI. So I was thinking of simply giving my "APX" object (see below) a buffer size in the configuration method I use when I create the object. The configuration method would initialize the U8 array, convert it to a string, and store it in the object's private data; all the DLL methods that require a buffer would then simply unbundle it. Here's where I'm totally out of my depth. Would this second scenario be like giving the DLL the exact same memory space for writing messages every time I call these library nodes, and put me at risk of some nasty consequences? Could a subsequent or parallel call corrupt what's on the wire coming out of an earlier call to the library node? So what is the proper way to provide a buffer to a DLL: create a new one every time, or can I just reuse the same LabVIEW string stored in my object?

03-04-2023 06:27 AM

First and foremost, I'm trying to help but can only offer up some educated guesses. If rolfk happens to hop in on this thread, I would urge you to completely ignore any of my guesses and follow his advice exclusively.

Q1: Here is my fairly educated guess. Yes, it seems likely that C's null-termination of strings is the reason that the maximum length of the LabVIEW string you can retrieve is 1 less than the buffer size you declare as an input parameter.

Q2: The dll seems to expect *you* to provide an available buffer and to define its size. With that in mind, it seems like you should be able to keep reusing the same LabVIEW string over the course of repetitive calls to the dll. My instinct is that you could use an oversized LabVIEW string constant, longer than the max you'll ever receive back. The dll will be given a more-than-sufficient buffer, can fill it with the meaningful characters and the null terminator, then the deep down code handling this LabVIEW dll interface will return a LabVIEW string containing only the meaningful characters.

-Kevin P

03-04-2023 12:51 PM - edited 03-04-2023 12:53 PM

There is a very good reason why you must also provide the length of the buffer you pass in. Unlike LabVIEW arrays and strings (which are passed as handles and always have an int32 in front indicating how many elements are available), your DLL wants you to pass in a pointer to a preallocated memory buffer. But there is no standard way in C to determine the size of the allocated buffer from the pointer alone, and if the function just writes into that pointer without caring about that and happens to write beyond the actual allocated buffer, you get a buffer overrun. In the best case this crashes immediately. If you are unlucky it “only” overwrites other info in your program, which MIGHT crash at some later point when LabVIEW tries to access the corrupted memory. Or it just corrupts some data elsewhere that messes with your measurements and may never be noticed until the hydraulic pump your program should have tested fails and causes an airplane to crash!!
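To make the role of the size parameter concrete, here is a small Python `ctypes` sketch. The function `bounded_write` is hypothetical and only mimics how a well-behaved C callee might act: it receives a bare address plus a size and has to trust that size. The 16-byte allocation is pre-filled with `0xFF` so that any byte the callee did not touch stays visible.

```python
import ctypes


def bounded_write(dest_addr: int, size: int, message: bytes) -> int:
    """Write at most size-1 bytes of message plus a zero byte at dest_addr.

    Like a C callee, this function sees only a raw address. Nothing at that
    address says how much memory is valid, so it must rely on `size`.
    """
    if size < 1:
        return 0
    n = min(len(message), size - 1)
    ctypes.memmove(dest_addr, message, n)
    ctypes.memset(dest_addr + n, 0, 1)  # terminating zero byte
    return n


def demo() -> bytes:
    # 16-byte allocation pre-filled with 0xFF so untouched bytes are visible.
    buf = ctypes.create_string_buffer(b"\xff" * 16, 16)
    # Declare only 8 bytes as usable; a size below the real allocation is safe.
    bounded_write(ctypes.addressof(buf), 8, b"0123456789")
    return buf.raw
```

In this sketch the callee stops after 7 characters and a terminator, leaving the remaining 8 bytes alone. Declaring a size larger than the real allocation would be the dangerous direction, since the callee would then be free to write past the end of the buffer.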

And for string parameters that you configure as C String Pointer, LabVIEW does indeed scan the buffer for a zero byte after the DLL call returns and resizes the corresponding string so that it ends at the last character before that zero byte. If you don’t want that to happen but need to pass a C pointer anyhow, you have to pass it to the DLL function as a byte array configured as Array Data Pointer.
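The difference between the two views of the same memory can be shown with a Python `ctypes` analogy. This is not LabVIEW's implementation, only a sketch of the same idea: `.value` stops at the first zero byte (like a C string), while `.raw` returns every byte (like a byte array). The helper names are made up for this example.

```python
import ctypes


def filled_buffer(size: int, payload: bytes):
    """Allocate `size` zero bytes, then copy payload in (payload must fit)."""
    if len(payload) > size:
        raise ValueError("payload does not fit in the buffer")
    buf = ctypes.create_string_buffer(size)
    ctypes.memmove(buf, payload, len(payload))
    return buf


def as_c_string(buf) -> bytes:
    """Bytes up to, but not including, the first zero byte."""
    return buf.value


def as_byte_array(buf) -> bytes:
    """Every byte in the buffer, zero bytes included."""
    return buf.raw
```

With a 16-byte buffer holding 15 characters and a terminator, the C-string view is 15 bytes long while the byte-array view is still 16, which matches the "buffer length minus one" observation in the original question. If the payload contains a zero byte in the middle, the C-string view ends there and only the byte-array view shows the rest.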

03-04-2023 01:08 PM - edited 03-04-2023 01:14 PM

For your second question: no, not really. LabVIEW generally creates copies of data buffers when you branch a wire, and your Unbundle by Name is effectively a wire branch too. The string remains in the private object data but is also required for the DLL call. LabVIEW does have some optimization mechanisms, and they could kick in if you configured that string parameter as constant, as that would tell LabVIEW that the DLL function won’t modify the memory buffer. But since your function does exactly that, setting the const qualifier would be completely wrong, and it would cause very weird problems where the string in the object data could appear to be magically modified even though dataflow rules say it can’t be.

I would usually just use a reasonable constant on the diagram and do an Initialize Array in every VI. If you want this to be configurable, you could add the size integer to the class data and use that, but that does not sound very useful.

You can even omit the Initialize Array and configure the minimum size of that parameter to be the value of your size parameter. But I still prefer the explicit Initialize Array, as that makes it clear that the buffer allocation was taken care of, without having to open the Call Library Node configuration to check.

03-04-2023 01:43 PM

@Kevin_Price wrote:

Q2: The dll seems to expect *you* to provide an available buffer and to define its size. With that in mind, it seems like you should be able to keep reusing the same LabVIEW string over the course of repetitive calls to the dll. My instinct is that you could use an oversized LabVIEW string constant, longer than the max you'll ever receive back. The dll will be given a more-than-sufficient buffer, can fill it with the meaningful characters and the null terminator, then the deep down code handling this LabVIEW dll interface will return a LabVIEW string containing only the meaningful characters.

-Kevin P

Are you saying I could probably just have a string constant of a length that is vastly overkill, and the DLL would only ever write a message up to the length I give in the buffer size parameter? It sounds like the important thing is to always make sure the buffer size parameter is <= the actual length of the string buffer.

I do remember that when I first started initial testing of this DLL, I would unexpectedly crash LabVIEW a lot. Eventually it stopped happening, so I thought nothing of it. I probably had something very wrong with how I was interacting with this DLL in those early days that I corrected later on (I have gone through a few project rewrites between then and now).

I wish I could test various things I’m thinking about but it being the weekend I’m not about to drive in to work to satisfy my curiosity and earn a night’s stay in my wife’s doghouse.

Thank you to all who took the time to read and respond to this. I appreciate it.

03-05-2023 06:16 PM

I'm going to defer to rolfk who *did* pop into the thread. I could only offer guesswork and there's no more need for that.

-Kevin P