Out of Memory Error

08-11-2023 03:46 PM

Hello. I am currently working on a number of VIs that use the JDP Actor framework to communicate across VIs and with other computers on the network. On some of our VIs we have been getting a generic error that just says "Out of Memory", and then the program crashes. Using the NI resource monitor, we have not been able to pinpoint the exact source of this memory usage. Windows Resource Monitor, however, shows that in some cases LabVIEW is using upwards of 2 GB of memory, which seems excessive. The error does not occur in all of our VIs that use the JDP Messenger Library; so far it seems to occur only in the VIs that use the TCP protocol VIs. Has this been observed before? Are there any tips on how to debug the issue further?

08-11-2023 05:29 PM

Not observed before. It would be good if you could tell in which actor the out-of-memory error happens. Also, what do you mean by the program crashing? Normally, an out-of-memory error only stops the execution of the actor it happens in, rather than the whole program.

10-17-2023 12:32 PM

Good Afternoon,

I am following up on this post as a peer working on the same project. In this application, we have ~10 actors communicating across TCP using the JDP Messenger framework with the TCP Messengers. The communication includes messages as well as Observer/Notifiers. After about 8 hours of runtime, we get a “Not Enough Memory to Complete This Operation” (NEMTCTO) error. We have examined the memory usage of the various actors and found no buildup of active memory in the running code. We also monitored the event queues of each actor and found that the actors function normally, processing events in ~real time up until the NEMTCTO error occurs. After scouring the internet, it seems that this error is actually an OS (Windows) error and is not raised directly by LabVIEW. There are several threads on the internet related to NEMTCTO under LabVIEW, and at least one of them implicated TCP communication.

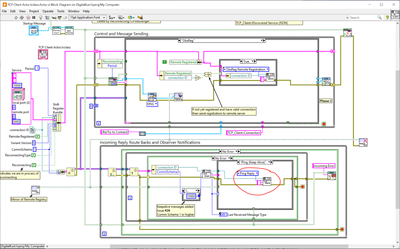

After drilling down into the code, the error seems to occur between actors making use of TCP communication. In troubleshooting the memory error, we became suspicious of the TCP Connection Actor.vi and the TCP Client Actor.vi as potential sources of this error. As shown below for TCP Client Actor (TCP Connection Actor has a similar design), there is a “Control Sending. . . .” loop as well as an “Incoming Message . . ” loop, and both access the TCP channel via the JDP Science TCP Write.vi. Our theory was that when LabVIEW time-slices this VI, it allows multiple threads to access the JDP Science TCP Write.vi simultaneously on the same channel, causing a race condition between the two TCP Write.vi calls. In addition, the keep-alive TCP Write in the lower loop seems to break the paradigm of marshalling all writes through the upper loop.

In our first test, we added a 0 ms delay after the TCP read as shown above, based on some titbits found on the internet. This was supposed to help the OS do a better job of time slicing, or some such thing. While this seemed to help, maybe, it did not solve the problem.

Next, we set the JDP Science TCP Write.vi to non-reentrant and repeated the memory error testing. With TCP Write.vi set to non-reentrant, we have not seen the NEMTCTO error and have run continuous testing for 6 consecutive days.

At this point, we cannot say conclusively that we have solved the problem, only that the problem has not occurred in a “long time.” We never found any particular smoking gun that indicated the problem was about to happen, nor can we say why a race condition would be problematic here. One possibility is that the ping write interferes with the TCP channel data stream and the reader attempts to read too many bytes. If this is true, however, we do not have proof. (In most of our test cases the writer and reader are operating on the same machine, and because it is an OS error we have not been able to trace the error to a specific VI at the point when it occurs.)
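To make the "reader attempts to read too many bytes" possibility concrete, here is a small Python simulation. It is only a sketch under assumptions: the 4-byte length prefix, the message contents, and the helper names `frame_parts` and `read_frames` are all hypothetical and are not taken from Messenger Library. It shows how a ping that lands between another message's length and body can make a reader interpret payload bytes as a length.

```python
import struct

def frame_parts(payload):
    """Split one message into pieces a writer might send separately:
    a 4-byte big-endian length, then the payload (hypothetical framing)."""
    return [struct.pack(">I", len(payload)), payload]

def read_frames(stream, max_len=1_000_000):
    """Parse length-prefixed frames; raise if a length looks implausible."""
    frames, pos = [], 0
    while pos + 4 <= len(stream):
        (length,) = struct.unpack_from(">I", stream, pos)
        pos += 4
        if length > max_len:
            # A reader without this check would try to allocate `length` bytes.
            raise ValueError(f"implausible frame length {length}")
        if pos + length > len(stream):
            raise ValueError("stream ends mid-frame")
        frames.append(stream[pos:pos + length])
        pos += length
    return frames

message = frame_parts(b"sensor reading 42")
ping = frame_parts(b"ping")

# Writers take turns: each message arrives whole.
clean = b"".join(message + ping)

# Writers interleave: the ping lands between the message's length and its body.
interleaved = message[0] + b"".join(ping) + message[1]

print(read_frames(clean))
try:
    read_frames(interleaved)
except ValueError as err:
    print("reader lost framing:", err)
```

In the interleaved case the reader consumes the ping as part of the first frame and then treats four payload bytes as the next length. Whether something like this is what actually produces the memory error in LabVIEW is not established here.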

If changing TCP Write to non-reentrant is actually solving the problem rather than just masking it, and it is solving the problem in the way we think it is, then it might be better to move the lower loop TCP Write up to the upper loop or to implement a per-TCP-channel mutex on TCP Write. We have also considered the possibility that the TCP Writer reentrancy change is solving the problem but in a completely different part of the framework for completely different reasons. Since we do not have the depth of understanding of this framework that the author does, we thought it would be best to report and let drjdpowell take it from here.
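The per-channel mutex idea can be sketched in Python as follows. This is an illustration only, not LabVIEW code and not how the framework is built: `RecordingChannel` is a hypothetical stand-in for a TCP connection, and `LockedWriter` is a hypothetical wrapper that holds one lock per channel so that all parts of a message are written before any other writer gets a turn.

```python
import threading

class RecordingChannel:
    """Hypothetical stand-in for a TCP connection: records each write in order."""
    def __init__(self):
        self.writes = []

    def write(self, data):
        self.writes.append(data)

class LockedWriter:
    """Serializes whole messages on one channel using a per-channel lock."""
    def __init__(self, channel):
        self._channel = channel
        self._lock = threading.Lock()

    def send(self, parts):
        # Hold the lock for the entire message, not for each part.
        with self._lock:
            for part in parts:
                self._channel.write(part)

def run_demo(n_threads=4, n_messages=200):
    channel = RecordingChannel()
    writer = LockedWriter(channel)

    def worker(tag):
        for i in range(n_messages):
            writer.send([b"<", f"{tag}:{i}".encode(), b">"])

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return channel.writes

writes = run_demo()
# Every message should appear as three consecutive writes: "<", body, ">".
intact = all(
    writes[i] == b"<" and writes[i + 2] == b">"
    for i in range(0, len(writes), 3)
)
print(len(writes), "writes, all messages intact:", intact)
```

Because the lock belongs to the writer for one channel, writes on different channels would not block each other, unlike making a shared write routine non-reentrant.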

P.S. Thank you very much for the framework. We find it to be very simple and powerful.

10-17-2023 02:52 PM

That is really good debugging, thank you!

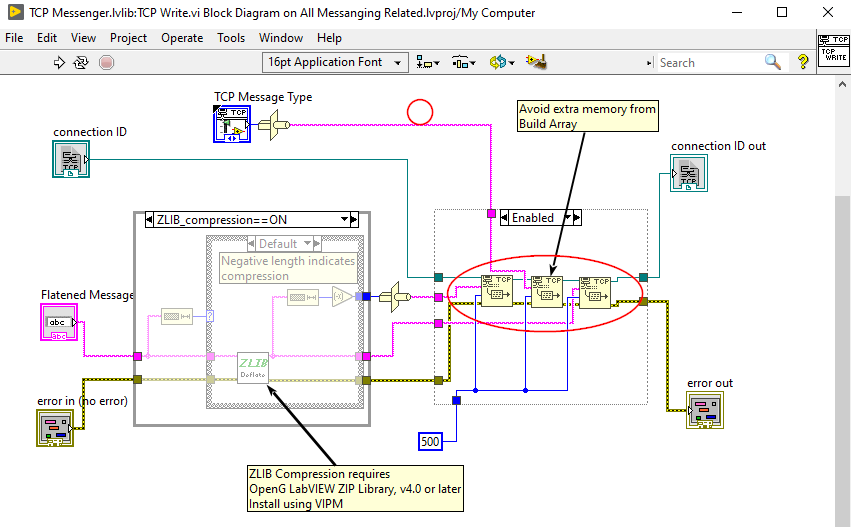

It looks like this bug was introduced in the latest 2.0.7 version of Messenger Library, and was caused by me trying to reduce the number of memory copies made in sending a TCP message. I switched from concatenating the three bits of info sent (which involves a new array allocation and copy, which I wanted to avoid) to sending the three bits in sequence. I foolishly forgot that this makes TCP Write unsafe to call in parallel, as a message is no longer sent atomically. If you switch the disable structure in TCP Write, shown in the screenshot, it should fix the issue.
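For readers following along, the trade-off described here can be illustrated with a rough Python sketch. This is not the library's code: the names `send_in_parts`, `send_concatenated`, and `RecordingChannel`, and the three example byte strings, are hypothetical. It also assumes that the underlying channel treats a single write call as one indivisible unit.

```python
class RecordingChannel:
    """Hypothetical stand-in for a TCP connection: records each write call."""
    def __init__(self):
        self.writes = []

    def write(self, data):
        self.writes.append(bytes(data))

def send_in_parts(channel, part_a, part_b, part_c):
    """Three write calls: no extra copy, but a parallel writer on the
    same channel could slip its own data in between the calls."""
    channel.write(part_a)
    channel.write(part_b)
    channel.write(part_c)

def send_concatenated(channel, part_a, part_b, part_c):
    """One write call: costs an allocation and a copy, but the message
    is handed to the channel as a single piece."""
    channel.write(part_a + part_b + part_c)

parts = (b"\x00\x00\x00\x05", b"meta", b"hello")

sequential = RecordingChannel()
send_in_parts(sequential, *parts)

single = RecordingChannel()
send_concatenated(single, *parts)

print(len(sequential.writes), "writes vs", len(single.writes), "write")
```

Both versions put the same bytes on the wire when only one writer is active; the difference only matters once two writers share a channel.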

10-17-2023 04:23 PM

Here is a version with the fix: