UNIX Time

Overview

This VI will output the current UNIX or POSIX time.

Description

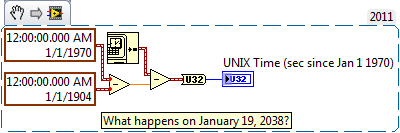

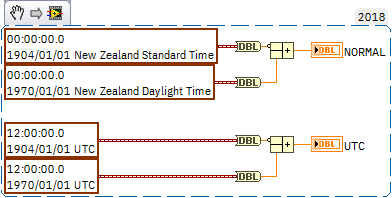

This VI converts from LabVIEW-based time (epoch Jan. 1, 1904) to UNIX time (epoch Jan. 1, 1970). In addition, since LabVIEW's timestamp takes into account your time zone and daylight saving settings, this VI extracts that data from a Windows DLL (kernel32.dll) and subtracts it to get the correct UNIX time.

For more information about UNIX time, visit the Wikipedia entry "UNIX Time".

Requirements

- LabVIEW 2012 (or compatible)

Steps to Implement or Execute Code

- Run the program

Additional Information or References

VI Block Diagram

**This document has been updated to meet the current required format for the NI Code Exchange.**

Example code from the Example Code Exchange in the NI Community is licensed with the MIT license.

Both images are broken, please try to embed them again.

LabVIEW Community Manager

National Instruments

Updated, thanks for the comment.

When I open this VI on my system, the timestamp constant ("12:00:00.000 AM 1/1/1970" above) appears as "10:00:00.000 PM 12/31/1969". Is this due to the time zone on my system (Pacific)? Do I need to change the constant to get correct behavior? Thanks.

It looks as if LabVIEW is automatically converting that time. The code was written in Central Time, so Pacific would be two hours earlier, hence the 10:00 PM. Since the code already accounts for time zones (set in Windows), you will need to change that constant back to "12:00:00.000 AM 1/1/1970". You can always check that the time is correct against any of the sites with JavaScript Unix clocks. Here's one (http://www.epochconverter.com/epoch/clock.php).

What is the license on use of this VI?

You and anyone else are free to modify, use, edit, sell, distribute, etc. this example for any purposes you need, so long as it does not conflict with any licensing or restrictions National Instruments, LabVIEW, or your local, state, or federal governments may have.

I'm running a modified version of this VI on Windows 7 / LV2011 and have found that it returns a time that is one hour ahead of the current system time (no time zone or DST info involved). If I replace the UNIX epoch timestamp with the float value 2082844800, which according to various sources is the hand-calculated value of the UNIX epoch, then I get the expected current time. Just an observation.
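The value 2082844800 quoted above can be checked by hand: 1904 through 1969 is 66 years containing 17 leap days. Below is a minimal sketch of that arithmetic in Python. Python is used only for illustration (the VI itself is LabVIEW), and the variable names are made up for this example.

```python
from datetime import date

SECONDS_PER_DAY = 86_400

# Leap years from 1904 through 1968 inclusive. No century year falls in
# this range, so the plain "every fourth year" rule is sufficient.
leap_days = len(range(1904, 1970, 4))
days = 66 * 365 + leap_days
epoch_offset = days * SECONDS_PER_DAY

# Cross-check with pure calendar arithmetic (no time zone involved).
assert (date(1970, 1, 1) - date(1904, 1, 1)).days == days

print(leap_days)     # 17
print(days)          # 24107
print(epoch_offset)  # 2082844800
```

Because the constant is a plain number, using it sidesteps any local-time interpretation of a timestamp constant on the block diagram.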

Call to Windows kernel DLL is probably not a great idea.

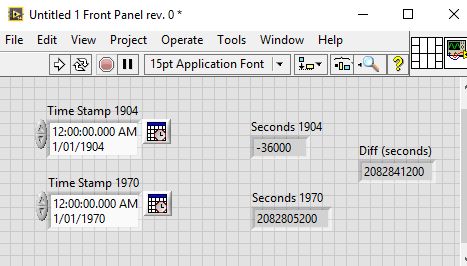

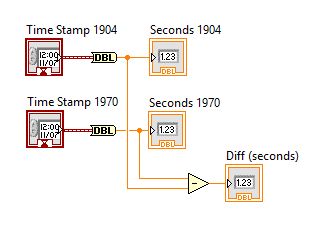

A complete LabVIEW solution would be to subtract a UNIX epoch timestamp from a LV epoch timestamp, then subtract the result from the current time and convert to a U32.

If you don't hate time zones, you're not a real programmer.

When I ran "UNIX_Time_lv82.vi" on my PC, I got a Unix seconds value that was wrong by exactly 3600 seconds.

I think that in my timezone (GMT+10) the problem might lie in the conversion of the offset constant "12:00:00.000 AM 1/1/70" using the "To Double" operator.

For my timezone, LabVIEW might be calculating that Jan 1, 1970 falls during our Daylight Savings period here.

When I modify this constant to read 1:00:00.000 AM 1/1/70 on my block diagram, I get the correct Unix time from the VI.

This suggests that for universally correct operation, this VI should interrogate not only the PC's current offset from GMT (through the kernel32.dll call) but also the offset that was (assumed to be) in effect on 1/1/1970?

Or is there another explanation for why the VI is wrong by one hour here?

Please try my example above. ( VI snippet )

Unix time is simply the number of seconds that have elapsed since Jan 1, 1970 (UTC). There is no time zone or daylight saving component.

http://stackoverflow.com/questions/18530530/how-does-unix-timestamps-account-for-daylight-savings
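To make the point concrete, here is a small Python sketch (not LabVIEW, and not taken from the VI) showing that three different wall-clock readings of the same instant map to one Unix timestamp. The fixed offsets are assumptions chosen for illustration; they are not real time zone rules.

```python
from datetime import datetime, timedelta, timezone

utc = timezone.utc
pacific_std = timezone(timedelta(hours=-8))   # fixed offset, illustration only
east_aus_std = timezone(timedelta(hours=10))  # fixed offset, illustration only

# Three wall-clock readings of the same instant.
t_utc = datetime(2024, 1, 1, 12, 0, tzinfo=utc)
t_pacific = datetime(2024, 1, 1, 4, 0, tzinfo=pacific_std)
t_east_aus = datetime(2024, 1, 1, 22, 0, tzinfo=east_aus_std)

# timestamp() converts an aware datetime to seconds since the UNIX epoch.
unix_times = {t.timestamp() for t in (t_utc, t_pacific, t_east_aus)}

print(len(unix_times))  # 1: all three readings share one Unix time
```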

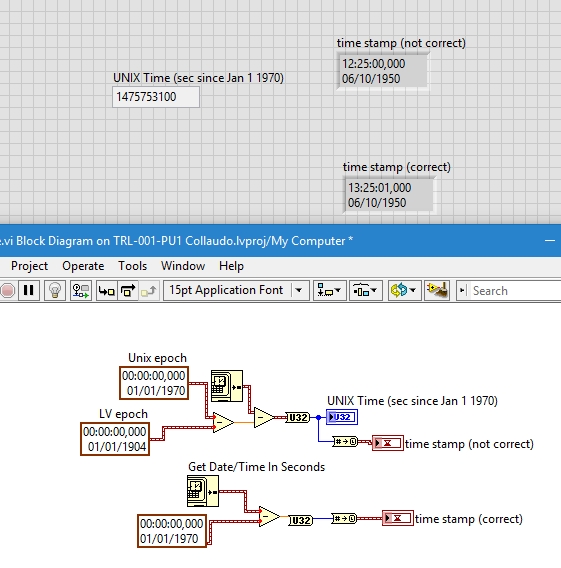

I think the problem is caused by Daylight Savings having been in effect here in Eastern Australia at 12:00:00 AM on 1/1/1970, while at 12:00:00 AM on 1/1/1904 it was not.

If I convert your two Timestamp constants to DateTime Records and examine the DST value for each, for 1/1/1970 it is set to '1' here while for 1/1/1904 it is set to '0'. So this appears to be a southern hemisphere problem only.

Using the "Timestamp To Double" conversion appears to treat the Timestamp constant as "Local Time" (including time zone offset and DST value).

For correctness at my time zone offset (GMT+10), then, I should probably replace your Timestamp constants with "11:00:00.000 AM 1/1/1970" and "10:00:00.000 AM 1/1/1904" so that the seconds difference between 1904 and 1970 is correctly calculated from midnight GMT to midnight GMT.
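The one-hour error described above can be reproduced outside LabVIEW. The Python sketch below assumes, as this post reports, that the system treats 1/1/1904 as GMT+10 (DST flag 0) and 1/1/1970 as GMT+11 (DST flag 1). Those two fixed offsets are assumptions for illustration, not a statement about historical time zone rules.

```python
from datetime import datetime, timedelta, timezone

# Assumed offsets: DST off in 1904 (GMT+10), DST on in 1970 (GMT+11).
offset_1904 = timezone(timedelta(hours=10))
offset_1970 = timezone(timedelta(hours=11))

# Epoch constants interpreted as local midnight.
lv_epoch_local = datetime(1904, 1, 1, tzinfo=offset_1904)
unix_epoch_local = datetime(1970, 1, 1, tzinfo=offset_1970)
local_gap = (unix_epoch_local - lv_epoch_local).total_seconds()

# Epoch constants interpreted as midnight GMT/UTC.
lv_epoch_utc = datetime(1904, 1, 1, tzinfo=timezone.utc)
unix_epoch_utc = datetime(1970, 1, 1, tzinfo=timezone.utc)
utc_gap = (unix_epoch_utc - lv_epoch_utc).total_seconds()

print(utc_gap)              # 2082844800.0
print(utc_gap - local_gap)  # 3600.0
```

Under these assumptions the locally computed gap is 3600 seconds short, so subtracting it from the current time yields a Unix time that is one hour too large.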

Can you please explain your math?

T_now - T_unixepoch is correct, not yours.

T_now is the "distance" from the 1904 epoch to now.

T_unixepoch is the distance from the 1904 epoch to the UNIX epoch (1970).

The simple difference between the two gives the seconds elapsed from 1970 (the UNIX epoch) to now, which is UNIX time by definition.

As a side note, the LabVIEW timestamp is a special 128-bit structure (see: http://www.ni.com/tutorial/7900/en/).
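The subtraction T_now - T_unixepoch can be sketched in a few lines of Python. This is an illustration only: the function names and the sample value are hypothetical, and the sketch assumes T_now is already expressed as seconds since 1904-01-01 00:00 UTC.

```python
from datetime import datetime, timezone

# T_unixepoch: seconds from the LabVIEW epoch (1904-01-01 00:00 UTC)
# to the UNIX epoch (1970-01-01 00:00 UTC).
T_UNIX_EPOCH = 2_082_844_800


def labview_to_unix(t_labview):
    """Seconds since 1904 (UTC) -> seconds since 1970 (UTC)."""
    return t_labview - T_UNIX_EPOCH


def unix_to_labview(t_unix):
    """Seconds since 1970 (UTC) -> seconds since 1904 (UTC)."""
    return t_unix + T_UNIX_EPOCH


# Hypothetical sample value standing in for T_now.
t_now = 3_786_912_000
t_unix = labview_to_unix(t_now)

print(t_unix)  # 1704067200
print(datetime.fromtimestamp(t_unix, tz=timezone.utc).isoformat())
# 2024-01-01T00:00:00+00:00
```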

Ironman, if your comment was directed to me:

Try the following. I am sure (if you are based in the northern hemisphere) you will get a different answer from mine.

As a result, I can't do this operation directly to convert between LabVIEW time and Unix time because a 3600 second error results.

The source of the error, as I pointed out before, is that Daylight Savings occurred in the southern hemisphere on 1-1-1970 but not on 1-1-1904.

No, I was replying to philipsbrooks.

If I apply his example, I get wrong numbers. I verified the U32 number with a web page that does the "UNIX U32 <-> date/time" conversion.

With my math, I get the correct number.

The "time stamp (correct)" is the same as my Windows clock, so it's correct. And of course the U32 is correct: if I put it into the web converter, I get exactly my date and time back.

As regards your example, I don't understand your math (you take a difference between 1970 and 1904, which leads to a wrong result), and I don't think that DST should be included. Every operation is independent of DST; everything is UTC. Time is UTC, and everything else is just an offset that doesn't exist. Time is just a number, not "a number + offset" that depends on the zone. Time is the same everywhere on Earth.

regards

@Ironman_ - The LabVIEW function "Get Date/Time in Seconds" returns the current time.

On Windows systems, the current time reported by "Get Date/Time in Seconds" will include an offset for DST (based on your OS settings).

If you subtract the difference between the two Epochs from the current time, you will always get the correct UNIX time, regardless of DST offset.

This is a bit of an old topic, but I just wanted to clarify the issue that @Ironman_ is seeing about being offset by 3600 seconds. It is an odd little issue that appears when:

- The time zone introduced daylight saving between 1904 and 1970

- DST was active in the local zone on 1/1/1970

This is a combination of events that only really occurs in the southern hemisphere.

To work around this, you just need to calculate the difference between epochs when DST is not active, either by setting the time zone on BOTH of the timestamps you are using to calculate the difference, or by setting the timestamps to a common date when DST was not active (6/6/1904 & 6/6/1970).
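The first workaround (pinning both timestamps to the same zone) can be sketched in Python with both epochs explicitly in UTC. This is an illustration, not the VI's implementation, and the helper name is hypothetical. The sketch also computes the June-to-June gap: it comes out one day shorter than the January-to-January gap because 29 Feb 1904 falls before 6 June 1904, so the June dates cannot simply be swapped in for the epoch constants without adding that day back.

```python
from datetime import datetime, timezone

utc = timezone.utc


def seconds_between(start, end):
    """Whole seconds between two timezone-aware datetimes."""
    return int((end - start).total_seconds())


# Both epochs pinned to UTC, so no local DST rule can leak in.
jan_gap = seconds_between(datetime(1904, 1, 1, tzinfo=utc),
                          datetime(1970, 1, 1, tzinfo=utc))

# Same calculation on the mid-year dates suggested above.
jun_gap = seconds_between(datetime(1904, 6, 6, tzinfo=utc),
                          datetime(1970, 6, 6, tzinfo=utc))

print(jan_gap)            # 2082844800
print(jan_gap - jun_gap)  # 86400 (one day: 29 Feb 1904)
```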

I got bit by the 1 hour difference today. I attribute it to the timestamp constant for UNIX epoch being set to 1:00:00.00 in the block diagram. Changing that to proper midnight using 12:00:00.000 removes the one hour lag. The Win32 approach otherwise works for me on Win11, LV 2021, Eastern US standard time.