Kinect 2 - Haro3D VI Library

03-18-2017 11:20 AM

The Kinect does not use color to determine the shape of the part, so you don't need to put it on a white surface. However, a black mat might not return enough light (the Kinect uses near-IR light to measure shape), although in general it works.

The easiest approach would be to measure the shape of your mat from a single Kinect position, or by moving the Kinect just a little in the X-Y directions (taking data from different points improves resolution, but not necessarily accuracy).

If you decide to move the Kinect to improve resolution, you can also place objects on the floor next to the mat to help the Kinect's tracking.

Finally, if you have a Kinect, you can try it for free with the Haro3D library. You get a 30-day free trial by default.

Good luck.

12-13-2017 04:40 PM

Are there programs available to test the Hololens with LabVIEW?

12-13-2017 06:04 PM - edited 12-13-2017 06:07 PM

Hi Shivik89,

Two example programs for the Hololens are provided with the installation of the Haro3D library.

There are additional example programs for the Hololens in the 3D Vision group: a robot application, and the same robot application with the added feature of sharing between multiple Hololens.

Note that a new release of the Haro3D library will be available at the beginning of 2018. It adds cloning of 3D objects to support large numbers of holograms. A completely new example program demonstrating the new features will be made available at the same time. Check the 3D Vision group regularly!

12-19-2017 04:22 AM

Hello Marc,

I saw your example for getting the X, Y, and Z values from the Kinect. Could you send me the code?

12-19-2017 07:35 AM

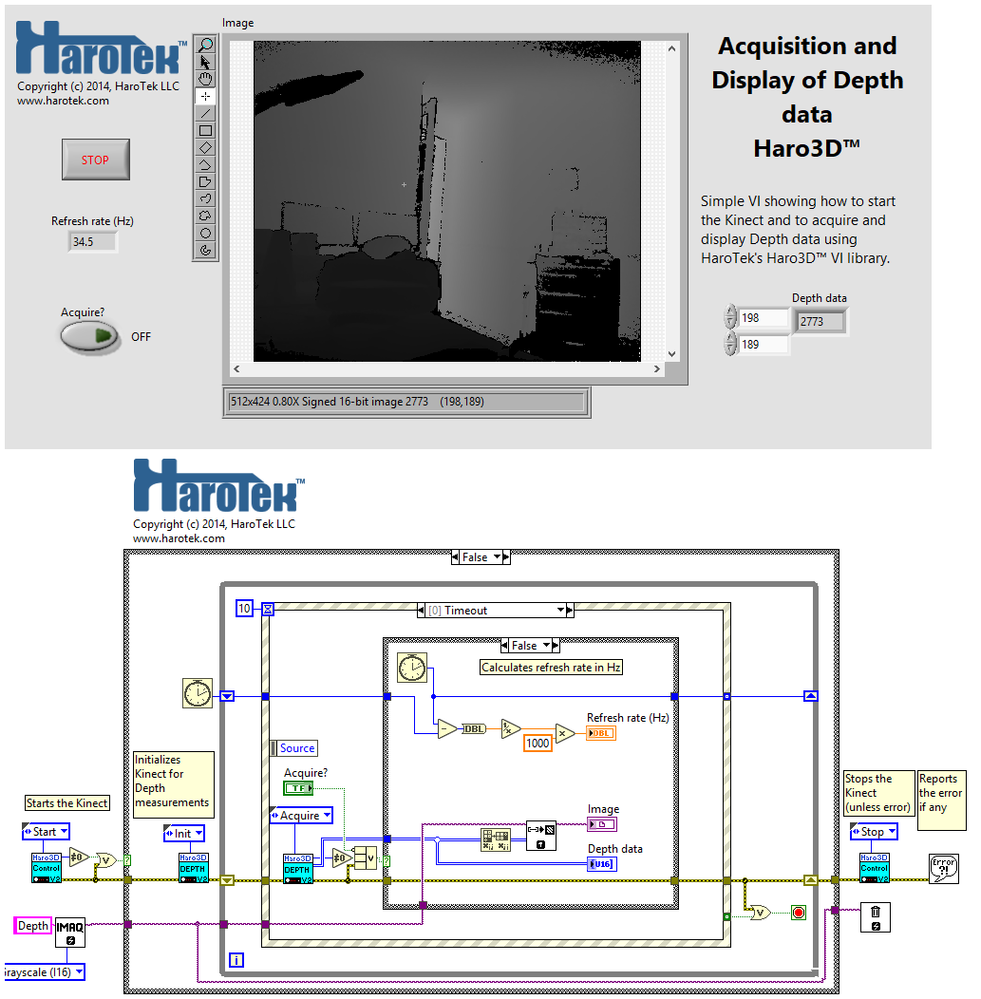

The attached VI is the same as the one presented here (available through a VI snippet), except that the Kinect API VIs have been updated to the latest version.

Good luck.

12-26-2017 06:19 AM

Hello Marc,

Thank you for the code. I would like to track an apple or a banana with a Kinect and then get its X, Y, and Z coordinates. I have your examples, but the body examples only track people's bodies. Do you have any idea how I should do this? I was thinking of using cloud detection, maybe. Do you perhaps have example code?

Patrick.

01-01-2018 10:07 AM - last edited on 02-13-2025 08:18 AM by Content Cleaner

Hi Patrick,

The Kinect can intrinsically track only joints and bodies. To track any other type of object, you have to develop your own code.

You can use the Haro3D library to acquire clouds of points from the Kinect (see the Cloud of Points example) for that purpose. You can use both shape and color information. Spheres are the easiest to track because LabVIEW provides a VI to fit 3D points on a sphere (Fitting on a Sphere.vi).

An example of such tracking is provided here.
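As a rough text-based illustration of the sphere-fitting idea mentioned above (independent of the linked example), here is a minimal Python sketch of an algebraic least-squares sphere fit. It is not the algorithm used by LabVIEW's Fitting on a Sphere.vi, which is not described in this thread; the function names `solve_linear` and `fit_sphere` are made up for this sketch, and the input is assumed to be a list of (x, y, z) points already segmented from the cloud.

```python
import math

def solve_linear(m, v):
    """Solve m * x = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    aug = [list(row) + [rhs] for row, rhs in zip(m, v)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: points do not define a unique sphere")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x

def fit_sphere(points):
    """Algebraic least-squares sphere fit; returns ((a, b, c), radius).

    Uses x^2 + y^2 + z^2 = 2ax + 2by + 2cz + d, with d = r^2 - a^2 - b^2 - c^2,
    which is linear in the unknowns (a, b, c, d).
    """
    if len(points) < 4:
        raise ValueError("need at least 4 points")
    ata = [[0.0] * 4 for _ in range(4)]  # normal-equation matrix A^T A
    atb = [0.0] * 4                      # right-hand side A^T b
    for x, y, z in points:
        row = (2.0 * x, 2.0 * y, 2.0 * z, 1.0)
        rhs = x * x + y * y + z * z
        for i in range(4):
            atb[i] += row[i] * rhs
            for j in range(4):
                ata[i][j] += row[i] * row[j]
    a, b, c, d = solve_linear(ata, atb)
    radius = math.sqrt(d + a * a + b * b + c * c)
    return (a, b, c), radius
```

Calling `fit_sphere(points)` on the points belonging to a roughly spherical object (an apple, for instance) returns an estimated center, which can serve as the object's X-Y-Z position, and a radius that can be used as a sanity check on the segmentation.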

Please share your progress with us!

Good luck.

01-04-2018 09:40 AM

Hello Marc,

I can get the X and Y coordinates from a picture, but now I want to get the Z coordinate. I'm trying the HARO Depth now. The HARO Depth has status, depth data, and error out as outputs, and depth data is the one I'm trying to use. From the depth data you can make a 2D array, and I thought that if you index into the array, the output would be the Z value. When I do that, the coordinates don't match. See the attached picture. I hope you understand me; my English is not so good.

Patrick

01-04-2018 11:09 AM

I do not know how you created your IMAQ image, but if you used the ArrayToImage function, the image should correspond to the array.

I have modified the Depth Example to display the depth array using both an IMAQ display and an array control. As you can see below, the values in the two controls correspond (I have tested several locations) once the Transpose 2D Array function is taken into account. I have also included the code in LabVIEW 2016.

Also note that the row and column of the image do not correspond to the X-Y coordinates of the object in the real-space coordinate system. With the depth, you only get the Z values. If you want the X-Y-Z coordinates in real space, you have to use the Cloud function.
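To illustrate the two points above in text form, here is a small Python sketch. It is not the Haro3D Cloud function; it uses a simple pinhole camera model as a stand-in. The intrinsics `FX`, `FY`, `CX`, `CY` are placeholder values for a 512x424 depth image, not calibrated Kinect parameters. Depth in millimetres is assumed, and the axes follow the image orientation (Y pointing down), which may differ from the Kinect's own camera-space convention.

```python
def depth_at(depth, x, y, transposed=False):
    """Depth value at image pixel (x = column, y = row).

    A row-major array is indexed [row][column]; if the array was transposed
    on the way to the display, the two indices swap.
    """
    return depth[x][y] if transposed else depth[y][x]

def pixel_to_camera_xyz(x, y, depth_mm, fx, fy, cx, cy):
    """Back-project one depth pixel to metres using a pinhole model."""
    z = depth_mm / 1000.0
    return ((x - cx) * z / fx, (y - cy) * z / fy, z)

# Placeholder intrinsics (assumptions for this sketch, not calibrated values).
FX = FY = 365.0
CX, CY = 256.0, 212.0

# The same pixel, 100 columns right of the image center, at 1 m and at 2 m.
near = pixel_to_camera_xyz(356, 212, 1000, FX, FY, CX, CY)
far = pixel_to_camera_xyz(356, 212, 2000, FX, FY, CX, CY)
print(near, far)
```

The same pixel column maps to a different real-space X depending on the depth, which is why the row and column alone cannot be used as X-Y coordinates.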

02-08-2018 03:15 PM

Hello, we are using the program to export data from LabVIEW to a text file, Excel, etc. We only ever have one person in front of the Kinect. We need the XYZ coordinates of the shoulder, elbow, and wrist of both the left and right arms, written to a text file as close to real time as possible.

The problem we are running into is unbundling the cluster of data sets. We have unbundled it down to each joint position and gotten it to output the XYZ data in columns, in separate text files for each body and its joint(0). However, we do not know which actual joint is assigned to which joint variable in LabVIEW (0-8), so we cannot use the data because we do not know which data belongs to which joint.

Stepping back in the unbundling, we also do not know which body is assigned when the Kinect sees someone. This means that we have 9 output files, one for each body. Every time we restart LabVIEW, or another person is on screen, the actual body is assigned to a random body variable. Is there a way to limit the number of bodies to one, so that we no longer have to figure out which body variable is assigned to our actual body?

Also, reading through the manual, I did not see which joint corresponds to each joint variable. Are these randomly assigned too, or are the joints already designated to specific joint variables? Lastly, there are 9 joints (0-8) in the code, but the manual says 25 joints. Are the 9 variables in LabVIEW the actual data read from the Kinect, and the remaining 16 joints inferred? Also, is any of the shoulder, wrist, or elbow inferred?

Attached are a screenshot of the code and two outputs of our data: one from a body that was not assigned, and the other from the body with the XYZ positions for its joint(0). Text file 1 is the empty data and text file 5 is the filled one; you have to scroll down to where the Kinect sees it.

Thank you!
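For readers with the same export problem, one way to keep the columns unambiguous is to look joints up by name and write a single row per frame for the first tracked body. The Python sketch below uses the joint order of the `JointType` enumeration in Microsoft's Kinect for Windows SDK 2.0. Whether the Haro3D joint array uses the same indices is an assumption to verify against the library's documentation. The data layout (a dict with `tracked` and `joints` keys) and the helper names are hypothetical.

```python
# Joint order of the Kinect for Windows SDK 2.0 JointType enumeration.
# Assumption: the joint array being exported follows this same order.
KINECT_V2_JOINTS = [
    "SpineBase", "SpineMid", "Neck", "Head",
    "ShoulderLeft", "ElbowLeft", "WristLeft", "HandLeft",
    "ShoulderRight", "ElbowRight", "WristRight", "HandRight",
    "HipLeft", "KneeLeft", "AnkleLeft", "FootLeft",
    "HipRight", "KneeRight", "AnkleRight", "FootRight",
    "SpineShoulder", "HandTipLeft", "ThumbLeft", "HandTipRight", "ThumbRight",
]

ARM_JOINTS = [
    "ShoulderLeft", "ElbowLeft", "WristLeft",
    "ShoulderRight", "ElbowRight", "WristRight",
]

def first_tracked_body(bodies):
    """Return the first body flagged as tracked, or None if there is none."""
    for body in bodies:
        if body["tracked"]:
            return body
    return None

def arm_row(body):
    """Flatten the six arm joints of one body into [x, y, z, x, y, z, ...]."""
    row = []
    for name in ARM_JOINTS:
        x, y, z = body["joints"][KINECT_V2_JOINTS.index(name)]
        row.extend((x, y, z))
    return row

def format_row(row):
    """Format one frame as a tab-separated text line."""
    return "\t".join(f"{value:.4f}" for value in row)
```

Selecting the first tracked body on each frame avoids having one output file per body slot when only one person is in view, regardless of which slot the sensor happens to assign.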