Stereo setup parameter calculation and LabVIEW VDM 2012 stereo evaluation

There has been some interest in the new stereo vision library used in the LabVIEW Vision Development Module 2012.

I was (and still am) interested in testing the performance of the library, as are some other people on the NI forums who are having problems obtaining a proper depth image from a stereo vision system.

In this post I present the results I have obtained so far and attach a small VI that can be used to calculate the parameters of the stereo setup. Based on these THEORETICAL calculations, it is possible to obtain the disparity range for a given depth range and also to calculate the depth accuracy and the field of view.
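The attached VI is a LabVIEW diagram and is not reproduced here. As a rough text equivalent, the following Python sketch computes the quantities mentioned above under the usual assumptions of a rectified, parallel-axis pinhole stereo pair: disparity d = f·B/Z (with f in pixels), depth resolution ΔZ ≈ Z²/(f·B)·Δd, and horizontal field of view 2·atan(w/2f). The class name, parameter names and example numbers are hypothetical and are not the values of the setups described in this post.

```python
import math
from dataclasses import dataclass


@dataclass
class StereoSetup:
    """Hypothetical parameter set for a rectified, parallel-axis stereo pair."""
    focal_length_mm: float  # lens focal length
    pixel_size_mm: float    # physical pixel pitch on the sensor
    image_width_px: int     # horizontal image resolution
    baseline_mm: float      # distance between the two optical centres

    @property
    def focal_length_px(self) -> float:
        # Focal length expressed in pixels, as used by the disparity equation.
        return self.focal_length_mm / self.pixel_size_mm

    def disparity_px(self, depth_mm: float) -> float:
        # Pinhole stereo relation: d = f * B / Z.
        return self.focal_length_px * self.baseline_mm / depth_mm

    def disparity_range_px(self, z_min_mm: float, z_max_mm: float) -> tuple[float, float]:
        # Far objects give the smallest disparity, near objects the largest.
        return self.disparity_px(z_max_mm), self.disparity_px(z_min_mm)

    def depth_resolution_mm(self, depth_mm: float, disparity_step_px: float = 1.0) -> float:
        # Depth change caused by a disparity error of disparity_step_px:
        # dZ ~= Z^2 / (f * B) * dd, so the resolution degrades with Z squared.
        return depth_mm ** 2 / (self.focal_length_px * self.baseline_mm) * disparity_step_px

    def horizontal_fov_deg(self) -> float:
        sensor_width_mm = self.image_width_px * self.pixel_size_mm
        return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * self.focal_length_mm)))


if __name__ == "__main__":
    # Illustrative webcam-like numbers (assumed, not measured).
    setup = StereoSetup(focal_length_mm=4.0, pixel_size_mm=0.003,
                        image_width_px=640, baseline_mm=100.0)
    d_min, d_max = setup.disparity_range_px(500.0, 2000.0)
    print(f"disparity range: {d_min:.1f} .. {d_max:.1f} px")
    print(f"depth resolution at 1 m: {setup.depth_resolution_mm(1000.0):.2f} mm")
    print(f"horizontal FOV: {setup.horizontal_fov_deg():.1f} deg")
```

With these assumed numbers, a working range of 0.5 m to 2 m maps to disparities of roughly 67 to 267 px, and a one-pixel disparity error corresponds to about 7.5 mm of depth at 1 m, which shows why distance, focal length and baseline dominate the achievable accuracy.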

First off, I tried to test the stereo library with the setup shown in Figure 1.

Figure 1. Setup for the initial test of the stereo library.

Two DSLR cameras were used, with the focal length of both set to 35 mm. The cameras were different (different sensors, different focal lengths), and the setup was really bad (the cameras were attached to the table with tape). Also note that NI Vision prefers a horizontal baseline, that is, a system in which the horizontal distance between the two cameras exceeds the vertical distance (see the NI Vision manual). Ideally, the vertical distance between the two sensors should be zero and the horizontal distance should equal the baseline distance. I selected the disparity range according to the parameter calculations (see the attachment), and I really was not expecting great results. Sure enough, I didn't get them.
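One way to check the horizontal-baseline condition after calibrating the stereo system is to inspect the components of the calibrated translation vector between the cameras. The sketch below assumes the translation vector is available as (Tx, Ty, Tz) in a camera frame with x pointing right and y pointing down; the function name and the tolerance value are hypothetical and are not part of the NI Vision API.

```python
import math


def check_horizontal_baseline(t, max_vertical_ratio=0.05):
    """Return (baseline, vertical_ratio, ok) for a translation vector t = (tx, ty, tz).

    NI Vision prefers |tx| > |ty|; ideally ty is zero and |tx| equals the baseline.
    max_vertical_ratio is an assumed tolerance for the acceptable vertical offset.
    """
    tx, ty, tz = t
    baseline = math.sqrt(tx * tx + ty * ty + tz * tz)
    vertical_ratio = abs(ty) / abs(tx) if tx != 0 else math.inf
    ok = abs(tx) > abs(ty) and vertical_ratio <= max_vertical_ratio
    return baseline, vertical_ratio, ok


# A rigid 100 mm rig with 2 mm of vertical misalignment (illustrative values).
print(check_horizontal_baseline((-100.0, 2.0, 0.5)))
# Cameras placed roughly on top of each other fail the check.
print(check_horizontal_baseline((10.0, 80.0, 5.0)))
```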

So I changed my stereo setup. I bought two USB webcams and mounted them rigidly, as shown in Figure 2. The system was then mounted on a stand.

Figure 2. Stereo setup with two webcams and a baseline distance of 100 mm.

I followed the same procedure, calibrating each camera (lens distortion) and then the stereo system (translation vector and rotation matrix), and performed some measurements. The reconstructed 3D shape is shown in Figure 3 (with texture overlaid).
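Once the stereo pair is rectified and a disparity map has been computed, each valid pixel can be turned into a 3D point by inverting the disparity equation. The NumPy sketch below assumes rectified images, a focal length f in pixels, a principal point (cx, cy) and a baseline B; it only illustrates the triangulation step and is not the implementation used by the Vision Development Module. Pixels with non-positive disparity (failed matches) are dropped, and the optional texture argument keeps the matching image intensities for an overlaid rendering like Figure 3.

```python
import numpy as np


def disparity_to_points(disparity, f_px, cx, cy, baseline_mm, texture=None):
    """Convert a rectified disparity map (H x W, pixels) into an N x 3 point cloud in mm.

    If texture (an H x W image) is given, the texture values of the valid
    pixels are returned as well, in the same order as the points.
    """
    h, w = disparity.shape
    xs, ys = np.meshgrid(np.arange(w), np.arange(h))
    valid = disparity > 0  # zero/negative disparity means no reliable match
    d = disparity[valid].astype(np.float64)
    z = f_px * baseline_mm / d            # depth from d = f * B / Z
    x = (xs[valid] - cx) * z / f_px       # back-project through the pinhole model
    y = (ys[valid] - cy) * z / f_px
    points = np.column_stack((x, y, z))
    if texture is None:
        return points
    return points, texture[valid]


# Toy example: constant disparity of 20 px with one unmatched pixel.
disp = np.full((4, 6), 20.0)
disp[0, 0] = 0.0
pts = disparity_to_points(disp, f_px=800.0, cx=3.0, cy=2.0, baseline_mm=100.0)
print(pts.shape)  # (23, 3)
```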

Figure 3. 3D reconstruction with overlaid texture.

The background had no texture (a white wall), so I expected that its depth would not be calculated properly, but the foreground (the book) had enough texture. To be honest, I was disappointed, as I really expected better results. To improve the depth resolution, the object needs to be closer or the focal length needs to be increased.
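The failure on the white wall follows from how window-based matching works: a small window from the left image is compared with candidate windows along the same row of the right image, and on a uniform surface every candidate looks the same. The 1-D sketch below uses a plain sum-of-absolute-differences (SAD) cost as an illustrative stand-in; the matching cost used internally by the NI stereo library is an assumption here, not something this post describes. On a random (textured) row the cost has a single minimum at the true disparity; on a constant row all disparities tie.

```python
import numpy as np


def sad_costs(left_row, right_row, x, half_window, max_disparity):
    """SAD cost of matching left_row[x] against right_row[x - d] for d = 0..max_disparity."""
    patch = left_row[x - half_window : x + half_window + 1]
    costs = []
    for d in range(max_disparity + 1):
        xr = x - d
        if xr - half_window < 0:
            break  # candidate window would fall off the image
        candidate = right_row[xr - half_window : xr + half_window + 1]
        costs.append(np.abs(patch - candidate).sum())
    return np.array(costs)


rng = np.random.default_rng(0)
true_d = 12

textured_left = rng.random(200)
textured_right = np.roll(textured_left, -true_d)  # right[i] = left[i + true_d]
textured = sad_costs(textured_left, textured_right, x=100, half_window=3, max_disparity=30)

wall_left = np.full(200, 0.9)  # featureless "white wall"
wall_right = np.roll(wall_left, -true_d)
wall = sad_costs(wall_left, wall_right, x=100, half_window=3, max_disparity=30)

print("textured: best d =", int(np.argmin(textured)))               # 12
print("wall: tied minima =", int(np.sum(wall == wall.min())))        # 31
```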

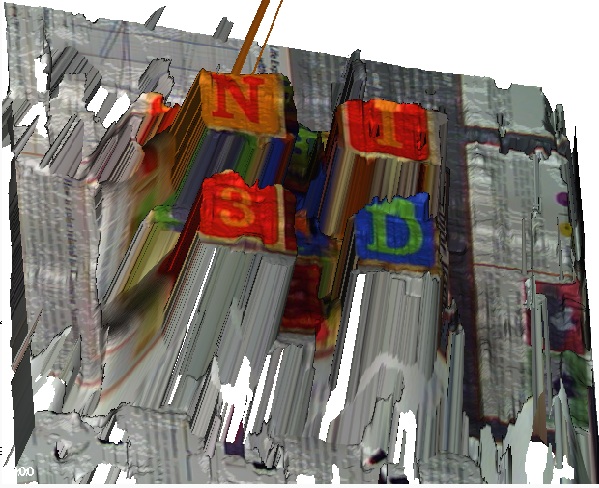

But before trying anything else, I took the example that ships with the stereo library and calculated the 3D point cloud (as in Figure 3). The results are shown in Figure 4.

Figure 4. The 3D reconstruction with texture for the supplied LV stereo example.

To be honest again, I was expecting better results. I am confident that this setup was made by experts with professional equipment rather than USB webcams. Considering this, I have to ask myself: is it even reasonable to expect good results from webcams? Based on the paper "Projected Texture Stereo" (see the attached .pdf file), it would also be interesting to try projecting a random pattern onto the scene. This should help the algorithm match features between the two images and consequently make the depth image better and more accurate.
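Projected-texture stereo adds artificial texture to surfaces that have none. A simple way to generate such a pattern for a projector is a random binary dot image. The sketch below is an assumption for illustration (the resolution, dot density and dot size are arbitrary) and is not the pattern used in the "Projected Texture Stereo" paper. It also shows, with a crude additive lighting model, that a featureless wall lit by the pattern gains the local contrast a window-based matcher needs.

```python
import numpy as np


def random_dot_pattern(height, width, density=0.2, dot_size=2, seed=None):
    """Return a uint8 image (0 or 255) of random square dots for projection.

    density is the fraction of dot cells that are lit; dot_size is the side of
    each square dot in projector pixels.
    """
    rng = np.random.default_rng(seed)
    cells_h = -(-height // dot_size)  # ceiling division
    cells_w = -(-width // dot_size)
    cells = rng.random((cells_h, cells_w)) < density
    # Enlarge each cell to a dot_size x dot_size block, then crop to the target size.
    pattern = cells.repeat(dot_size, axis=0).repeat(dot_size, axis=1)[:height, :width]
    return pattern.astype(np.uint8) * 255


pattern = random_dot_pattern(768, 1024, density=0.2, dot_size=2, seed=1)
wall = np.full(pattern.shape, 200.0)   # uniform white wall
lit_wall = 0.7 * wall + 0.3 * pattern  # crude model of projected light
print("lit fraction:", pattern.mean() / 255)
print("wall std:", wall.std(), "lit wall std:", round(float(lit_wall.std()), 1))
```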

I really hope someone tries this before I do (I do not know when I will find the time) and shares the results.

If anyone has anything to add or comment on, please do so. Maybe we can all learn something more about stereo vision concepts and measurements.

Be creative.

https://decibel.ni.com/content/blogs/kl3m3n
