Is there an API constant for machine epsilon?

Solved! 01-02-2017 09:47 AM

I need to do some floating-point comparisons, and I'm used to using LabVIEW's machine epsilon constant for this. Is there an equivalent constant in TestStand?

01-03-2017 10:35 AM (last edited on 11-04-2024 03:59 PM by Content Cleaner)

Taki,

I assume you're trying to compare numbers you know to be mathematically equivalent without getting incorrect results due to the quirks of the bitwise representation of floating-point numbers. If so, there are a couple of things I'd suggest looking at:

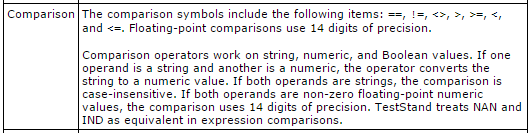

This is the help documentation for Expression Operators in TestStand: http://zone.ni.com/reference/en-XX/help/370052R-01/tsfundamentals/infotopics/operators_expression/ If you look in the "Comparison" section, you'll see that comparisons involving floating-point values only compare to 14 digits of precision by default. That's a low enough precision that you're unlikely to run into artifacts related to the bitwise representation.

That help document also links to the Special Constant Values page (http://zone.ni.com/reference/en-XX/help/370052R-01/tsfundamentals/infotopics/special_constant_values/). At the top of that page is a discussion of the Localized Decimal Point option. I suspect this is the epsilon constant you're looking for.

If that doesn't leave you feeling safe, you always have the option to pass your values into your VI and handle the comparisons there, although this shouldn't be required. I know I've used "safety net" programming options in the past for no reason other than peace of mind.

01-03-2017 10:40 AM

Links don't work for me.

Basically, I need the rounding error for a given machine representation. The Wikipedia page says that for a double it's 2*2^(-53), i.e. 2^(-52).
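
For reference, the 2*2^(-53) figure is the gap between 1.0 and the next larger double, which works out to 2^(-52), about 2.22*10^(-16). A quick Python check (Python is used here only for illustration; TestStand is not involved, and `math.nextafter` needs Python 3.9+):

```python
import math
import sys

# Machine epsilon for an IEEE 754 double: the gap between 1.0 and the
# next representable double. 2 * 2**-53 simplifies to 2**-52.
eps = 2 * 2.0 ** -53

print(eps)                                     # 2.220446049250313e-16
print(eps == 2.0 ** -52)                       # True
print(eps == sys.float_info.epsilon)           # True
print(math.nextafter(1.0, 2.0) - 1.0 == eps)   # True
```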

01-04-2017 09:05 AM

Strange. I checked the links and they don't work for me either. However, if I copy and paste the text into a browser, the page comes up just fine.

The Wikipedia page likely assumes the full floating-point precision is used. If you're using a comparison in TestStand, the help documentation states that it only uses 14 digits of precision.

If we take the special constant value into consideration, we'll see:

It appears a design decision was made to treat any difference smaller than this value as likely noise in a test, so it is ignored when you're working with floating-point values in TestStand. This is purely a guess on my part as to why TestStand looks at 14 digits for comparisons.

Either way, if you're using the direct comparison options in TestStand itself, your effective rounding error will be much larger than the typical floating-point rounding error, because only 14 digits are considered: 5*10^(-14) versus ~2.2*10^(-16). On the other hand, you're less likely to run into issues comparing two values that are mathematically equivalent, because this method truncates the rounding error inherent to floating point.
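
To see what a 14-digit rule does in practice, here is a rough Python approximation. The helper `equal_to_14_digits` is hypothetical: it rounds both values to 14 significant digits as text and compares them. That is an assumption about the behavior described in the help, not TestStand's actual code.

```python
import sys


def equal_to_14_digits(a: float, b: float) -> bool:
    """Hypothetical approximation of a 14-significant-digit comparison.

    ".13e" formats one digit before the point and 13 after, i.e. 14
    significant digits. This is NOT TestStand's implementation.
    """
    return f"{a:.13e}" == f"{b:.13e}"


x = 0.1 + 0.2                       # 0.30000000000000004 as a double
print(x == 0.3)                     # False: differs in the last bit
print(equal_to_14_digits(x, 0.3))   # True: the rounding error is truncated

# Half a unit in the 14th significant digit (5e-14, relative) versus
# double-precision machine epsilon (~2.2e-16).
ratio = 5e-14 / sys.float_info.epsilon
print(round(ratio))                 # 225
```

Rounding to text has a known edge case: two values only a hair apart can still round to different 14-digit strings if they straddle a rounding boundary, so a relative-tolerance check is usually the more robust way to implement this kind of comparison.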

Does that help?

01-04-2017 10:27 AM

It helps a little. I guess it means the effective TestStand epsilon is much greater than machine epsilon. This is a bit problematic for the type of comparisons I'm doing.

I'm using TestStand to verify embedded software: we command a floating-point setpoint and expect the UUT to return the actual setpoint to within the floating-point rounding error. (For example, TestStand sends a setpoint of 0.3 and reads back the UUT setpoint, which is actually 0.2999999...; the numeric limit step I would normally use in this case is a GELE with limits of 0.3 +/- machine epsilon.)
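
The arithmetic behind a GELE limit of 0.3 +/- machine epsilon, evaluated with full double-precision comparisons, can be sketched in Python like this. The `gele` helper is hypothetical and only illustrates the math, not how TestStand evaluates limits; `math.ulp` needs Python 3.9+.

```python
import math
import sys

EPS = sys.float_info.epsilon  # machine epsilon for a double, 2**-52


def gele(value: float, low: float, high: float) -> bool:
    """Pass when low <= value <= high (GE on the low limit, LE on the high)."""
    return low <= value <= high


setpoint = 0.3
low, high = setpoint - EPS, setpoint + EPS

print(gele(0.3, low, high))        # True
print(gele(0.1 + 0.2, low, high))  # True: the next double above 0.3
print(gele(0.3000001, low, high))  # False
# Machine epsilon is defined relative to 1.0; between 0.25 and 0.5 doubles
# are spaced 2**-54 apart, so this band spans 4 doubles on each side of 0.3.
print((high - setpoint) / math.ulp(setpoint))  # 4.0
```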

There is some danger of having false passes if TestStand can only compare to 14 digits of precision for floats.

Would it be better for me to typecast to U64 and do a bitwise comparison? Either that or I could do the custom comparison in LabVIEW.
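
For what a U64 typecast looks like, here is a Python sketch that reinterprets the 8 bytes of a double as an unsigned 64-bit integer, analogous to typecasting a DBL to U64 in LabVIEW. The `as_u64` helper is hypothetical; `struct` and `math.nextafter` (Python 3.9+) are standard library.

```python
import math
import struct


def as_u64(x: float) -> int:
    """Reinterpret the 8 bytes of an IEEE 754 double as an unsigned 64-bit int."""
    return struct.unpack("<Q", struct.pack("<d", x))[0]


a = 0.3
b = math.nextafter(0.3, 1.0)   # the next representable double above 0.3

print(hex(as_u64(a)))          # 0x3fd3333333333333
print(hex(as_u64(b)))          # 0x3fd3333333333334
print(as_u64(b) - as_u64(a))   # 1: neighbouring doubles differ by one
print(as_u64(a) == as_u64(b))  # False: exact bitwise equality fails
```

An exact bitwise equality check is as strict as it gets: 0.1 + 0.2 has the same bit pattern as `b` above, so it differs from 0.3 only in the last bit yet still compares unequal.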

01-05-2017 10:05 AM

I wanted to hit on a couple of points.

GELE 0.3 +/- machine epsilon demands much greater precision than the 14 digits TestStand uses. If you're expecting the UUT to reply within the rounding error, you can remove the GELE 0.3 component, because the 14 digits of precision amount to only roughly 200x the rounding error. The comparison would evaluate true if the difference was purely within the rounding error and false if the difference were more than that 200x. This would be a bit more accurate than 0.3, not less. If there's potential for noise in your system and that's why you want to move up to 0.3, then by all means let's consider the other options.

Typecasting to U64 likely means evaluating more than a single comparison. Can you picture two numbers that are close to each other but use different exponents? Let's use decimal as an example: 99 and 100 differ by 1, but we'd write them as 9.9*10^1 and 1*10^2. The same thing happens in binary, where two values within your threshold of 0.3 can still differ in their exponent. Typecasting to U64 means you'd need to write code that compares the exponent before the mantissa to see how close the two numbers really are. Otherwise, values that are within your threshold would still fail the bitwise comparison, because any pair of values with different exponent fields fails it.
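
The exponent issue can be seen directly in binary: the largest double below 0.5 and 0.5 itself are neighbours, but they have different exponent fields, so a field-by-field or exact bitwise comparison treats them as unrelated. For two finite values of the same sign, though, subtracting the U64 patterns counts the representable doubles between them (a "ULP distance"), because the IEEE 754 layout makes those integers increase monotonically with the value. A minimal Python sketch; the helper names are hypothetical:

```python
import math
import struct


def fields(x: float) -> tuple[int, int, int]:
    """Split a double into (sign, biased exponent, 52-bit mantissa) fields."""
    bits = struct.unpack("<Q", struct.pack("<d", x))[0]
    return bits >> 63, (bits >> 52) & 0x7FF, bits & ((1 << 52) - 1)


def ulp_distance(a: float, b: float) -> int:
    """Count representable doubles between a and b.

    Assumes a and b are finite and have the same sign.
    """
    ua = struct.unpack("<Q", struct.pack("<d", a))[0]
    ub = struct.unpack("<Q", struct.pack("<d", b))[0]
    return abs(ua - ub)


below = math.nextafter(0.5, 0.0)   # largest double below 0.5
print(fields(below))               # (0, 1021, 4503599627370495)
print(fields(0.5))                 # (0, 1022, 0)
print(ulp_distance(below, 0.5))    # 1: adjacent despite different exponents
```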

If the 14 digits are a concern for you, I'd lean toward doing the comparison in LabVIEW. It should be a fairly trivial VI to write, and it sounds like you may already have one.
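
The custom comparison such a VI would perform can be sketched in Python for illustration. The `approx_equal` helper is hypothetical, it is built on the standard library's `math.isclose`, and the relative tolerance of one machine epsilon is an assumption chosen to match the thread's GELE limit:

```python
import math
import sys


def approx_equal(measured: float, expected: float,
                 rel_tol: float = sys.float_info.epsilon) -> bool:
    """Hypothetical stand-in for a custom comparison VI: pass only if the
    values agree to within rel_tol, relative to the larger magnitude."""
    return math.isclose(measured, expected, rel_tol=rel_tol, abs_tol=0.0)


print(approx_equal(0.1 + 0.2, 0.3))            # True: one double apart
print(approx_equal(0.3 + 1e-15, 0.3))          # False: many doubles apart
# The same pair looks equal when rounded to 14 significant digits, which is
# the kind of false pass a 14-digit comparison could allow.
print(f"{0.3 + 1e-15:.13e}" == f"{0.3:.13e}")  # True
```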

01-11-2017 03:21 PM

I agree that it's a trivial VI to write. I was just hoping to find something native in TestStand instead of having to carry around a custom function and the LabVIEW Runtime.

Noise doesn't really play a role in this particular system under test. We send a serial string to the UUT and expect a serial string back. In theory, the only error should occur when the UUT converts the IEEE float in the serial string into a real float and then repacks it into an IEEE float representation to transmit on the serial bus. (That is, any inaccuracy should be true rounding inaccuracy on the microcontroller, which is smaller than 14 digits of precision.)
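
The decode/re-encode step can be sketched with Python's `struct` module. The thread doesn't say whether the serial protocol carries 32-bit or 64-bit IEEE floats, so both are shown; the `round_trip` helper is hypothetical and stands in for the UUT.

```python
import struct


def round_trip(value: float, fmt: str) -> float:
    """Encode value as little-endian IEEE 754 bytes and decode it again.

    fmt is a struct code: "d" for 64-bit doubles, "f" for 32-bit floats.
    This stands in for a hypothetical UUT that unpacks and repacks the setpoint.
    """
    return struct.unpack("<" + fmt, struct.pack("<" + fmt, value))[0]


sp = 0.3
print(round_trip(sp, "d") == sp)      # True: a 64-bit round trip is exact
print(round_trip(sp, "f"))            # 0.30000001192092896
print(abs(round_trip(sp, "f") - sp))  # about 1.2e-08
```

If the microcontroller only works in 32-bit floats, the round trip alone moves 0.3 by about 1.2*10^(-8), far outside both a 14-digit and a machine-epsilon tolerance, so the expected value would presumably need to be the 32-bit-rounded setpoint instead.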

Good discussion, though, and I did learn something new about TestStand, so I'll mark this as solved.